It seems there’s a push on to establish Canada as a centre for artificial intelligence research and, if the federal and provincial governments have their way, for commercialization of said research. As always, there seems to be a bit of competition between Toronto (Ontario) and Montréal (Québec) over which will be the dominant hub for the Canadian effort, if one is to take Matthew Braga’s word for the situation (his CBC article is excerpted below).

In any event, Toronto initially seemed to have a mild advantage over Montréal, first with the 2017 Canadian federal government budget announcement that the Canadian Institute for Advanced Research (CIFAR), based in Toronto, would launch a Pan-Canadian Artificial Intelligence Strategy, and then with an announcement from the University of Toronto shortly after (from my March 31, 2017 posting),

On the heels of the March 22, 2017 federal budget announcement of $125M for a Pan-Canadian Artificial Intelligence Strategy, the University of Toronto (U of T) has announced the inception of the Vector Institute for Artificial Intelligence in a March 28, 2017 news release by Jennifer Robinson (Note: Links have been removed),

A team of globally renowned researchers at the University of Toronto is driving the planning of a new institute staking Toronto’s and Canada’s claim as the global leader in AI.

Geoffrey Hinton, a University Professor Emeritus in computer science at U of T and vice-president engineering fellow at Google, will serve as the chief scientific adviser of the newly created Vector Institute based in downtown Toronto.

“The University of Toronto has long been considered a global leader in artificial intelligence research,” said U of T President Meric Gertler. “It’s wonderful to see that expertise act as an anchor to bring together researchers, government and private sector actors through the Vector Institute, enabling them to aim even higher in leading advancements in this fast-growing, critical field.”

As part of the Government of Canada’s Pan-Canadian Artificial Intelligence Strategy, Vector will share $125 million in federal funding with fellow institutes in Montreal and Edmonton. All three will conduct research and secure talent to cement Canada’s position as a world leader in AI.

However, Montréal and the province of Québec are no slouches when it comes to supporting technology. From a June 14, 2017 article by Matthew Braga for CBC (Canadian Broadcasting Corporation) news online (Note: Links have been removed),

One of the most promising new hubs for artificial intelligence research in Canada is going international, thanks to a $135 million investment with contributions from some of the biggest names in tech.

The company, Montreal-based Element AI, was founded last October [2016] to help companies that might not have much experience in artificial intelligence start using the technology to change the way they do business.

It’s equal parts general research lab and startup incubator, with employees working to develop new and improved techniques in artificial intelligence that might not be fully realized for years, while also commercializing products and services that can be sold to clients today.

It was co-founded by Yoshua Bengio — one of the pioneers of a type of AI research called machine learning — along with entrepreneurs Jean-François Gagné and Nicolas Chapados, and the Canadian venture capital fund Real Ventures.

In an interview, Bengio and Gagné said the money from the company’s funding round will be used to hire 250 new employees by next January. A hundred will be based in Montreal, but an additional 100 employees will be hired for a new office in Toronto, and the remaining 50 for an Element AI office in Asia — its first international outpost.

They will join more than 100 employees who work for Element AI today, having left jobs at Amazon, Uber and Google, among others, to work at the company’s headquarters in Montreal.

The expansion is a big vote of confidence in Element AI’s strategy from some of the world’s biggest technology companies. Microsoft, Intel and Nvidia all contributed to the round, and each is a key player in AI research and development.

The company has some not unexpected plans and partners (from the Braga article; Note: A link has been removed),

The Series A round was led by Data Collective, a Silicon Valley-based venture capital firm, and included participation by Fidelity Investments Canada, National Bank of Canada, and Real Ventures.

What will it help the company do? Scale, its founders say.

“We’re looking at domain experts, artificial intelligence experts,” Gagné said. “We already have quite a few, but we’re looking at people that are at the top of their game in their domains.

“And at this point, it’s no longer just pure artificial intelligence, but people who understand, extremely well, robotics, industrial manufacturing, cybersecurity, and financial services in general, which are all the areas we’re going after.”

…

Gagné says that Element AI has already delivered 10 projects to clients in those areas, and have many more in development. In one case, Element AI has been helping a Japanese semiconductor company better analyze the data collected by the assembly robots on its factory floor, in a bid to reduce manufacturing errors and improve the quality of the company’s products.

…

There’s more to investment in Québec’s AI sector than Element AI (from the Braga article; Note: Links have been removed),

Element AI isn’t the only organization in Canada that investors are interested in.

In September, the Canadian government announced $213 million in funding for a handful of Montreal universities, while both Google and Microsoft announced expansions of their Montreal AI research groups in recent months alongside investments in local initiatives. The province of Quebec has pledged $100 million for AI initiatives by 2022.

…

Braga goes on to note some other initiatives but at that point the article’s focus is exclusively Toronto.

For more insight into the AI situation in Québec, there’s Dan Delmar’s May 23, 2017 article for the Montreal Express (Note: Links have been removed),

Advocating for massive government spending with little restraint admittedly deviates from the tenor of these columns, but the AI business is unlike any other before it. [emphasis mine] Having leaders acting as fervent advocates for the industry is crucial; resisting the coming technological tide is, as the Borg would say, futile.

The roughly 250 AI researchers who call Montreal home are not simply part of a niche industry. Quebec’s francophone character and Montreal’s multilingual citizenry are certainly factors favouring the development of language technology, but there’s ample opportunity for more ambitious endeavours with broader applications.

AI isn’t simply a technological breakthrough; it is the technological revolution. [emphasis mine] In the coming decades, modern computing will transform all industries, eliminating human inefficiencies and maximizing opportunities for innovation and growth — regardless of the ethical dilemmas that will inevitably arise.

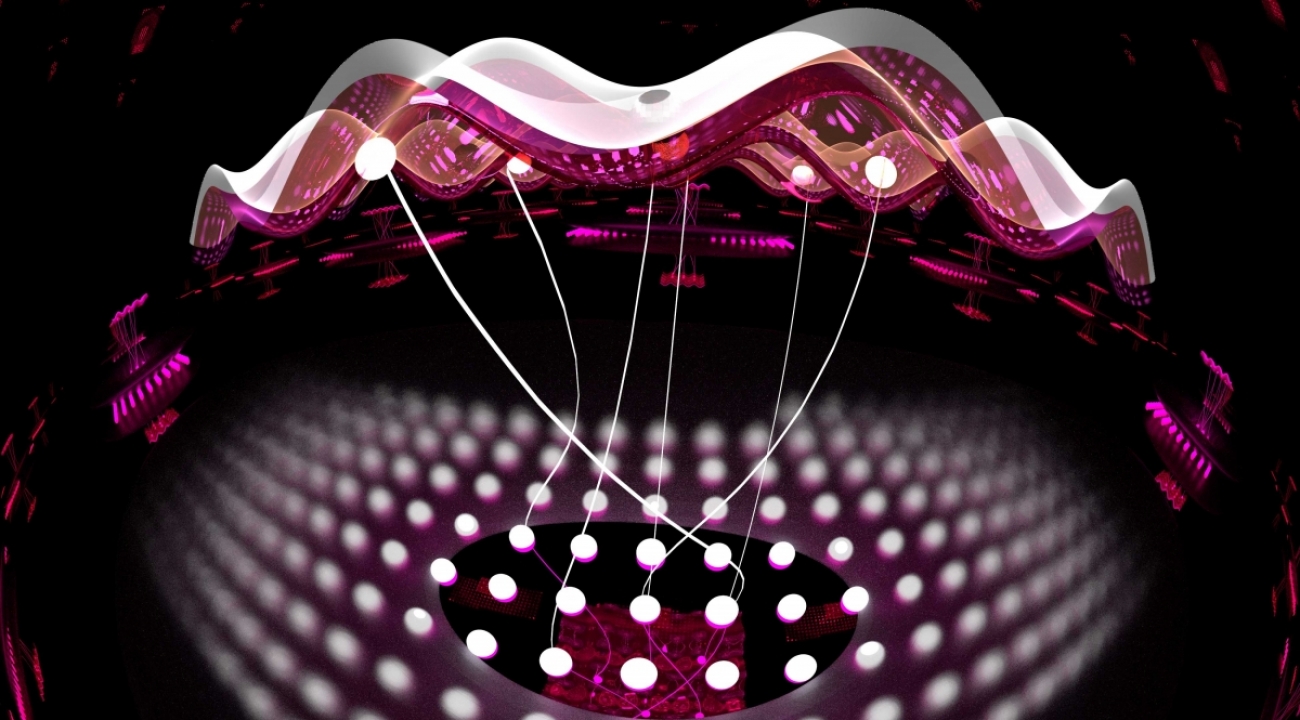

“By 2020, we’ll have computers that are powerful enough to simulate the human brain,” said (in 2009) futurist Ray Kurzweil, author of The Singularity Is Near, a seminal 2006 book that has inspired a generation of AI technologists. Kurzweil’s projections are not science fiction but perhaps conservative, as some forms of AI already effectively replace many human cognitive functions. “By 2045, we’ll have expanded the intelligence of our human-machine civilization a billion-fold. That will be the singularity.”

The singularity concept, borrowed from physicists describing event horizons bordering matter-swallowing black holes in the cosmos, is the point of no return where human and machine intelligence will have completed their convergence. That’s when the machines “take over,” so to speak, and accelerate the development of civilization beyond traditional human understanding and capability.

…

The claims I’ve highlighted in Delmar’s article have been made before for other technologies: “xxx is like no other business before” and “it is a technological revolution.” Also, if you scroll down to the bottom of the article, you’ll find Delmar described as a ‘public relations consultant’ which, according to his LinkedIn profile, means he’s a managing partner in a PR firm known as Provocateur.

Bertrand Marotte’s May 20, 2017 article for the Montreal Gazette offers less hyperbole along with additional detail about the Montréal scene (Note: Links have been removed),

It might seem like an ambitious goal, but key players in Montreal’s rapidly growing artificial-intelligence sector are intent on transforming the city into a Silicon Valley of AI.

Certainly, the flurry of activity these days indicates that AI in the city is on a roll. Impressive amounts of cash have been flowing into academia, public-private partnerships, research labs and startups active in AI in the Montreal area.

…

…, researchers at Microsoft Corp. have successfully developed a computing system able to decipher conversational speech as accurately as humans do. The technology makes the same, or fewer, errors than professional transcribers and could be a huge boon to major users of transcription services like law firms and the courts.

Setting the goal of attaining the critical mass of a Silicon Valley is “a nice point of reference,” said tech entrepreneur Jean-François Gagné, co-founder and chief executive officer of Element AI, an artificial intelligence startup factory launched last year.

…

The idea is to create a “fluid, dynamic ecosystem” in Montreal where AI research, startup, investment and commercialization activities all mesh productively together, said Gagné, who founded Element with researcher Nicolas Chapados and Université de Montréal deep learning pioneer Yoshua Bengio.

“Artificial intelligence is seen now as a strategic asset to governments and to corporations. The fight for resources is global,” he said.

The rise of Montreal — and rival Toronto — as AI hubs owes a lot to provincial and federal government funding.

Ottawa promised $213 million last September to fund AI and big data research at four Montreal post-secondary institutions. Quebec has earmarked $100 million over the next five years for the development of an AI “super-cluster” in the Montreal region.

The provincial government also created a 12-member blue-chip committee to develop a strategic plan to make Quebec an AI hub, co-chaired by Claridge Investments Ltd. CEO Pierre Boivin and Université de Montréal rector Guy Breton.

But private-sector money has also been flowing in, particularly from some of the established tech giants competing in an intense AI race for innovative breakthroughs and the best brains in the business.

…

Montreal’s rich talent pool is a major reason Waterloo, Ont.-based language-recognition startup Maluuba decided to open a research lab in the city, said the company’s vice-president of product development, Mohamed Musbah.

“It’s been incredible so far. The work being done in this space is putting Montreal on a pedestal around the world,” he said.

Microsoft struck a deal this year to acquire Maluuba, which is working to crack one of the holy grails of deep learning: teaching machines to read like the human brain does. Among the company’s software developments are voice assistants for smartphones.

Maluuba has also partnered with an undisclosed auto manufacturer to develop speech recognition applications for vehicles. Voice recognition applied to cars can include such things as asking for a weather report or making remote requests for the vehicle to unlock itself.

Marotte’s Twitter profile describes him as a freelance writer, editor, and translator.