George Gershwin and his brother Ira wrote jazz standards such as “I Got Rhythm” (1930) and, before that, “Fascinating Rhythm” (1924); both seem à propos in relation to this October 9, 2023 news item on phys.org,

If you could sink through the Earth’s crust, you might hear, with a carefully tuned ear, a cacophony of booms and crackles along the way. The fissures, pores, and defects running through rocks are like strings that resonate when pressed and stressed. And as a team of MIT geologists has found, the rhythm and pace of these sounds can tell you something about the depth and strength of the rocks around you.

…

An October 9, 2023 Massachusetts Institute of Technology news release (also on EurekAlert) by Jennifer Chu, which originated the news item, (word play alert) delves down into the material, Note: A link has been removed,

“If you were listening to the rocks, they would be singing at higher and higher pitches, the deeper you go,” says MIT geologist Matěj Peč.

Peč and his colleagues are listening to rocks to see whether any acoustic patterns, or “fingerprints,” emerge when the rocks are subjected to various pressures. In lab studies, they have now shown that samples of marble, when subjected to low pressures, emit low-pitched “booms,” while at higher pressures, the rocks generate an “avalanche” of higher-pitched crackles.

Peč says these acoustic patterns in rocks can help scientists estimate the types of cracks, fissures, and other defects that the Earth’s crust experiences with depth, which they can then use to identify unstable regions below the surface, where there is potential for earthquakes or eruptions. The team’s results, published in the Proceedings of the National Academy of Sciences, could also help inform surveyors’ efforts to drill for renewable, geothermal energy.

“If we want to tap these very hot geothermal sources, we will have to learn how to drill into rocks that are in this mixed-mode condition, where they are not purely brittle, but also flow a bit,” says Peč, who is an assistant professor in MIT’s Department of Earth, Atmospheric and Planetary Sciences (EAPS). “But overall, this is fundamental science that can help us understand where the lithosphere is strongest.”

Peč’s collaborators at MIT are lead author and research scientist Hoagy O. Ghaffari, technical associate Ulrich Mok, graduate student Hilary Chang, and professor emeritus of geophysics Brian Evans. Tushar Mittal, co-author and former EAPS postdoc, is now an assistant professor at Penn State University.

Fracture and flow

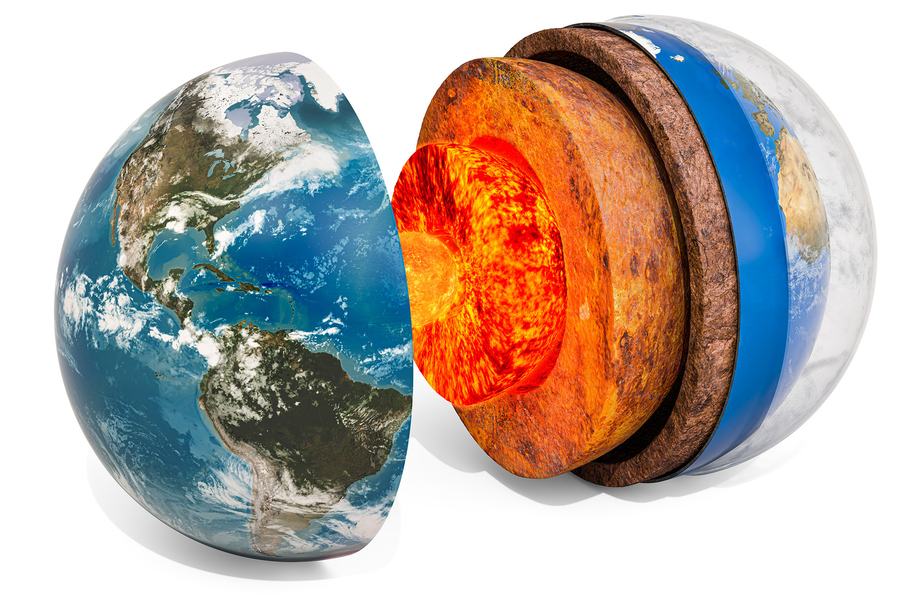

The Earth’s crust is often compared to the skin of an apple. At its thickest, the crust can be 70 kilometers deep, a tiny fraction of the globe’s 12,700-kilometer diameter. And yet, the rocks that make up the planet’s thin peel vary greatly in their strength and stability. Geologists infer that rocks near the surface are brittle and fracture easily, compared to rocks at greater depths, where immense pressures, and heat from the core, can make rocks flow.
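As a quick check on the apple-skin comparison, using only the two figures quoted above (and taking the Earth’s radius as half the 12,700-kilometer diameter):

$$
\frac{70\ \text{km}}{12\,700\ \text{km}} \approx 0.0055 \approx 0.55\%, \qquad \frac{70\ \text{km}}{6\,350\ \text{km}} \approx 0.011 \approx 1.1\%
$$

So even the thickest crust amounts to only about one percent of the Earth’s radius.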

The fact that rocks are brittle at the surface and more ductile at depth implies there must be an in-between — a phase in which rocks transition from one to the other, and may have properties of both, able to fracture like granite, and flow like honey. This “brittle-to-ductile transition” is not well understood, though geologists believe it may be where rocks are at their strongest within the crust.

“This transition state of partly flowing, partly fracturing, is really important, because that’s where we think the peak of the lithosphere’s strength is and where the largest earthquakes nucleate,” Peč says. “But we don’t have a good handle on this type of mixed-mode behavior.”

He and his colleagues are studying how the strength and stability of rocks, whether brittle, ductile, or somewhere in between, vary with a rock’s microscopic defects. The size, density, and distribution of defects such as microscopic cracks, fissures, and pores can shape how brittle or ductile a rock is.

But measuring the microscopic defects in rocks, under conditions that simulate the Earth’s various pressures and depths, is no trivial task. There is, for instance, no visual-imaging technique that allows scientists to see inside rocks to map their microscopic imperfections. So the team turned to ultrasound, and to the idea that any sound wave traveling through a rock should bounce, vibrate, and reflect off microscopic cracks and crevices in specific ways that should reveal something about the pattern of those defects.

These defects also generate their own sounds when they move under stress, so both actively sending sound through the rock and listening to it should give the researchers a great deal of information. They found that the idea should work with ultrasound waves at megahertz frequencies.

“This kind of ultrasound method is analogous to what seismologists do in nature, but at much higher frequencies,” Peč explains. “This helps us to understand the physics that occur at microscopic scales, during the deformation of these rocks.”

A rock in a hard place

In their experiments, the team tested cylinders of Carrara marble.

“It’s the same material as what Michelangelo’s David is made from,” Peč notes. “It’s a very well-characterized material, and we know exactly what it should be doing.”

The team placed each marble cylinder in a vice-like apparatus made from pistons of aluminum, zirconium, and steel, which together can generate extreme stresses. They placed the vice in a pressurized chamber, then subjected each cylinder to pressures similar to what rocks experience throughout the Earth’s crust.

As they slowly crushed each rock, the team sent pulses of ultrasound through the top of the sample, and recorded the acoustic pattern that exited through the bottom. When the sensors were not pulsing, they were listening to any naturally occurring acoustic emissions.
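For readers curious about what a pulse sent through the top of a sample and recorded at the bottom can tell you, here is a minimal Python sketch of one standard ultrasonic measurement, time of flight: the wave speed is the sample length divided by the time the pulse takes to cross it. This is an illustration under stated assumptions, not the team’s analysis; the helper name `wave_speed`, the 40 mm sample length, and the 7 microsecond travel time are all hypothetical values chosen only to show the arithmetic.

```python
def wave_speed(length_m: float, travel_time_s: float) -> float:
    """Return wave speed in m/s from a through-transmission measurement.

    Hypothetical helper: assumes the pulse travels straight from the
    top face of the cylinder to the bottom face.
    """
    if travel_time_s <= 0:
        raise ValueError("travel time must be positive")
    return length_m / travel_time_s


# Hypothetical numbers, not data from the study:
# a 40 mm sample crossed in 7 microseconds.
speed = wave_speed(0.040, 7e-6)
print(f"{speed:.0f} m/s")  # prints 5714 m/s
```

In rock physics generally, open cracks slow elastic waves down, so a change in this number as a sample is squeezed is one hint that its internal defects are changing.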

They found that at the lower end of the pressure range, where rocks are brittle, the marble indeed formed sudden fractures in response, and the sound waves resembled large, low-frequency booms. At the highest pressures, where rocks are more ductile, the acoustic waves resembled a higher-pitched crackling. The team believes this crackling was produced by microscopic defects called dislocations, which spread and flow like an avalanche.
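The contrast between low-pitched booms and higher-pitched crackles comes down to frequency. As a minimal illustration, the Python sketch below estimates the dominant frequency of a waveform by counting zero crossings, a crude but simple pitch estimator; it is not the method used in the paper. The function names, the 10 MHz sampling rate, and the two synthetic sine-wave “signals” (0.2 MHz and 2 MHz) are hypothetical stand-ins, not recordings from the study.

```python
import math


def zero_crossing_frequency(samples: list[float], sample_rate_hz: float) -> float:
    """Estimate the dominant frequency of a roughly periodic signal.

    A pure tone crosses zero twice per cycle, so
    frequency ~= crossings / (2 * duration).
    """
    crossings = 0
    for prev, curr in zip(samples, samples[1:]):
        # Count a crossing whenever the sign flips between adjacent samples.
        if (prev < 0 <= curr) or (curr < 0 <= prev):
            crossings += 1
    duration_s = len(samples) / sample_rate_hz
    return crossings / (2 * duration_s)


def synthetic_tone(freq_hz: float, sample_rate_hz: float, n: int) -> list[float]:
    """Hypothetical test signal: a pure sine wave, not real rock data."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate_hz) for i in range(n)]


RATE = 10e6  # hypothetical 10 MHz sampling rate
boom = synthetic_tone(0.2e6, RATE, 10_000)    # low-pitched stand-in
crackle = synthetic_tone(2e6, RATE, 10_000)   # higher-pitched stand-in

for name, signal in [("boom", boom), ("crackle", crackle)]:
    estimate_mhz = zero_crossing_frequency(signal, RATE) / 1e6
    print(name, round(estimate_mhz, 2), "MHz")
```

The estimates land close to the 0.2 MHz and 2 MHz tones that were generated. Real acoustic-emission records are short, noisy bursts rather than clean tones, so practical analyses typically rely on spectral methods such as the Fourier transform; zero crossings are used here only because they make the idea of “pitch” easy to see.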

“For the first time, we have recorded the ‘noises’ that rocks make when they are deformed across this brittle-to-ductile transition, and we link these noises to the individual microscopic defects that cause them,” Peč says. “We found that these defects massively change their size and propagation velocity as they cross this transition. It’s more complicated than people had thought.”

The team’s characterizations of rocks and their defects at various pressures can help scientists estimate how the Earth’s crust will behave at various depths, such as how rocks might fracture in an earthquake, or flow in an eruption.

“When rocks are partly fracturing and partly flowing, how does that feed back into the earthquake cycle? And how does that affect the movement of magma through a network of rocks?” Peč says. “Those are larger scale questions that can be tackled with research like this.”

This research was supported, in part, by the National Science Foundation.

Here’s a link to and a citation for the paper,

Microscopic defect dynamics during a brittle-to-ductile transition by Hoagy O. Ghaffari, Matěj Peč, Tushar Mittal, Ulrich Mok, Hilary Chang, and Brian Evans. Proceedings of the National Academy of Sciences 120 (42) e2305667120 DOI: https://doi.org/10.1073/pnas.2305667120 October 9, 2023

This paper is behind a paywall.