One Health

This event at the Woodrow Wilson International Center for Scholars (Wilson Center) is the first that I’ve seen of its kind (from a November 2, 2018 Wilson Center Science and Technology Innovation Program [STIP] announcement received via email; Note: Logistics such as date and location follow directly after),

One Health in the 21st Century Workshop

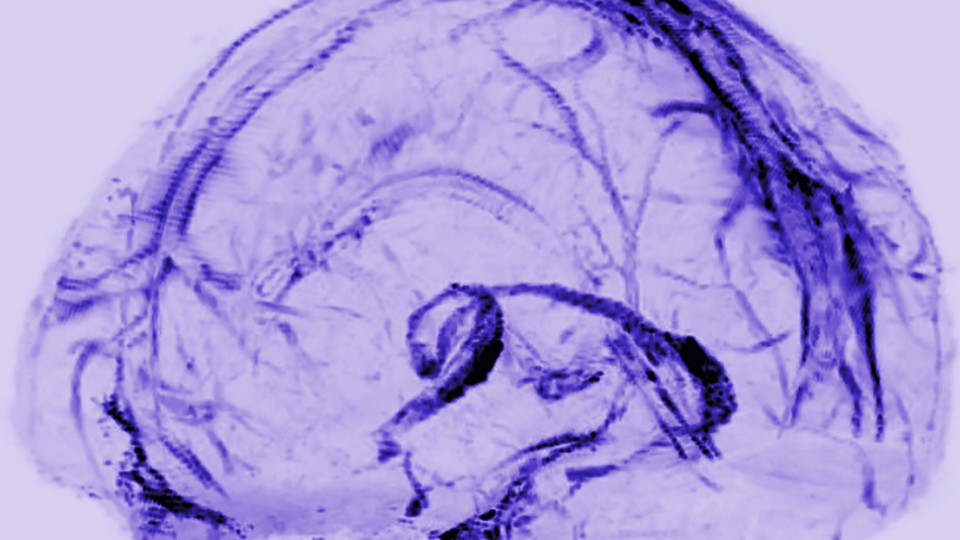

The One Health in the 21st Century workshop will serve as a snapshot of government, intergovernmental organization and non-governmental organization innovation as it pertains to the expanding paradigm of One Health. One Health being the umbrella term for addressing animal, human, and environmental health issues as inextricably linked [emphasis mine], each informing the other, rather than as distinct disciplines.

This snapshot, facilitated by a partnership between the Wilson Center, World Bank, and EcoHealth Alliance, aims to bridge professional silos represented at the workshop to address the current gaps and future solutions in the operationalization and institutionalization of One Health across sectors. With an initial emphasis on environmental resource management and assessment as well as federal cooperation, the One Health in the 21st Century Workshop is a launching point for upcoming events, convenings, and products, sparked by the partnership between the hosting organizations. RSVP today.

Agenda:

1:00pm — 1:15pm: Introductory Remarks

1:15pm — 2:30pm: Keynote and Panel: Putting One Health into Practice

Larry Madoff — Director of Emerging Disease Surveillance; Editor, ProMED-mail

Lance Brooks — Chief, Biological Threat Reduction Department at DoD

Further panelists TBA

2:30pm – 2:40pm: Break

2:40pm — 3:50pm: Keynote and Panel: Adding Seats at the One Health Table: Promoting the Environmental Backbone at Home and Abroad

Assaf Anyamba — NASA Research Scientist

Jonathan Sleeman — Center Director for the U.S. Geological Survey’s National Wildlife Health Center

Jennifer Orme-Zavaleta — Principal Deputy Assistant Administrator for Science for the Office of Research and Development and the EPA Science Advisor

Further panelists TBA

3:50pm – 4:50pm: Breakout Discussions and Report Back Panel

4:50pm — 5:00pm: Closing Remarks

5:00pm — 6:00pm: Networking Happy Hour


You can register/RSVP here.

Logistics are:

November 26

1:00pm – 5:00pm

Reception to follow

5:00pm – 6:00pm

Flom Auditorium, 6th floor

Wilson Center

Ronald Reagan Building and International Trade Center

One Woodrow Wilson Plaza

1300 Pennsylvania Ave., NW

Washington, D.C. 20004

Phone: 202.691.4000

Internships

The Woodrow Wilson Center is gearing up for 2019, although the deadline for a Spring 2019 internship is November 15, 2018. (You can find my previous announcement for internships in a July 23, 2018 posting.) From a November 5, 2018 Wilson Center STIP announcement (received via email),

Internships in DC for Science and Technology Policy

Deadline for Fall Applicants November 15

The Science and Technology Innovation Program (STIP) at the Wilson Center welcomes applicants for spring 2019 internships. STIP focuses on understanding bottom-up, public innovation; top-down, policy innovation; and, on supporting responsible and equitable practices at the point where new technology and existing political, social, and cultural processes converge. We recommend exploring our blog and website first to determine if your research interests align with current STIP programming.

We offer two types of internships: research (open to law and graduate students only) and a social media and blogging internship (open to undergraduates, recent graduates, and graduate students). Research internships might deal with one of the following key objectives:

- Artificial Intelligence

- Citizen Science

- Cybersecurity

- One Health

- Public Communication of Science

- Serious Games Initiative

- Science and Technology Policy

Additionally, we are offering specific internships for focused projects, such as for our Earth Challenge 2020 initiative.

Special Project Intern: Earth Challenge 2020

Citizen science involves members of the public in scientific research to meet real world goals. In celebration of the 50th anniversary of Earth Day, Earth Day Network (EDN), The U.S. Department of State, and the Wilson Center are launching Earth Challenge 2020 (EC2020) as the world’s largest ever coordinated citizen science campaign. EC2020 will collaborate with existing citizen science projects as well as build capacity for new ones as part of a larger effort to grow citizen science worldwide. We will become a nexus for collecting billions of observations in areas including air quality, water quality, biodiversity, and human health to strengthen the links between science, the environment, and public citizens.

We are seeking a research intern with a specialty in topics including citizen science, crowdsourcing, making, hacking, sensor development, and other relevant topics.

This intern will scope and implement a semester-long project related to Earth Challenge 2020 deliverables. In addition to this the intern may:

- Conduct ad hoc research on a range of topics in science and technology innovation to learn while supporting department priorities.

- Write or edit articles and blog posts on topics of interest or local events.

- Support meetings, conferences, and other events, gaining valuable event management experience.

- Provide general logistical support.

This is a paid position available for 15-20 hours a week. Applicants from all backgrounds will be considered, though experience conducting cross- and trans-disciplinary research is an asset. Ability to work independently is critical.

Interested applicants should submit a resume, cover letter describing their interest in Earth Challenge 2020 and outlining relevant skills, and two writing samples. One writing sample should be formal (e.g., a class paper); the other, informal (e.g., a blog post or similar).

For all internships, non-degree seeking students are ineligible. All internships must be served in Washington, D.C. and cannot be done remotely.

Full application process outlined on our internship website.

I don’t see a specific application deadline for the special project (Earth Challenge 2020) internship. In any event, good luck with all your applications.