I believe that China has more than two neuromorphic chips. The two being featured here are the ones for which I was easily able to find information.

The Darwin chip

The first information (that I stumbled across) about China and a neuromorphic chip (Darwin) was in a December 22, 2015 Science China Press news release on EurekAlert,

Artificial Neural Network (ANN) is a type of information processing system based on mimicking the principles of biological brains, and has been broadly applied in application domains such as pattern recognition, automatic control, signal processing, decision support system and artificial intelligence. Spiking Neural Network (SNN) is a type of biologically-inspired ANN that perform information processing based on discrete-time spikes. It is more biologically realistic than classic ANNs, and can potentially achieve much better performance-power ratio. Recently, researchers from Zhejiang University and Hangzhou Dianzi University in Hangzhou, China successfully developed the Darwin Neural Processing Unit (NPU), a neuromorphic hardware co-processor based on Spiking Neural Networks, fabricated by standard CMOS technology.

With the rapid development of the Internet-of-Things and intelligent hardware systems, a variety of intelligent devices are pervasive in today’s society, providing many services and convenience to people’s lives, but they also raise challenges of running complex intelligent algorithms on small devices. Sponsored by the College of Computer Science of Zhejiang University, the research group led by Dr. De Ma from Hangzhou Dianzi University and Dr. Xiaolei Zhu from Zhejiang University has developed a co-processor named as Darwin. The Darwin NPU aims to provide hardware acceleration of intelligent algorithms, with target application domain of resource-constrained, low-power small embedded devices. It has been fabricated by 180nm standard CMOS process, supporting a maximum of 2048 neurons, more than 4 million synapses and 15 different possible synaptic delays. It is highly configurable, supporting reconfiguration of SNN topology and many parameters of neurons and synapses. Figure 1 [not included here] shows photos of the die and the prototype development board, which supports input/output in the form of neural spike trains via USB port.

The successful development of Darwin demonstrates the feasibility of real-time execution of Spiking Neural Networks in resource-constrained embedded systems. It supports flexible configuration of a multitude of parameters of the neural network, hence it can be used to implement different functionalities as configured by the user. Its potential applications include intelligent hardware systems, robotics, brain-computer interfaces, and others. Since it uses spikes for information processing and transmission, similar to biological neural networks, it may be suitable for analysis and processing of biological spiking neural signals, and building brain-computer interface systems by interfacing with animal or human brains. As a prototype application in Brain-Computer Interfaces, Figure 2 [not included here] describes an application example of recognizing the user’s motor imagery intention via real-time decoding of EEG signals, i.e., whether he is thinking of left or right, and using it to control the movement direction of a basketball in the virtual environment. Different from conventional EEG signal analysis algorithms, the input and output to Darwin are both neural spikes: the input is spike trains that encode EEG signals; after processing by the neural network, the output neuron with the highest firing rate is chosen as the classification result.
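For anyone who wants to see that last decoding step in concrete terms, here is a minimal Python sketch of "the output neuron with the highest firing rate is chosen as the classification result." It is my own illustration, not code from the Darwin team: the function name, the "left"/"right" labels, and the spike trains are all invented for the example, and each spike train is assumed to be a list of 0s and 1s recorded over the same time window.

```python
from typing import Dict, List


def classify_by_firing_rate(spike_trains: Dict[str, List[int]]) -> str:
    """Return the label of the output neuron that fired most often.

    Each value is a hypothetical spike train: one 0 or 1 per time step.
    All trains are assumed to cover the same window, so comparing spike
    counts is equivalent to comparing firing rates.
    """
    if not spike_trains:
        raise ValueError("need at least one output neuron")
    counts = {label: sum(train) for label, train in spike_trains.items()}
    # On a tie, max() keeps the first label it encountered.
    return max(counts, key=counts.get)


# Made-up output spikes for a two-class "left or right" decision.
example = {
    "left": [0, 1, 1, 0, 1, 1, 0, 1],   # 5 spikes
    "right": [0, 0, 1, 0, 0, 1, 0, 0],  # 2 spikes
}
print(classify_by_firing_rate(example))  # left
```

How the EEG signals get encoded into input spike trains, and what the network between input and output looks like, are not described in the news release, so the sketch covers only the final readout.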

The most recent development for this chip was announced in a September 2, 2019 Zhejiang University press release (Note: Links have been removed),

The second generation of the Darwin Neural Processing Unit (Darwin NPU 2) as well as its corresponding toolchain and micro-operating system was released in Hangzhou recently. This research was led by Zhejiang University, with Hangzhou Dianzi University and Huawei Central Research Institute participating in the development and algorithms of the chip. The Darwin NPU 2 can be primarily applied to smart Internet of Things (IoT). It can support up to 150,000 neurons and has achieved the largest-scale neurons on a nationwide basis.

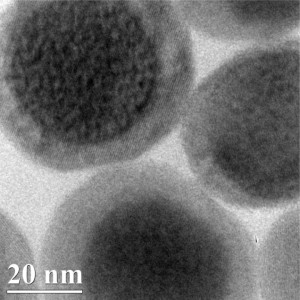

The Darwin NPU 2 is fabricated by standard 55nm CMOS technology. Every “neuromorphic” chip is made up of 576 kernels, each of which can support 256 neurons. It contains over 10 million synapses which can construct a powerful brain-inspired computing system.

“A brain-inspired chip can work like the neurons inside a human brain and it is remarkably unique in image recognition, visual and audio comprehension and naturalistic language processing,” said MA De, an associate professor at the College of Computer Science and Technology on the research team.

“In comparison with traditional chips, brain-inspired chips are more adept at processing ambiguous data, say, perception tasks. Another prominent advantage is their low energy consumption. In the process of information transmission, only those neurons that receive and process spikes will be activated while other neurons will stay dormant. In this case, energy consumption can be extremely low,” said Dr. ZHU Xiaolei at the School of Microelectronics.

To cater to the demands for voice business, Huawei Central Research Institute designed an efficient spiking neural network algorithm in accordance with the defining feature of the Darwin NPU 2 architecture, thereby increasing computing speeds and improving recognition accuracy tremendously.

Scientists have developed a host of applications, including gesture recognition, image recognition, voice recognition and decoding of electroencephalogram (EEG) signals, on the Darwin NPU 2 and reduced energy consumption by at least two orders of magnitude.

In comparison with the first generation of the Darwin NPU which was developed in 2015, the Darwin NPU 2 has escalated the number of neurons by two orders of magnitude from 2048 neurons and augmented the flexibility and plasticity of the chip configuration, thus expanding the potential for applications appreciably. The improvement in the brain-inspired chip will bring in its wake the revolution of computer technology and artificial intelligence. At present, the brain-inspired chip adopts a relatively simplified neuron model, but neurons in a real brain are far more sophisticated and many biological mechanisms have yet to be explored by neuroscientists and biologists. It is expected that in the not-too-distant future, a fascinating improvement on the Darwin NPU 2 will come over the horizon.
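The neuron counts in the press release can be cross-checked with a little arithmetic. The short Python sketch below uses only the figures quoted above (576 kernels, 256 neurons per kernel, 2048 neurons in the first generation); the variable names and the calculation itself are mine, not the researchers'.

```python
import math

# Figures taken from the Zhejiang University press release.
KERNELS = 576
NEURONS_PER_KERNEL = 256
DARWIN_1_NEURONS = 2048

darwin_2_neurons = KERNELS * NEURONS_PER_KERNEL
ratio = darwin_2_neurons / DARWIN_1_NEURONS
orders = math.log10(ratio)

print(darwin_2_neurons)   # 147456
print(ratio)              # 72.0
print(round(orders, 2))   # 1.86
```

So "up to 150,000 neurons" is a rounding of 147,456, and "two orders of magnitude" is a rounding up of a 72-fold increase.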

I haven’t been able to find a recent (i.e., post-2017) research paper featuring Darwin, but there is another chip, and research on that one was published in July 2019. First, the news.

The Tianjic chip

A July 31, 2019 article in the New York Times by Cade Metz describes the research and offers what seems to be a jaundiced perspective about the field of neuromorphic computing (Note: A link has been removed),

As corporate giants like Ford, G.M. and Waymo struggle to get their self-driving cars on the road, a team of researchers in China is rethinking autonomous transportation using a souped-up bicycle.

This bike can roll over a bump on its own, staying perfectly upright. When the man walking just behind it says “left,” it turns left, angling back in the direction it came.

It also has eyes: It can follow someone jogging several yards ahead, turning each time the person turns. And if it encounters an obstacle, it can swerve to the side, keeping its balance and continuing its pursuit.

… Chinese researchers who built the bike believe it demonstrates the future of computer hardware. It navigates the world with help from what is called a neuromorphic chip, modeled after the human brain.

Here’s a video, released by the researchers, demonstrating the chip’s abilities,

Now back to Metz’s July 31, 2019 article (Note: A link has been removed),

The short video did not show the limitations of the bicycle (which presumably tips over occasionally), and even the researchers who built the bike admitted in an email to The Times that the skills on display could be duplicated with existing computer hardware. But in handling all these skills with a neuromorphic processor, the project highlighted the wider effort to achieve new levels of artificial intelligence with novel kinds of chips.

This effort spans myriad start-up companies and academic labs, as well as big-name tech companies like Google, Intel and IBM. And as the Nature paper demonstrates, the movement is gaining significant momentum in China, a country with little experience designing its own computer processors, but which has invested heavily in the idea of an “A.I. chip.”

…

If you can get past what seems to be a patronizing attitude, there are some good explanations and cogent criticisms in the piece (Metz’s July 31, 2019 article, Note: Links have been removed),

… it faces significant limitations.

A neural network doesn’t really learn on the fly. Engineers train a neural network for a particular task before sending it out into the real world, and it can’t learn without enormous numbers of examples. OpenAI, a San Francisco artificial intelligence lab, recently built a system that could beat the world’s best players at a complex video game called Dota 2. But the system first spent months playing the game against itself, burning through millions of dollars in computing power.

Researchers aim to build systems that can learn skills in a manner similar to the way people do. And that could require new kinds of computer hardware. Dozens of companies and academic labs are now developing chips specifically for training and operating A.I. systems. The most ambitious projects are the neuromorphic processors, including the Tianjic chip under development at Tsinghua University in China.

Such chips are designed to imitate the network of neurons in the brain, not unlike a neural network but with even greater fidelity, at least in theory.

Neuromorphic chips typically include hundreds of thousands of faux neurons, and rather than just processing 1s and 0s, these neurons operate by trading tiny bursts of electrical signals, “firing” or “spiking” only when input signals reach critical thresholds, as biological neurons do.

…
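Metz's description of faux neurons that fire only when input signals reach a critical threshold can be illustrated with a leaky integrate-and-fire neuron, a common textbook simplification. This Python sketch is my own and makes no claim about how Tianjic or any other chip is actually built; the function name, the threshold of 1.0, the leak factor of 0.9, and the input values are arbitrary choices for the example.

```python
from typing import List


def simulate_lif(inputs: List[float],
                 threshold: float = 1.0,
                 leak: float = 0.9) -> List[int]:
    """Simulate one leaky integrate-and-fire neuron.

    Returns a list with 1 for each time step where the neuron spikes
    and 0 otherwise. All parameter values are illustrative assumptions.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        # Stored charge decays a little, then the new input is added.
        potential = potential * leak + current
        if potential >= threshold:
            spikes.append(1)   # threshold reached: the neuron fires
            potential = 0.0    # and resets
        else:
            spikes.append(0)   # below threshold: the neuron stays silent
    return spikes


# Four weak inputs accumulate into one spike; a single strong input
# at the end triggers a spike on its own.
print(simulate_lif([0.3, 0.3, 0.3, 0.3, 0.0, 0.0, 1.2]))
# [0, 0, 0, 1, 0, 0, 1]
```

With no input, or with input that stays too weak to accumulate to the threshold, the neuron produces no spikes at all, which is the behaviour behind the low energy consumption Dr. Zhu describes in the Darwin press release above.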

Tiernan Ray’s August 3, 2019 article about the chip for ZDNet.com offers some thoughtful criticism with a side dish of snark (Note: Links have been removed),

Nature magazine’s cover story [July 31, 2019] is about a Chinese chip [Tianjic chip] that can run traditional deep learning code and also perform “neuromorphic” operations in the same circuitry. The work’s value seems obscured by a lot of hype about “artificial general intelligence” that has no real justification.

The term “artificial general intelligence,” or AGI, doesn’t actually refer to anything, at this point, it is merely a placeholder, a kind of Rorschach Test for people to fill the void with whatever notions they have of what it would mean for a machine to “think” like a person.

Despite that fact, or perhaps because of it, AGI is an ideal marketing term to attach to a lot of efforts in machine learning. Case in point, a research paper featured on the cover of this week’s Nature magazine about a new kind of computer chip developed by researchers at China’s Tsinghua University that could “accelerate the development of AGI,” they claim.

The chip is a strange hybrid of approaches, and is intriguing, but the work leaves unanswered many questions about how it’s made, and how it achieves what researchers claim of it. And some longtime chip observers doubt the impact will be as great as suggested.

“This paper is an example of the good work that China is doing in AI,” says Linley Gwennap, longtime chip-industry observer and principal analyst with chip analysis firm The Linley Group. “But this particular idea isn’t going to take over the world.”

The premise of the paper, “Towards artificial general intelligence with hybrid Tianjic chip architecture,” is that to achieve AGI, computer chips need to change. That’s an idea supported by fervent activity these days in the land of computer chips, with lots of new chip designs being proposed specifically for machine learning.

The Tsinghua authors specifically propose that the mainstream machine learning of today needs to be merged in the same chip with what’s called “neuromorphic computing.” Neuromorphic computing, first conceived by Caltech professor Carver Mead in the early ’80s, has been an obsession for firms including IBM for years, with little practical result.

…

[Missing details about the chip] … For example, the part is said to have “reconfigurable” circuits, but how the circuits are to be reconfigured is never specified. It could be so-called “field programmable gate array,” or FPGA, technology or something else. Code for the project is not provided by the authors as it often is for such research; the authors offer to provide the code “on reasonable request.”

More important is the fact the chip may have a hard time stacking up to a lot of competing chips out there, says analyst Gwennap. …

…

“What the paper calls ANN and SNN are two very different means of solving similar problems, kind of like rotating (helicopter) and fixed wing (airplane) are for aviation,” says Gwennap. “Ultimately, I expect ANN [?] and SNN [spiking neural network] to serve different end applications, but I don’t see a need to combine them in a single chip; you just end up with a chip that is OK for two things but not great for anything.”

But you also end up generating a lot of buzz, and given the tension between the U.S. and China over all things tech, and especially A.I., the notion China is stealing a march on the U.S. in artificial general intelligence — whatever that may be — is a summer sizzler of a headline.

ANN could be either artificial neural network or something mentioned earlier in Ray’s article, a shortened version of CANN [continuous attractor neural network].

Shelly Fan’s August 7, 2019 article for the SingularityHub is almost as enthusiastic about the work as the podcasters for Nature magazine were (a little more about that later),

The study shows that China is readily nipping at the heels of Google, Facebook, NVIDIA, and other tech behemoths investing in developing new AI chip designs—hell, with billions in government investment it may have already had a head start. A sweeping AI plan from 2017 looks to catch up with the US on AI technology and application by 2020. By 2030, China’s aiming to be the global leader—and a champion for building general AI that matches humans in intellectual competence.

The country’s ambition is reflected in the team’s parting words.

“Our study is expected to stimulate AGI [artificial general intelligence] development by paving the way to more generalized hardware platforms,” said the authors, led by Dr. Luping Shi at Tsinghua University.

…

Using nanoscale fabrication, the team arranged 156 FCores, containing roughly 40,000 neurons and 10 million synapses, onto a chip less than a fifth of an inch in length and width. Initial tests showcased the chip’s versatility, in that it can run both SNNs and deep learning algorithms such as the popular convolutional neural network (CNNs) often used in machine vision.

Compared to IBM TrueNorth, the density of Tianjic’s cores increased by 20 percent, speeding up performance ten times and increasing bandwidth at least 100-fold, the team said. When pitted against GPUs, the current hardware darling of machine learning, the chip increased processing throughput up to 100 times, while using just a sliver (1/10,000) of energy.

…

BTW, Fan is a neuroscientist (from her SingularityHub profile page),

Shelly Xuelai Fan is a neuroscientist-turned-science writer. She completed her PhD in neuroscience at the University of British Columbia, where she developed novel treatments for neurodegeneration. While studying biological brains, she became fascinated with AI and all things biotech. Following graduation, she moved to UCSF [University of California at San Francisco] to study blood-based factors that rejuvenate aged brains. She is the co-founder of Vantastic Media, a media venture that explores science stories through text and video, and runs the award-winning blog NeuroFantastic.com. Her first book, “Will AI Replace Us?” (Thames & Hudson) will be out April 2019.

On to Nature. Here’s a link to and a citation for the paper,

Towards artificial general intelligence with hybrid Tianjic chip architecture by Jing Pei, Lei Deng, Sen Song, Mingguo Zhao, Youhui Zhang, Shuang Wu, Guanrui Wang, Zhe Zou, Zhenzhi Wu, Wei He, Feng Chen, Ning Deng, Si Wu, Yu Wang, Yujie Wu, Zheyu Yang, Cheng Ma, Guoqi Li, Wentao Han, Huanglong Li, Huaqiang Wu, Rong Zhao, Yuan Xie & Luping Shi. Nature volume 572, pages 106–111 (2019) DOI: https://doi.org/10.1038/s41586-019-1424-8 Published: 31 July 2019 Issue Date: 01 August 2019

This paper is behind a paywall.

The July 31, 2019 Nature podcast includes a segment about the Tianjic chip research from China. It starts at the 9 mins. 13 secs. mark (AI hardware), or you can scroll down about 55% of the way to the transcript of the interview with Luke Fleet, the Nature editor who dealt with the paper.

Some thoughts

The pundits put me in mind of my own reaction when I heard about phones that could take pictures. I didn’t see the point but, as it turned out, there was a perfectly good reason for combining what had been two separate activities into one device. It was no longer just a telephone and I had completely missed the point.

This too may be the case with the Tianjic chip. I think it’s too early to say whether or not it represents a new type of chip or if it’s a dead end.