The line between life and death may not be what we thought it was, according to research reported in April 2019. Ed Yong’s April 17, 2019 article (behind a paywall) for The Atlantic was my first inkling about the life-death questions raised by research performed at Yale University (Note: Links have been removed),

The brain, supposedly, cannot long survive without blood. Within seconds, oxygen supplies deplete, electrical activity fades, and unconsciousness sets in. If blood flow is not restored, within minutes, neurons start to die in a rapid, irreversible, and ultimately fatal wave.

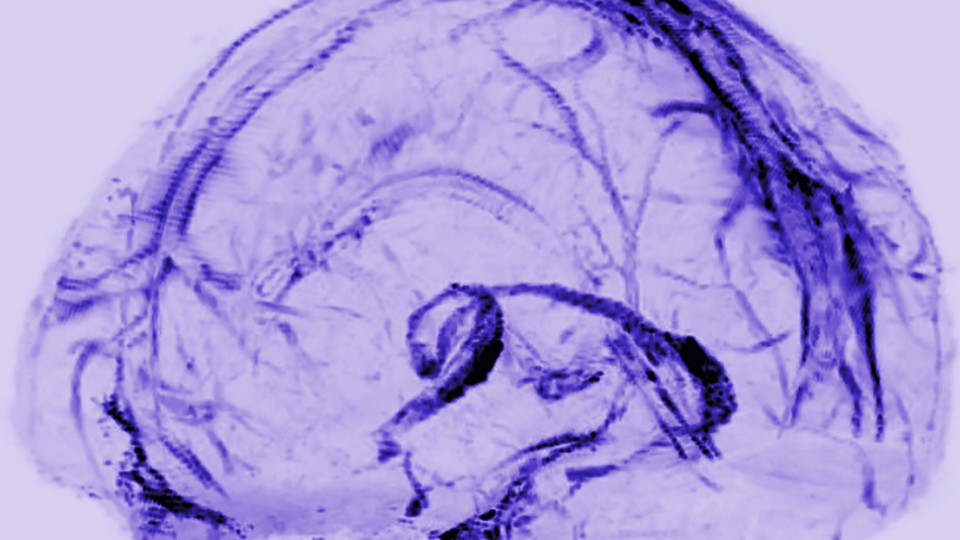

But maybe not? According to a team of scientists led by Nenad Sestan at Yale School of Medicine, this process might play out over a much longer time frame, and perhaps isn’t as inevitable or irreparable as commonly believed. Sestan and his colleagues showed this in dramatic fashion—by preserving and restoring signs of activity in the isolated brains of pigs that had been decapitated four hours earlier.

The team sourced 32 pig brains from a slaughterhouse, placed them in spherical chambers, and infused them with nutrients and protective chemicals, using pumps that mimicked the beats of a heart. This system, dubbed BrainEx, preserved the overall architecture of the brains, preventing them from degrading. It restored flow in their blood vessels, which once again became sensitive to dilating drugs. It stopped many neurons and other cells from dying, and reinstated their ability to consume sugar and oxygen. Some of these rescued neurons even started to fire. “Everything was surprising,” says Zvonimir Vrselja, who performed most of the experiments along with Stefano Daniele.

…

… “I don’t see anything in this report that should undermine confidence in brain death as a criterion of death,” says Winston Chiong, a neurologist at the University of California at San Francisco. The matter of when to declare someone dead has become more controversial since doctors began relying more heavily on neurological signs, starting around 1968, when the criteria for “brain death” were defined. But that diagnosis typically hinges on the loss of brainwide activity—a line that, at least for now, is still final and irreversible. After MIT Technology Review broke the news of Sestan’s work a year ago, he started receiving emails from people asking whether he could restore brain function to their loved ones. He very much cannot. BrainEx isn’t a resurrection chamber.

“It’s not going to result in human brain transplants,” adds Karen Rommelfanger, who directs Emory University’s neuroethics program. “And I don’t think this means that the singularity is coming, or that radical life extension is more possible than before.”

So why do the study? “There’s potential for using this method to develop innovative treatments for patients with strokes or other types of brain injuries, and there’s a real need for those kinds of treatments,” says L. Syd M Johnson, a neuroethicist at Michigan Technological University. The BrainEx method might not be able to fully revive hours-dead brains, but Yama Akbari, a critical-care neurologist at the University of California at Irvine, wonders whether it would be more successful if applied minutes after death. Alternatively, it could help to keep oxygen-starved brains alive and intact while patients wait to be treated. “It’s an important landmark study,” Akbari says.

…

In his article, Yong notes that the study still needs to be replicated. He also probes some of the ethical issues associated with the latest neuroscience research.

Nature published the Yale study,

Restoration of brain circulation and cellular functions hours post-mortem by Zvonimir Vrselja, Stefano G. Daniele, John Silbereis, Francesca Talpo, Yury M. Morozov, André M. M. Sousa, Brian S. Tanaka, Mario Skarica, Mihovil Pletikos, Navjot Kaur, Zhen W. Zhuang, Zhao Liu, Rafeed Alkawadri, Albert J. Sinusas, Stephen R. Latham, Stephen G. Waxman & Nenad Sestan. Nature 568, 336–343 (2019) DOI: https://doi.org/10.1038/s41586-019-1099-1 Published 17 April 2019 Issue Date 18 April 2019

This paper is behind a paywall.

Two neuroethicists had this to say (link to their commentary in Nature follows) as per an April 17, 2019 news release from Case Western Reserve University (also on EurekAlert), Note: Links have been removed,

The brain is more resilient than previously thought. In a groundbreaking experiment published in this week’s issue of Nature, neuroscientists created an artificial circulation system that successfully restored some functions and structures in donated pig brains–up to four hours after the pigs were butchered at a USDA food processing facility. Though there was no evidence of restored consciousness, brains from the pigs were without oxygen for hours, yet could still support key functions provided by the artificial system. The result challenges the notion that mammalian brains are fully and irreversibly damaged by a lack of oxygen.

“The assumptions have always been that after a couple minutes of anoxia, or no oxygen, the brain is ‘dead,’” says Stuart Youngner, MD, who co-authored a commentary accompanying the study with Insoo Hyun, PhD, both professors in the Department of Bioethics at Case Western Reserve University School of Medicine. “The system used by the researchers begs the question: How long should we try to save people?”

In the pig experiment, researchers used an artificial perfusate (a type of cell-free “artificial blood”), which helped brain cells maintain their structure and some functions. Resuscitative efforts in humans, like CPR, are also designed to get oxygen to the brain and stave off brain damage. After a period of time, if a person doesn’t respond to resuscitative efforts, emergency medical teams declare them dead.

The acceptable duration of resuscitative efforts is somewhat uncertain. “It varies by country, emergency medical team, and hospital,” Youngner said. Promising results from the pig experiment further muddy the waters about when to stop life-saving efforts.

At some point, emergency teams must make a critical switch from trying to save a patient, to trying to save organs, said Youngner. “In Europe, when emergency teams stop resuscitation efforts, they declare a patient dead, and then restart the resuscitation effort to circulate blood to the organs so they can preserve them for transplantation.”

The switch can involve extreme means. In the commentary, Youngner and Hyun describe how some organ recovery teams use a balloon to physically cut off blood circulation to the brain after declaring a person dead, to prepare the organs for transplantation.

The pig experiment implies that sophisticated efforts to perfuse the brain might maintain brain cells. If technologies like those used in the pig experiment could be adapted for humans (a long way off, caution Youngner and Hyun), some people who, today, are typically declared legally dead after a catastrophic loss of oxygen could, tomorrow, become candidates for brain resuscitation, instead of organ donation.

Said Youngner, “As we get better at resuscitating the brain, we need to decide when are we going to save a patient, and when are we going to declare them dead–and save five or more who might benefit from an organ.”

Because brain resuscitation strategies are in their infancy and will surely trigger additional efforts, the scientific and ethics community needs to begin discussions now, says Hyun. “This study is likely to raise a lot of public concerns. We hoped to get ahead of the hype and offer an early, reasoned response to this scientific advance.”

Both Youngner and Hyun praise the experiment as a “major scientific advancement” that is overwhelmingly positive. It raises the tantalizing possibility that the grave risks of brain damage caused by a lack of oxygen could, in some cases, be reversible.

“Pig brains are similar in many ways to human brains, which makes this study so compelling,” Hyun said. “We urge policymakers to think proactively about what this line of research might mean for ongoing debates around organ donation and end of life care.”

Here’s a link to and a citation for the Nature commentary,

Pig experiment challenges assumptions around brain damage in people by Stuart Youngner and Insoo Hyun. Nature 568, 302-304 (2019) DOI: 10.1038/d41586-019-01169-8 April 17, 2019

This paper is open access.

I was hoping to find out more about BrainEx, but this April 17, 2019 US National Institute of Mental Health news release is all I’ve been able to find in my admittedly brief online search. The news release offers more celebration than technical detail.

Quick comment

Interestingly, there hasn’t been much of a furor over this work. Not yet.