Identification of the precise 3-D coordinates of iron, shown in red, and platinum atoms in an iron-platinum nanoparticle. Courtesy of Colin Ophus and Florian Nickel/Berkeley Lab

The image of the iron-platinum nanoparticle (referenced in the headline) reminds me of foetal ultrasound images. A Feb. 1, 2017 news item on ScienceDaily tells us more,

In the world of the very tiny, perfection is rare: virtually all materials have defects on the atomic level. These imperfections — missing atoms, atoms of one type swapped for another, and misaligned atoms — can uniquely determine a material’s properties and function. Now, UCLA [University of California at Los Angeles] physicists and collaborators have mapped the coordinates of more than 23,000 individual atoms in a tiny iron-platinum nanoparticle to reveal the material’s defects.

The results demonstrate that the positions of tens of thousands of atoms can be precisely identified and then fed into quantum mechanics calculations to correlate imperfections and defects with material properties at the single-atom level.

A Feb. 1, 2017 UCLA news release, which originated the news item, provides more detail about the work,

Jianwei “John” Miao, a UCLA professor of physics and astronomy and a member of UCLA’s California NanoSystems Institute, led the international team in mapping the atomic-level details of the bimetallic nanoparticle, more than a trillion of which could fit within a grain of sand.

“No one has seen this kind of three-dimensional structural complexity with such detail before,” said Miao, who is also a deputy director of the Science and Technology Center on Real-Time Functional Imaging. This new National Science Foundation-funded consortium consists of scientists at UCLA and five other colleges and universities who are using high-resolution imaging to address questions in the physical sciences, life sciences and engineering.

Miao and his team focused on an iron-platinum alloy, a very promising material for next-generation magnetic storage media and permanent magnet applications.

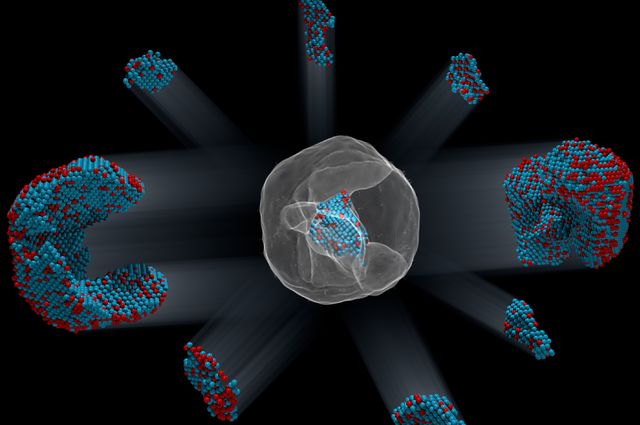

By taking multiple images of the iron-platinum nanoparticle with an advanced electron microscope at Lawrence Berkeley National Laboratory and using powerful reconstruction algorithms developed at UCLA, the researchers determined the precise three-dimensional arrangement of atoms in the nanoparticle.

“For the first time, we can see individual atoms and chemical composition in three dimensions. Everything we look at, it’s new,” Miao said.

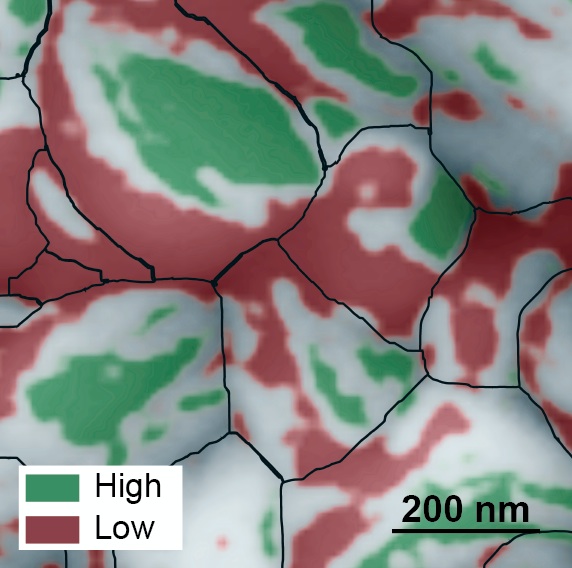

The team identified and located more than 6,500 iron and 16,600 platinum atoms and showed how the atoms are arranged in nine grains, each of which contains different ratios of iron and platinum atoms. Miao and his colleagues showed that atoms closer to the interior of the grains are more regularly arranged than those near the surfaces. They also observed that the interfaces between grains, called grain boundaries, are more disordered.

“Understanding the three-dimensional structures of grain boundaries is a major challenge in materials science because they strongly influence the properties of materials,” Miao said. “Now we are able to address this challenge by precisely mapping out the three-dimensional atomic positions at the grain boundaries for the first time.”

The researchers then used the three-dimensional coordinates of the atoms as inputs into quantum mechanics calculations to determine the magnetic properties of the iron-platinum nanoparticle. They observed abrupt changes in magnetic properties at the grain boundaries.

“This work makes significant advances in characterization capabilities and expands our fundamental understanding of structure-property relationships, which is expected to find broad applications in physics, chemistry, materials science, nanoscience and nanotechnology,” Miao said.

In the future, as the researchers continue to determine the three-dimensional atomic coordinates of more materials, they plan to establish an online databank for the physical sciences, analogous to protein databanks for the biological and life sciences. “Researchers can use this databank to study material properties truly on the single-atom level,” Miao said.

Miao and his team also look forward to applying their method called GENFIRE (GENeralized Fourier Iterative Reconstruction) to biological and medical applications. “Our three-dimensional reconstruction algorithm might be useful for imaging like CT scans,” Miao said. Compared with conventional reconstruction methods, GENFIRE requires fewer images to compile an accurate three-dimensional structure.

That means that radiation-sensitive objects can be imaged with lower doses of radiation.

The US Dept. of Energy (DOE) Lawrence Berkeley National Laboratory issued its own Feb. 1, 2017 news release (also on EurekAlert) about the work, focusing on how its equipment made this breakthrough possible (it repeats a little of the information from the UCLA news release),

Scientists used one of the world’s most powerful electron microscopes to map the precise location and chemical type of 23,000 atoms in an extremely small particle made of iron and platinum.

The 3-D reconstruction reveals the arrangement of atoms in unprecedented detail, enabling the scientists to measure chemical order and disorder in individual grains, which sheds light on the material’s properties at the single-atom level. Insights gained from the particle’s structure could lead to new ways to improve its magnetic performance for use in high-density, next-generation hard drives.

What’s more, the technique used to create the reconstruction, atomic electron tomography (which is like an incredibly high-resolution CT scan), lays the foundation for precisely mapping the atomic composition of other useful nanoparticles. This could reveal how to optimize the particles for more efficient catalysts, stronger materials, and disease-detecting fluorescent tags.

Microscopy data was obtained and analyzed by scientists from the Department of Energy’s Lawrence Berkeley National Laboratory (Berkeley Lab) at the Molecular Foundry, in collaboration with Foundry users from UCLA, Oak Ridge National Laboratory, and the United Kingdom’s University of Birmingham. …

Atoms are the building blocks of matter, and the patterns in which they’re arranged dictate a material’s properties. These patterns can also be exploited to greatly improve a material’s function, which is why scientists are eager to determine the 3-D structure of nanoparticles at the smallest scale possible.

“Our research is a big step in this direction. We can now take a snapshot that shows the positions of all the atoms in a nanoparticle at a specific point in its growth. This will help us learn how nanoparticles grow atom by atom, and it sets the stage for a materials-design approach starting from the smallest building blocks,” says Mary Scott, who conducted the research while she was a Foundry user, and who is now a staff scientist. Scott and fellow Foundry scientists Peter Ercius and Colin Ophus developed the method in close collaboration with Jianwei Miao, a UCLA professor of physics and astronomy.

Their nanoparticle reconstruction builds on an achievement they reported last year in which they measured the coordinates of more than 3,000 atoms in a tungsten needle to a precision of 19 trillionths of a meter (19 picometers), which is many times smaller than a hydrogen atom. Now, they’ve taken the same precision, added the ability to distinguish different elements, and scaled up the reconstruction to include tens of thousands of atoms.

Importantly, their method maps the position of each atom in a single, unique nanoparticle. In contrast, X-ray crystallography and cryo-electron microscopy plot the average position of atoms from many identical samples. These methods make assumptions about the arrangement of atoms, which isn’t a good fit for nanoparticles because no two are alike.

“We need to determine the location and type of each atom to truly understand how a nanoparticle functions at the atomic scale,” says Ercius.

A TEAM approach

The scientists’ latest accomplishment hinged on the use of one of the highest-resolution transmission electron microscopes in the world, called TEAM I. It’s located at the National Center for Electron Microscopy, which is a Molecular Foundry facility. The microscope scans a sample with a focused beam of electrons, and then measures how the electrons interact with the atoms in the sample. It also has a piezo-controlled stage that positions samples with unmatched stability and position-control accuracy.

The researchers began growing an iron-platinum nanoparticle from its constituent elements, and then stopped the particle’s growth before it was fully formed. They placed the “partially baked” particle in the TEAM I stage, obtained a 2-D projection of its atomic structure, rotated it a few degrees, obtained another projection, and so on. Each 2-D projection provides a little more information about the full 3-D structure of the nanoparticle.
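The tilt-and-project procedure described above can be illustrated with a toy sketch. This is not the researchers' acquisition code; the three-atom "particle", the choice of rotation axis, and the function name `project` are all assumptions made for illustration. It only shows why rotating the sample adds information: atoms that overlap in one 2-D projection separate in another.

```python
import math

def project(points, tilt_deg):
    """Rotate 3-D points about the y axis by tilt_deg, then drop z to get a 2-D projection."""
    t = math.radians(tilt_deg)
    c, s = math.cos(t), math.sin(t)
    projected = []
    for x, y, z in points:
        x_rot = c * x + s * z  # x coordinate after rotating about the y axis
        projected.append((round(x_rot, 6), y))  # rounding hides floating-point noise
    return projected

# Hypothetical toy "particle": the first two atoms sit behind one another at 0 degrees.
atoms = [(1.0, 0.0, 0.0), (1.0, 0.0, 2.0), (0.0, 1.0, 0.0)]

# One projection per tilt angle, as in a tilt series.
tilt_series = {angle: project(atoms, angle) for angle in (0, 30, 60, 90)}

for angle, image in tilt_series.items():
    print(angle, image)
```

At 0 degrees the first two atoms land on the same 2-D position; at other angles they separate, which is the extra information each new projection contributes.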

They sent the projections to Miao at UCLA, who used a sophisticated computer algorithm to convert the 2-D projections into a 3-D reconstruction of the particle. The individual atomic coordinates and chemical types were then traced from the 3-D density based on the knowledge that iron atoms are lighter than platinum atoms. The resulting atomic structure contains 6,569 iron atoms and 16,627 platinum atoms, with each atom’s coordinates precisely plotted to less than the width of a hydrogen atom.
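As a rough illustration of telling the two elements apart by how strongly they show up in the reconstruction, the sketch below splits a list of peak intensities into a "light" and a "heavy" group with a simple one-dimensional two-means clustering. This is a stand-in technique, not the method used in the study; the intensity values and the function name `classify_by_intensity` are hypothetical, and it assumes heavier platinum atoms produce the stronger peaks. The atom counts at the end are the ones quoted in the news release.

```python
def classify_by_intensity(intensities, iterations=20):
    """Label each peak "Fe" (weaker) or "Pt" (stronger) using 1-D two-means clustering."""
    low, high = min(intensities), max(intensities)
    for _ in range(iterations):
        threshold = (low + high) / 2
        light = [v for v in intensities if v <= threshold]
        heavy = [v for v in intensities if v > threshold]
        if not light or not heavy:
            break  # all values fell into one group; nothing more to refine
        low = sum(light) / len(light)    # mean of the weaker group
        high = sum(heavy) / len(heavy)   # mean of the stronger group
    threshold = (low + high) / 2
    return ["Fe" if v <= threshold else "Pt" for v in intensities]

# Hypothetical peak intensities in arbitrary units.
peaks = [0.9, 1.1, 3.0, 2.8, 1.0, 3.2]
labels = classify_by_intensity(peaks)

# Atom counts quoted in the news release.
iron_atoms, platinum_atoms = 6569, 16627
total_atoms = iron_atoms + platinum_atoms
fe_fraction = iron_atoms / total_atoms

print(labels)
print(total_atoms, round(fe_fraction, 3))
```

The quoted counts add up to 23,196 atoms, consistent with the "more than 23,000" figure, with iron making up a bit over a quarter of them.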

Translating the data into scientific insights

Interesting features emerged at this extreme scale after Molecular Foundry scientists used code they developed to analyze the atomic structure. For example, the analysis revealed chemical order and disorder in interlocking grains, in which the iron and platinum atoms are arranged in different patterns. This has large implications for how the particle grew and its real-world magnetic properties. The analysis also revealed single-atom defects and the width of disordered boundaries between grains, which was not previously possible in complex 3-D boundaries.

“The important materials science problem we are tackling is how this material transforms from a highly randomized structure, what we call a chemically-disordered structure, into a regular highly-ordered structure with the desired magnetic properties,” says Ophus.

To explore how the various arrangements of atoms affect the nanoparticle’s magnetic properties, scientists from DOE’s Oak Ridge National Laboratory ran computer calculations on the Titan supercomputer at ORNL–using the coordinates and chemical type of each atom–to simulate the nanoparticle’s behavior in a magnetic field. This allowed the scientists to see patterns of atoms that are very magnetic, which is ideal for hard drives. They also saw patterns with poor magnetic properties that could sap a hard drive’s performance.

“This could help scientists learn how to steer the growth of iron-platinum nanoparticles so they develop more highly magnetic patterns of atoms,” says Ercius.

Adds Scott, “More broadly, the imaging technique will shed light on the nucleation and growth of ordered phases within nanoparticles, which isn’t fully theoretically understood but is critically important to several scientific disciplines and technologies.”

The folks at the Berkeley Lab have created a video (notice where the still image from the beginning of this post appears),

The Oak Ridge National Laboratory (ORNL), not wanting to be left out, has been mentioned in a Feb. 3, 2017 news item on ScienceDaily,

… researchers working with magnetic nanoparticles at the University of California, Los Angeles (UCLA), and the US Department of Energy’s (DOE’s) Lawrence Berkeley National Laboratory (Berkeley Lab) approached computational scientists at DOE’s Oak Ridge National Laboratory (ORNL) to help solve a unique problem: to model magnetism at the atomic level using experimental data from a real nanoparticle.

“These types of calculations have been done for ideal particles with ideal crystal structures but not for real particles,” said Markus Eisenbach, a computational scientist at the Oak Ridge Leadership Computing Facility (OLCF), a DOE Office of Science User Facility located at ORNL.

A Feb. 2, 2017 ORNL news release on EurekAlert, which originated the news item, explains further how its team contributed to the research,

Eisenbach develops quantum mechanical electronic structure simulations that predict magnetic properties in materials. Working with Paul Kent, a computational materials scientist at ORNL’s Center for Nanophase Materials Sciences, the team collaborated with researchers at UCLA and Berkeley Lab’s Molecular Foundry to combine world-class experimental data with world-class computing to do something new–simulate magnetism atom by atom in a real nanoparticle.

Using the new data from the research teams on the West Coast, Eisenbach and Kent were able to precisely model the measured atomic structure, including defects, from a unique iron-platinum (FePt) nanoparticle and simulate its magnetic properties on the 27-petaflop Titan supercomputer at the OLCF.

Electronic structure codes take atomic and chemical structure and solve for the corresponding magnetic properties. However, these structures are typically derived from many 2-D electron microscopy or x-ray crystallography images averaged together, resulting in a representative, but not true, 3-D structure.

“In this case, researchers were able to get the precise 3-D structure for a real particle,” Eisenbach said. “The UCLA group has developed a new experimental technique where they can tell where the atoms are–the coordinates–and the chemical resolution, or what they are — iron or platinum.”

The ORNL news release goes on to describe the work from the perspective of the people who ran the supercomputer simulations,

A Supercomputing Milestone

Magnetism at the atomic level is driven by quantum mechanics — a fact that has shaken up classical physics calculations and called for increasingly complex, first-principle calculations, or calculations working forward from fundamental physics equations rather than relying on assumptions that reduce computational workload.

For magnetic recording and storage devices, researchers are particularly interested in magnetic anisotropy, or what direction magnetism favors in an atom.

“If the anisotropy is too weak, a bit written to the nanoparticle might flip at room temperature,” Kent said.
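Kent's point can be made concrete with a standard back-of-envelope estimate that is not part of the news release: comparing a particle's anisotropy energy (anisotropy constant times volume) with the thermal energy at room temperature. The sketch below is only an illustration. The anisotropy constants and the particle diameter are hypothetical placeholder values, not figures from the study, and the particle is assumed to be a sphere.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K

def stability_ratio(anisotropy_j_per_m3, diameter_nm, temperature_k=300.0):
    """Ratio of anisotropy energy (K * V) to thermal energy (k_B * T) for a spherical particle."""
    radius_m = diameter_nm * 1e-9 / 2
    volume_m3 = 4.0 / 3.0 * math.pi * radius_m ** 3
    return anisotropy_j_per_m3 * volume_m3 / (K_B * temperature_k)

# Hypothetical values chosen only to contrast strong and weak anisotropy.
diameter_nm = 8.0
strong = stability_ratio(5e6, diameter_nm)  # assumed strong anisotropy, J/m^3
weak = stability_ratio(5e5, diameter_nm)    # assumed anisotropy ten times weaker

print(round(strong, 1), round(weak, 1))
```

A ratio of roughly 40 to 60 is often quoted as a rule of thumb for long-term data retention. With these placeholder numbers the weaker case falls below that range, which is the situation in which a stored bit could flip on its own at room temperature.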

To solve for magnetic anisotropy, Eisenbach and Kent used two computational codes to compare and validate results.

To simulate a supercell of about 1,300 atoms from strongly magnetic regions of the 23,000-atom nanoparticle, they used the Linear Scaling Multiple Scattering (LSMS) code, a first-principles density functional theory code developed at ORNL.

“The LSMS code was developed for large magnetic systems and can tackle lots of atoms,” Kent said.

As principal investigator on 2017, 2016, and previous INCITE program awards, Eisenbach has scaled the LSMS code to Titan for a range of magnetic materials projects, and the in-house code has been optimized for Titan’s accelerated architecture, speeding up calculations more than 8 times on the machine’s GPUs. Exceptionally capable of crunching large magnetic systems quickly, the LSMS code received an Association for Computing Machinery Gordon Bell Prize in high-performance computing achievement in 1998 and 2009, and developments continue to enhance the code for new architectures.

Working with Renat Sabirianov at the University of Nebraska at Omaha, the team also ran VASP, a simulation package that is better suited for smaller atom counts, to simulate regions of about 32 atoms.

“With both approaches, we were able to confirm that the local VASP results were consistent with the LSMS results, so we have a high confidence in the simulations,” Eisenbach said.

Computer simulations revealed that grain boundaries have a strong effect on magnetism. “We found that the magnetic anisotropy energy suddenly transitions at the grain boundaries. These magnetic properties are very important,” Miao said.

In the future, researchers hope that advances in computing and simulation will make a full-particle simulation possible — as first-principles calculations are currently too intensive to solve small-scale magnetism for regions larger than a few thousand atoms.

Also, future simulations like these could show how different fabrication processes, such as the temperature at which nanoparticles are formed, influence magnetism and performance.

“There’s a hope going forward that one would be able to use these techniques to look at nanoparticle growth and understand how to optimize growth for performance,” Kent said.

Finally, here’s a link to and a citation for the paper,

Deciphering chemical order/disorder and material properties at the single-atom level by Yongsoo Yang, Chien-Chun Chen, M. C. Scott, Colin Ophus, Rui Xu, Alan Pryor, Li Wu, Fan Sun, Wolfgang Theis, Jihan Zhou, Markus Eisenbach, Paul R. C. Kent, Renat F. Sabirianov, Hao Zeng, Peter Ercius, & Jianwei Miao. Nature 542, 75–79 (02 February 2017) doi:10.1038/nature21042 Published online 01 February 2017

This paper is behind a paywall.