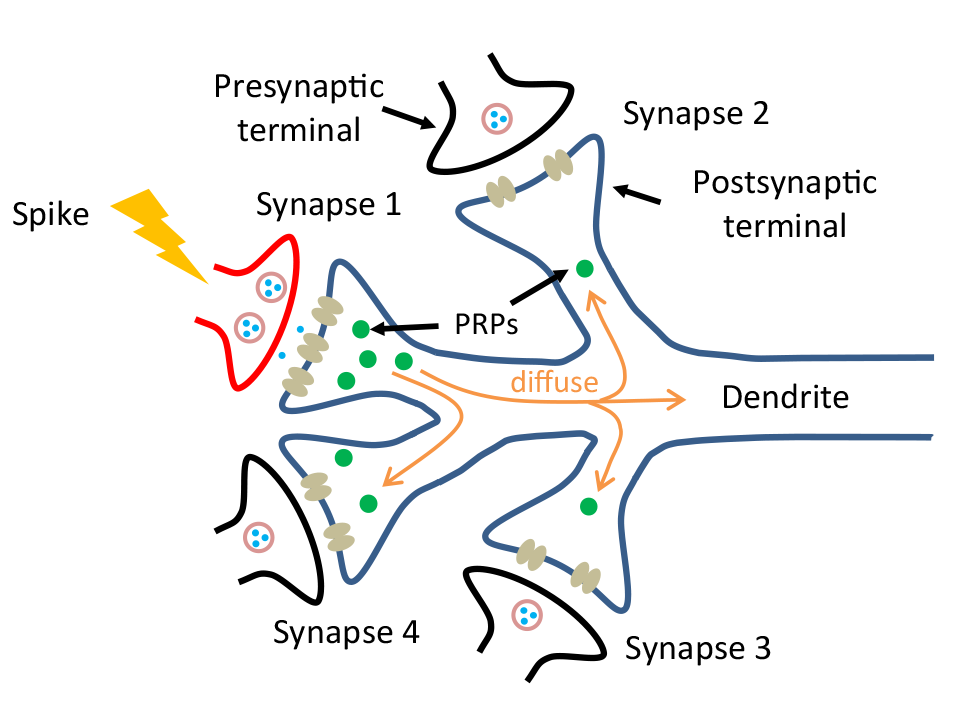

According to researchers at Helmholtz-Zentrum Dresden-Rossendorf and the rest of the international team collaborating on the work, it’s time to look more closely at plasticity in the neuronal membrane.

From the abstract for their paper, “Intrinsic plasticity of silicon nanowire neurotransistors for dynamic memory and learning functions” by Eunhye Baek, Nikhil Ranjan Das, Carlo Vittorio Cannistraci, Taiuk Rim, Gilbert Santiago Cañón Bermúdez, Khrystyna Nych, Hyeonsu Cho, Kihyun Kim, Chang-Ki Baek, Denys Makarov, Ronald Tetzlaff, Leon Chua, Larysa Baraban & Gianaurelio Cuniberti. Nature Electronics volume 3, pages 398–408 (2020). DOI: https://doi.org/10.1038/s41928-020-0412-1 Published online: 25 May 2020. Issue Date: July 2020.

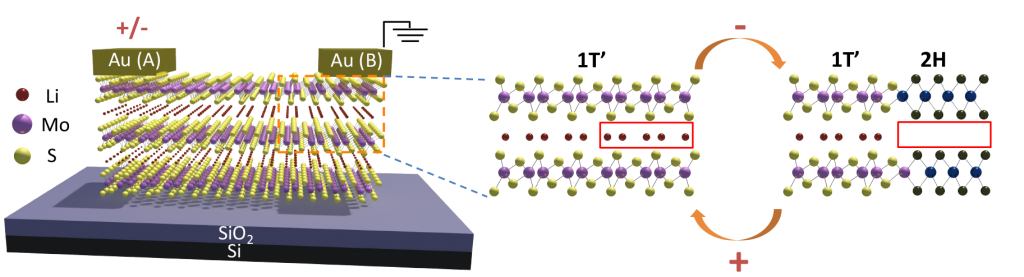

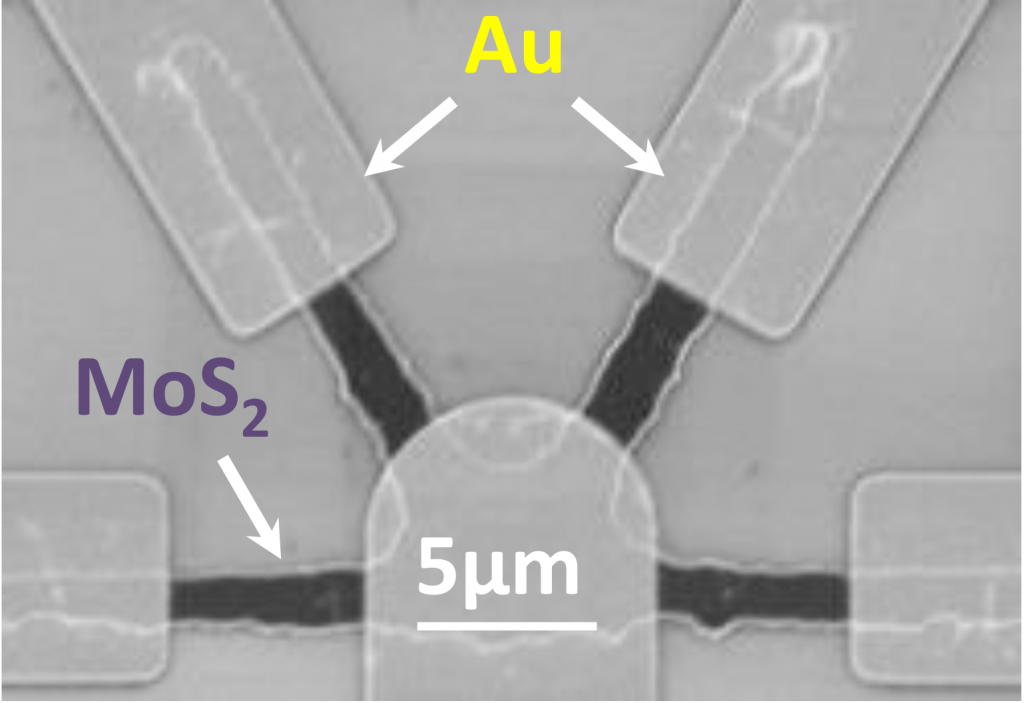

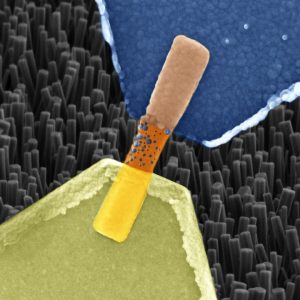

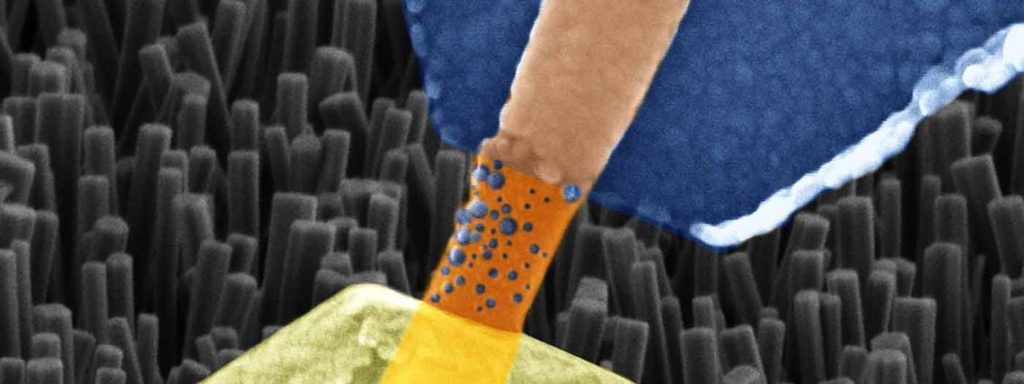

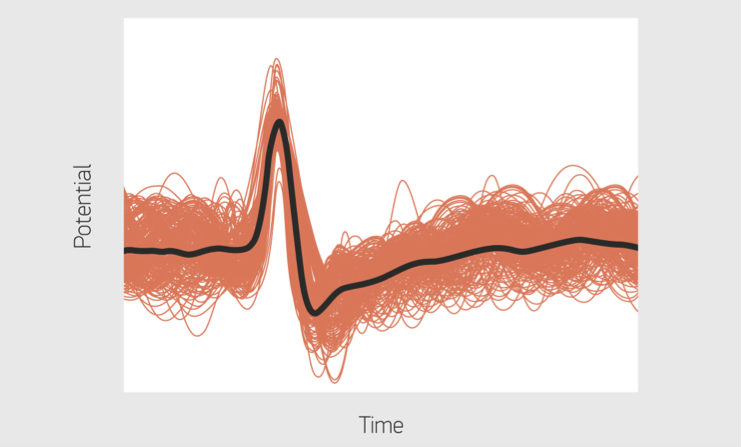

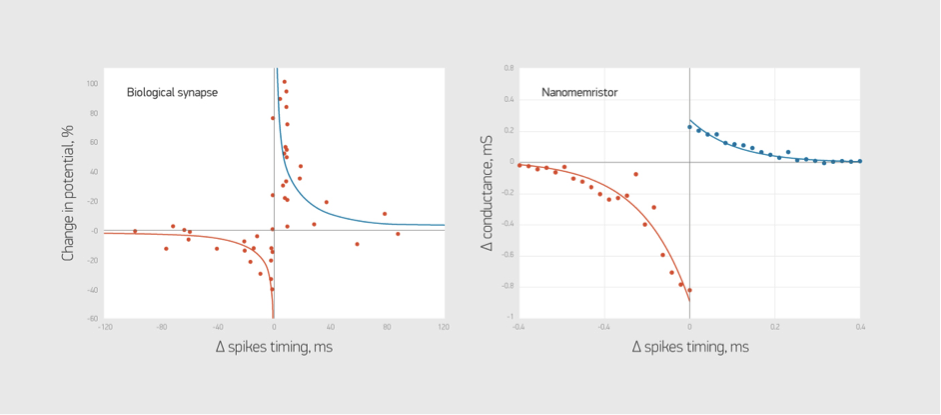

Neuromorphic architectures merge learning and memory functions within a single unit cell and in a neuron-like fashion. Research in the field has been mainly focused on the plasticity of artificial synapses. However, the intrinsic plasticity of the neuronal membrane is also important in the implementation of neuromorphic information processing. Here we report a neurotransistor made from a silicon nanowire transistor coated by an ion-doped sol–gel silicate film that can emulate the intrinsic plasticity of the neuronal membrane.

A July 14, 2020 news item on Nanowerk announced the research (Note: A link has been removed),

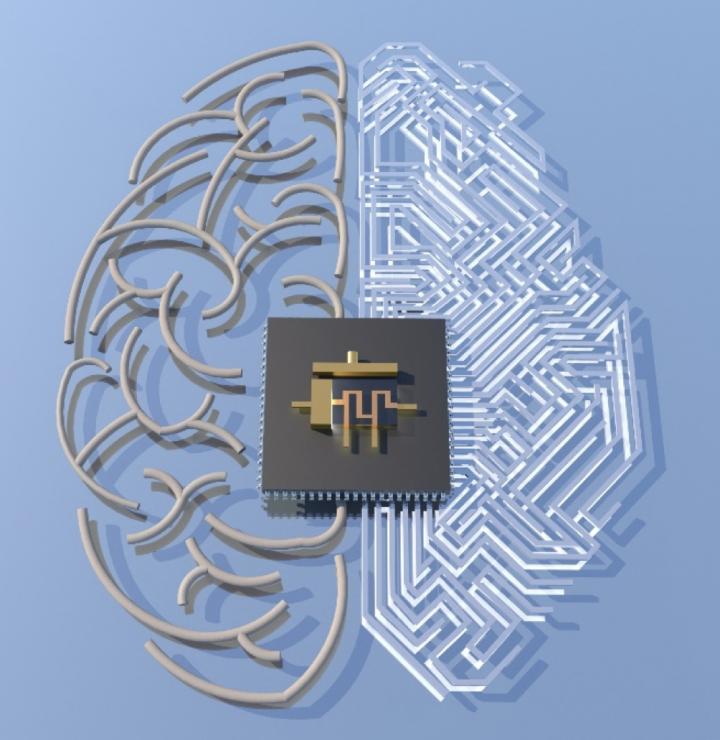

Especially activities in the field of artificial intelligence, like teaching robots to walk or precise automatic image recognition, demand ever more powerful, yet at the same time more economical computer chips. While the optimization of conventional microelectronics is slowly reaching its physical limits, nature offers us a blueprint how information can be processed and stored quickly and efficiently: our own brain.

For the very first time, scientists at TU Dresden and the Helmholtz-Zentrum Dresden-Rossendorf (HZDR) have now successfully imitated the functioning of brain neurons using semiconductor materials. They have published their research results in the journal Nature Electronics (“Intrinsic plasticity of silicon nanowire neurotransistors for dynamic memory and learning functions”).

A July 14, 2020 Helmholtz-Zentrum Dresden-Rossendorf press release (also on EurekAlert), which originated the news item, delves further into the research,

Today, enhancing the performance of microelectronics is usually achieved by reducing component size, especially of the individual transistors on the silicon computer chips. “But that can’t go on indefinitely – we need new approaches”, Larysa Baraban asserts. The physicist, who has been working at HZDR since the beginning of the year, is one of the three primary authors of the international study, which involved a total of six institutes. One approach is based on the brain, combining data processing with data storage in an artificial neuron.

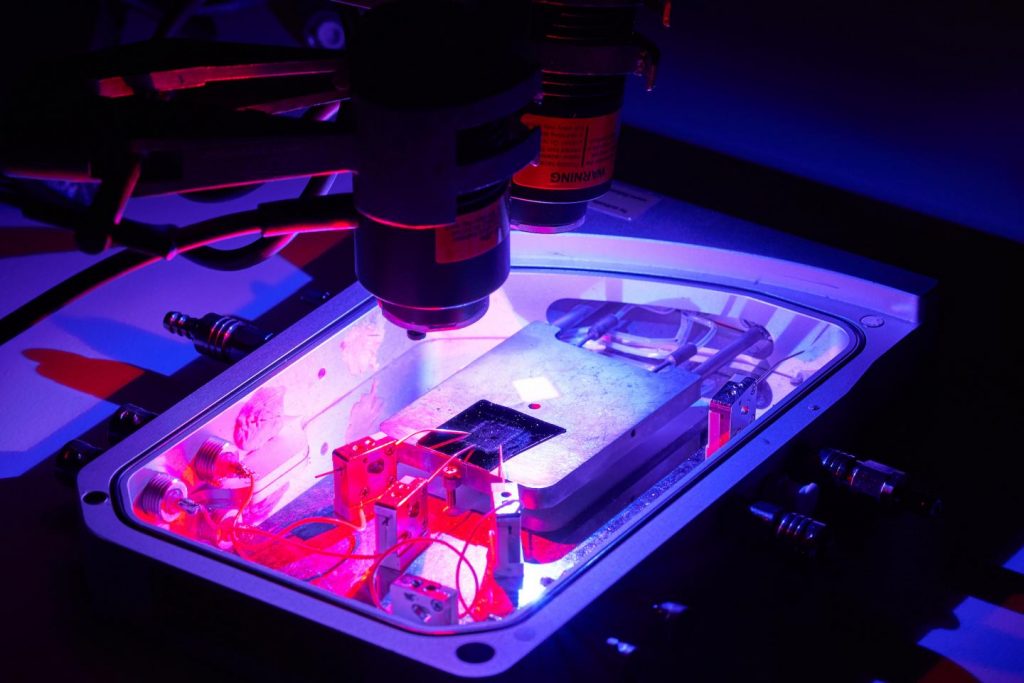

“Our group has extensive experience with biological and chemical electronic sensors,” Baraban continues. “So, we simulated the properties of neurons using the principles of biosensors and modified a classical field-effect transistor to create an artificial neurotransistor.” The advantage of such an architecture lies in the simultaneous storage and processing of information in a single component. In conventional transistor technology, they are separated, which slows processing time and hence ultimately also limits performance.

Silicon wafer + polymer = chip capable of learning

Modeling computers on the human brain is no new idea. Scientists made attempts to hook up nerve cells to electronics in Petri dishes decades ago. “But a wet computer chip that has to be fed all the time is of no use to anybody,” says Gianaurelio Cuniberti from TU Dresden. The Professor for Materials Science and Nanotechnology is one of the three brains behind the neurotransistor alongside Ronald Tetzlaff, Professor of Fundamentals of Electrical Engineering in Dresden, and Leon Chua [emphasis mine] from the University of California at Berkeley, who had already postulated similar components in the early 1970s.

Now, Cuniberti, Baraban and their team have been able to implement it: “We apply a viscous substance – called solgel – to a conventional silicon wafer with circuits. This polymer hardens and becomes a porous ceramic,” the materials science professor explains. “Ions move between the holes. They are heavier than electrons and slower to return to their position after excitation. This delay, called hysteresis, is what causes the storage effect.” As Cuniberti explains, this is a decisive factor in the functioning of the transistor. “The more an individual transistor is excited, the sooner it will open and let the current flow. This strengthens the connection. The system is learning.”

Cuniberti and his team are not focused on conventional issues, though. “Computers based on our chip would be less precise and tend to estimate mathematical computations rather than calculating them down to the last decimal,” the scientist explains. “But they would be more intelligent. For example, a robot with such processors would learn to walk or grasp; it would possess an optical system and learn to recognize connections. And all this without having to develop any software.” But these are not the only advantages of neuromorphic computers. Thanks to their plasticity, which is similar to that of the human brain, they can adapt to changing tasks during operation and, thus, solve problems for which they were not originally programmed.
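For readers who like to see a mechanism in code, here is a toy sketch of the hysteresis idea Cuniberti describes in the press release: slow ions that lag behind the input and make the transistor open "sooner" the more it is excited. This is not the model from the paper. The function name `run` and every parameter (`tau`, `gain`, `base_threshold`, `max_shift`) are hypothetical values chosen only for illustration.

```python
import math

def run(pulse_train, tau=20.0, gain=0.2, base_threshold=1.0, max_shift=0.5):
    """Toy hysteresis model (illustrative only, not the paper's model).

    ion_state stands in for how far the slow ions in the film have been
    displaced (0 = fully relaxed, 1 = saturated). It decays slowly between
    pulses and is pushed up by each pulse, and it lowers the turn-on
    threshold of the hypothetical transistor.
    """
    ion_state = 0.0
    thresholds = []
    for pulse in pulse_train:
        # Ions drift back toward rest, but slowly (time constant tau, in steps).
        ion_state *= math.exp(-1.0 / tau)
        if pulse:
            # Each excitation displaces more ions, saturating at 1.
            ion_state += gain * (1.0 - ion_state)
        # The more ions are displaced, the lower the effective threshold.
        thresholds.append(base_threshold * (1.0 - max_shift * ion_state))
    return thresholds

# Ten pulses back to back, ten pulses spread out, and ten pulses then a long rest.
dense = run([1] * 10)
sparse = run(([1] + [0] * 9) * 10)
rested = run([1] * 10 + [0] * 200)

print("threshold after dense pulses: ", round(dense[-1], 3))
print("lowest threshold, sparse case:", round(min(sparse), 3))
print("threshold after a long rest:  ", round(rested[-1], 3))
```

In this sketch, closely spaced pulses drive the threshold lower than the same number of widely spaced pulses, and the effect fades once the input stops. That is only a cartoon of the "storage effect" and "learning" described in the quotes above.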

I highlighted Dr. Leon Chua’s name as he was one of the first to conceptualize the notion of a memristor (memory resistor), which is what the press release seems to be referencing with the mention of artificial synapses. Dr. Chua very kindly answered a few questions for me about his work, which I published in an April 13, 2010 posting (scroll down about 40% of the way).