Talk of artificial brains (also known as brainlike computing or neuromorphic computing) usually turns to memory fairly quickly. This February 3, 2022 news item on ScienceDaily does too, although the focus is on how memory and forgetting affect the ability to learn,

When the human brain learns something new, it adapts. But when artificial intelligence learns something new, it tends to forget information it already learned.

As companies use more and more data to improve how AI recognizes images, learns languages and carries out other complex tasks, a paper publishing in Science this week shows a way that computer chips could dynamically rewire themselves to take in new data like the brain does, helping AI to keep learning over time.

“The brains of living beings can continuously learn throughout their lifespan. We have now created an artificial platform for machines to learn throughout their lifespan,” said Shriram Ramanathan, a professor in Purdue University’s [Indiana, US] School of Materials Engineering who specializes in discovering how materials could mimic the brain to improve computing.

Unlike the brain, which constantly forms new connections between neurons to enable learning, the circuits on a computer chip don’t change. A circuit that a machine has been using for years isn’t any different than the circuit that was originally built for the machine in a factory.

This is a problem for making AI more portable, such as for autonomous vehicles or robots in space that would have to make decisions on their own in isolated environments. If AI could be embedded directly into hardware rather than just running on software as AI typically does, these machines would be able to operate more efficiently.

A February 3, 2022 Purdue University news release (also on EurekAlert), which originated the news item, provides more technical detail about the work (Note: Links have been removed),

In this study, Ramanathan and his team built a new piece of hardware that can be reprogrammed on demand through electrical pulses. Ramanathan believes that this adaptability would allow the device to take on all of the functions that are necessary to build a brain-inspired computer.

“If we want to build a computer or a machine that is inspired by the brain, then correspondingly, we want to have the ability to continuously program, reprogram and change the chip,” Ramanathan said.

Toward building a brain in chip form

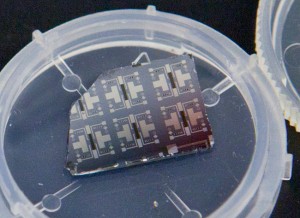

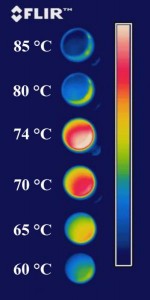

The hardware is a small, rectangular device made of a material called perovskite nickelate, which is very sensitive to hydrogen. Applying electrical pulses at different voltages allows the device to shuffle a concentration of hydrogen ions in a matter of nanoseconds, creating states that the researchers found could be mapped out to corresponding functions in the brain.

When the device has more hydrogen near its center, for example, it can act as a neuron, a single nerve cell. With less hydrogen at that location, the device serves as a synapse, a connection between neurons, which is what the brain uses to store memory in complex neural circuits.

Through simulations of the experimental data, the Purdue team’s collaborators at Santa Clara University and Portland State University showed that the internal physics of this device creates a dynamic structure for an artificial neural network that is able to more efficiently recognize electrocardiogram patterns and digits compared to static networks. This neural network uses “reservoir computing,” which explains how different parts of a brain communicate and transfer information.
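For readers unfamiliar with the term, here is a minimal software sketch of the general idea behind reservoir computing. It is not the researchers' simulation and it models none of the device physics; it is a generic textbook-style "echo state network" in plain Python. A fixed, randomly wired recurrent layer (the reservoir) is driven by an input signal, and only a simple linear readout is trained. Every name and number here (`make_reservoir`, `run_reservoir`, `train_readout`, the 20 units, the sine-wave task, the ridge value) is an assumption chosen for illustration.

```python
import math
import random

def make_reservoir(n_units, seed=0):
    """Build fixed random input and recurrent weights (these are never trained)."""
    rng = random.Random(seed)
    w_in = [rng.uniform(-1.0, 1.0) for _ in range(n_units)]
    w_res = []
    for _ in range(n_units):
        row = [rng.uniform(-1.0, 1.0) for _ in range(n_units)]
        norm = sum(abs(v) for v in row)
        # Keep each row's absolute sum below 1 so old inputs fade out over time
        # (a conservative stability choice made for this sketch).
        w_res.append([0.9 * v / norm for v in row])
    return w_in, w_res

def run_reservoir(inputs, w_in, w_res):
    """Drive the reservoir with a scalar input sequence and record every state."""
    n = len(w_in)
    state = [0.0] * n
    states = []
    for u in inputs:
        state = [
            math.tanh(w_in[i] * u + sum(w_res[i][j] * state[j] for j in range(n)))
            for i in range(n)
        ]
        states.append(state)
    return states

def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def train_readout(states, targets, ridge=1e-3):
    """Fit the linear readout with ridge regression (normal equations)."""
    n = len(states[0])
    xtx = [[sum(s[i] * s[j] for s in states) + (ridge if i == j else 0.0)
            for j in range(n)] for i in range(n)]
    xty = [sum(s[i] * t for s, t in zip(states, targets)) for i in range(n)]
    return solve(xtx, xty)

def mse(states, targets, w_out):
    """Mean squared error of the linear readout over the recorded states."""
    preds = [sum(w * s for w, s in zip(w_out, st)) for st in states]
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)

# Toy task (an assumption, not from the paper): predict the next sine sample.
signal = [math.sin(0.2 * t) for t in range(301)]
inputs, targets = signal[:-1], signal[1:]

w_in, w_res = make_reservoir(n_units=20)
states = run_reservoir(inputs, w_in, w_res)
w_out = train_readout(states, targets)

baseline_mse = mse(states, targets, [0.0] * 20)  # readout before any training
trained_mse = mse(states, targets, w_out)
print(f"untrained: {baseline_mse:.4f}, trained: {trained_mse:.4f}")
```

The point of the sketch is the division of labour: the reservoir's internal wiring stays fixed and only the readout weights are fitted. In this software version the reservoir is static, whereas the news release describes hardware whose internal structure can itself be reconfigured.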

Researchers from The Pennsylvania State University also demonstrated in this study that as new problems are presented, a dynamic network can “pick and choose” which circuits are the best fit for addressing those problems.

Since the team was able to build the device using standard semiconductor-compatible fabrication techniques and operate the device at room temperature, Ramanathan believes that this technique can be readily adopted by the semiconductor industry.

“We demonstrated that this device is very robust,” said Michael Park, a Purdue Ph.D. student in materials engineering. “After programming the device over a million cycles, the reconfiguration of all functions is remarkably reproducible.”

The researchers are working to demonstrate these concepts on large-scale test chips that would be used to build a brain-inspired computer.

Experiments at Purdue were conducted at the FLEX Lab and Birck Nanotechnology Center of Purdue’s Discovery Park. The team’s collaborators at Argonne National Laboratory, the University of Illinois, Brookhaven National Laboratory and the University of Georgia conducted measurements of the device’s properties.

Here’s a link to and a citation for the paper,

Reconfigurable perovskite nickelate electronics for artificial intelligence by Hai-Tian Zhang, Tae Joon Park, A. N. M. Nafiul Islam, Dat S. J. Tran, Sukriti Manna, Qi Wang, Sandip Mondal, Haoming Yu, Suvo Banik, Shaobo Cheng, Hua Zhou, Sampath Gamage, Sayantan Mahapatra, Yimei Zhu, Yohannes Abate, Nan Jiang, Subramanian K. R. S. Sankaranarayanan, Abhronil Sengupta, Christof Teuscher, Shriram Ramanathan. Science • 3 Feb 2022 • Vol 375, Issue 6580 • pp. 533-539 • DOI: 10.1126/science.abj7943

This paper is behind a paywall.