I have three news bits about legal issues that are arising as a consequence of emerging technologies.

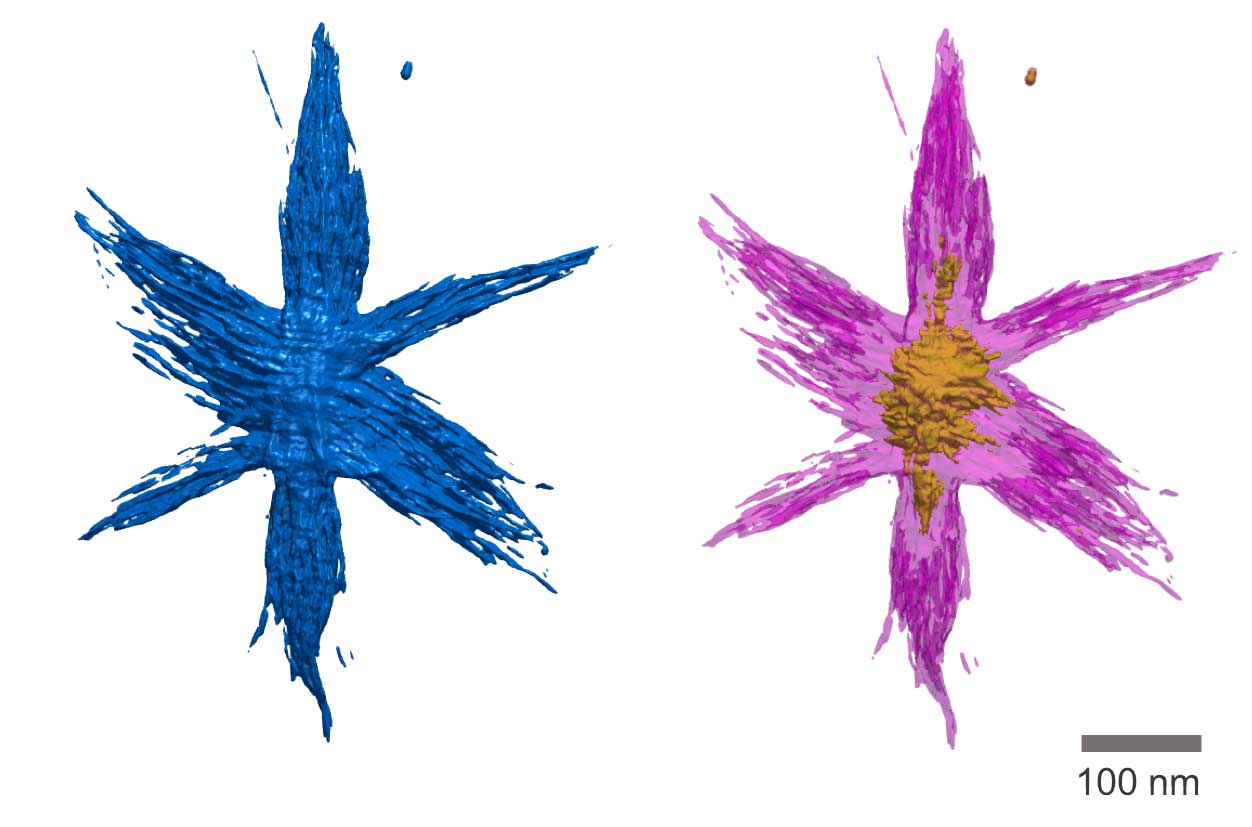

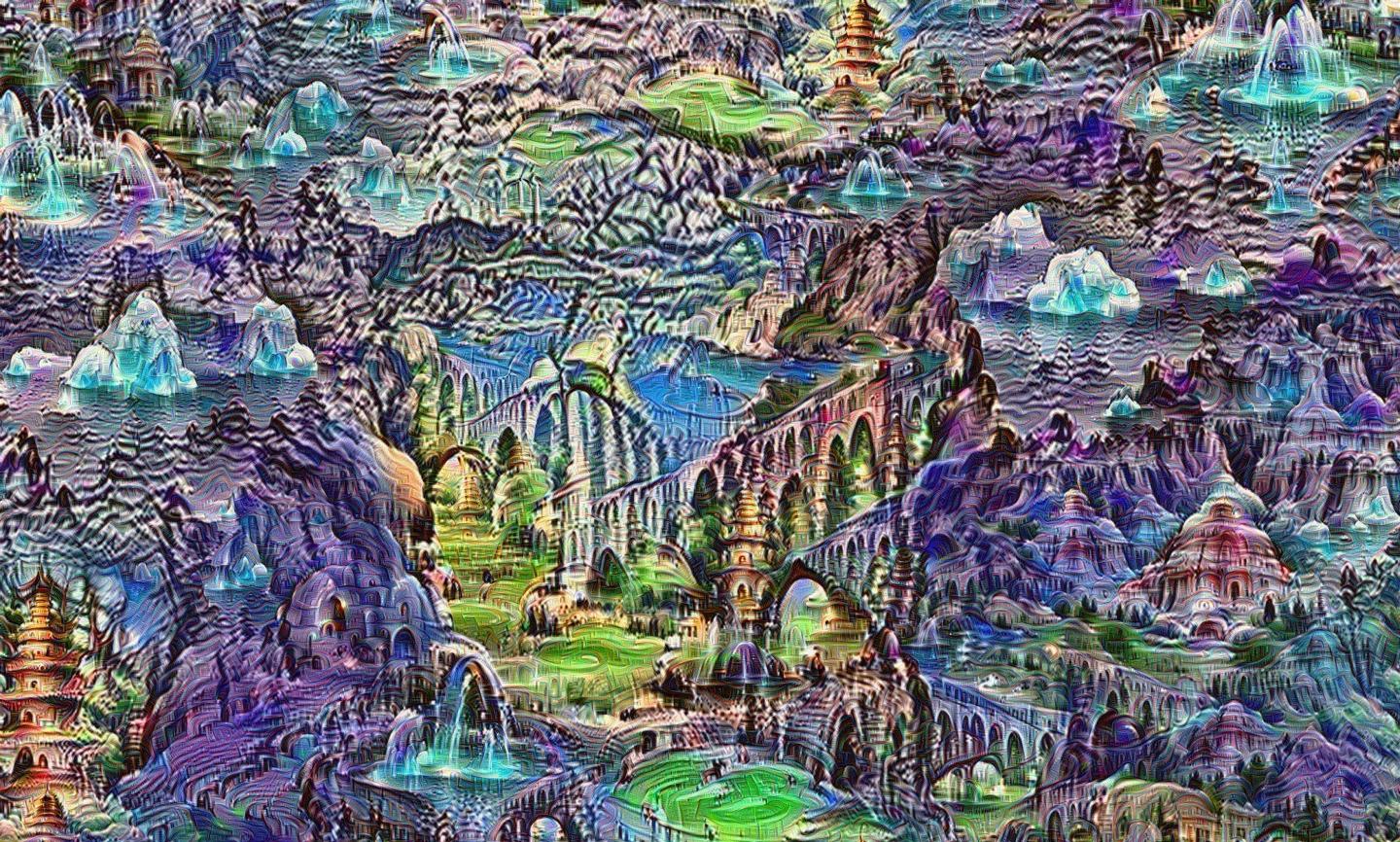

Deep neural networks, art, and copyright

Caption: The rise of automated art opens new creative avenues, coupled with new problems for copyright protection. Credit: Provided by: Alexander Mordvintsev, Christopher Olah and Mike Tyka

Presumably this artwork is a demonstration of automated art, although neither the news item nor the news release explains how it was made. An April 26, 2017 news item on ScienceDaily announces research into copyright and the latest in using neural networks to create art,

In 1968, sociologist Jean Baudrillard wrote on automatism that “contained within it is the dream of a dominated world […] that serves an inert and dreamy humanity.”

With the growing popularity of Deep Neural Networks (DNN’s), this dream is fast becoming a reality.

Dr. Jean-Marc Deltorn, researcher at the Centre d’études internationales de la propriété intellectuelle in Strasbourg, argues that we must remain a responsive and responsible force in this process of automation — not inert dominators. As he demonstrates in a recent Frontiers in Digital Humanities paper, the dream of automation demands a careful study of the legal problems linked to copyright.

An April 26, 2017 Frontiers (publishing) news release on EurekAlert, which originated the news item, describes the research in more detail,

For more than half a century, artists have looked to computational processes as a way of expanding their vision. DNN’s are the culmination of this cross-pollination: by learning to identify a complex number of patterns, they can generate new creations.

These systems are made up of complex algorithms modeled on the transmission of signals between neurons in the brain.

DNN creations rely in equal measure on human inputs and the non-human algorithmic networks that process them.

Inputs are fed into the system, which is layered. Each layer provides an opportunity for a more refined knowledge of the inputs (shape, color, lines). Neural networks compare actual outputs to expected ones, and correct the predictive error through repetition and optimization. They train their own pattern recognition, thereby optimizing their learning curve and producing increasingly accurate outputs.

The deeper the layers are, the higher the level of abstraction. The highest layers are able to identify the contents of a given input with reasonable accuracy, after extended periods of training.
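The compare-and-correct loop described in the news release can be sketched in a few lines of Python. This is only an illustration, not anything from Deltorn's paper or from DeepDream: it trains a single artificial "neuron" rather than a deep, layered network, and the function name `train`, the learning rate, and the toy data are all my own assumptions.

```python
import random

def train(samples, epochs=2000, lr=0.05, seed=0):
    """Fit actual = w * x + b to (x, expected) pairs by repeated correction."""
    rng = random.Random(seed)
    # Start from arbitrary guesses for the two adjustable parameters.
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    for _ in range(epochs):
        for x, expected in samples:
            actual = w * x + b          # what the model currently predicts
            error = actual - expected   # gap between actual and expected output
            # Nudge each parameter in the direction that shrinks the error.
            w -= lr * error * x
            b -= lr * error
    return w, b

# Toy data (hypothetical): the hidden rule is expected = 2 * x + 1.
samples = [(x / 10, 2 * (x / 10) + 1) for x in range(-10, 11)]
w, b = train(samples)
print(round(w, 2), round(b, 2))
```

After enough repetitions the two parameters settle close to the hidden rule. A deep network applies the same idea to many layers and far more parameters at once.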

Creation thus becomes increasingly automated through what Deltorn calls “the arcane traceries of deep architecture”. The results are sufficiently abstracted from their sources to produce original creations that have been exhibited in galleries, sold at auction and performed at concerts.

The originality of DNN’s is a combined product of technological automation on one hand, human inputs and decisions on the other.

DNN’s are gaining popularity. Various platforms (such as DeepDream) now allow internet users to generate their very own new creations. This popularization of the automation process calls for a comprehensive legal framework that ensures a creator’s economic and moral rights with regards to his work – copyright protection.

Form, originality and attribution are the three requirements for copyright. And while DNN creations satisfy the first of these three, the claim to originality and attribution will depend largely on a given country’s legislation and on the traceability of the human creator.

Legislation usually sets a low threshold to originality. As DNN creations could in theory be able to create an endless number of riffs on source materials, the uncurbed creation of original works could inflate the existing number of copyright protections.

Additionally, a small number of national copyright laws confers attribution to what UK legislation defines loosely as “the person by whom the arrangements necessary for the creation of the work are undertaken.” In the case of DNN’s, this could mean anybody from the programmer to the user of a DNN interface.

Combined with an overly supple take on originality, this view on attribution would further increase the number of copyrightable works.

The risk, in both cases, is that artists will be less willing to publish their own works, for fear of infringement of DNN copyright protections.

In order to promote creativity – one seminal aim of copyright protection – the issue must be limited to creations that manifest a personal voice “and not just the electric glint of a computational engine,” to quote Deltorn. A delicate act of discernment.

DNN’s promise new avenues of creative expression for artists – with potential caveats. Copyright protection – a “catalyst to creativity” – must be contained. Many of us gently bask in the glow of an increasingly automated form of technology. But if we want to safeguard the ineffable quality that defines much art, it might be a good idea to hone in more closely on the differences between the electric and the creative spark.

This research is and will be part of a broader Frontiers Research Topic collection of articles on Deep Learning and Digital Humanities.

Here’s a link to and a citation for the paper,

Deep Creations: Intellectual Property and the Automata by Jean-Marc Deltorn. Front. Digit. Humanit., 01 February 2017 | https://doi.org/10.3389/fdigh.2017.00003

This paper is open access.

Conference on governance of emerging technologies

I received an April 17, 2017 notice via email about this upcoming conference. Here’s more from the Fifth Annual Conference on Governance of Emerging Technologies: Law, Policy and Ethics webpage,

The Fifth Annual Conference on Governance of Emerging Technologies: Law, Policy and Ethics held at the new Beus Center for Law & Society in Phoenix, AZ

May 17-19, 2017!

Call for Abstracts – Now Closed

The conference will consist of plenary and session presentations and discussions on regulatory, governance, legal, policy, social and ethical aspects of emerging technologies, including (but not limited to) nanotechnology, synthetic biology, gene editing, biotechnology, genomics, personalized medicine, human enhancement technologies, telecommunications, information technologies, surveillance technologies, geoengineering, neuroscience, artificial intelligence, and robotics. The conference is premised on the belief that there is much to be learned and shared from and across the governance experience and proposals for these various emerging technologies.

Keynote Speakers:

Gillian Hadfield, Richard L. and Antoinette Schamoi Kirtland Professor of Law and Professor of Economics USC [University of Southern California] Gould School of Law

Shobita Parthasarathy, Associate Professor of Public Policy and Women’s Studies, Director, Science, Technology, and Public Policy Program University of Michigan

Stuart Russell, Professor at [University of California] Berkeley, is a computer scientist known for his contributions to artificial intelligence

Craig Shank, Vice President for Corporate Standards Group in Microsoft’s Corporate, External and Legal Affairs (CELA)

Plenary Panels:

Innovation – Responsible and/or Permissionless

Ellen-Marie Forsberg, Senior Researcher/Research Manager at Oslo and Akershus University College of Applied Sciences

Adam Thierer, Senior Research Fellow with the Technology Policy Program at the Mercatus Center at George Mason University

Wendell Wallach, Consultant, ethicist, and scholar at Yale University’s Interdisciplinary Center for Bioethics

Gene Drives, Trade and International Regulations

Greg Kaebnick, Director, Editorial Department; Editor, Hastings Center Report; Research Scholar, Hastings Center

Jennifer Kuzma, Goodnight-North Carolina GlaxoSmithKline Foundation Distinguished Professor in Social Sciences in the School of Public and International Affairs (SPIA) and co-director of the Genetic Engineering and Society (GES) Center at North Carolina State University

Andrew Maynard, Senior Sustainability Scholar, Julie Ann Wrigley Global Institute of Sustainability Director, Risk Innovation Lab, School for the Future of Innovation in Society Professor, School for the Future of Innovation in Society, Arizona State University

Gary Marchant, Regents’ Professor of Law, Professor of Law Faculty Director and Faculty Fellow, Center for Law, Science & Innovation, Arizona State University

Marc Saner, Inaugural Director of the Institute for Science, Society and Policy, and Associate Professor, University of Ottawa Department of Geography

Big Data

Anupam Chander, Martin Luther King, Jr. Professor of Law and Director, California International Law Center, UC Davis School of Law

Pilar Ossorio, Professor of Law and Bioethics, University of Wisconsin, School of Law and School of Medicine and Public Health; Morgridge Institute for Research, Ethics Scholar-in-Residence

George Poste, Chief Scientist, Complex Adaptive Systems Initiative (CASI) (http://www.casi.asu.edu/), Regents’ Professor and Del E. Webb Chair in Health Innovation, Arizona State University

Emily Shuckburgh, climate scientist and deputy head of the Polar Oceans Team at the British Antarctic Survey, University of Cambridge

Responsible Development of AI

Spring Berman, Ira A. Fulton Schools of Engineering, Arizona State University

John Havens, The IEEE [Institute of Electrical and Electronics Engineers] Global Initiative for Ethical Considerations in Artificial Intelligence and Autonomous Systems

Subbarao Kambhampati, Senior Sustainability Scientist, Julie Ann Wrigley Global Institute of Sustainability, Professor, School of Computing, Informatics and Decision Systems Engineering, Ira A. Fulton Schools of Engineering, Arizona State University

Wendell Wallach, Consultant, Ethicist, and Scholar at Yale University’s Interdisciplinary Center for Bioethics

Existential and Catastrophic Ricks [sic]

Tony Barrett, Co-Founder and Director of Research of the Global Catastrophic Risk Institute

Haydn Belfield, Academic Project Administrator, Centre for the Study of Existential Risk at the University of Cambridge

Margaret E. Kosal, Associate Director, Sam Nunn School of International Affairs, Georgia Institute of Technology

Catherine Rhodes, Academic Project Manager, Centre for the Study of Existential Risk at CSER, University of Cambridge

…

These are the panels of interest to me; there are others on the homepage.

Here’s some information from the Conference registration webpage,

Early Bird Registration – $50 off until May 1! Enter discount code: earlybirdGETs50

New: Group Discount – Register 2+ attendees together and receive an additional 20% off for all group members!

Conference registration fees are as follows:

- General (non-CLE) Registration: $150.00

- CLE Registration: $350.00

- *Current Student / ASU Law Alumni Registration: $50.00

- ^Cybersecurity sessions only (May 19): $100 CLE / $50 General / Free for students (registration info coming soon)

There you have it.

Neuro-techno future laws

I’m pretty sure this isn’t the first exploration of potential legal issues arising from research into neuroscience although it’s the first one I’ve stumbled across. From an April 25, 2017 news item on phys.org,

New human rights laws to prepare for advances in neurotechnology that put the ‘freedom of the mind’ at risk have been proposed today in the open access journal Life Sciences, Society and Policy.

The authors of the study suggest four new human rights laws could emerge in the near future to protect against exploitation and loss of privacy. The four laws are: the right to cognitive liberty, the right to mental privacy, the right to mental integrity and the right to psychological continuity.

An April 25, 2017 Biomed Central news release on EurekAlert, which originated the news item, describes the work in more detail,

Marcello Ienca, lead author and PhD student at the Institute for Biomedical Ethics at the University of Basel, said: “The mind is considered to be the last refuge of personal freedom and self-determination, but advances in neural engineering, brain imaging and neurotechnology put the freedom of the mind at risk. Our proposed laws would give people the right to refuse coercive and invasive neurotechnology, protect the privacy of data collected by neurotechnology, and protect the physical and psychological aspects of the mind from damage by the misuse of neurotechnology.”

Advances in neurotechnology, such as sophisticated brain imaging and the development of brain-computer interfaces, have led to these technologies moving away from a clinical setting and into the consumer domain. While these advances may be beneficial for individuals and society, there is a risk that the technology could be misused and create unprecedented threats to personal freedom.

Professor Roberto Andorno, co-author of the research, explained: “Brain imaging technology has already reached a point where there is discussion over its legitimacy in criminal court, for example as a tool for assessing criminal responsibility or even the risk of reoffending. Consumer companies are using brain imaging for ‘neuromarketing’, to understand consumer behaviour and elicit desired responses from customers. There are also tools such as ‘brain decoders’ which can turn brain imaging data into images, text or sound. All of these could pose a threat to personal freedom which we sought to address with the development of four new human rights laws.”

The authors explain that as neurotechnology improves and becomes commonplace, there is a risk that the technology could be hacked, allowing a third-party to ‘eavesdrop’ on someone’s mind. In the future, a brain-computer interface used to control consumer technology could put the user at risk of physical and psychological damage caused by a third-party attack on the technology. There are also ethical and legal concerns over the protection of data generated by these devices that need to be considered.

International human rights laws make no specific mention of neuroscience, although advances in biomedicine have become intertwined with laws, such as those concerning human genetic data. Similar to the historical trajectory of the genetic revolution, the authors state that the on-going neurorevolution will force a reconceptualization of human rights laws and even the creation of new ones.

Marcello Ienca added: “Science-fiction can teach us a lot about the potential threat of technology. Neurotechnology featured in famous stories has in some cases already become a reality, while others are inching ever closer, or exist as military and commercial prototypes. We need to be prepared to deal with the impact these technologies will have on our personal freedom.”

Here’s a link to and a citation for the paper,

Towards new human rights in the age of neuroscience and neurotechnology by Marcello Ienca and Roberto Andorno. Life Sciences, Society and Policy 2017 13:5 DOI: 10.1186/s40504-017-0050-1 Published: 26 April 2017

© The Author(s). 2017

This paper is open access.

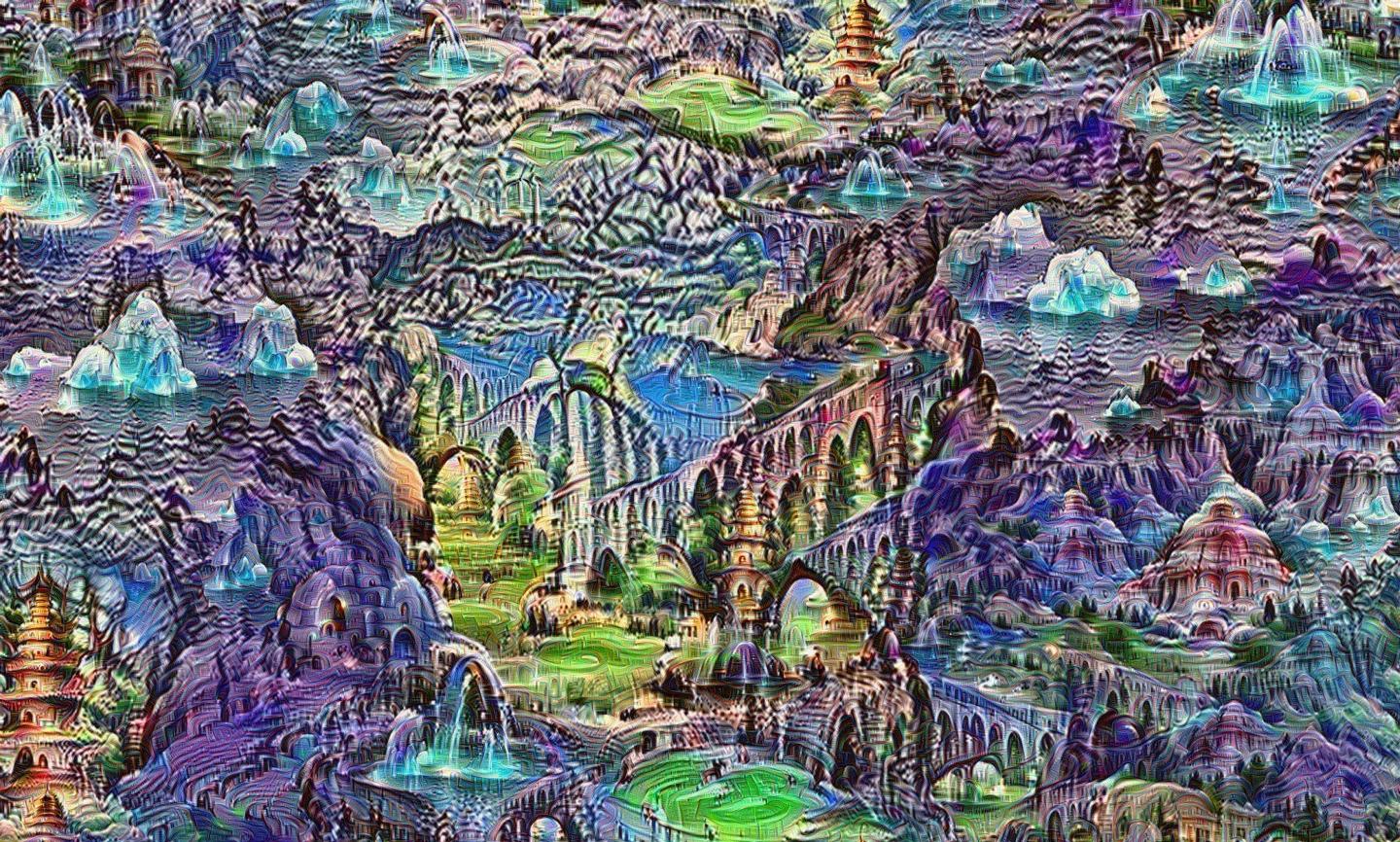

Image of a memristor chip Similarly, Lu’s electronic system is designed to detect the patterns very efficiently—and to use as few features as possible to describe the original input.