Agbaje Lateef’s (Professor of Microbiology and Head of the Nanotechnology Research Group (NANO+) at Ladoke Akintola University of Technology) April 20, 2022 essay on nanotechnology in Nigeria for The Conversation offers an overview and a plea (Note: Links have been removed),

…

Egypt, South Africa, Tunisia, Nigeria and Algeria lead the field in Africa. Since 2006, South Africa has been developing scientists, providing infrastructure, establishing centres of excellence, developing national policy and setting regulatory standards for nanotechnology. Companies such as Mintek, Nano South Africa, SabiNano and Denel Dynamics are applying the science.

In contrast, Nigeria’s nanotechnology journey, which started with a national initiative in 2006, has been slow. It has been dogged by uncertainties, poor funding and lack of proper coordination. Still, scientists in Nigeria have continued to place the country on the map through publications.

In addition, research clusters at the University of Nigeria, Nsukka, Ladoke Akintola University of Technology and others have organised conferences. Our research group also founded an open access journal, Nano Plus: Science and Technology of Nanomaterials.

…

To get an idea of how well Nigeria was performing in nanotechnology research and development, we turned to SCOPUS, an academic database.

Our analysis shows that research in nanotechnology takes place in 71 Nigerian institutions in collaboration with 58 countries. South Africa, Malaysia, India, the US and China are the main collaborators. Nigeria ranked fourth in research articles published from 2010 to 2020 after Egypt, South Africa and Tunisia.

Five institutions contributed 43.88% of the nation’s articles in this period. They were the University of Nigeria, Nsukka; Covenant University, Ota; Ladoke Akintola University of Technology, Ogbomoso; University of Ilorin; and University of Lagos.

The number of articles published by Nigerian researchers in the same decade was 645. Annual output grew from five articles in 2010 to 137 in the first half of 2020. South Africa published 2,597 and Egypt 5,441 from 2010 to 2020. The global total was 414,526 articles.
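To put these counts in proportion, here is a short Python sketch that expresses each country's 2010 to 2020 total as a percentage of the global figure. It uses only the numbers quoted in the essay. The function name `share_of_global` is my own illustrative choice, and the result is simple arithmetic on the published counts, not part of Lateef's analysis.

```python
# Article counts for 2010-2020 as quoted in the essay (SCOPUS search).
COUNTS = {"Egypt": 5_441, "South Africa": 2_597, "Nigeria": 645}
GLOBAL_TOTAL = 414_526


def share_of_global(n: int, total: int = GLOBAL_TOTAL) -> float:
    """Return n as a percentage of the global article total."""
    return 100 * n / total


for country, n in COUNTS.items():
    print(f"{country}: {share_of_global(n):.2f}% of global output")
# Egypt: 1.31% of global output
# South Africa: 0.63% of global output
# Nigeria: 0.16% of global output
```

By this measure Egypt's output is roughly eight times Nigeria's, even though Nigeria has the larger population.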

The figures show steady growth in Nigeria’s publications. But the performance is low in view of the fact that the country has the most universities in Africa.

The research performance is also low in relation to population and economy size. Nigeria produced 1.58 articles per 2 million people and 1.09 articles per US$3 billion of GDP in 2019. South Africa recorded 14.58 articles per 2 million people and 3.65 per US$3 billion. Egypt published 18.51 per 2 million people and 9.20 per US$3 billion in the same period.
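The per-population and per-GDP rates look like simple normalizations: divide the article count by population (or GDP) and scale to the essay's unit of 2 million people (or US$3 billion). The essay doesn't spell out its method, so this is a minimal Python sketch that assumes that approach. The function names and the input values are hypothetical round numbers chosen for illustration; they are not Nigeria's actual 2019 figures.

```python
def per_people(articles: float, population: float, per: float = 2_000_000) -> float:
    """Articles per `per` people (the essay's unit is 2 million people)."""
    return articles * per / population


def per_gdp(articles: float, gdp_usd: float, per: float = 3e9) -> float:
    """Articles per `per` US dollars of GDP (the essay's unit is US$3 billion)."""
    return articles * per / gdp_usd


# Hypothetical inputs for illustration only; NOT data from the essay.
articles = 150
population = 200_000_000
gdp_usd = 450e9

print(per_people(articles, population))  # 1.5
print(per_gdp(articles, gdp_usd))        # 1.0
```

Normalizing this way is what lets a country of about 200 million be compared with one of about 60 million, which is the point the essay is making about Nigeria, South Africa and Egypt.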

There is no nanotechnology patent of Nigerian origin in the US patents office. Standards don’t exist for nano-based products. South Africa had 23 patents in five years, from 2016 to 2020.

Nigerian nanotechnology research is limited by a lack of sophisticated instruments for analysis. It is impossible to conduct meaningful research locally without foreign collaboration on instrumentation. The absence of national policy on nanotechnology and of dedicated funds also hinders research.

…

In February 2018, Nigeria’s science and technology minister unveiled a national steering committee on nanotechnology policy. But the policy is yet to be approved by the federal government. In September 2021, I presented a memorandum to the national council on science, technology and innovation to stimulate national discourse on nanotechnology.

…

Given that this essay appeared more than six months after Professor Lateef presented his memorandum to the national council, I’m assuming no action had been taken as of April 2022.

A June 2022 addition to the Nigerian nanotechnology story

Agbaje Lateef has written a June 8, 2022 essay for The Conversation about nanotechnology and the Nigerian textile industry (Note: Links have been removed),

Nigeria’s cotton production has fallen steeply in recent years. It once supported the largest textile industry in Africa. The fall is due to weak demand for cotton and to poor yields resulting from planting low-quality cottonseeds. For these reasons, farmers switched from cotton to other crops.

Nigeria’s cotton output fell from 602,400 tonnes in 2010 to 51,000 tonnes in 2020. In the 1970s and early 1980s, the country’s textile industry had 180 textile mills employing over 450,000 people, supported by about 600,000 cotton farmers. By 2019, there were 25 textile mills and 25,000 workers.
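The scale of the collapse is easier to see as percentages. This short Python sketch applies a plain percentage-decline calculation to the figures quoted above. The helper name `percent_decline` is my own. Because the essay says "over 450,000" workers, the computed drop in employment is a lower bound.

```python
def percent_decline(before: float, after: float) -> float:
    """Percentage fall from `before` to `after`."""
    return 100 * (before - after) / before


# (before, after) pairs as quoted in the essay.
DECLINES = {
    "cotton output, tonnes (2010 to 2020)": (602_400, 51_000),
    "textile mills (1970s/early 1980s to 2019)": (180, 25),
    "textile workers (1970s/early 1980s to 2019)": (450_000, 25_000),
}

for label, (before, after) in DECLINES.items():
    print(f"{label}: {percent_decline(before, after):.1f}% decline")
# cotton output, tonnes (2010 to 2020): 91.5% decline
# textile mills (1970s/early 1980s to 2019): 86.1% decline
# textile workers (1970s/early 1980s to 2019): 94.4% decline
```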

…

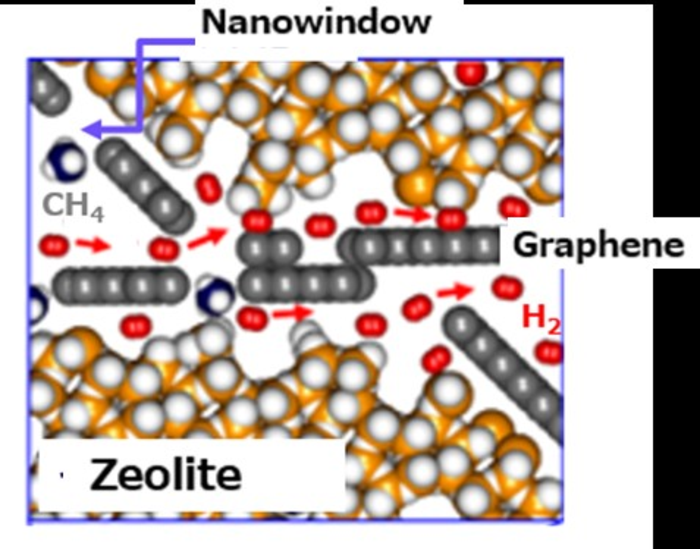

Nowadays, textiles’ properties can be greatly improved through nanotechnology – the use of extremely small materials with special properties. Nanomaterials like graphene and silver nanoparticles make textiles stronger, more durable, and resistant to germs, radiation, water and fire.

Adding nanomaterials to textiles produces nanotextiles. These are often “smart” because they respond to the external environment in different ways when combined with electronics. They can be used to harvest and store energy, to release drugs, and as sensors in different applications.

Nanotextiles are increasingly used in defence and healthcare. For hospitals, they are used to produce bandages, curtains, uniforms and bedsheets with the ability to kill pathogens. The market value of nanotextiles was US$5.1 billion in 2019 and could reach US$14.8 billion in 2024.
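The two market figures imply a compound annual growth rate (CAGR). The essay gives only the endpoints, and the 2024 value is a projection, so this minimal Python sketch assumes smooth compounding over the five years from 2019 to 2024. The function name `cagr` is my own.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


# Nanotextile market value in US$ billions, as quoted in the essay.
rate = cagr(5.1, 14.8, 2024 - 2019)
print(f"Implied annual growth: {rate:.1%}")  # Implied annual growth: 23.7%
```

A CAGR summarizes the projection as a single steady rate; it says nothing about how growth might vary from year to year.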

At the moment, Nigeria is not benefiting from nanotextiles’ economic potential as it produces none. With over 216 million people, the country should be able to support its textile industry. It could also explore trading opportunities in the African Continental Free Trade Agreement to market innovative nanotextiles.

…

Lateef goes on to describe his research (from his June 8, 2022 essay),

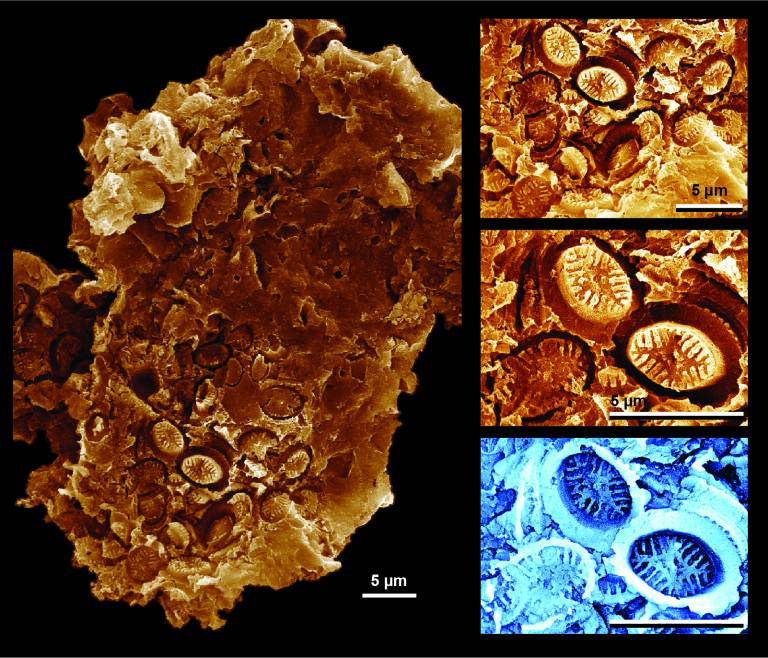

Our nanotechnology research group has made the first attempt to produce nanotextiles using cotton and silk in Nigeria. We used silver and silver-titanium oxide nanoparticles produced by locust beans’ wastewater. Locust bean is a multipurpose tree legume found in Nigeria and some other parts of Africa. The seeds, the fruit pulp and the leaves are used to prepare foods and drinks.

The seeds are used to produce a local condiment called “iru” in southwest Nigeria. The processing of iru generates a large quantity of wastewater that is not useful. We used the wastewater to reduce some compounds to produce silver and silver-titanium nanoparticles in the laboratory.

Fabrics were dipped into nanoparticle solutions to make nanotextiles. Thereafter, the nanotextiles were exposed to known bacteria and fungi. The growth of the organisms was monitored to determine the ability of the nanotextiles to kill them.

The nanotextiles prevented growth of several pathogenic bacteria and black mould, making them useful as antimicrobial materials. They were active against germs even after being washed five times with detergent. Textiles without nanoparticles did not prevent the growth of microorganisms.

These studies showed that nanotextiles can kill harmful microorganisms including those that are resistant to drugs. Materials such as air filters, sportswear, nose masks, and healthcare fabrics produced from nanotextiles possess excellent antimicrobial attributes. Nanotextiles can also promote wound healing and offer resistance to radiation, water and fire.

Our studies established the value that nanotechnology can add to textiles through hygiene and disease prevention. Using nanotextiles will promote good health and well-being for sustainable development. They will help to reduce infections that are caused by germs.

Despite these benefits, nanomaterials in textiles can have some unwanted effects on the environment, health and safety. Some nanomaterials can harm human health, causing irritation when they come in contact with skin or are inhaled. Also, their release to the environment in large quantities can harm lower organisms and reduce growth of plants. We recommend that the impacts of nanotextiles should be evaluated case by case before use.

…

Dear Professor Lateef, I hope you see some action on your suggestions soon, and thank you for the update. Also, good luck with your nanotextiles.