I love science stories about the inspirational qualities of spiderwebs. A November 26, 2021 news item on phys.org describes how spiderwebs have inspired advances in sensors and, potentially, quantum computing,

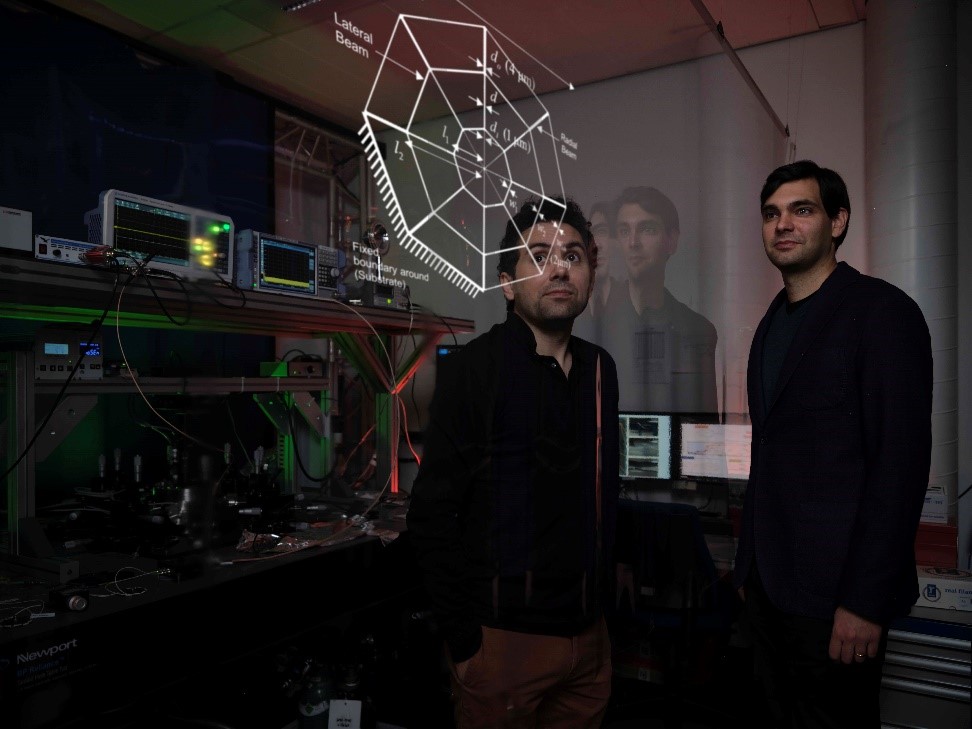

A team of researchers from TU Delft [Delft University of Technology; Netherlands] managed to design one of the world’s most precise microchip sensors. The device can function at room temperature—a ‘holy grail’ for quantum technologies and sensing. Combining nanotechnology and machine learning inspired by nature’s spiderwebs, they were able to make a nanomechanical sensor vibrate in extreme isolation from everyday noise. This breakthrough, published in the Advanced Materials Rising Stars Issue, has implications for the study of gravity and dark matter as well as the fields of quantum internet, navigation and sensing.

A November 24, 2021 TU Delft press release (also on EurekAlert but published on November 23, 2021), which originated the news item, describes the research in more detail,

One of the biggest challenges for studying vibrating objects at the smallest scale, like those used in sensors or quantum hardware, is how to keep ambient thermal noise from interacting with their fragile states. Quantum hardware for example is usually kept at near absolute zero (−273.15°C) temperatures, with refrigerators costing half a million euros apiece. Researchers from TU Delft created a web-shaped microchip sensor which resonates extremely well in isolation from room temperature noise. Among other applications, their discovery will make building quantum devices much more affordable.

Hitchhiking on evolution

Richard Norte and Miguel Bessa, who led the research, were looking for new ways to combine nanotechnology and machine learning. How did they come up with the idea to use spiderwebs as a model? Richard Norte: “I’ve been doing this work already for a decade when during lockdown, I noticed a lot of spiderwebs on my terrace. I realised spiderwebs are really good vibration detectors, in that they want to measure vibrations inside the web to find their prey, but not outside of it, like wind through a tree. So why not hitchhike on millions of years of evolution and use a spiderweb as an initial model for an ultra-sensitive device?”

Since the team did not know anything about spiderwebs’ complexities, they let machine learning guide the discovery process. Miguel Bessa: “We knew that the experiments and simulations were costly and time-consuming, so with my group we decided to use an algorithm called Bayesian optimization, to find a good design using few attempts.” Dongil Shin, co-first author in this work, then implemented the computer model and applied the machine learning algorithm to find the new device design.
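For readers curious about what "finding a good design using few attempts" can look like in practice, here is a minimal sketch of Bayesian optimization in plain Python. It is not the TU Delft team's code: the one-parameter objective `simulated_quality` is a hypothetical stand-in for a costly simulation, and the kernel length scale, starting designs and candidate grid are all assumptions chosen for illustration. The loop fits a Gaussian-process surrogate to the designs tried so far, then picks the next design by expected improvement.

```python
import math

def rbf(a, b, length=0.15):
    """Squared-exponential kernel; the length scale is an assumed value."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)

def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [r] for row, r in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x

def gp_posterior(xs, ys, x_new, jitter=1e-6):
    """Posterior mean and standard deviation of a zero-mean Gaussian process."""
    k = [[rbf(a, b) + (jitter if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    k_star = [rbf(a, x_new) for a in xs]
    mean = sum(ks * w for ks, w in zip(k_star, solve(k, ys)))
    var = rbf(x_new, x_new) - sum(ks * v for ks, v in zip(k_star, solve(k, k_star)))
    return mean, math.sqrt(max(var, 1e-12))

def expected_improvement(mean, std, best):
    """Expected gain over the best value seen so far (maximization)."""
    z = (mean - best) / std
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return (mean - best) * cdf + std * pdf

def simulated_quality(x):
    """Hypothetical costly simulation: a single peak at x = 0.7."""
    return math.exp(-((x - 0.7) ** 2) / 0.02)

def bayesian_optimize(objective, n_iter=10, grid_size=200):
    """Try a few designs on a grid in [0, 1], guided by the surrogate model."""
    tried = [20, 100, 180]  # assumed starting designs: x = 0.1, 0.5, 0.9
    xs = [i / grid_size for i in tried]
    ys = [objective(x) for x in xs]
    for _ in range(n_iter):
        best = max(ys)
        untried = [i for i in range(grid_size + 1) if i not in tried]
        next_i = max(
            untried,
            key=lambda i: expected_improvement(*gp_posterior(xs, ys, i / grid_size), best),
        )
        tried.append(next_i)
        xs.append(next_i / grid_size)
        ys.append(objective(xs[-1]))
    top = max(range(len(ys)), key=ys.__getitem__)
    return xs[top], ys[top], len(xs)

best_x, best_y, n_evaluations = bayesian_optimize(simulated_quality)
print(f"best design x={best_x:.3f}, quality={best_y:.3f}, evaluations={n_evaluations}")
```

The point of the sketch is the budget: the toy objective is evaluated only 13 times, whereas an exhaustive sweep of the same grid would take 201 evaluations.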

Microchip sensor based on spiderwebs

To the researchers’ surprise, the algorithm proposed a relatively simple spiderweb out of 150 different spiderweb designs, which consists of only six strings put together in a deceivingly simple way. Bessa: “Dongil’s computer simulations showed that this device could work at room temperature, in which atoms vibrate a lot, but still have an incredibly low amount of energy leaking in from the environment – a higher Quality factor in other words. With machine learning and optimization we managed to adapt Richard’s spider web concept towards this much better quality factor.”

Based on this new design, co-first author Andrea Cupertino built a microchip sensor with an ultra-thin, nanometre-thick film of ceramic material called Silicon Nitride. They tested the model by forcefully vibrating the microchip ‘web’ and measuring the time it takes for the vibrations to stop. The result was spectacular: a record-breaking isolated vibration at room temperature. Norte: “We found almost no energy loss outside of our microchip web: the vibrations move in a circle on the inside and don’t touch the outside. This is somewhat like giving someone a single push on a swing, and having them swing on for nearly a century without stopping.”
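The "time it takes for the vibrations to stop" translates into a quality factor through a standard relation for a lightly damped resonator: if the vibration amplitude decays as exp(-t/τ), then Q = π·f·τ, where f is the resonance frequency. The short Python sketch below fits τ from a ring-down trace and converts it to Q. The frequency and decay time are hypothetical numbers picked for illustration, not values from the paper.

```python
import math

def amplitude_decay_time(times_s, amplitudes):
    """Fit ln(amplitude) against time by least squares; return tau = -1/slope."""
    logs = [math.log(a) for a in amplitudes]
    n = len(times_s)
    mean_t = sum(times_s) / n
    mean_l = sum(logs) / n
    covariance = sum((t - mean_t) * (l - mean_l) for t, l in zip(times_s, logs))
    variance = sum((t - mean_t) ** 2 for t in times_s)
    return -variance / covariance

def quality_factor(frequency_hz, decay_time_s):
    """Q = pi * f * tau, with tau the 1/e decay time of the amplitude."""
    return math.pi * frequency_hz * decay_time_s

# Hypothetical ring-down: a 100 kHz resonator whose amplitude falls to 1/e
# of its starting value after 1000 seconds (illustrative numbers only).
resonance_hz = 100e3
true_tau_s = 1000.0
sample_times = [0.0, 250.0, 500.0, 750.0, 1000.0]
sample_amplitudes = [math.exp(-t / true_tau_s) for t in sample_times]

fitted_tau_s = amplitude_decay_time(sample_times, sample_amplitudes)
q = quality_factor(resonance_hz, fitted_tau_s)
print(f"fitted decay time: {fitted_tau_s:.1f} s, quality factor: {q:.3e}")
```

The longer the vibration rings on at a given frequency, the higher the quality factor, which is why the swing analogy in the quote is a fair picture of what is being measured.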

Implications for fundamental and applied sciences

With their spiderweb-based sensor, the researchers show how this interdisciplinary strategy opens a path to new breakthroughs in science, by combining bio-inspired designs, machine learning and nanotechnology. This novel paradigm has interesting implications for quantum internet, sensing, microchip technologies and fundamental physics: exploring ultra-small forces for example, like gravity or dark matter which are notoriously difficult to measure. According to the researchers, the discovery would not have been possible without the university’s Cohesion grant, which led to this collaboration between nanotechnology and machine learning.

Here’s a link to and a citation for the paper,

Spiderweb Nanomechanical Resonators via Bayesian Optimization: Inspired by Nature and Guided by Machine Learning by Dongil Shin, Andrea Cupertino, Matthijs H. J. de Jong, Peter G. Steeneken, Miguel A. Bessa, Richard A. Norte. Advanced Materials Volume 34, Issue 3 January 20, 2022 2106248 DOI: https://doi.org/10.1002/adma.202106248 First published (online): 25 October 2021

This paper is open access.

If spiderwebs can be sensors, can they also think?

It’s called ‘extended cognition’ or ‘extended mind thesis’ (Wikipedia entry) and the theory holds that the mind is not solely in the brain or even in the body. Predictably, the theory has both its supporters and critics as noted in Joshua Sokol’s article “The Thoughts of a Spiderweb” originally published on May 22, 2017 in Quanta Magazine (Note: Links have been removed),

Millions of years ago, a few spiders abandoned the kind of round webs that the word “spiderweb” calls to mind and started to focus on a new strategy. Before, they would wait for prey to become ensnared in their webs and then walk out to retrieve it. Then they began building horizontal nets to use as a fishing platform. Now their modern descendants, the cobweb spiders, dangle sticky threads below, wait until insects walk by and get snagged, and reel their unlucky victims in.

In 2008, the researcher Hilton Japyassú prompted 12 species of orb spiders collected from all over Brazil to go through this transition again. He waited until the spiders wove an ordinary web. Then he snipped its threads so that the silk drooped to where crickets wandered below. When a cricket got hooked, not all the orb spiders could fully pull it up, as a cobweb spider does. But some could, and all at least began to reel it in with their two front legs.

Their ability to recapitulate the ancient spiders’ innovation got Japyassú, a biologist at the Federal University of Bahia in Brazil, thinking. When the spider was confronted with a problem to solve that it might not have seen before, how did it figure out what to do? “Where is this information?” he said. “Where is it? Is it in her head, or does this information emerge during the interaction with the altered web?”

In February [2017], Japyassú and Kevin Laland, an evolutionary biologist at the University of Saint Andrews, proposed a bold answer to the question. They argued in a review paper, published in the journal Animal Cognition, that a spider’s web is at least an adjustable part of its sensory apparatus, and at most an extension of the spider’s cognitive system.

This would make the web a model example of extended cognition, an idea first proposed by the philosophers Andy Clark and David Chalmers in 1998 to apply to human thought. In accounts of extended cognition, processes like checking a grocery list or rearranging Scrabble tiles in a tray are close enough to memory-retrieval or problem-solving tasks that happen entirely inside the brain that proponents argue they are actually part of a single, larger, “extended” mind.

Among philosophers of mind, that idea has racked up citations, including supporters and critics. And by its very design, Japyassú’s paper, which aims to export extended cognition as a testable idea to the field of animal behavior, is already stirring up antibodies among scientists. …

It seems there is no definitive answer to the question of whether there is an ‘extended mind’, but it’s an intriguing question made (in my opinion) even more so by the spiderweb-inspired sensors from TU Delft.