I first stumbled onto memristors and the possibility of brain-like computing sometime in 2008, around the time that R. Stanley Williams and his team at HP Labs first published research linking Dr. Leon Chua’s memristor theory to their attempts to shrink computer chips. In the almost 10 years since, scientists have worked hard to use memristors in neuromorphic (brain-like) engineering and computing.

A January 22, 2018 news item on phys.org describes the latest work,

When it comes to processing power, the human brain just can’t be beat.

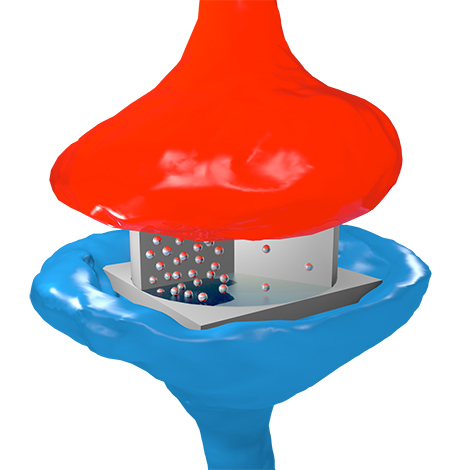

Packed within the squishy, football-sized organ are somewhere around 100 billion neurons. At any given moment, a single neuron can relay instructions to thousands of other neurons via synapses—the spaces between neurons, across which neurotransmitters are exchanged. There are more than 100 trillion synapses that mediate neuron signaling in the brain, strengthening some connections while pruning others, in a process that enables the brain to recognize patterns, remember facts, and carry out other learning tasks, at lightning speeds.

Researchers in the emerging field of “neuromorphic computing” have attempted to design computer chips that work like the human brain. Instead of carrying out computations based on binary, on/off signaling, like digital chips do today, the elements of a “brain on a chip” would work in an analog fashion, exchanging a gradient of signals, or “weights,” much like neurons that activate in various ways depending on the type and number of ions that flow across a synapse.

In this way, small neuromorphic chips could, like the brain, efficiently process millions of streams of parallel computations that are currently only possible with large banks of supercomputers. But one significant hangup on the way to such portable artificial intelligence has been the neural synapse, which has been particularly tricky to reproduce in hardware.

Now engineers at MIT [Massachusetts Institute of Technology] have designed an artificial synapse in such a way that they can precisely control the strength of an electric current flowing across it, similar to the way ions flow between neurons. The team has built a small chip with artificial synapses, made from silicon germanium. In simulations, the researchers found that the chip and its synapses could be used to recognize samples of handwriting, with 95 percent accuracy.

A January 22, 2018 MIT news release by Jennifer Chu (also on EurekAlert), which originated the news item, provides more detail about the research,

The design, published today [January 22, 2018] in the journal Nature Materials, is a major step toward building portable, low-power neuromorphic chips for use in pattern recognition and other learning tasks.

The research was led by Jeehwan Kim, the Class of 1947 Career Development Assistant Professor in the departments of Mechanical Engineering and Materials Science and Engineering, and a principal investigator in MIT’s Research Laboratory of Electronics and Microsystems Technology Laboratories. His co-authors are Shinhyun Choi (first author), Scott Tan (co-first author), Zefan Li, Yunjo Kim, Chanyeol Choi, and Hanwool Yeon of MIT, along with Pai-Yu Chen and Shimeng Yu of Arizona State University.

Too many paths

Most neuromorphic chip designs attempt to emulate the synaptic connection between neurons using two conductive layers separated by a “switching medium,” or synapse-like space. When a voltage is applied, ions should move in the switching medium to create conductive filaments, similarly to how the “weight” of a synapse changes.

But it’s been difficult to control the flow of ions in existing designs. Kim says that’s because most switching mediums, made of amorphous materials, have unlimited possible paths through which ions can travel — a bit like Pachinko, a mechanical arcade game that funnels small steel balls down through a series of pins and levers, which act to either divert or direct the balls out of the machine.

Like Pachinko, existing switching mediums contain multiple paths that make it difficult to predict where ions will make it through. Kim says that can create unwanted nonuniformity in a synapse’s performance.

“Once you apply some voltage to represent some data with your artificial neuron, you have to erase and be able to write it again in the exact same way,” Kim says. “But in an amorphous solid, when you write again, the ions go in different directions because there are lots of defects. This stream is changing, and it’s hard to control. That’s the biggest problem — nonuniformity of the artificial synapse.”

A perfect mismatch

Instead of using amorphous materials as an artificial synapse, Kim and his colleagues looked to single-crystalline silicon, a defect-free conducting material made from atoms arranged in a continuously ordered alignment. The team sought to create a precise, one-dimensional line defect, or dislocation, through the silicon, through which ions could predictably flow.

To do so, the researchers started with a wafer of silicon, resembling, at microscopic resolution, a chicken-wire pattern. They then grew a similar pattern of silicon germanium — a material also used commonly in transistors — on top of the silicon wafer. Silicon germanium’s lattice is slightly larger than that of silicon, and Kim found that together, the two perfectly mismatched materials can form a funnel-like dislocation, creating a single path through which ions can flow.

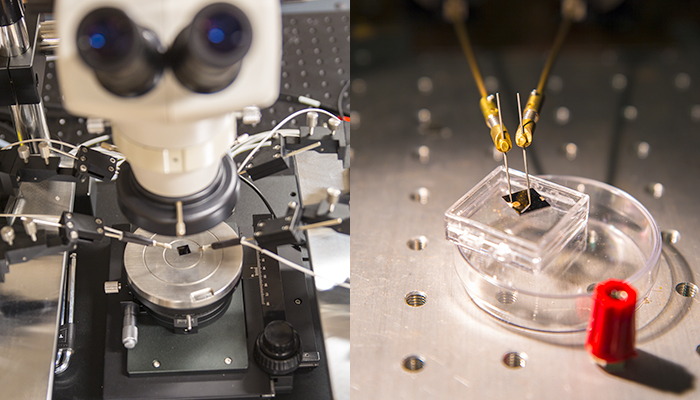

The researchers fabricated a neuromorphic chip consisting of artificial synapses made from silicon germanium, each synapse measuring about 25 nanometers across. They applied voltage to each synapse and found that all synapses exhibited more or less the same current, or flow of ions, with about a 4 percent variation between synapses — a much more uniform performance compared with synapses made from amorphous material.

They also tested a single synapse over multiple trials, applying the same voltage over 700 cycles, and found the synapse exhibited the same current, with just 1 percent variation from cycle to cycle.
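As an aside, the two figures above (device-to-device and cycle-to-cycle variation) can be illustrated with a small calculation. The news release does not say how "variation" was defined, so this sketch assumes it means the standard deviation expressed as a percentage of the mean. The function name and the current readings are made up for illustration and are not data from the paper.

```python
import statistics


def percent_variation(currents):
    """Standard deviation as a percentage of the mean (an assumed definition)."""
    mean = statistics.fmean(currents)
    return 100.0 * statistics.pstdev(currents) / mean


# Hypothetical readings in arbitrary units, NOT measurements from the paper.
# Same voltage applied to five different synapses:
device_currents = [1.00, 1.04, 0.96, 1.05, 0.95]
# Same voltage applied to one synapse over five cycles:
cycle_currents = [1.00, 1.01, 0.99, 1.01, 0.99]

device_variation = percent_variation(device_currents)
cycle_variation = percent_variation(cycle_currents)
print(f"device-to-device: {device_variation:.1f}%")
print(f"cycle-to-cycle:   {cycle_variation:.1f}%")
```

With these invented numbers the device-to-device figure comes out at about 4 percent and the cycle-to-cycle figure at just under 1 percent, which is the scale of uniformity the release describes.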

“This is the most uniform device we could achieve, which is the key to demonstrating artificial neural networks,” Kim says.

Writing, recognized

As a final test, Kim’s team explored how its device would perform if it were to carry out actual learning tasks — specifically, recognizing samples of handwriting, which researchers consider to be a first practical test for neuromorphic chips. Such chips would consist of “input/hidden/output neurons,” each connected to other “neurons” via filament-based artificial synapses.

Scientists believe such stacks of neural nets can be made to “learn.” For instance, when fed an input that is a handwritten ‘1,’ with an output that labels it as ‘1,’ certain output neurons will be activated by input neurons and weights from an artificial synapse. When more examples of handwritten ‘1s’ are fed into the same chip, the same output neurons may be activated when they sense similar features between different samples of the same digit, thus “learning” in a fashion similar to what the brain does.

Kim and his colleagues ran a computer simulation of an artificial neural network consisting of three sheets of neural layers connected via two layers of artificial synapses, the properties of which they based on measurements from their actual neuromorphic chip. They fed into their simulation tens of thousands of samples from a handwriting-recognition dataset commonly used by neuromorphic designers, and found that their neural network hardware recognized handwritten samples 95 percent of the time, compared to the 97 percent accuracy of existing software algorithms.
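To make the structure of that simulation a little more concrete, here is a toy sketch of three neural layers joined by two layers of synaptic weights, with each weight perturbed by a small random amount to stand in for device variation. Everything in it is an assumption for illustration: the layer sizes, the weight values, the sigmoid activation, the Gaussian noise model and the function names are mine, not the researchers'. It does not train anything and does not reproduce the 95 percent result.

```python
import math
import random


def noisy(weights, variation, rng):
    """Scale each weight by (1 + Gaussian noise) to mimic device variation."""
    return [[w * (1.0 + rng.gauss(0.0, variation)) for w in row] for row in weights]


def layer(inputs, weights):
    """Weighted sum for each output neuron, followed by a sigmoid."""
    outputs = []
    for row in weights:
        total = sum(w * x for w, x in zip(row, inputs))
        outputs.append(1.0 / (1.0 + math.exp(-total)))
    return outputs


def forward(inputs, w_hidden, w_out, variation=0.0, seed=0):
    """Input layer -> hidden layer -> output layer through two synapse layers."""
    rng = random.Random(seed)
    hidden = layer(inputs, noisy(w_hidden, variation, rng))
    return layer(hidden, noisy(w_out, variation, rng))


# Hypothetical 4-3-2 network with made-up weights.
w_hidden = [[0.5, -0.3, 0.8, 0.1],
            [-0.6, 0.9, 0.2, -0.4],
            [0.3, 0.3, -0.7, 0.5]]
w_out = [[1.0, -1.0, 0.5],
         [-0.8, 0.6, 0.9]]
sample = [1.0, 0.0, 1.0, 0.0]

ideal = forward(sample, w_hidden, w_out)                   # perfect synapses
varied = forward(sample, w_hidden, w_out, variation=0.04)  # 4 percent spread
print("ideal :", ideal)
print("varied:", varied)
```

The point of the comparison is that when the spread between synapses is small, the perturbed network's outputs stay close to the ideal ones, which is why uniformity matters for recognition accuracy.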

The team is in the process of fabricating a working neuromorphic chip that can carry out handwriting-recognition tasks, not in simulation but in reality. Looking beyond handwriting, Kim says the team’s artificial synapse design will enable much smaller, portable neural network devices that can perform complex computations that currently are only possible with large supercomputers.

“Ultimately we want a chip as big as a fingernail to replace one big supercomputer,” Kim says. “This opens a stepping stone to produce real artificial hardware.”

This research was supported in part by the National Science Foundation.

Here’s a link to and a citation for the paper,

SiGe epitaxial memory for neuromorphic computing with reproducible high performance based on engineered dislocations by Shinhyun Choi, Scott H. Tan, Zefan Li, Yunjo Kim, Chanyeol Choi, Pai-Yu Chen, Hanwool Yeon, Shimeng Yu, & Jeehwan Kim. Nature Materials (2018). doi:10.1038/s41563-017-0001-5. Published online: 22 January 2018.

This paper is behind a paywall.

For the curious, I have included a number of links to recent ‘memristor’ postings here,

January 22, 2018: Memristors at Masdar

January 3, 2018: Mott memristor

August 24, 2017: Neuristors and brainlike computing

June 28, 2017: Dr. Wei Lu and bio-inspired ‘memristor’ chips

May 2, 2017: Predicting how a memristor functions

December 30, 2016: Changing synaptic connectivity with a memristor

December 5, 2016: The memristor as computing device

November 1, 2016: The memristor as the ‘missing link’ in bioelectronic medicine?

You can find more by using ‘memristor’ as the search term in the blog search function or on the search engine of your choice.