An August 29, 2019 news item on phys.org broke the news about breaking a record for transferring quantum entanglement between matter and light,

The quantum internet promises absolutely tap-proof communication and powerful distributed sensor networks for new science and technology. However, because quantum information cannot be copied, it is not possible to send this information over a classical network. Quantum information must be transmitted by quantum particles, and special interfaces are required for this. The Innsbruck-based experimental physicist Ben Lanyon, who was awarded the Austrian START Prize in 2015 for his research, is investigating these important intersections of a future quantum internet.

Now his team at the Department of Experimental Physics at the University of Innsbruck and at the Institute of Quantum Optics and Quantum Information of the Austrian Academy of Sciences has achieved a record for the transfer of quantum entanglement between matter and light. For the first time, a distance of 50 kilometers was covered using fiber optic cables. “This is two orders of magnitude further than was previously possible and is a practical distance to start building inter-city quantum networks,” says Ben Lanyon.

…

An August 29, 2019 University of Innsbruck press release (also on EurekAlert), which originated the news item, describes the experiment in more detail,

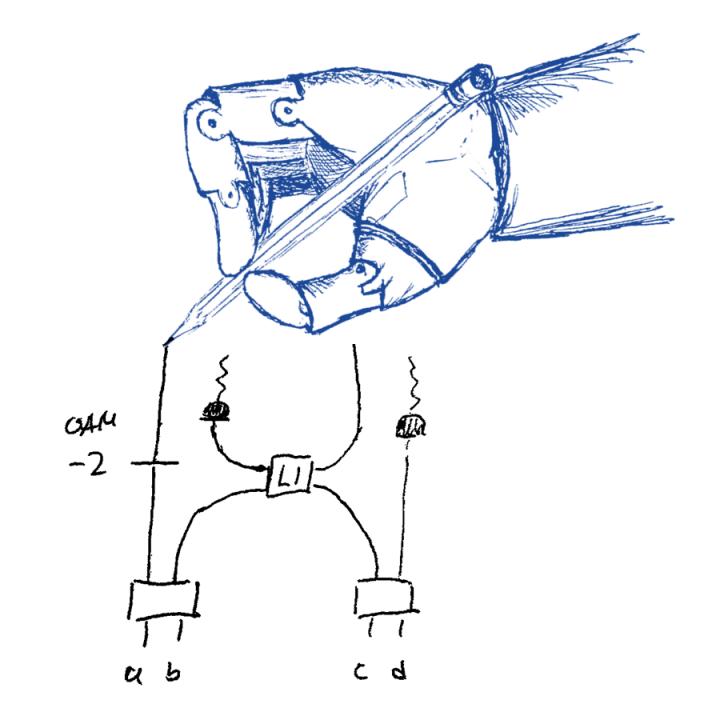

Converted photon for transmission

Lanyon’s team started the experiment with a calcium atom trapped in an ion trap. Using laser beams, the researchers write a quantum state onto the ion and simultaneously excite it to emit a photon in which quantum information is stored. As a result, the quantum states of the atom and the light particle are entangled. But the challenge is to transmit the photon over fiber optic cables. “The photon emitted by the calcium ion has a wavelength of 854 nanometers and is quickly absorbed by the optical fiber,” says Ben Lanyon. His team therefore first sends the light particle through a nonlinear crystal illuminated by a strong laser. This converts the photon wavelength to the optimal value for long-distance travel: the current telecommunications standard wavelength of 1550 nanometers. The researchers from Innsbruck then send this photon through a 50-kilometer-long optical fiber line. Their measurements show that the atom and the light particle are still entangled even after the wavelength conversion and this long journey.
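To make the numbers in this step concrete, here is a rough back-of-the-envelope sketch in Python. It rests on assumptions that are not in the press release: that the conversion is a difference-frequency process (so the strong laser supplies the energy difference between the 854 nm and 1550 nm photons), and that the fiber loses roughly 3 dB/km at 854 nm and 0.2 dB/km at 1550 nm, which are typical textbook figures rather than values from this experiment.

```python
def pump_wavelength_nm(input_nm: float, output_nm: float) -> float:
    """Pump wavelength implied by energy conservation, ASSUMING
    difference-frequency conversion: 1/pump = 1/input - 1/output."""
    return 1.0 / (1.0 / input_nm - 1.0 / output_nm)


def fiber_transmission(loss_db_per_km: float, length_km: float) -> float:
    """Fraction of photons surviving a fiber with the given attenuation."""
    return 10 ** (-loss_db_per_km * length_km / 10)


# ASSUMED typical attenuation values (not taken from the press release).
ASSUMED_LOSS_854_DB_PER_KM = 3.0
ASSUMED_LOSS_1550_DB_PER_KM = 0.2
FIBER_LENGTH_KM = 50  # distance stated in the press release

pump_nm = pump_wavelength_nm(854, 1550)
t_854 = fiber_transmission(ASSUMED_LOSS_854_DB_PER_KM, FIBER_LENGTH_KM)
t_1550 = fiber_transmission(ASSUMED_LOSS_1550_DB_PER_KM, FIBER_LENGTH_KM)

print(f"implied pump wavelength: {pump_nm:.1f} nm")
print(f"survival over 50 km at 854 nm:  {t_854:.1e}")
print(f"survival over 50 km at 1550 nm: {t_1550:.1e}")
```

Under these assumed loss figures, about one photon in ten would survive 50 kilometers at 1550 nm, while essentially none would at 854 nm, which is why the conversion step matters.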

Even greater distances in sight

As a next step, Lanyon and his team show that their methods would enable entanglement to be generated between ions 100 kilometers apart and more. Two nodes each send an entangled photon over a distance of 50 kilometers to an intersection where the light particles are measured in such a way that they lose their entanglement with the ions, which in turn would entangle the ions with each other. With 100-kilometer node spacing now a possibility, one could therefore envisage building the world’s first intercity light-matter quantum network in the coming years: only a handful of trapped-ion systems would be required on the way to establish a quantum internet between Innsbruck and Vienna, for example.
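The "handful of trapped-ion systems" estimate can be illustrated with a small Python sketch. The 100 km node spacing comes from the press release; the 450 km route length is a purely hypothetical placeholder, since the actual length of a fiber route between Innsbruck and Vienna is not given, and the function names are made up for this illustration.

```python
import math


def links_needed(route_km: float, node_spacing_km: float) -> int:
    """Number of node-to-node links needed to span the route."""
    return math.ceil(route_km / node_spacing_km)


def ion_nodes_needed(route_km: float, node_spacing_km: float) -> int:
    """Trapped-ion nodes in a simple chain: one more than the links."""
    return links_needed(route_km, node_spacing_km) + 1


NODE_SPACING_KM = 100        # from the press release
HYPOTHETICAL_ROUTE_KM = 450  # placeholder, NOT a measured fiber route

links = links_needed(HYPOTHETICAL_ROUTE_KM, NODE_SPACING_KM)
nodes = ion_nodes_needed(HYPOTHETICAL_ROUTE_KM, NODE_SPACING_KM)

# Each link has one midpoint station where the two photons are measured.
print(f"{links} links, {links} midpoint stations, {nodes} ion nodes")
```

With the placeholder route length this gives five links and six ion nodes, which is in line with "a handful"; a different route length would simply change the count.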

Lanyon’s team is part of the Quantum Internet Alliance, an international project within the Quantum Flagship framework of the European Union. The current results have been published in the Nature journal npj Quantum Information. The research was financially supported by, among others, the Austrian Science Fund (FWF) and the European Union.

Here’s a link to and a citation for the paper,

Light-matter entanglement over 50 km of optical fibre by V. Krutyanskiy, M. Meraner, J. Schupp, V. Krcmarsky, H. Hainzer & B. P. Lanyon. npj Quantum Information volume 5, Article number: 72 (2019) DOI: https://doi.org/10.1038/s41534-019-0186-3 Published: 27 August 2019

This paper is open access.