First, here’s some of the latest research. If by ‘non-invasive’ you mean that electrodes are not being implanted in your brain, then this December 12, 2023 University of Technology Sydney (UTS) press release (also on EurekAlert) highlights non-invasive mind-reading AI via a brain-computer interface (BCI), Note: Links have been removed,

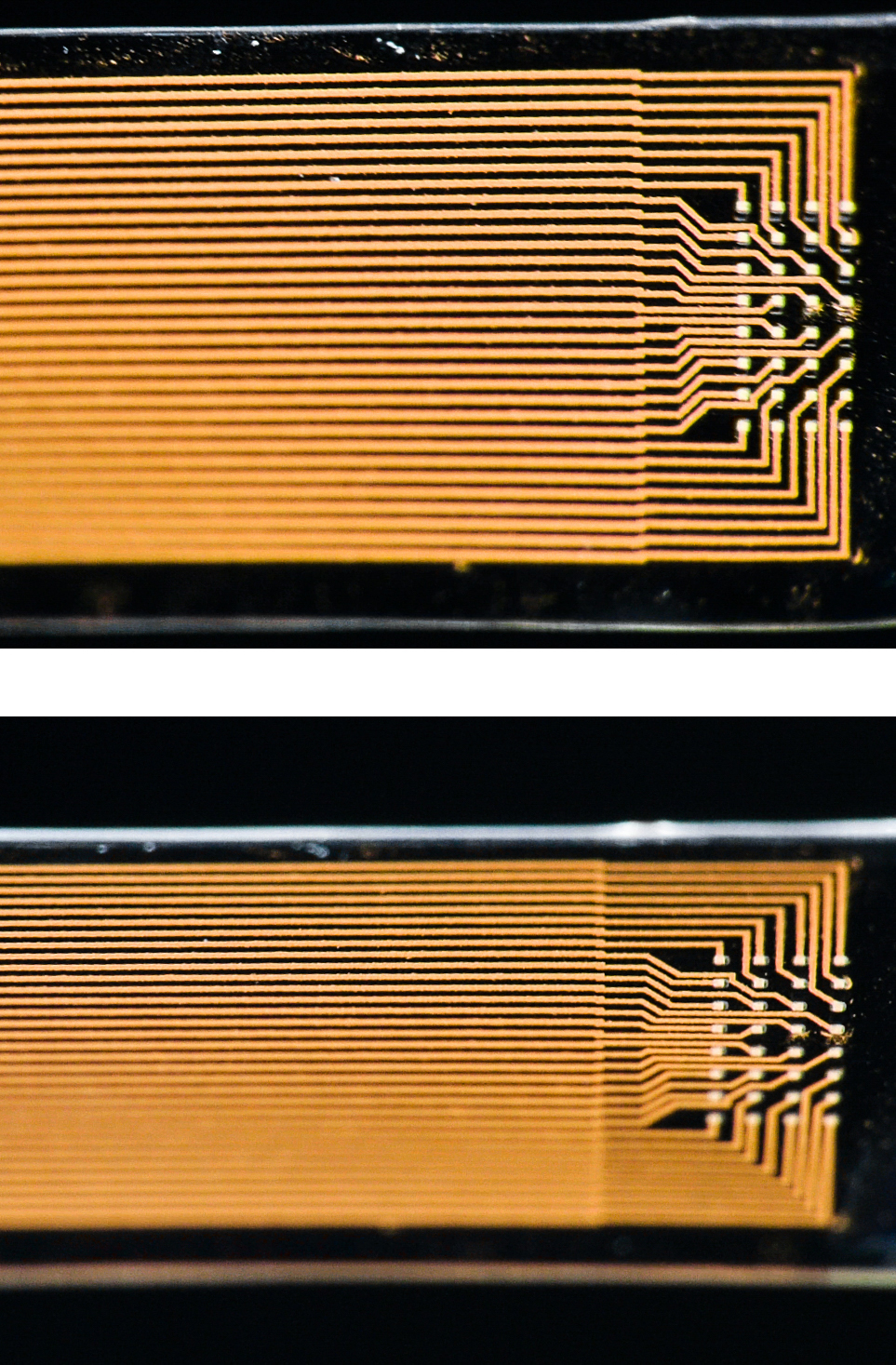

In a world-first, researchers from the GrapheneX-UTS Human-centric Artificial Intelligence Centre at the University of Technology Sydney (UTS) have developed a portable, non-invasive system that can decode silent thoughts and turn them into text.

The technology could aid communication for people who are unable to speak due to illness or injury, including stroke or paralysis. It could also enable seamless communication between humans and machines, such as the operation of a bionic arm or robot.

The study has been selected as the spotlight paper at the NeurIPS conference, a top-tier annual meeting that showcases world-leading research on artificial intelligence and machine learning, held in New Orleans on 12 December 2023.

The research was led by Distinguished Professor CT Lin, Director of the GrapheneX-UTS HAI Centre, together with first author Yiqun Duan and fellow PhD candidate Jinzhou Zhou from the UTS Faculty of Engineering and IT.

In the study participants silently read passages of text while wearing a cap that recorded electrical brain activity through their scalp using an electroencephalogram (EEG). A demonstration of the technology can be seen in this video [See UTS press release].

The EEG wave is segmented into distinct units that capture specific characteristics and patterns from the human brain. This is done by an AI model called DeWave developed by the researchers. DeWave translates EEG signals into words and sentences by learning from large quantities of EEG data.

“This research represents a pioneering effort in translating raw EEG waves directly into language, marking a significant breakthrough in the field,” said Distinguished Professor Lin.

“It is the first to incorporate discrete encoding techniques in the brain-to-text translation process, introducing an innovative approach to neural decoding. The integration with large language models is also opening new frontiers in neuroscience and AI,” he said.

Previous technology to translate brain signals to language has either required surgery to implant electrodes in the brain, such as Elon Musk’s Neuralink [emphasis mine], or scanning in an MRI machine, which is large, expensive, and difficult to use in daily life.

These methods also struggle to transform brain signals into word level segments without additional aids such as eye-tracking, which restrict the practical application of these systems. The new technology is able to be used either with or without eye-tracking.

The UTS research was carried out with 29 participants. This means it is likely to be more robust and adaptable than previous decoding technology that has only been tested on one or two individuals, because EEG waves differ between individuals.

The use of EEG signals received through a cap, rather than from electrodes implanted in the brain, means that the signal is noisier. In terms of EEG translation however, the study reported state-of-the-art performance, surpassing previous benchmarks.

“The model is more adept at matching verbs than nouns. However, when it comes to nouns, we saw a tendency towards synonymous pairs rather than precise translations, such as ‘the man’ instead of ‘the author’,” said Duan. [emphases mine; synonymous, eh? what about ‘woman’ or ‘child’ instead of the ‘man’?]

“We think this is because when the brain processes these words, semantically similar words might produce similar brain wave patterns. Despite the challenges, our model yields meaningful results, aligning keywords and forming similar sentence structures,” he said.

The translation accuracy score is currently around 40% on BLEU-1. The BLEU score is a number between zero and one that measures the similarity of the machine-translated text to a set of high-quality reference translations. The researchers hope to see this improve to a level that is comparable to traditional language translation or speech recognition programs, which is closer to 90%.

The research follows on from previous brain-computer interface technology developed by UTS in association with the Australian Defence Force [ADF] that uses brainwaves to command a quadruped robot, which is demonstrated in this ADF video [See my June 13, 2023 posting, “Mind-controlled robots based on graphene: an Australian research story” for the story and embedded video].
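An aside on the BLEU-1 score cited in the press release: the sketch below shows roughly how a unigram (single-word) BLEU score can be computed. This is my own illustration and not the researchers' evaluation code. The function name `bleu1`, the whitespace tokenization, and the lowercasing are all assumptions made for simplicity. The example sentences are invented around the ‘the man’ versus ‘the author’ substitution Duan mentions.

```python
from collections import Counter
import math


def bleu1(candidate: str, references: list[str]) -> float:
    """Illustrative BLEU-1: clipped unigram precision times a brevity penalty.

    Assumption: tokens are produced by lowercasing and splitting on whitespace.
    Real evaluation toolkits tokenize more carefully.
    """
    cand = candidate.lower().split()
    refs = [r.lower().split() for r in references]
    if not cand or not refs:
        return 0.0

    # For each word, the most times it appears in any single reference.
    max_ref_counts = Counter()
    for ref in refs:
        for word, count in Counter(ref).items():
            max_ref_counts[word] = max(max_ref_counts[word], count)

    # Clip each candidate word's count so repeated words are not over-rewarded.
    cand_counts = Counter(cand)
    clipped = sum(min(count, max_ref_counts[word])
                  for word, count in cand_counts.items())
    precision = clipped / len(cand)

    # Brevity penalty: compare against the reference length closest to the
    # candidate length (ties go to the shorter reference).
    ref_len = min((len(r) for r in refs),
                  key=lambda n: (abs(n - len(cand)), n))
    penalty = 1.0 if len(cand) > ref_len else math.exp(1 - ref_len / len(cand))

    return penalty * precision


if __name__ == "__main__":
    reference = "the author wrote the book"
    decoded = "the man wrote the book"
    # Four of the five decoded words also appear in the reference.
    print(round(bleu1(decoded, [reference]), 2))  # prints 0.8
```

In this toy case one wrong noun out of five words gives a score of 0.8, so a reported score of around 40% suggests that, on average, fewer than half of the decoded words match the reference text.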

About one month after the research announcement regarding the University of Technology Sydney’s ‘non-invasive’ brain-computer interface (BCI), I stumbled across an in-depth piece about the field of ‘non-invasive’ mind-reading research.

Neurotechnology and neurorights

Fletcher Reveley’s January 18, 2024 article on salon.com (originally published January 3, 2024 on Undark) shows how quickly the field is developing and raises concerns, Note: Links have been removed,

One afternoon in May 2020, Jerry Tang, a Ph.D. student in computer science at the University of Texas at Austin, sat staring at a cryptic string of words scrawled across his computer screen:

“I am not finished yet to start my career at twenty without having gotten my license I never have to pull out and run back to my parents to take me home.”

The sentence was jumbled and agrammatical. But to Tang, it represented a remarkable feat: A computer pulling a thought, however disjointed, from a person’s mind.

For weeks, ever since the pandemic had shuttered his university and forced his lab work online, Tang had been at home tweaking a semantic decoder — a brain-computer interface, or BCI, that generates text from brain scans. Prior to the university’s closure, study participants had been providing data to train the decoder for months, listening to hours of storytelling podcasts while a functional magnetic resonance imaging (fMRI) machine logged their brain responses. Then, the participants had listened to a new story — one that had not been used to train the algorithm — and those fMRI scans were fed into the decoder, which used GPT1, a predecessor to the ubiquitous AI chatbot ChatGPT, to spit out a text prediction of what it thought the participant had heard. For this snippet, Tang compared it to the original story:

“Although I’m twenty-three years old I don’t have my driver’s license yet and I just jumped out right when I needed to and she says well why don’t you come back to my house and I’ll give you a ride.”

The decoder was not only capturing the gist of the original, but also producing exact matches of specific words — twenty, license. When Tang shared the results with his adviser, a UT Austin neuroscientist named Alexander Huth who had been working towards building such a decoder for nearly a decade, Huth was floored. “Holy shit,” Huth recalled saying. “This is actually working.” By the fall of 2021, the scientists were testing the device with no external stimuli at all — participants simply imagined a story and the decoder spat out a recognizable, albeit somewhat hazy, description of it. “What both of those experiments kind of point to,” said Huth, “is the fact that what we’re able to read out here was really like the thoughts, like the idea.”

The scientists brimmed with excitement over the potentially life-altering medical applications of such a device — restoring communication to people with locked-in syndrome, for instance, whose near full-body paralysis made talking impossible. But just as the potential benefits of the decoder snapped into focus, so too did the thorny ethical questions posed by its use. Huth himself had been one of the three primary test subjects in the experiments, and the privacy implications of the device now seemed visceral: “Oh my god,” he recalled thinking. “We can look inside my brain.”

Huth’s reaction mirrored a longstanding concern in neuroscience and beyond: that machines might someday read people’s minds. And as BCI technology advances at a dizzying clip, that possibility and others like it — that computers of the future could alter human identities, for example, or hinder free will — have begun to seem less remote. “The loss of mental privacy, this is a fight we have to fight today,” said Rafael Yuste, a Columbia University neuroscientist. “That could be irreversible. If we lose our mental privacy, what else is there to lose? That’s it, we lose the essence of who we are.”

Spurred by these concerns, Yuste and several colleagues have launched an international movement advocating for “neurorights” — a set of five principles Yuste argues should be enshrined in law as a bulwark against potential misuse and abuse of neurotechnology. But he may be running out of time.

…

Reveley’s January 18, 2024 article provides fascinating context and is well worth reading if you have the time.

For my purposes, I’m focusing on ethics, Note: Links have been removed,

… as these and other advances propelled the field forward, and as his own research revealed the discomfiting vulnerability of the brain to external manipulation, Yuste found himself increasingly concerned by the scarce attention being paid to the ethics of these technologies. Even Obama’s multi-billion-dollar BRAIN Initiative, a government program designed to advance brain research, which Yuste had helped launch in 2013 and supported heartily, seemed to mostly ignore the ethical and societal consequences of the research it funded. “There was zero effort on the ethical side,” Yuste recalled.

…

Yuste was appointed to the rotating advisory group of the BRAIN Initiative in 2015, where he began to voice his concerns. That fall, he joined an informal working group to consider the issue. “We started to meet, and it became very evident to me that the situation was a complete disaster,” Yuste said. “There was no guidelines, no work done.” Yuste said he tried to get the group to generate a set of ethical guidelines for novel BCI technologies, but the effort soon became bogged down in bureaucracy. Frustrated, he stepped down from the committee and, together with a University of Washington bioethicist named Sara Goering, decided to independently pursue the issue. “Our aim here is not to contribute to or feed fear for doomsday scenarios,” the pair wrote in a 2016 article in Cell, “but to ensure that we are reflective and intentional as we prepare ourselves for the neurotechnological future.”

In the fall of 2017, Yuste and Goering called a meeting at the Morningside Campus of Columbia, inviting nearly 30 experts from all over the world in such fields as neurotechnology, artificial intelligence, medical ethics, and the law. By then, several other countries had launched their own versions of the BRAIN Initiative, and representatives from Australia, Canada [emphasis mine], China, Europe, Israel, South Korea, and Japan joined the Morningside gathering, along with veteran neuroethicists and prominent researchers. “We holed ourselves up for three days to study the ethical and societal consequences of neurotechnology,” Yuste said. “And we came to the conclusion that this is a human rights issue. These methods are going to be so powerful, that enable to access and manipulate mental activity, and they have to be regulated from the angle of human rights. That’s when we coined the term ‘neurorights.’”

The Morningside group, as it became known, identified four principal ethical priorities, which were later expanded by Yuste into five clearly defined neurorights: The right to mental privacy, which would ensure that brain data would be kept private and its use, sale, and commercial transfer would be strictly regulated; the right to personal identity, which would set boundaries on technologies that could disrupt one’s sense of self; the right to fair access to mental augmentation, which would ensure equality of access to mental enhancement neurotechnologies; the right of protection from bias in the development of neurotechnology algorithms; and the right to free will, which would protect an individual’s agency from manipulation by external neurotechnologies. The group published their findings in an often-cited paper in Nature.

But while Yuste and the others were focused on the ethical implications of these emerging technologies, the technologies themselves continued to barrel ahead at a feverish speed. In 2014, the first kick of the World Cup was made by a paraplegic man using a mind-controlled robotic exoskeleton. In 2016, a man fist bumped Obama using a robotic arm that allowed him to “feel” the gesture. The following year, scientists showed that electrical stimulation of the hippocampus could improve memory, paving the way for cognitive augmentation technologies. The military, long interested in BCI technologies, built a system that allowed operators to pilot three drones simultaneously, partially with their minds. Meanwhile, a confusing maelstrom of science, science-fiction, hype, innovation, and speculation swept the private sector. By 2020, over $33 billion had been invested in hundreds of neurotech companies — about seven times what the NIH [US National Institutes of Health] had envisioned for the 12-year span of the BRAIN Initiative itself.

…

Now back to Tang and Huth (from Reveley’s January 18, 2024 article), Note: Links have been removed,

Central to the ethical questions Huth and Tang grappled with was the fact that their decoder, unlike other language decoders developed around the same time, was non-invasive — it didn’t require its users to undergo surgery. Because of that, their technology was free from the strict regulatory oversight that governs the medical domain. (Yuste, for his part, said he believes non-invasive BCIs pose a far greater ethical challenge than invasive systems: “The non-invasive, the commercial, that’s where the battle is going to get fought.”) Huth and Tang’s decoder faced other hurdles to widespread use — namely that fMRI machines are enormous, expensive, and stationary. But perhaps, the researchers thought, there was a way to overcome that hurdle too.

The information measured by fMRI machines — blood oxygenation levels, which indicate where blood is flowing in the brain — can also be measured with another technology, functional Near-Infrared Spectroscopy, or fNIRS. Although lower resolution than fMRI, several expensive, research-grade, wearable fNIRS headsets do approach the resolution required to work with Huth and Tang’s decoder. In fact, the scientists were able to test whether their decoder would work with such devices by simply blurring their fMRI data to simulate the resolution of research-grade fNIRS. The decoded result “doesn’t get that much worse,” Huth said.

And while such research-grade devices are currently cost-prohibitive for the average consumer, more rudimentary fNIRS headsets have already hit the market. Although these devices provide far lower resolution than would be required for Huth and Tang’s decoder to work effectively, the technology is continually improving, and Huth believes it is likely that an affordable, wearable fNIRS device will someday provide high enough resolution to be used with the decoder. In fact, he is currently teaming up with scientists at Washington University to research the development of such a device.

Even comparatively primitive BCI headsets can raise pointed ethical questions when released to the public. Devices that rely on electroencephalography, or EEG, a commonplace method of measuring brain activity by detecting electrical signals, have now become widely available — and in some cases have raised alarm. In 2019, a school in Jinhua, China, drew criticism after trialing EEG headbands that monitored the concentration levels of its pupils. (The students were encouraged to compete to see who concentrated most effectively, and reports were sent to their parents.) Similarly, in 2018 the South China Morning Post reported that dozens of factories and businesses had begun using “brain surveillance devices” to monitor workers’ emotions, in the hopes of increasing productivity and improving safety. The devices “caused some discomfort and resistance in the beginning,” Jin Jia, then a brain scientist at Ningbo University, told the reporter. “After a while, they got used to the device.”

But the primary problem with even low-resolution devices is that scientists are only just beginning to understand how information is actually encoded in brain data. In the future, powerful new decoding algorithms could discover that even raw, low-resolution EEG data contains a wealth of information about a person’s mental state at the time of collection. Consequently, nobody can definitively know what they are giving away when they allow companies to collect information from their brains.

Huth and Tang concluded that brain data, therefore, should be closely guarded, especially in the realm of consumer products. In an article on Medium from last April, Tang wrote that “decoding technology is continually improving, and the information that could be decoded from a brain scan a year from now may be very different from what can be decoded today. It is crucial that companies are transparent about what they intend to do with brain data and take measures to ensure that brain data is carefully protected.” (Yuste said the Neurorights Foundation recently surveyed the user agreements of 30 neurotech companies and found that all of them claim ownership of users’ brain data — and most assert the right to sell that data to third parties. [emphases mine]) Despite these concerns, however, Huth and Tang maintained that the potential benefits of these technologies outweighed their risks, provided the proper guardrails [emphasis mine] were put in place.

…

It would seem the first guardrails are being set up in South America (from Reveley’s January 18, 2024 article), Note: Links have been removed,

On a hot summer night in 2019, Yuste sat in the courtyard of an adobe hotel in the north of Chile with his close friend, the prominent Chilean doctor and then-senator Guido Girardi, observing the vast, luminous skies of the Atacama Desert and discussing, as they often did, the world of tomorrow. Girardi, who every year organizes the Congreso Futuro, Latin America’s preeminent science and technology event, had long been intrigued by the accelerating advance of technology and its paradigm-shifting impact on society — “living in the world at the speed of light,” as he called it. Yuste had been a frequent speaker at the conference, and the two men shared a conviction that scientists were birthing technologies powerful enough to disrupt the very notion of what it meant to be human.

Around midnight, as Yuste finished his pisco sour, Girardi made an intriguing proposal: What if they worked together to pass an amendment to Chile’s constitution, one that would enshrine protections for mental privacy as an inviolable right of every Chilean? It was an ambitious idea, but Girardi had experience moving bold pieces of legislation through the senate; years earlier he had spearheaded Chile’s famous Food Labeling and Advertising Law, which required companies to affix health warning labels on junk food. (The law has since inspired dozens of countries to pursue similar legislation.) With BCI, here was another chance to be a trailblazer. “I said to Rafael, ‘Well, why don’t we create the first neuro data protection law?’” Girardi recalled. Yuste readily agreed.

…

… Girardi led the political push, promoting a piece of legislation that would amend Chile’s constitution to protect mental privacy. The effort found surprising purchase across the political spectrum, a remarkable feat in a country famous for its political polarization. In 2021, Chile’s congress unanimously passed the constitutional amendment, which Piñera [Sebastián Piñera] swiftly signed into law. (A second piece of legislation, which would establish a regulatory framework for neurotechnology, is currently under consideration by Chile’s congress.) “There was no divide between the left or right,” recalled Girardi. “This was maybe the only law in Chile that was approved by unanimous vote.” Chile, then, had become the first country in the world to enshrine “neurorights” in its legal code.

…

Even before the passage of the Chilean constitutional amendment, Yuste had begun meeting regularly with Jared Genser, an international human rights lawyer who had represented such high-profile clients as Desmond Tutu, Liu Xiaobo, and Aung San Suu Kyi. (The New York Times Magazine once referred to Genser as “the extractor” for his work with political prisoners.) Yuste was seeking guidance on how to develop an international legal framework to protect neurorights, and Genser, though he had just a cursory knowledge of neurotechnology, was immediately captivated by the topic. “It’s fair to say he blew my mind in the first hour of discussion,” recalled Genser. Soon thereafter, Yuste, Genser, and a private-sector entrepreneur named Jamie Daves launched the Neurorights Foundation, a nonprofit whose first goal, according to its website, is “to protect the human rights of all people from the potential misuse or abuse of neurotechnology.”

To accomplish this, the organization has sought to engage all levels of society, from the United Nations and regional governing bodies like the Organization of American States, down to national governments, the tech industry, scientists, and the public at large. Such a wide-ranging approach, said Genser, “is perhaps insanity on our part, or grandiosity. But nonetheless, you know, it’s definitely the Wild West as it comes to talking about these issues globally, because so few people know about where things are, where they’re heading, and what is necessary.”

This general lack of knowledge about neurotech, in all strata of society, has largely placed Yuste in the role of global educator — he has met several times with U.N. Secretary-General António Guterres, for example, to discuss the potential dangers of emerging neurotech. And these efforts are starting to yield results. Guterres’s 2021 report, “Our Common Agenda,” which sets forth goals for future international cooperation, urges “updating or clarifying our application of human rights frameworks and standards to address frontier issues,” such as “neuro-technology.” Genser attributes the inclusion of this language in the report to Yuste’s advocacy efforts.

But updating international human rights law is difficult, and even within the Neurorights Foundation there are differences of opinion regarding the most effective approach. For Yuste, the ideal solution would be the creation of a new international agency, akin to the International Atomic Energy Agency — but for neurorights. “My dream would be to have an international convention about neurotechnology, just like we had one about atomic energy and about certain things, with its own treaty,” he said. “And maybe an agency that would essentially supervise the world’s efforts in neurotechnology.”

Genser, however, believes that a new treaty is unnecessary, and that neurorights can be codified most effectively by extending interpretation of existing international human rights law to include them. The International Covenant of Civil and Political Rights, for example, already ensures the general right to privacy, and an updated interpretation of the law could conceivably clarify that that clause extends to mental privacy as well.

…

There is no need for immediate panic (from Reveley’s January 18, 2024 article),

…

… while Yuste and the others continue to grapple with the complexities of international and national law, Huth and Tang have found that, for their decoder at least, the greatest privacy guardrails come not from external institutions but rather from something much closer to home — the human mind itself. Following the initial success of their decoder, as the pair read widely about the ethical implications of such a technology, they began to think of ways to assess the boundaries of the decoder’s capabilities. “We wanted to test a couple kind of principles of mental privacy,” said Huth. Simply put, they wanted to know if the decoder could be resisted.

In late 2021, the scientists began to run new experiments. First, they were curious if an algorithm trained on one person could be used on another. They found that it could not — the decoder’s efficacy depended on many hours of individualized training. Next, they tested whether the decoder could be thrown off simply by refusing to cooperate with it. Instead of focusing on the story that was playing through their headphones while inside the fMRI machine, participants were asked to complete other mental tasks, such as naming random animals, or telling a different story in their head. “Both of those rendered it completely unusable,” Huth said. “We didn’t decode the story they were listening to, and we couldn’t decode anything about what they were thinking either.”

…

Given how quickly this field of research is progressing, it seems like a good idea to increase efforts to establish neurorights (from Reveley’s January 18, 2024 article),

For Yuste, however, technologies like Huth and Tang’s decoder may only mark the beginning of a mind-boggling new chapter in human history, one in which the line between human brains and computers will be radically redrawn — or erased completely. A future is conceivable, he said, where humans and computers fuse permanently, leading to the emergence of technologically augmented cyborgs. “When this tsunami hits us I would say it’s not likely it’s for sure that humans will end up transforming themselves — ourselves — into maybe a hybrid species,” Yuste said. He is now focused on preparing for this future.

In the last several years, Yuste has traveled to multiple countries, meeting with a wide assortment of politicians, supreme court justices, U.N. committee members, and heads of state. And his advocacy is beginning to yield results. In August, Mexico began considering a constitutional reform that would establish the right to mental privacy. Brazil is currently considering a similar proposal, while Spain, Argentina, and Uruguay have also expressed interest, as has the European Union. In September [2023], neurorights were officially incorporated into Mexico’s digital rights charter, while in Chile, a landmark Supreme Court ruling found that Emotiv Inc, a company that makes a wearable EEG headset, violated Chile’s newly minted mental privacy law. That suit was brought by Yuste’s friend and collaborator, Guido Girardi.

…

“This is something that we should take seriously,” he [Huth] said. “Because even if it’s rudimentary right now, where is that going to be in five years? What was possible five years ago? What’s possible now? Where’s it gonna be in five years? Where’s it gonna be in 10 years? I think the range of reasonable possibilities includes things that are — I don’t want to say like scary enough — but like dystopian enough that I think it’s certainly a time for us to think about this.”

You can find The Neurorights Foundation here and/or read Reveley’s January 18, 2024 article on salon.com or as originally published January 3, 2024 on Undark. Finally, thank you for the article, Fletcher Reveley!