Apparently, I am an idiot—if the folks at Expunct and other organizations passionately devoted to their own viewpoints are to be believed.

To be specific, the Berggruen Institute (which publishes Noēma magazine) has attracted remarkably sharp criticism, and by implication that criticism seems to extend to anyone examining, listening to, or reading the institute’s various communication efforts.

Perhaps you’d like to judge the quality of the ideas for yourself?

About the Institute and about the magazine

The institute is a think tank founded in 2010 by Nicolas Berggruen, a US-based billionaire investor and philanthropist, and Nathan Gardels, a journalist and editor-in-chief of Noēma magazine. Before moving on to the magazine’s first anniversary, here’s more about the Institute from its About webpage,

Ideas for a Changing World

We live in a time of great transformations. From capitalism, to democracy, to the global order, our institutions are faltering. The very meaning of the human is fragmenting.

The Berggruen Institute was established in 2010 to develop foundational ideas about how to reshape political and social institutions in the face of these great transformations. We work across cultures, disciplines and political boundaries, engaging great thinkers to develop and promote long-term answers to the biggest challenges of the 21st Century.

…

As for the magazine, here’s more from the About Us webpage (Note: I have rearranged the paragraph order),

In ancient Greek, noēma means “thinking” or the “object of thought.” And that is our intention: to delve deeply into the critical issues transforming the world today, at length and with historical context, in order to illuminate new pathways of thought in a way not possible through the immediacy of daily media. In this era of accelerated social change, there is a dire need for new ideas and paradigms to frame the world we are moving into.

Noema is a magazine exploring the transformations sweeping our world. We publish essays, interviews, reportage, videos and art on the overlapping realms of philosophy, governance, geopolitics, economics, technology and culture. In doing so, our unique approach is to get out of the usual lanes and cross disciplines, social silos and cultural boundaries. From artificial intelligence and the climate crisis to the future of democracy and capitalism, Noema Magazine seeks a deeper understanding of the most pressing challenges of the 21st century.

Published online and in print by the Berggruen Institute, Noema grew out of a previous publication called The WorldPost, which was first a partnership with HuffPost and later with The Washington Post. Noema publishes thoughtful, rigorous, adventurous pieces by voices from both inside and outside the institute. While committed to using journalism to help build a more sustainable and equitable world, we do not promote any particular set of national, economic or partisan interests.

First anniversary

Noēma’s anniversary is being marked by its second print issue (the first was produced for the magazine’s launch). From a July 1, 2021 announcement received via email,

June 2021 marked one year since the launch of Noema Magazine, a crucial milestone for the new publication focused on exploring and amplifying transformative ideas. Noema is working to attract audiences through longform perspectives and contemporary artwork that weave together threads in philosophy, governance, geopolitics, economics, technology, and culture.

“What began more than seven years ago as a news-driven global voices platform for The Huffington Post known as The WorldPost, and later in partnership with The Washington Post, has been reimagined,” said Nathan Gardels, editor-in-chief of Noema. “It has evolved into a platform for expansive ideas through a visual lens, and a timely and provocative portal to plumb the deeper issues behind present events.”

The magazine’s editorial board, involved in the genesis and as content drivers of the magazine, includes Orhan Pamuk, Arianna Huffington, Fareed Zakaria, Reid Hoffman, Dambisa Moyo, Walter Isaacson, Pico Iyer, and Elif Shafak. Pieces by thinkers cracking the calcifications of intellectual domains include, among many others:

· Francis Fukuyama on the future of the nation-state

· A collage of commentary on COVID with Yuval Harari and Jared Diamond

· An interview with economist Mariana Mazzucato on “mission-oriented government”

· Taiwan’s Digital Minister Audrey Tang on digital democracy

· Hedge-fund giant Ray Dalio in conversation with Nobel laureate Joe Stiglitz

· Shannon Vallor on how AI is making us less intelligent and more artificial

· Former Governor Jerry Brown in conversation with Stewart Brand

· Ecologist Suzanne Simard on the intelligence of forest ecosystems

· A discussion on protecting the biosphere with Bill Gates’s guru Vaclav Smil

· An original story by Chinese science-fiction writer Hao Jingfang

Noema seeks to highlight how the great transformations of the 21st century are reflected in the work of today’s artistic innovators. Most articles are accompanied by an original illustration, melding together an aesthetic experience with ideas in social science and public policy. Among others, in the past year, the magazine has featured work from multimedia artist Pierre Huyghe, illustrator Daniel Martin Diaz, painter Scott Listfield, graphic designer and NFT artist Jonathan Zawada, 3D motion graphics artist Kyle Szostek, illustrator Moonassi, collage artist Lauren Lakin, and aerial photographer Brooke Holm. Additional contributions from artists include Berggruen Fellows Agnieszka Kurant and Anicka Yi discussing how their work explores the myth of the self.

Noema is available online and annually in print; the magazine’s second print issue will be released on July 13, 2021. The theme of this issue is “planetary realism,” which proposes to go beyond the exhausted notions of globalization and geopolitical competition among nation-states to a new “Gaiapolitik.” It addresses the existential challenge of climate change across all borders and recognizes that human civilization is but one part of the ecology of being that encompasses multiple intelligences from microbes to forests to the emergent global exoskeleton of AI and internet connectivity (more on this in the letter from the editors below).

Published by the Berggruen Institute, Noema is an incubator for the Institute’s core ideas, such as “participation without populism,” “pre-distribution” and universal basic capital (vs. income), and the need for dialogue between the U.S. and China to avoid an AI arms race or inadvertent war.

“The world needs divergent thinking on big questions if we’re going to meet the challenges of the 21st century; Noema publishes bold and experimental ideas,” said Kathleen Miles, executive editor of Noema. “The magazine cross-fertilizes ideas across boundaries and explores correspondences among them in order to map out the terrain of the great transformations underway.”

I notice Suzanne Simard (from the University of British Columbia and author of “Finding the Mother Tree: Discovering the Wisdom of the Forest”) on the list of contributors, along with a story by Chinese science fiction writer Hao Jingfang.

Simard was mentioned here in a May 12, 2021 posting (scroll down to the “UBC forestry professor, Suzanne Simard’s memoir going to the movies?” subhead) when it was announced that her then-unpublished memoir would be made into a film starring Amy Adams (or so they hope).

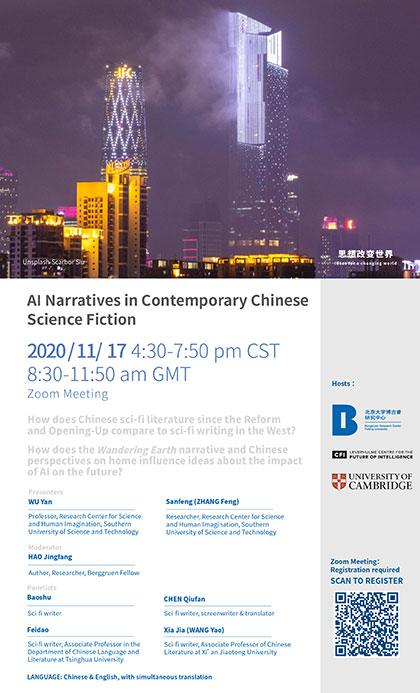

Hao Jingfang was mentioned here in a November 16, 2020 posting titled “Telling stories about artificial intelligence (AI) and Chinese science fiction; a Nov. 17, 2020 virtual event” (the event was co-hosted by the Berggruen Institute and the University of Cambridge’s Leverhulme Centre for the Future of Intelligence [CFI]).

A month after the July 13, 2021 release of Noēma’s second print issue, its theme and topics seem especially timely given the extensive news coverage, in Canada and many other parts of the world, of the Monday, August 9, 2021 release of the sixth UN climate report, which raises alarms over irreversible impacts. (Emily Chung’s August 12, 2021 analysis for the Canadian Broadcasting Corporation [CBC] offers a little good news for those severely alarmed by the report.) Note: The Intergovernmental Panel on Climate Change (IPCC) is the UN body tasked with assessing the science related to climate change.