Before launching into the assessment, a brief explanation of my theme: Hedy Lamarr was considered to be one of the great beauties of her day,

“Ziegfeld Girl” Hedy Lamarr 1941 MGM *M.V.

Image courtesy mptvimages.com [downloaded from https://www.imdb.com/title/tt0034415/mediaviewer/rm1566611456]

Let’s take a moment to reflect on the mercurial brilliance of Hedy Lamarr. Not only did the Vienna-born actor flee a loveless marriage to a Nazi arms dealer to secure a seven-year, $3,000-a-week contract with MGM, and become (probably) the first Hollywood star to simulate a female orgasm on screen – she also took time out to invent a device that would eventually revolutionise mobile communications.

As described in unprecedented detail by the American journalist and historian Richard Rhodes in his new book, Hedy’s Folly, Lamarr and her business partner, the composer George Antheil, were awarded a patent in 1942 for a “secret communication system”. It was meant for radio-guided torpedoes, and the pair gave it to the US Navy. It languished in their files for decades before eventually becoming a constituent part of GPS, Wi-Fi and Bluetooth technology.

…

(The article goes on to mention other celebrities [Marlon Brando, Barbara Cartland, Mark Twain, etc] and their inventions.)

Lamarr’s work as an inventor was largely overlooked until the 1990s, when the technology community turned her into a ‘cultish’ favourite. From there her reputation grew and acknowledgement increased, culminating in Rhodes’ book and the documentary by Alexandra Dean, ‘Bombshell: The Hedy Lamarr Story’ (to be broadcast as part of PBS’s American Masters series on May 18, 2018).

Canada as Hedy Lamarr

There are some parallels to be drawn between Canada’s S&T and R&D (science and technology; research and development) and Ms. Lamarr. Chief amongst them, we’re not always appreciated for our brains. Not even by people who are supposed to know better such as the experts on the panel for the ‘Third assessment of The State of Science and Technology and Industrial Research and Development in Canada’ (proper title: Competing in a Global Innovation Economy: The Current State of R&D in Canada) from the Expert Panel on the State of Science and Technology and Industrial Research and Development in Canada.

A little history

Before exploring the comparison to Hedy Lamarr further, here’s a bit more about the history of this latest assessment from the Council of Canadian Academies (CCA), from the report released April 10, 2018,

This assessment of Canada’s performance indicators in science, technology, research, and innovation comes at an opportune time. The Government of Canada has expressed a renewed commitment in several tangible ways to this broad domain of activity including its Innovation and Skills Plan, the announcement of five superclusters, its appointment of a new Chief Science Advisor, and its request for the Fundamental Science Review. More specifically, the 2018 Federal Budget demonstrated the government’s strong commitment to research and innovation with historic investments in science.

The CCA has a decade-long history of conducting evidence-based assessments about Canada’s research and development activities, producing seven assessments of relevance:

•The State of Science and Technology in Canada (2006) [emphasis mine]

•Innovation and Business Strategy: Why Canada Falls Short (2009)

•Catalyzing Canada’s Digital Economy (2010)

•Informing Research Choices: Indicators and Judgment (2012)

•The State of Science and Technology in Canada (2012) [emphasis mine]

•The State of Industrial R&D in Canada (2013) [emphasis mine]

•Paradox Lost: Explaining Canada’s Research Strength and Innovation Weakness (2013)

Using similar methods and metrics to those in The State of Science and Technology in Canada (2012) and The State of Industrial R&D in Canada (2013), this assessment tells a similar and familiar story: Canada has much to be proud of, with world-class researchers in many domains of knowledge, but the rest of the world is not standing still. Our peers are also producing high quality results, and many countries are making significant commitments to supporting research and development that will position them to better leverage their strengths to compete globally. Canada will need to take notice as it determines how best to take action. This assessment provides valuable material for that conversation to occur, whether it takes place in the lab or the legislature, the bench or the boardroom. We also hope it will be used to inform public discussion. [p. ix Print, p. 11 PDF]

This latest assessment succeeds the general 2006 and 2012 reports, which were mostly focused on academic research, and combines them with an assessment of industrial research, which was previously separate. Also, this third assessment’s title (Competing in a Global Innovation Economy: The Current State of R&D in Canada) makes explicit, from the cover onwards, what was previously quietly declared in the text. It’s all about competition, despite noises such as the 2017 Naylor report (Review of fundamental research) about the importance of fundamental research.

One other quick comment: I did wonder in my July 1, 2016 posting (featuring the announcement of the third assessment) how combining two assessments would impact the size of the expert panel and the size of the final report,

Given the size of the 2012 assessment of science and technology at 232 pp. (PDF) and the 2013 assessment of industrial research and development at 220 pp. (PDF) with two expert panels, the imagination boggles at the potential size of the 2016 expert panel and of the 2016 assessment combining the two areas.

I got my answer with regard to the panel as noted in my Oct. 20, 2016 update (which featured a list of the members),

A few observations, given the size of the task, this panel is lean. As well, there are three women in a group of 13 (less than 25% representation) in 2016? It’s Ontario and Québec-dominant; only BC and Alberta rate a representative on the panel. I hope they will find ways to better balance this panel and communicate that ‘balanced story’ to the rest of us. On the plus side, the panel has representatives from the humanities, arts, and industry in addition to the expected representatives from the sciences.

The imbalance I noted then was addressed, somewhat, with the selection of the reviewers (from the report released April 10, 2018),

The CCA wishes to thank the following individuals for their review of this report:

Ronald Burnett, C.M., O.B.C., RCA, Chevalier de l’ordre des arts et des lettres, President and Vice-Chancellor, Emily Carr University of Art and Design (Vancouver, BC)

Michelle N. Chretien, Director, Centre for Advanced Manufacturing and Design Technologies, Sheridan College; Former Program and Business Development Manager, Electronic Materials, Xerox Research Centre of Canada (Brampton, ON)

Lisa Crossley, CEO, Reliq Health Technologies, Inc. (Ancaster, ON)

Natalie Dakers, Founding President and CEO, Accel-Rx Health Sciences Accelerator (Vancouver, BC)

Fred Gault, Professorial Fellow, United Nations University-MERIT (Maastricht, Netherlands)

Patrick D. Germain, Principal Engineering Specialist, Advanced Aerodynamics, Bombardier Aerospace (Montréal, QC)

Robert Brian Haynes, O.C., FRSC, FCAHS, Professor Emeritus, DeGroote School of Medicine, McMaster University (Hamilton, ON)

Susan Holt, Chief, Innovation and Business Relationships, Government of New Brunswick (Fredericton, NB)

Pierre A. Mohnen, Professor, United Nations University-MERIT and Maastricht University (Maastricht, Netherlands)

Peter J. M. Nicholson, C.M., Retired; Former and Founding President and CEO, Council of Canadian Academies (Annapolis Royal, NS)

Raymond G. Siemens, Distinguished Professor, English and Computer Science and Former Canada Research Chair in Humanities Computing, University of Victoria (Victoria, BC) [pp. xii- xiv Print; pp. 15-16 PDF]

The proportion of women to men as reviewers jumped up to about 36% (4 of 11 reviewers) and there are two reviewers from the Maritime provinces. As usual, reviewers external to Canada were from Europe, although this time they came from Dutch institutions rather than UK or German institutions. Interestingly and unusually, there was no one from a US institution. When will they start using reviewers from other parts of the world?

As for the report itself, it is 244 pp. (PDF). (For the really curious, I have a December 15, 2016 post featuring my comments on the preliminary data for the third assessment.)

To sum up, they had a lean expert panel tasked with bringing together two inquiries and two reports. I imagine that was daunting. Good on them for finding a way to make it manageable.

Bibliometrics, patents, and a survey

I wish more attention had been paid to some of the issues around open science, open access, and open data, which are changing how science is being conducted. (I have more about this from an April 5, 2018 article by James Somers for The Atlantic, which I’ll get to later.) If I understand rightly, that may not have been possible due to the nature of the questions posed by the government when it requested the assessment.

As was done for the second assessment, there is an acknowledgement that the standard measures/metrics (bibliometrics [no. of papers published, which journals published them; number of times papers were cited] and technometrics [no. of patent applications, etc.]) of scientific accomplishment and progress are not the best and new approaches need to be developed and adopted (from the report released April 10, 2018),

It is also worth noting that the Panel itself recognized the limits that come from using traditional historic metrics. Additional approaches will be needed the next time this assessment is done. [p. ix Print; p. 11 PDF]

For the second assessment, as a means of addressing some of the problems with metrics, the panel decided to conduct a survey; the panel for the third assessment has done the same (from the report released April 10, 2018),

The Panel relied on evidence from multiple sources to address its charge, including a literature review and data extracted from statistical agencies and organizations such as Statistics Canada and the OECD. For international comparisons, the Panel focused on OECD countries along with developing countries that are among the top 20 producers of peer-reviewed research publications (e.g., China, India, Brazil, Iran, Turkey). In addition to the literature review, two primary research approaches informed the Panel’s assessment:

•a comprehensive bibliometric and technometric analysis of Canadian research publications and patents; and,

•a survey of top-cited researchers around the world.

Despite best efforts to collect and analyze up-to-date information, one of the Panel’s findings is that data limitations continue to constrain the assessment of R&D activity and excellence in Canada. This is particularly the case with industrial R&D and in the social sciences, arts, and humanities. Data on industrial R&D activity continue to suffer from time lags for some measures, such as internationally comparable data on R&D intensity by sector and industry. These data also rely on industrial categories (i.e., NAICS and ISIC codes) that can obscure important trends, particularly in the services sector, though Statistics Canada’s recent revisions to how this data is reported have improved this situation. There is also a lack of internationally comparable metrics relating to R&D outcomes and impacts, aside from those based on patents.

For the social sciences, arts, and humanities, metrics based on journal articles and other indexed publications provide an incomplete and uneven picture of research contributions. The expansion of bibliometric databases and methodological improvements such as greater use of web-based metrics, including paper views/downloads and social media references, will support ongoing, incremental improvements in the availability and accuracy of data. However, future assessments of R&D in Canada may benefit from more substantive integration of expert review, capable of factoring in different types of research outputs (e.g., non-indexed books) and impacts (e.g., contributions to communities or impacts on public policy). The Panel has no doubt that contributions from the humanities, arts, and social sciences are of equal importance to national prosperity. It is vital that such contributions are better measured and assessed. [p. xvii Print; p. 19 PDF]

My reading: there’s a problem and we’re not going to try and fix it this time. Good luck to those who come after us. As for this line: “The Panel has no doubt that contributions from the humanities, arts, and social sciences are of equal importance to national prosperity.” Did no one explain that when you use ‘no doubt’, you are introducing doubt? It’s a cousin to ‘don’t take this the wrong way’ and ‘I don’t mean to be rude but …’.

Good news

This is somewhat encouraging (from the report released April 10, 2018),

Canada’s international reputation for its capacity to participate in cutting-edge R&D is strong, with 60% of top-cited researchers surveyed internationally indicating that Canada hosts world-leading infrastructure or programs in their fields. This share increased by four percentage points between 2012 and 2017. Canada continues to benefit from a highly educated population and deep pools of research skills and talent. Its population has the highest level of educational attainment in the OECD in the proportion of the population with a post-secondary education. However, among younger cohorts (aged 25 to 34), Canada has fallen behind Japan and South Korea. The number of researchers per capita in Canada is on a par with that of other developed countries, and increased modestly between 2004 and 2012. Canada’s output of PhD graduates has also grown in recent years, though it remains low in per capita terms relative to many OECD countries. [pp. xvii-xviii; pp. 19-20]

Don’t let your head get too big

Most of the report observes that our international standing is slipping in various ways such as this (from the report released April 10, 2018),

In contrast, the number of R&D personnel employed in Canadian businesses dropped by 20% between 2008 and 2013. This is likely related to sustained and ongoing decline in business R&D investment across the country. R&D as a share of gross domestic product (GDP) has steadily declined in Canada since 2001, and now stands well below the OECD average (Figure 1). As one of few OECD countries with virtually no growth in total national R&D expenditures between 2006 and 2015, Canada would now need to more than double expenditures to achieve an R&D intensity comparable to that of leading countries.

Low and declining business R&D expenditures are the dominant driver of this trend; however, R&D spending in all sectors is implicated. Government R&D expenditures declined, in real terms, over the same period. Expenditures in the higher education sector (an indicator on which Canada has traditionally ranked highly) are also increasing more slowly than the OECD average. Significant erosion of Canada’s international competitiveness and capacity to participate in R&D and innovation is likely to occur if this decline and underinvestment continue.

Between 2009 and 2014, Canada produced 3.8% of the world’s research publications, ranking ninth in the world. This is down from seventh place for the 2003–2008 period. India and Italy have overtaken Canada although the difference between Italy and Canada is small. Publication output in Canada grew by 26% between 2003 and 2014, a growth rate greater than many developed countries (including United States, France, Germany, United Kingdom, and Japan), but below the world average, which reflects the rapid growth in China and other emerging economies. Research output from the federal government, particularly the National Research Council Canada, dropped significantly between 2009 and 2014. (emphasis mine) [p. xviii Print; p. 20 PDF]

For anyone unfamiliar with Canadian politics, 2009 – 2014 were years during which Stephen Harper’s Conservatives formed the government. Justin Trudeau’s Liberals were elected to form the government in late 2015.

During Harper’s years in government, the Conservatives were very interested in changing how the National Research Council of Canada operated and, if memory serves, the focus was on innovation over research. Consequently, the drop in their research output is predictable.

Given my interest in nanotechnology and other emerging technologies, this popped out (from the report released April 10, 2018),

When it comes to research on most enabling and strategic technologies, however, Canada lags other countries. Bibliometric evidence suggests that, with the exception of selected subfields in Information and Communication Technologies (ICT) such as Medical Informatics and Personalized Medicine, Canada accounts for a relatively small share of the world’s research output for promising areas of technology development. This is particularly true for Biotechnology, Nanotechnology, and Materials science [emphasis mine]. Canada’s research impact, as reflected by citations, is also modest in these areas. Aside from Biotechnology, none of the other subfields in Enabling and Strategic Technologies has an ARC rank among the top five countries. Optoelectronics and photonics is the next highest ranked at 7th place, followed by Materials, and Nanoscience and Nanotechnology, both of which have a rank of 9th. Even in areas where Canadian researchers and institutions played a seminal role in early research (and retain a substantial research capacity), such as Artificial Intelligence and Regenerative Medicine, Canada has lost ground to other countries.

Arguably, our early efforts in artificial intelligence wouldn’t have garnered us much in the way of ranking and yet we managed some cutting edge work such as machine learning. I’m not suggesting the expert panel should have or could have found some way to measure these kinds of efforts but I’m wondering if there could have been some acknowledgement in the text of the report. I’m thinking a couple of sentences in a paragraph about the confounding nature of scientific research where areas that are ignored for years and even decades then become important (e.g., machine learning) but are not measured as part of scientific progress until after they are universally recognized.

Still, point taken about our diminishing returns in ‘emerging’ technologies and sciences (from the report released April 10, 2018),

The impression that emerges from these data is sobering. With the exception of selected ICT subfields, such as Medical Informatics, bibliometric evidence does not suggest that Canada excels internationally in most of these research areas. In areas such as Nanotechnology and Materials science, Canada lags behind other countries in levels of research output and impact, and other countries are outpacing Canada’s publication growth in these areas — leading to declining shares of world publications. Even in research areas such as AI, where Canadian researchers and institutions played a foundational role, Canadian R&D activity is not keeping pace with that of other countries and some researchers trained in Canada have relocated to other countries (Section 4.4.1). There are isolated exceptions to these trends, but the aggregate data reviewed by this Panel suggest that Canada is not currently a world leader in research on most emerging technologies.

The Hedy Lamarr treatment

We have ‘good looks’ (arts and humanities) but not the kind of brains (physical sciences and engineering) that people admire (from the report released April 10, 2018),

Canada, relative to the world, specializes in subjects generally referred to as the humanities and social sciences (plus health and the environment), and does not specialize as much as others in areas traditionally referred to as the physical sciences and engineering. Specifically, Canada has comparatively high levels of research output in Psychology and Cognitive Sciences, Public Health and Health Services, Philosophy and Theology, Earth and Environmental Sciences, and Visual and Performing Arts. [emphases mine] It accounts for more than 5% of world research in these fields. Conversely, Canada has lower research output than expected in Chemistry, Physics and Astronomy, Enabling and Strategic Technologies, Engineering, and Mathematics and Statistics. The comparatively low research output in core areas of the natural sciences and engineering is concerning, and could impair the flexibility of Canada’s research base, preventing research institutions and researchers from being able to pivot to tomorrow’s emerging research areas. [p. xix Print; p. 21 PDF]

Couldn’t they have used a more buoyant tone? After all, science was known as ‘natural philosophy’ up until the 19th century. As for visual and performing arts, let’s include poetry as a performing and literary art (both have been the case historically and cross-culturally) and let’s also note that one of the great physics texts (De rerum natura by Lucretius) was a multi-volume poem (from Lucretius’ Wikipedia entry; Note: Links have been removed).

His poem De rerum natura (usually translated as “On the Nature of Things” or “On the Nature of the Universe”) transmits the ideas of Epicureanism, which includes Atomism [the concept of atoms forming materials] and psychology. Lucretius was the first writer to introduce Roman readers to Epicurean philosophy.[15] The poem, written in some 7,400 dactylic hexameters, is divided into six untitled books, and explores Epicurean physics through richly poetic language and metaphors. Lucretius presents the principles of atomism; the nature of the mind and soul; explanations of sensation and thought; the development of the world and its phenomena; and explains a variety of celestial and terrestrial phenomena. The universe described in the poem operates according to these physical principles, guided by fortuna, “chance”, and not the divine intervention of the traditional Roman deities.[16]

Should you need more proof that the arts might have something to contribute to physical sciences, there’s this in my March 7, 2018 posting,

It’s not often you see research that combines biologically inspired engineering and a molecular biophysicist with a professional animator who worked at Peter Jackson’s (Lord of the Rings film trilogy, etc.) Park Road Post film studio. An Oct. 18, 2017 news item on ScienceDaily describes the project,

Like many other scientists, Don Ingber, M.D., Ph.D., the Founding Director of the Wyss Institute, [emphasis mine] is concerned that non-scientists have become skeptical and even fearful of his field at a time when technology can offer solutions to many of the world’s greatest problems. “I feel that there’s a huge disconnect between science and the public because it’s depicted as rote memorization in schools, when by definition, if you can memorize it, it’s not science,” says Ingber, who is also the Judah Folkman Professor of Vascular Biology at Harvard Medical School and the Vascular Biology Program at Boston Children’s Hospital, and Professor of Bioengineering at the Harvard Paulson School of Engineering and Applied Sciences (SEAS). [emphasis mine] “Science is the pursuit of the unknown. We have a responsibility to reach out to the public and convey that excitement of exploration and discovery, and fortunately, the film industry is already great at doing that.”

…

“Not only is our physics-based simulation and animation system as good as other data-based modeling systems, it led to the new scientific insight [emphasis mine] that the limited motion of the dynein hinge focuses the energy released by ATP hydrolysis, which causes dynein’s shape change and drives microtubule sliding and axoneme motion,” says Ingber. “Additionally, while previous studies of dynein have revealed the molecule’s two different static conformations, our animation visually depicts one plausible way that the protein can transition between those shapes at atomic resolution, which is something that other simulations can’t do. The animation approach also allows us to visualize how rows of dyneins work in unison, like rowers pulling together in a boat, which is difficult using conventional scientific simulation approaches.”

It comes down to how we look at things. Yes, physical sciences and engineering are very important. If the report is to be believed, we have a very highly educated population and, according to PISA scores, our students rank highly in mathematics, science, and reading skills. (For more information on Canada’s latest PISA scores from 2015 see this OECD page. As for PISA itself, it’s an OECD [Organisation for Economic Co-operation and Development] programme where 15-year-old students from around the world are tested on their reading, mathematics, and science skills. You can get some information from my Oct. 9, 2013 posting.)

Is it really so bad that we choose to apply those skills in fields other than the physical sciences and engineering? It’s a little bit like Hedy Lamarr’s problem except instead of being judged for our looks and having our inventions dismissed, we’re being judged for not applying ourselves to physical sciences and engineering and having our work in other closely aligned fields dismissed as less important.

Canada’s Industrial R&D: an oft-told, very sad story

Bemoaning the state of Canada’s industrial research and development efforts has been a national pastime as long as I can remember. Here’s this from the report released April 10, 2018,

There has been a sustained erosion in Canada’s industrial R&D capacity and competitiveness. Canada ranks 33rd among leading countries on an index assessing the magnitude, intensity, and growth of industrial R&D expenditures. Although Canada is the 11th largest spender, its industrial R&D intensity (0.9%) is only half the OECD average and total spending is declining (−0.7%). Compared with G7 countries, the Canadian portfolio of R&D investment is more concentrated in industries that are intrinsically not as R&D intensive. Canada invests more heavily than the G7 average in oil and gas, forestry, machinery and equipment, and finance where R&D has been less central to business strategy than in many other industries. … About 50% of Canada’s industrial R&D spending is in high-tech sectors (including industries such as ICT, aerospace, pharmaceuticals, and automotive) compared with the G7 average of 80%. Canadian Business Enterprise Expenditures on R&D (BERD) intensity is also below the OECD average in these sectors. In contrast, Canadian investment in low and medium-low tech sectors is substantially higher than the G7 average. Canada’s spending reflects both its long-standing industrial structure and patterns of economic activity.

R&D investment patterns in Canada appear to be evolving in response to global and domestic shifts. While small and medium-sized enterprises continue to perform a greater share of industrial R&D in Canada than in the United States, between 2009 and 2013, there was a shift in R&D from smaller to larger firms. Canada is an increasingly attractive place to conduct R&D. Investment by foreign-controlled firms in Canada has increased to more than 35% of total R&D investment, with the United States accounting for more than half of that. [emphasis mine] Multinational enterprises seem to be increasingly locating some of their R&D operations outside their country of ownership, possibly to gain proximity to superior talent. Increasing foreign-controlled R&D, however, also could signal a long-term strategic loss of control over intellectual property (IP) developed in this country, ultimately undermining the government’s efforts to support high-growth firms as they scale up. [pp. xxii-xxiii Print; pp. 24-25 PDF]

Canada has been known as a ‘branch plant’ economy for decades. For anyone unfamiliar with the term, it means that companies from other countries come here, open up a branch and that’s how we get our jobs as we don’t have all that many large companies here. Increasingly, multinationals are locating R&D shops here.

While our small to medium size companies fund industrial R&D, it’s large companies (multinationals) which can afford long-term and serious investment in R&D. Luckily for companies from other countries, we have a well-educated population of people looking for jobs.

In 2017, we opened the door more widely so we can scoop up talented researchers and scientists from other countries, from a June 14, 2017 article by Beckie Smith for The PIE News,

Universities have welcomed the inclusion of the work permit exemption for academic stays of up to 120 days in the strategy, which also introduces expedited visa processing for some highly skilled professions.

Foreign researchers working on projects at a publicly funded degree-granting institution or affiliated research institution will be eligible for one 120-day stay in Canada every 12 months.

And universities will also be able to access a dedicated service channel that will support employers and provide guidance on visa applications for foreign talent.

The Global Skills Strategy, which came into force on June 12 [2017], aims to boost the Canadian economy by filling skills gaps with international talent.

…

As well as the short term work permit exemption, the Global Skills Strategy aims to make it easier for employers to recruit highly skilled workers in certain fields such as computer engineering.

“Employers that are making plans for job-creating investments in Canada will often need an experienced leader, dynamic researcher or an innovator with unique skills not readily available in Canada to make that investment happen,” said Ahmed Hussen, Minister of Immigration, Refugees and Citizenship.

“The Global Skills Strategy aims to give those employers confidence that when they need to hire from abroad, they’ll have faster, more reliable access to top talent.”

Coincidentally, Microsoft, Facebook, Google, etc. announced new jobs and new offices in Canadian cities in 2017. There’s also Huawei Canada, the Canadian arm of the Chinese multinational telecom company, which has enjoyed success in Canada and continues to invest here (from a Jan. 19, 2018 article about security concerns by Matthew Braga for the Canadian Broadcasting Corporation (CBC) online news),

For the past decade, Chinese tech company Huawei has found no shortage of success in Canada. Its equipment is used in telecommunications infrastructure run by the country’s major carriers, and some have sold Huawei’s phones.

The company has struck up partnerships with Canadian universities, and says it is investing more than half a billion dollars in researching next generation cellular networks here. [emphasis mine]

…

While I’m not thrilled about using patents as an indicator of progress, this is interesting to note (from the report released April 10, 2018),

Canada produces about 1% of global patents, ranking 18th in the world. It lags further behind in trademark (34th) and design applications (34th). Despite relatively weak performance overall in patents, Canada excels in some technical fields such as Civil Engineering, Digital Communication, Other Special Machines, Computer Technology, and Telecommunications. [emphases mine] Canada is a net exporter of patents, which signals the R&D strength of some technology industries. It may also reflect increasing R&D investment by foreign-controlled firms. [emphasis mine] [p. xxiii Print; p. 25 PDF]

Getting back to my point, we don’t have large companies here. In fact, the dream for most of our high tech startups is to build up the company so it’s attractive to buyers, sell, and retire (hopefully before the age of 40). Strangely, the expert panel doesn’t seem to share my insight into this matter,

Canada’s combination of high performance in measures of research output and impact, and low performance on measures of industrial R&D investment and innovation (e.g., subpar productivity growth), continue to be viewed as a paradox, leading to the hypothesis that barriers are impeding the flow of Canada’s research achievements into commercial applications. The Panel’s analysis suggests the need for a more nuanced view. The process of transforming research into innovation and wealth creation is a complex multifaceted process, making it difficult to point to any definitive cause of Canada’s deficit in R&D investment and productivity growth. Based on the Panel’s interpretation of the evidence, Canada is a highly innovative nation, but significant barriers prevent the translation of innovation into wealth creation. The available evidence does point to a number of important contributing factors that are analyzed in this report. Figure 5 represents the relationships between R&D, innovation, and wealth creation.

The Panel concluded that many factors commonly identified as points of concern do not adequately explain the overall weakness in Canada’s innovation performance compared with other countries. [emphasis mine] Academia-business linkages appear relatively robust in quantitative terms given the extent of cross-sectoral R&D funding and increasing academia-industry partnerships, though the volume of academia-industry interactions does not indicate the nature or the quality of that interaction, nor the extent to which firms are capitalizing on the research conducted and the resulting IP. The educational system is high performing by international standards and there does not appear to be a widespread lack of researchers or STEM (science, technology, engineering, and mathematics) skills. IP policies differ across universities and are unlikely to explain a divergence in research commercialization activity between Canadian and U.S. institutions, though Canadian universities and governments could do more to help Canadian firms access university IP and compete in IP management and strategy. Venture capital availability in Canada has improved dramatically in recent years and is now competitive internationally, though still overshadowed by Silicon Valley. Technology start-ups and start-up ecosystems are also flourishing in many sectors and regions, demonstrating their ability to build on research advances to develop and deliver innovative products and services.

You’ll note there’s no mention of a cultural issue where start-ups are designed for sale as soon as possible, and this isn’t new. Years ago, there was an accounting firm that published a series of historical maps (the last one I saw was in 2005) of technology companies in the Vancouver region. Technology companies were being developed and sold to large foreign companies from the 19th century to the present day.

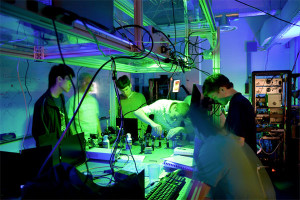

History has repeatedly demonstrated the power of research in physics to transform society. As a student of history and a believer in the power of physics, Mike Lazaridis set out in 2000 to make real his bold vision to establish the Region of Waterloo as a world leading centre for physics research. That is, a place where the best researchers in the world would come to do cutting-edge research and to collaborate with each other and in so doing, achieve transformative discoveries that would lead to the commercialization of breakthrough technologies.

Perimeter is located in a Governor-General award winning designed building in Waterloo. Success in recruiting and resulting space requirements led to an expansion of the Perimeter facility. A uniquely designed addition, which has been described as space-ship-like, was opened in 2011 as the

Mike and Doug Fregin have been close friends since grade 5. They are also co-founders of BlackBerry (formerly Research In Motion Limited). Doug shares Mike’s passion for physics and supported Mike’s efforts at the Perimeter Institute with an initial gift of $10 million. Since that time Doug has donated a total of $30 million to Perimeter Institute. Separately, Doug helped establish the

For many years, theorists have been able to demonstrate the transformative powers of quantum mechanics on paper. That said, converting these theories to experimentally demonstrable discoveries has, putting it mildly, been a challenge. Many naysayers have suggested that achieving these discoveries was not possible and even the believers suggested that it could likely take decades to achieve these discoveries. Recently, a buzz has been developing globally as experimentalists have been able to achieve demonstrable success with respect to Quantum Information based discoveries. Local experimentalists are very much playing a leading role in this regard. It is believed by many that breakthrough discoveries that will lead to commercialization opportunities may be achieved in the next few years and certainly within the next decade.