I have two items and an exploration of the Canadian scene, all three of which feature governments, artificial intelligence, and responsibility.

Special issue of Information Polity edited by Dutch academics

A December 14, 2020 IOS Press press release (also on EurekAlert) announces a special issue of Information Polity focused on algorithmic transparency in government,

Amsterdam, NL – The use of algorithms in government is transforming the way bureaucrats work and make decisions in different areas, such as healthcare or criminal justice. Experts address the transparency challenges of using algorithms in decision-making procedures at the macro-, meso-, and micro-levels in this special issue of Information Polity.

Machine-learning algorithms hold huge potential to make government services fairer and more effective and have the potential of “freeing” decision-making from human subjectivity, according to recent research. Algorithms are used in many public service contexts. For example, within the legal system it has been demonstrated that algorithms can predict recidivism better than criminal court judges. At the same time, critics highlight several dangers of algorithmic decision-making, such as racial bias and lack of transparency.

Some scholars have argued that the introduction of algorithms in decision-making procedures may cause profound shifts in the way bureaucrats make decisions and that algorithms may affect broader organizational routines and structures. This special issue on algorithm transparency presents six contributions to sharpen our conceptual and empirical understanding of the use of algorithms in government.

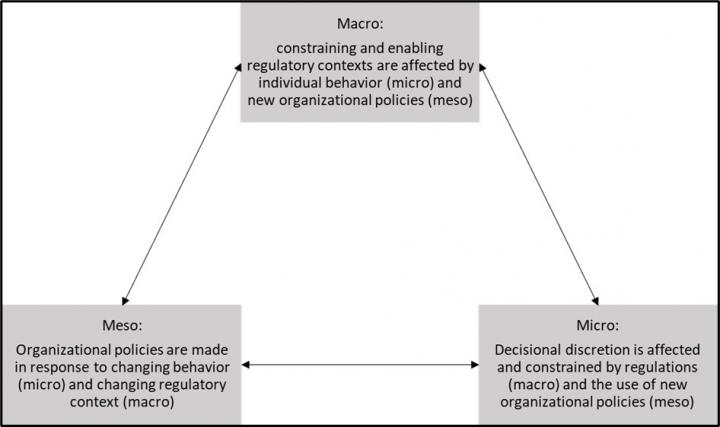

“There has been a surge in criticism towards the ‘black box’ of algorithmic decision-making in government,” explain Guest Editors Sarah Giest (Leiden University) and Stephan Grimmelikhuijsen (Utrecht University). “In this special issue collection, we show that it is not enough to unpack the technical details of algorithms, but also look at institutional, organizational, and individual context within which these algorithms operate to truly understand how we can achieve transparent and responsible algorithms in government. For example, regulations may enable transparency mechanisms, yet organizations create new policies on how algorithms should be used, and individual public servants create new professional repertoires. All these levels interact and affect algorithmic transparency in public organizations.”

The transparency challenges for the use of algorithms transcend different levels of government – from European level to individual public bureaucrats. These challenges can also take different forms; transparency can be enabled or limited by technical tools as well as regulatory guidelines or organizational policies. Articles in this issue address transparency challenges of algorithm use at the macro-, meso-, and micro-level. The macro level describes phenomena from an institutional perspective – which national systems, regulations and cultures play a role in algorithmic decision-making. The meso-level primarily pays attention to the organizational and team level, while the micro-level focuses on individual attributes, such as beliefs, motivation, interactions, and behaviors.

“Calls to ‘keep humans in the loop’ may be moot points if we fail to understand how algorithms impact human decision-making and how algorithmic design impacts the practical possibilities for transparency and human discretion,” notes Rik Peeters, research professor of Public Administration at the Centre for Research and Teaching in Economics (CIDE) in Mexico City. In a review of recent academic literature on the micro-level dynamics of algorithmic systems, he discusses three design variables that determine the preconditions for human transparency and discretion and identifies four main sources of variation in “human-algorithm interaction.”

The article draws two major conclusions: First, human agents are rarely fully “out of the loop,” and levels of oversight and override designed into algorithms should be understood as a continuum. The second pertains to bounded rationality, satisficing behavior, automation bias, and frontline coping mechanisms that play a crucial role in the way humans use algorithms in decision-making processes.

For future research Dr. Peeters suggests taking a closer look at the behavioral mechanisms in combination with identifying relevant skills of bureaucrats in dealing with algorithms. “Without a basic understanding of the algorithms that screen- and street-level bureaucrats have to work with, it is difficult to imagine how they can properly use their discretion and critically assess algorithmic procedures and outcomes. Professionals should have sufficient training to supervise the algorithms with which they are working.”

At the macro-level, algorithms can be an important tool for enabling institutional transparency, writes Alex Ingrams, PhD, Governance and Global Affairs, Institute of Public Administration, Leiden University, Leiden, The Netherlands. This study evaluates a machine-learning approach to open public comments for policymaking to increase institutional transparency of public commenting in a law-making process in the United States. The article applies an unsupervised machine learning analysis of thousands of public comments submitted to the United States Transport Security Administration on a 2013 proposed regulation for the use of new full body imaging scanners in airports. The algorithm highlights salient topic clusters in the public comments that could help policymakers understand open public comments processes. “Algorithms should not only be subject to transparency but can also be used as tool for transparency in government decision-making,” comments Dr. Ingrams.

“Regulatory certainty in combination with organizational and managerial capacity will drive the way the technology is developed and used and what transparency mechanisms are in place for each step,” note the Guest Editors. “On its own these are larger issues to tackle in terms of developing and passing laws or providing training and guidance for public managers and bureaucrats. The fact that they are linked further complicates this process. Highlighting these linkages is a first step towards seeing the bigger picture of why transparency mechanisms are put in place in some scenarios and not in others and opens the door to comparative analyses for future research and new insights for policymakers. To advocate the responsible and transparent use of algorithms, future research should look into the interplay between micro-, meso-, and macro-level dynamics.”

“We are proud to present this special issue, the 100th issue of Information Polity. Its focus on the governance of AI demonstrates our continued desire to tackle contemporary issues in eGovernment and the importance of showcasing excellent research and the insights offered by information polity perspectives,” add Professor Albert Meijer (Utrecht University) and Professor William Webster (University of Stirling), Editors-in-Chief.

This image illustrates the interplay between the various level dynamics,

Here’s a link to and a citation for the special issue,

Algorithmic Transparency in Government: Towards a Multi-Level Perspective

Guest Editors: Sarah Giest, PhD, and Stephan Grimmelikhuijsen, PhD

Information Polity, Volume 25, Issue 4 (December 2020), published by IOS Press

The issue is open access for three months, Dec. 14, 2020 – March 14, 2021.

Two articles from the special issue were featured in the press release,

“The agency of algorithms: Understanding human-algorithm interaction in administrative decision-making,” by Rik Peeters, PhD (https://doi.org/10.3233/IP-200253)

“A machine learning approach to open public comments for policymaking,” by Alex Ingrams, PhD (https://doi.org/10.3233/IP-200256)

An AI governance publication from the US’s Wilson Center

Within one week of the release of a special issue of Information Polity on AI and governments, a Wilson Center (Woodrow Wilson International Center for Scholars) December 21, 2020 news release (received via email) announces a new publication,

Governing AI: Understanding the Limits, Possibilities, and Risks of AI in an Era of Intelligent Tools and Systems by John Zysman & Mark Nitzberg

Abstract

In debates about artificial intelligence (AI), imaginations often run wild. Policy-makers, opinion leaders, and the public tend to believe that AI is already an immensely powerful universal technology, limitless in its possibilities. However, while machine learning (ML), the principal computer science tool underlying today’s AI breakthroughs, is indeed powerful, ML is fundamentally a form of context-dependent statistical inference and as such has its limits. Specifically, because ML relies on correlations between inputs and outputs or emergent clustering in training data, today’s AI systems can only be applied in well-specified problem domains, still lacking the context sensitivity of a typical toddler or house-pet. Consequently, instead of constructing policies to govern artificial general intelligence (AGI), decision-makers should focus on the distinctive and powerful problems posed by narrow AI, including misconceived benefits and the distribution of benefits, autonomous weapons, and bias in algorithms. AI governance, at least for now, is about managing those who create and deploy AI systems, and supporting the safe and beneficial application of AI to narrow, well-defined problem domains. Specific implications of our discussion are as follows:

- AI applications are part of a suite of intelligent tools and systems and must ultimately be regulated as a set. Digital platforms, for example, generate the pools of big data on which AI tools operate and hence, the regulation of digital platforms and big data is part of the challenge of governing AI. Many of the platform offerings are, in fact, deployments of AI tools. Hence, focusing on AI alone distorts the governance problem.

- Simply declaring objectives—be they assuring digital privacy and transparency, or avoiding bias—is not sufficient. We must decide what the goals actually will be in operational terms.

- The issues and choices will differ by sector. For example, the consequences of bias and error will differ from a medical domain or a criminal justice domain to one of retail sales.

- The application of AI tools in public policy decision making, in transportation design or waste disposal or policing among a whole variety of domains, requires great care. There is a substantial risk of focusing on efficiency when the public debate about what the goals should be in the first place is in fact required. Indeed, public values evolve as part of social and political conflict.

- The economic implications of AI applications are easily exaggerated. Should public investment concentrate on advancing basic research or on diffusing the tools, user interfaces, and training needed to implement them?

- As difficult as it will be to decide on goals and a strategy to implement the goals of one community, let alone regional or international communities, any agreement that goes beyond simple objective statements is very unlikely.

Unfortunately, I haven’t been able to successfully download the working paper/report from the Wilson Center’s Governing AI: Understanding the Limits, Possibilities, and Risks of AI in an Era of Intelligent Tools and Systems webpage.

However, I have found a draft version of the report (Working Paper) published August 26, 2020 on the Social Science Research Network. This paper originated at the University of California at Berkeley as part of a series from the Berkeley Roundtable on the International Economy (BRIE). ‘Governing AI: Understanding the Limits, Possibility, and Risks of AI in an Era of Intelligent Tools and Systems’ is also known as the BRIE Working Paper 2020-5.

Canadian government and AI

The special issue on AI and governance and the paper published by the Wilson Center stimulated my interest in the Canadian government’s approach to governance, responsibility, transparency, and AI.

There is information out there but it’s scattered across various government initiatives and ministries. Above all, open communication is not easy to find. Whether that’s by design or the result of the blindness and/or ineptitude to be found in all organizations, I leave to wiser judges. (I’ve worked in small companies and they too have the problem. In colloquial terms, ‘the right hand doesn’t know what the left hand is doing’.)

Responsible use? Maybe not after 2019

First, there’s a Government of Canada webpage, Responsible use of artificial intelligence (AI). Other than a note at the bottom of the page, “Date modified: 2020-07-28,” all of the information dates from 2016 up to March 2019 (which you’ll find on ‘Our Timeline’). Is nothing new happening?

For anyone interested in responsible use, there are two sections, “Our guiding principles” and “Directive on Automated Decision-Making,” that answer some questions. I found the ‘Directive’ to be more informative with its definitions, objectives, and, even, consequences. Sadly, you need to keep clicking to find consequences and you’ll end up on The Framework for the Management of Compliance. Interestingly, deputy heads are assumed to be in charge of managing non-compliance. I wonder how employees deal with a non-compliant deputy head?

What about the government’s digital service?

You might think the Canadian Digital Service (CDS) would also have some information about responsible use. CDS was launched in 2017, according to Luke Simon’s July 19, 2017 article on Medium,

In case you missed it, there was some exciting digital government news in Canada Tuesday. The Canadian Digital Service (CDS) launched, meaning Canada has joined other nations, including the US and the UK, that have a federal department dedicated to digital.

…

At the time, Simon was Director of Outreach at Code for Canada.

Presumably, CDS, from an organizational perspective, is somehow attached to the Minister of Digital Government (it’s a position with virtually no governmental infrastructure as opposed to the Minister of Innovation, Science and Economic Development who is responsible for many departments and agencies). The current minister is Joyce Murray whose government profile offers almost no information about her work on digital services. Perhaps there’s a more informative profile of the Minister of Digital Government somewhere on a government website.

Meanwhile, they are friendly folks at CDS but they don’t offer much substantive information. From the CDS homepage,

Our aim is to make services easier for government to deliver. We collaborate with people who work in government to address service delivery problems. We test with people who need government services to find design solutions that are easy to use.

Learn more

After clicking on Learn more, I found this,

At the Canadian Digital Service (CDS), we partner up with federal departments to design, test and build simple, easy to use services. Our goal is to improve the experience – for people who deliver government services and people who use those services.

How it works

We work with our partners in the open, regularly sharing progress via public platforms. This creates a culture of learning and fosters best practices. It means non-partner departments can apply our work and use our resources to develop their own services.

Together, we form a team that follows the ‘Agile software development methodology’. This means we begin with an intensive ‘Discovery’ research phase to explore user needs and possible solutions to meeting those needs. After that, we move into a prototyping ‘Alpha’ phase to find and test ways to meet user needs. Next comes the ‘Beta’ phase, where we release the solution to the public and intensively test it. Lastly, there is a ‘Live’ phase, where the service is fully released and continues to be monitored and improved upon.

Between the Beta and Live phases, our team members step back from the service, and the partner team in the department continues the maintenance and development. We can help partners recruit their service team from both internal and external sources.

Before each phase begins, CDS and the partner sign a partnership agreement which outlines the goal and outcomes for the coming phase, how we’ll get there, and a commitment to get them done.

…

As you can see, there’s not a lot of detail and they don’t seem to have included anything about artificial intelligence as part of their operation. (I’ll come back to the government’s implementation of artificial intelligence and information technology later.)

Does the Treasury Board of Canada have charge of responsible AI use?

I think so but there are government departments/ministries that also have some responsibilities for AI and I haven’t seen any links back to the Treasury Board documentation.

For anyone not familiar with the Treasury Board, or even if you are, a December 14, 2009 article (Treasury Board of Canada: History, Organization and Issues) on Maple Leaf Web is quite informative,

The Treasury Board of Canada represent a key entity within the federal government. As an important cabinet committee and central agency, they play an important role in financial and personnel administration. Even though the Treasury Board plays a significant role in government decision making, the general public tends to know little about its operation and activities. [emphasis mine] The following article provides an introduction to the Treasury Board, with a focus on its history, responsibilities, organization, and key issues.

…

It seems the Minister of Digital Government, Joyce Murray, is part of the Treasury Board and the Treasury Board is the source for the Digital Operations Strategic Plan: 2018-2022.

I haven’t read the entire document but the table of contents doesn’t include a heading for artificial intelligence and there wasn’t any mention of it in the opening comments.

But isn’t there a Chief Information Officer for Canada?

Herein lies a tale (I doubt I’ll ever get the real story) but the answer is a qualified ‘no’. The Chief Information Officer for Canada, Alex Benay (there is an AI aspect) stepped down in September 2019 to join a startup company according to an August 6, 2019 article by Mia Hunt for Global Government Forum,

Alex Benay has announced he will step down as Canada’s chief information officer next month to “take on new challenge” at tech start-up MindBridge.

“It is with mixed emotions that I am announcing my departure from the Government of Canada,” he said on Wednesday in a statement posted on social media, describing his time as CIO as “one heck of a ride”.

He said he is proud of the work the public service has accomplished in moving the national digital agenda forward. Among these achievements, he listed the adoption of public Cloud across government; delivering the “world’s first” ethical AI management framework; [emphasis mine] renewing decades-old policies to bring them into the digital age; and “solidifying Canada’s position as a global leader in open government”.

He also led the introduction of new digital standards in the workplace, and provided “a clear path for moving off” Canada’s failed Phoenix pay system. [emphasis mine]

…

I cannot find a current Chief Information Officer of Canada despite searches but I did find this List of chief information officers (CIO) by institution. Where there was one, there are now many.

Since September 2019, Mr. Benay has moved again according to a November 7, 2019 article by Meagan Simpson on the BetaKit website (Note: Links have been removed),

Alex Benay, the former CIO [Chief Information Officer] of Canada, has left his role at Ottawa-based Mindbridge after a short few months stint.

The news came Thursday, when KPMG announced that Benay was joining the accounting and professional services organization as partner of digital and government solutions. Benay originally announced that he was joining Mindbridge in August, after spending almost two and a half years as the CIO for the Government of Canada.

Benay joined the AI startup as its chief client officer and, at the time, was set to officially take on the role on September 3rd. According to Benay’s LinkedIn, he joined Mindbridge in August, but if the September 3rd start date is correct, Benay would have only been at Mindbridge for around three months. The former CIO of Canada was meant to be responsible for Mindbridge’s global growth as the company looked to prepare for an IPO in 2021.

Benay told The Globe and Mail that his decision to leave Mindbridge was not a question of fit, or that he considered the move a mistake. He attributed his decision to leave to conversations with Mindbridge customer KPMG, over a period of three weeks. Benay told The Globe that he was drawn to the KPMG opportunity to lead its digital and government solutions practice, something that was more familiar to him given his previous role.

Mindbridge has not completely lost what was touted as a start hire, though, as Benay will be staying on as an advisor to the startup. “This isn’t a cutting the cord and moving on to something else completely,” Benay told The Globe. “It’s a win-win for everybody.”

…

Via Mr. Benay, I’ve re-introduced artificial intelligence and introduced the Phoenix Pay system and now I’m linking them to government implementation of information technology in a specific case and speculating about implementation of artificial intelligence algorithms in government.

Phoenix Pay System Debacle (things are looking up), a harbinger for responsible use of artificial intelligence?

I’m happy to hear that the situation where government employees had no certainty about their paycheques is becoming better. After the ‘new’ Phoenix Pay System was implemented in early 2016, government employees found they might get the correct amount on their paycheque or might find significantly less than they were entitled to or might find huge increases.

The instability alone would be distressing but adding to it the inability to get the problem fixed must have been devastating. Almost five years later, the problems are being resolved and people are getting paid appropriately, more often.

The estimated cost for fixing the problems was, as I recall, over $1B; I think that was a little optimistic. James Bagnall’s July 28, 2020 article for the Ottawa Citizen provides more detail, although not about the current cost, and is the source of my measured optimism,

Something odd has happened to the Phoenix Pay file of late. After four years of spitting out errors at a furious rate, the federal government’s new pay system has gone quiet.

And no, it’s not because of the even larger drama written by the coronavirus. In fact, there’s been very real progress at Public Services and Procurement Canada [PSPC; emphasis mine], the department in charge of pay operations.

Since January 2018, the peak of the madness, the backlog of all pay transactions requiring action has dropped by about half to 230,000 as of late June. Many of these involve basic queries for information about promotions, overtime and rules. The part of the backlog involving money — too little or too much pay, incorrect deductions, pay not received — has shrunk by two-thirds to 125,000.

These are still very large numbers but the underlying story here is one of long-delayed hope. The government is processing the pay of more than 330,000 employees every two weeks while simultaneously fixing large batches of past mistakes.

…

While officials with two of the largest government unions — Public Service Alliance of Canada [PSAC] and the Professional Institute of the Public Service of Canada [PIPSC] — disagree the pay system has worked out its kinks, they acknowledge it’s considerably better than it was. New pay transactions are being processed “with increased timeliness and accuracy,” the PSAC official noted.

Neither union is happy with the progress being made on historical mistakes. PIPSC president Debi Daviau told this newspaper that many of her nearly 60,000 members have been waiting for years to receive salary adjustments stemming from earlier promotions or transfers, to name two of the more prominent sources of pay errors.

…

Even so, the sharp improvement in Phoenix Pay’s performance will soon force the government to confront an interesting choice: Should it continue with plans to replace the system?

Treasury Board, the government’s employer, two years ago launched the process to do just that. Last March, SAP Canada — whose technology underpins the pay system still in use at Canada Revenue Agency — won a competition to run a pilot project. Government insiders believe SAP Canada is on track to build the full system starting sometime in 2023.

…

When Public Services set out the business case in 2009 for building Phoenix Pay, it noted the pay system would have to accommodate 150 collective agreements that contained thousands of business rules and applied to dozens of federal departments and agencies. The technical challenge has since intensified.

…

Under the original plan, Phoenix Pay was to save $70 million annually by eliminating 1,200 compensation advisors across government and centralizing a key part of the operation at the pay centre in Miramichi, N.B., where 550 would manage a more automated system.

Instead, the Phoenix Pay system currently employs about 2,300. This includes 1,600 at Miramichi and five regional pay offices, along with 350 each at a client contact centre (which deals with relatively minor pay issues) and client service bureau (which handles the more complex, longstanding pay errors). This has naturally driven up the average cost of managing each pay account — 55 per cent higher than the government’s former pay system according to last fall’s estimate by the Parliamentary Budget Officer.

… As the backlog shrinks, the need for regional pay offices and emergency staffing will diminish. Public Services is also working with a number of high-tech firms to develop ways of accurately automating employee pay using artificial intelligence [emphasis mine].

…

Given the Phoenix Pay System debacle, it might be nice to see a little information about how the government is planning to integrate more sophisticated algorithms (artificial intelligence) in their operations.

I found this on a Treasury Board webpage, all 1 minute and 29 seconds of it,

The blonde model or actress mentions that companies applying to Public Services and Procurement Canada for placement on the list must use AI responsibly. Her script does not include a definition or guidelines, which, as previously noted, are on the Treasury Board website.

As for Public Services and Procurement Canada, they have an Artificial intelligence source list,

Public Services and Procurement Canada (PSPC) is putting into operation the Artificial intelligence source list to facilitate the procurement of Canada’s requirements for Artificial intelligence (AI).

After research and consultation with industry, academia, and civil society, Canada identified 3 AI categories and business outcomes to inform this method of supply:

Insights and predictive modelling

Machine interactions

Cognitive automation

…

PSPC is focused only on procuring AI. If there are guidelines on their website for its use, I did not find them.

I found one more government agency that might have some information about artificial intelligence and guidelines for its use, Shared Services Canada,

Shared Services Canada (SSC) delivers digital services to Government of Canada organizations. We provide modern, secure and reliable IT services so federal organizations can deliver digital programs and services that meet Canadians needs.

…

Since the Minister of Digital Government, Joyce Murray, is listed on the homepage, I was hopeful that I could find out more about AI and governance and whether or not the Canadian Digital Service was associated with this government ministry/agency. I was frustrated on both counts.

To sum up, I could find no information dated after March 2019 on a Canadian government website about Canada, its government, and its plans for AI, especially responsible management/governance of AI, although I have found guidelines, expectations, and consequences for non-compliance. (Should anyone know which government agency has up-to-date information on its responsible use of AI, please let me know in the Comments.)

Canadian Institute for Advanced Research (CIFAR)

The first mention of the Pan-Canadian Artificial Intelligence Strategy is in my analysis of the Canadian federal budget in a March 24, 2017 posting. Briefly, CIFAR received a big chunk of that money. Here’s more about the strategy from the CIFAR Pan-Canadian AI Strategy homepage,

In 2017, the Government of Canada appointed CIFAR to develop and lead a $125 million Pan-Canadian Artificial Intelligence Strategy, the world’s first national AI strategy.

CIFAR works in close collaboration with Canada’s three national AI Institutes — Amii in Edmonton, Mila in Montreal, and the Vector Institute in Toronto, as well as universities, hospitals and organizations across the country.

The objectives of the strategy are to:

Attract and retain world-class AI researchers by increasing the number of outstanding AI researchers and skilled graduates in Canada.

Foster a collaborative AI ecosystem by establishing interconnected nodes of scientific excellence in Canada’s three major centres for AI: Edmonton, Montreal, and Toronto.

Advance national AI initiatives by supporting a national research community on AI through training programs, workshops, and other collaborative opportunities.

Understand the societal implications of AI by developing global thought leadership on the economic, ethical, policy, and legal implications [emphasis mine] of advances in AI.

Responsible AI at CIFAR

You can find Responsible AI in a webspace devoted to what they have called, AI & Society. Here’s more from the homepage,

CIFAR is leading global conversations about AI’s impact on society.

The AI & Society program, one of the objectives of the CIFAR Pan-Canadian AI Strategy, develops global thought leadership on the economic, ethical, political, and legal implications of advances in AI. These dialogues deliver new ways of thinking about issues, and drive positive change in the development and deployment of responsible AI.

…

Under the category of building an AI World I found this (from CIFAR’s AI & Society homepage),

BUILDING AN AI WORLD

Explore the landscape of global AI strategies.

Canada was the first country in the world to announce a federally-funded national AI strategy, prompting many other nations to follow suit. CIFAR published two reports detailing the global landscape of AI strategies.

…

I skimmed through the second report and it seems more like a comparative study of various countries’ AI strategies than an overview of responsible use of AI.

Final comments about Responsible AI in Canada and the new reports

I’m glad to see there’s interest in Responsible AI but based on my adventures searching the Canadian government websites and the Pan-Canadian AI Strategy webspace, I’m left feeling hungry for more.

I didn’t find any details about how AI is being integrated into government departments and for what uses. I’d like to know and I’d like to have some say about how it’s used and how the inevitable mistakes will be dealt with.

The great unwashed

What I’ve found is high minded, but, as far as I can tell, there’s absolutely no interest in talking to the ‘great unwashed’. Those of us who are not experts are being left out of these earlier stage conversations.

I’m sure we’ll be consulted at some point but it will be long past the time when our opinions and insights could have impact and help us avoid the problems that experts tend not to see. What we’ll be left with is protest and anger on our part and, finally, grudging admissions and corrections of errors on the government’s part.

Let’s take this for an example. The Phoenix Pay System was implemented in its first phase on Feb. 24, 2016. As I recall, problems developed almost immediately. The second phase of implementation started April 21, 2016. In May 2016 the government hired consultants to fix the problems. On November 29, 2016 the government minister, Judy Foote, admitted a mistake had been made. In February 2017 the government hired consultants to establish what lessons they might learn. As of February 15, 2018 the pay problems backlog amounted to 633,000. Source: James Bagnall, Feb. 23, 2018 ‘timeline’ for Ottawa Citizen

Do take a look at the timeline, there’s more to it than what I’ve written here and I’m sure there’s more to the Phoenix Pay System debacle than a failure to listen to warnings from those who would be directly affected. It’s fascinating though how often a failure to listen presages far deeper problems with a project.

The Canadian government, under both a Conservative and a Liberal government, contributed to the Phoenix Debacle but it seems the gravest concern is with senior government bureaucrats. You might think things have changed since this recounting of the affair in a June 14, 2018 article by Michelle Zilio for the Globe and Mail,

The three public servants blamed by the Auditor-General for the Phoenix pay system problems were not fired for mismanagement of the massive technology project that botched the pay of tens of thousands of public servants for more than two years.

Marie Lemay, deputy minister for Public Services and Procurement Canada (PSPC), said two of the three Phoenix executives were shuffled out of their senior posts in pay administration and did not receive performance bonuses for their handling of the system. Those two employees still work for the department, she said. Ms. Lemay, who refused to identify the individuals, said the third Phoenix executive retired.

In a scathing report last month, Auditor-General Michael Ferguson blamed three “executives” – senior public servants at PSPC, which is responsible for Phoenix − for the pay system’s “incomprehensible failure.” [emphasis mine] He said the executives did not tell the then-deputy minister about the known problems with Phoenix, leading the department to launch the pay system despite clear warnings it was not ready.

Speaking to a parliamentary committee on Thursday, Ms. Lemay said the individuals did not act with “ill intent,” noting that the development and implementation of the Phoenix project were flawed. She encouraged critics to look at the “bigger picture” to learn from all of Phoenix’s failures.

…

Mr. Ferguson, whose office spoke with the three Phoenix executives as a part of its reporting, said the officials prioritized some aspects of the pay-system rollout, such as schedule and budget, over functionality. He said they also cancelled a pilot implementation project with one department that would have helped it detect problems indicating the system was not ready.

…

Mr. Ferguson’s report warned the Phoenix problems are indicative of “pervasive cultural problems” [emphasis mine] in the civil service, which he said is fearful of making mistakes, taking risks and conveying “hard truths.”

…

Speaking to the same parliamentary committee on Tuesday, Privy Council Clerk [emphasis mine] Michael Wernick challenged Mr. Ferguson’s assertions, saying his chapter on the federal government’s cultural issues is an “opinion piece” containing “sweeping generalizations.”

…

The Privy Council Clerk is the top level bureaucrat (and there is only one such clerk) in the civil/public service and I think his quotes are quite telling of “pervasive cultural problems.” There’s a new Privy Council Clerk but from what I can tell he was well trained by his predecessor.

Do* we really need senior government bureaucrats?

I now have an example of bureaucratic interference, specifically with the Global Public Health Intelligence Network (GPHIN) where it would seem that not much has changed, from a December 26, 2020 article by Grant Robertson for the Globe & Mail,

When Canada unplugged support for its pandemic alert system [GPHIN] last year, it was a symptom of bigger problems inside the Public Health Agency. Experienced scientists were pushed aside, expertise was eroded, and internal warnings went unheeded, which hindered the department’s response to COVID-19

As a global pandemic began to take root in February, China held a series of backchannel conversations with Canada, lobbying the federal government to keep its borders open.

With the virus already taking a deadly toll in Asia, Heng Xiaojun, the Minister Counsellor for the Chinese embassy, requested a call with senior Transport Canada officials. Over the course of the conversation, the Chinese representatives communicated Beijing’s desire that flights between the two countries not be stopped because it was unnecessary.

“The Chinese position on the continuation of flights was reiterated,” say official notes taken from the call. “Mr. Heng conveyed that China is taking comprehensive measures to combat the coronavirus.”

Canadian officials seemed to agree, since no steps were taken to restrict or prohibit travel. To the federal government, China appeared to have the situation under control and the risk to Canada was low. Before ending the call, Mr. Heng thanked Ottawa for its “science and fact-based approach.”

…

It was a critical moment in the looming pandemic, but the Canadian government lacked the full picture, instead relying heavily on what Beijing was choosing to disclose to the World Health Organization (WHO). Ottawa’s ability to independently know what was going on in China – on the ground and inside hospitals – had been greatly diminished in recent years.

Canada once operated a robust pandemic early warning system and employed a public-health doctor based in China who could report back on emerging problems. But it had largely abandoned those international strategies over the past five years, and was no longer as plugged-in.

By late February [2020], Ottawa seemed to be taking the official reports from China at their word, stating often in its own internal risk assessments that the threat to Canada remained low. But inside the Public Health Agency of Canada (PHAC), rank-and-file doctors and epidemiologists were growing increasingly alarmed at how the department and the government were responding.

“The team was outraged,” one public-health scientist told a colleague in early April, in an internal e-mail obtained by The Globe and Mail, criticizing the lack of urgency shown by Canada’s response during January, February and early March. “We knew this was going to be around for a long time, and it’s serious.”

China had locked down cities and restricted travel within its borders. Staff inside the Public Health Agency believed Beijing wasn’t disclosing the whole truth about the danger of the virus and how easily it was transmitted. “The agency was just too slow to respond,” the scientist said. “A sane person would know China was lying.”

It would later be revealed that China’s infection and mortality rates were played down in official records, along with key details about how the virus was spreading.

But the Public Health Agency, which was created after the 2003 SARS crisis to bolster the country against emerging disease threats, had been stripped of much of its capacity to gather outbreak intelligence and provide advance warning by the time the pandemic hit.

The Global Public Health Intelligence Network, an early warning system known as GPHIN that was once considered a cornerstone of Canada’s preparedness strategy, had been scaled back over the past several years, with resources shifted into projects that didn’t involve outbreak surveillance.

However, a series of documents obtained by The Globe during the past four months, from inside the department and through numerous Access to Information requests, show the problems that weakened Canada’s pandemic readiness run deeper than originally thought. Pleas from the international health community for Canada to take outbreak detection and surveillance much more seriously were ignored by mid-level managers [emphasis mine] inside the department. A new federal pandemic preparedness plan – key to gauging the country’s readiness for an emergency – was never fully tested. And on the global stage, the agency stopped sending experts [emphasis mine] to international meetings on pandemic preparedness, instead choosing senior civil servants with little or no public-health background [emphasis mine] to represent Canada at high-level talks, The Globe found.

…

The curtailing of GPHIN and allegations that scientists had become marginalized within the Public Health Agency, detailed in a Globe investigation this past July [2020], are now the subject of two federal probes – an examination by the Auditor-General of Canada and an independent federal review, ordered by the Minister of Health.

Those processes will undoubtedly reshape GPHIN and may well lead to an overhaul of how the agency functions in some areas. The first steps will be identifying and fixing what went wrong. With the country now topping 535,000 cases of COVID-19 and more than 14,700 dead, there will be lessons learned from the pandemic.

Prime Minister Justin Trudeau has said he is unsure what role added intelligence [emphasis mine] could have played in the government’s pandemic response, though he regrets not bolstering Canada’s critical supplies of personal protective equipment sooner. But providing the intelligence to make those decisions early is exactly what GPHIN was created to do – and did in previous outbreaks.

Epidemiologists have described in detail to The Globe how vital it is to move quickly and decisively in a pandemic. Acting sooner, even by a few days or weeks in the early going, and throughout, can have an exponential impact on an outbreak, including deaths. Countries such as South Korea, Australia and New Zealand, which have fared much better than Canada, appear to have acted faster in key tactical areas, some using early warning information they gathered. As Canada prepares itself in the wake of COVID-19 for the next major health threat, building back a better system becomes paramount.

…

If you have time, do take a look at Robertson’s December 26, 2020 article and the July 2020 Globe investigation. As both articles make clear, senior bureaucrats whose chief attribute seems to have been longevity took over, reallocated resources, drove out experts, and crippled the few remaining experts in the system with a series of bureaucratic demands while taking trips to attend meetings (in desirable locations) for which they had no significant or useful input.

The Phoenix and GPHIN debacles bear a resemblance in that senior bureaucrats took over and in a state of blissful ignorance made a series of disastrous decisions bolstered by politicians who seem to neither understand nor care much about the outcomes.

If you think I’m being harsh, watch Canadian Broadcasting Corporation (CBC) reporter Rosemary Barton interview Prime Minister Trudeau for a 2020 year-end interview (Note: There are some commercials). Then, pay special attention to Trudeau’s answer to the first question,

Responsible AI, eh?

Based on the massive mishandling of the Phoenix Pay System implementation, where top bureaucrats did not follow basic and well-established information services procedures, and the mismanagement of the Global Public Health Intelligence Network by top-level bureaucrats, I’m not sure I have a lot of confidence in any Canadian government claims about a responsible approach to using artificial intelligence.

Unfortunately, it doesn’t matter as implementation is most likely already taking place here in Canada.

Enough with the pessimism. I feel it’s necessary to end this on a mildly positive note. Hurray to the government employees who worked through the Phoenix Pay System debacle, the current and former GPHIN experts who continued to sound warnings, and all those people striving to make true the principles of ‘Peace, Order, and Good Government’, the bedrock principles of the Canadian Parliament.

A lot of mistakes have been made but we also do make a lot of good decisions.

*’Doe’ changed to ‘Do’ on May 14, 2021.