This supremacy refers to an engineering milestone, and an October 23, 2019 news item on ScienceDaily announces that the milestone has been reached,

Researchers in UC [University of California] Santa Barbara/Google scientist John Martinis’ group have made good on their claim to quantum supremacy. Using 53 entangled quantum bits (“qubits”), their Sycamore computer has taken on — and solved — a problem considered intractable for classical computers.

…

An October 23, 2019 UC Santa Barbara news release (also on EurekAlert) by Sonia Fernandez, which originated the news item, delves further into the work,

“A computation that would take 10,000 years on a classical supercomputer took 200 seconds on our quantum computer,” said Brooks Foxen, a graduate student researcher in the Martinis Group. “It is likely that the classical simulation time, currently estimated at 10,000 years, will be reduced by improved classical hardware and algorithms, but, since we are currently 1.5 trillion times faster, we feel comfortable laying claim to this achievement.”
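As a rough check on the two times quoted above, the sketch below divides 10,000 years by 200 seconds. The 365.25-day year and the variable names are assumptions made for illustration; they are not from the news release. On those assumptions the ratio comes out near 1.6 billion, which is smaller than the "1.5 trillion" in the quotation. The news release does not say how that figure was derived.

```python
# Rough ratio of the two run times quoted in the news release.
# Assumption: a year is 365.25 days; the names below are illustrative only.
SECONDS_PER_YEAR = 365.25 * 24 * 3600

classical_seconds = 10_000 * SECONDS_PER_YEAR  # "10,000 years"
quantum_seconds = 200                          # "200 seconds"

speedup = classical_seconds / quantum_seconds
print(f"speedup is roughly {speedup:.3e}")
```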

The feat is outlined in a paper in the journal Nature.

The milestone comes after roughly two decades of quantum computing research conducted by Martinis and his group, from the development of a single superconducting qubit to systems including architectures of 72 and, with Sycamore, 54 qubits (one didn’t perform) that take advantage of the awe-inspiring and bizarre properties of quantum mechanics.

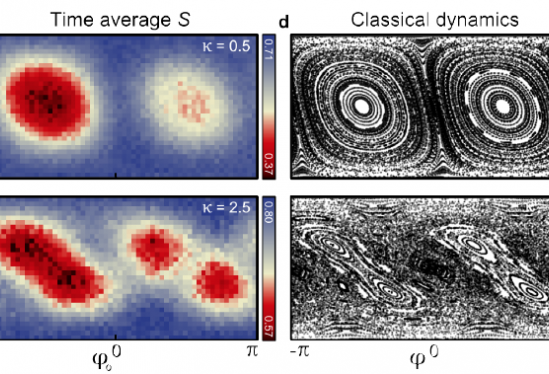

“The algorithm was chosen to emphasize the strengths of the quantum computer by leveraging the natural dynamics of the device,” said Ben Chiaro, another graduate student researcher in the Martinis Group. That is, the researchers wanted to test the computer’s ability to hold and rapidly manipulate a vast amount of complex, unstructured data.

“We basically wanted to produce an entangled state involving all of our qubits as quickly as we can,” Foxen said, “and so we settled on a sequence of operations that produced a complicated superposition state that, when measured, returns a bitstring with a probability determined by the specific sequence of operations used to prepare that particular superposition.” The exercise, which was to verify that the circuit’s output corresponds to the sequence used to prepare the state, sampled the quantum circuit a million times in just a few minutes, exploring all possibilities before the system could lose its quantum coherence.

‘A complex superposition state’

“We performed a fixed set of operations that entangles 53 qubits into a complex superposition state,” Chiaro explained. “This superposition state encodes the probability distribution. For the quantum computer, preparing this superposition state is accomplished by applying a sequence of tens of control pulses to each qubit in a matter of microseconds. We can prepare and then sample from this distribution by measuring the qubits a million times in 200 seconds.”

“For classical computers, it is much more difficult to compute the outcome of these operations because it requires computing the probability of being in any one of the 2^53 possible states, where the 53 comes from the number of qubits — the exponential scaling is why people are interested in quantum computing to begin with,” Foxen said. “This is done by matrix multiplication, which is expensive for classical computers as the matrices become large.”
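To give a sense of the scale Foxen describes, here is a small sketch of how much memory it would take just to store one amplitude for each of the 2^53 states. It assumes a brute-force state-vector simulation with 8-byte (single-precision complex) amplitudes. Both choices are illustrative assumptions; the news release does not describe the classical simulation method in this detail.

```python
# Memory needed to hold a full 53-qubit state vector.
# Assumptions: brute-force state-vector storage, 8 bytes per amplitude
# (two 4-byte floats for the real and imaginary parts).
n_qubits = 53
n_states = 2 ** n_qubits        # number of possible bitstrings
bytes_per_amplitude = 8

total_bytes = n_states * bytes_per_amplitude
petabytes = total_bytes / 1e15  # decimal petabytes

print(f"{n_states:,} states, about {petabytes:.0f} PB for the amplitudes alone")
```

Each added qubit doubles both numbers, which is the exponential scaling mentioned in the quotation.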

According to the new paper, the researchers used a method called cross-entropy benchmarking to compare the quantum circuit’s output (a “bitstring”) to its “corresponding ideal probability computed via simulation on a classical computer” to ascertain that the quantum computer was working correctly.
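The idea behind cross-entropy benchmarking can be sketched on a toy scale. The Nature paper's linear estimator is F = 2^n × (mean ideal probability of the observed bitstrings) − 1. The code below is only an illustration under stated assumptions: a random 10-qubit state stands in for the output of a random circuit, the function names are hypothetical, and nothing here reproduces the actual experiment. Bitstrings drawn from the ideal distribution should score near 1, while uniformly random bitstrings (a stand-in for a device that produces pure noise) should score near 0.

```python
import random

def random_state_probs(n_qubits, rng):
    """Bitstring probabilities of a random pure state (toy stand-in for a circuit)."""
    dim = 2 ** n_qubits
    # Normalized complex Gaussian amplitudes give a random quantum state.
    amps = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(dim)]
    norm = sum(abs(a) ** 2 for a in amps)
    return [abs(a) ** 2 / norm for a in amps]

def linear_xeb(probs, samples):
    """Linear XEB estimate: F = 2^n * mean(P_ideal(x_i)) - 1."""
    dim = len(probs)
    return dim * sum(probs[x] for x in samples) / len(samples) - 1

rng = random.Random(0)  # fixed seed so the run is repeatable
probs = random_state_probs(10, rng)
n_samples = 20_000

# "Working" device: bitstrings follow the ideal distribution.
ideal_samples = rng.choices(range(len(probs)), weights=probs, k=n_samples)
# "Broken" device: bitstrings are uniformly random.
noise_samples = [rng.randrange(len(probs)) for _ in range(n_samples)]

f_ideal = linear_xeb(probs, ideal_samples)
f_noise = linear_xeb(probs, noise_samples)
print(f"ideal sampling: F = {f_ideal:.2f}; uniform noise: F = {f_noise:.2f}")
```

The estimator needs the ideal probabilities, which is why the comparison depends on a classical simulation of the same circuit.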

“We made a lot of design choices in the development of our processor that are really advantageous,” said Chiaro. Among these advantages, he said, are the ability to experimentally tune the parameters of the individual qubits as well as their interactions.

While the experiment was chosen as a proof-of-concept for the computer, the research has resulted in a very real and valuable tool: a certified random number generator. Useful in a variety of fields, random numbers can ensure that encrypted keys can’t be guessed, or that a sample from a larger population is truly representative, leading to optimal solutions for complex problems and more robust machine learning applications. The speed with which the quantum circuit can produce its randomized bit string is so great that there is no time to analyze and “cheat” the system.

“Quantum mechanical states do things that go beyond our day-to-day experience and so have the potential to provide capabilities and application that would otherwise be unattainable,” commented Joe Incandela, UC Santa Barbara’s vice chancellor for research. “The team has demonstrated the ability to reliably create and repeatedly sample complicated quantum states involving 53 entangled elements to carry out an exercise that would take millennia to do with a classical supercomputer. This is a major accomplishment. We are at the threshold of a new era of knowledge acquisition.”

Looking ahead

With an achievement like “quantum supremacy,” it’s tempting to think that the UC Santa Barbara/Google researchers will plant their flag and rest easy. But for Foxen, Chiaro, Martinis and the rest of the UCSB/Google AI Quantum group, this is just the beginning.

“It’s kind of a continuous improvement mindset,” Foxen said. “There are always projects in the works.” In the near term, further improvements to these “noisy” qubits may enable the simulation of interesting phenomena in quantum mechanics, such as thermalization, or the vast amount of possibility in the realms of materials and chemistry.

In the long term, however, the scientists are always looking to improve coherence times, or, at the other end, to detect and fix errors, which would take many additional qubits per qubit being checked. These efforts have been running parallel to the design and build of the quantum computer itself, and ensure the researchers have a lot of work before hitting their next milestone.

“It’s been an honor and a pleasure to be associated with this team,” Chiaro said. “It’s a great collection of strong technical contributors with great leadership and the whole team really synergizes well.”

Here’s a link to and a citation for the paper,

Quantum supremacy using a programmable superconducting processor by Frank Arute, Kunal Arya, Ryan Babbush, Dave Bacon, Joseph C. Bardin, Rami Barends, Rupak Biswas, Sergio Boixo, Fernando G. S. L. Brandao, David A. Buell, Brian Burkett, Yu Chen, Zijun Chen, Ben Chiaro, Roberto Collins, William Courtney, Andrew Dunsworth, Edward Farhi, Brooks Foxen, Austin Fowler, Craig Gidney, Marissa Giustina, Rob Graff, Keith Guerin, Steve Habegger, Matthew P. Harrigan, Michael J. Hartmann, Alan Ho, Markus Hoffmann, Trent Huang, Travis S. Humble, Sergei V. Isakov, Evan Jeffrey, Zhang Jiang, Dvir Kafri, Kostyantyn Kechedzhi, Julian Kelly, Paul V. Klimov, Sergey Knysh, Alexander Korotkov, Fedor Kostritsa, David Landhuis, Mike Lindmark, Erik Lucero, Dmitry Lyakh, Salvatore Mandrà, Jarrod R. McClean, Matthew McEwen, Anthony Megrant, Xiao Mi, Kristel Michielsen, Masoud Mohseni, Josh Mutus, Ofer Naaman, Matthew Neeley, Charles Neill, Murphy Yuezhen Niu, Eric Ostby, Andre Petukhov, John C. Platt, Chris Quintana, Eleanor G. Rieffel, Pedram Roushan, Nicholas C. Rubin, Daniel Sank, Kevin J. Satzinger, Vadim Smelyanskiy, Kevin J. Sung, Matthew D. Trevithick, Amit Vainsencher, Benjamin Villalonga, Theodore White, Z. Jamie Yao, Ping Yeh, Adam Zalcman, Hartmut Neven & John M. Martinis. Nature volume 574, pages 505–510 (2019) DOI: https://doi.org/10.1038/s41586-019-1666-5 Issue Date 24 October 2019

This paper appears to be open access.
