I gather the University of California at Santa Barbara’s (UCSB) Center for Nanotechnology in Society is ‘sunsetting’ as its funding runs out. A Nov. 9, 2016 UCSB news release by Brandon Fastman describes the center’s ‘swan song’,

After more than a decade, research at the UCSB Center for Nanotechnology in Society has provided new and deep knowledge of how technological innovation and social change impact one another. Now, as the national center reaches the end of its term, its three primary research groups have published synthesis reports that bring together important findings from their 11 years of activity.

The reports, which include policy recommendations, are available for free download at the CNS web site at

http://www.cns.ucsb.edu/irg-synthesis-reports.

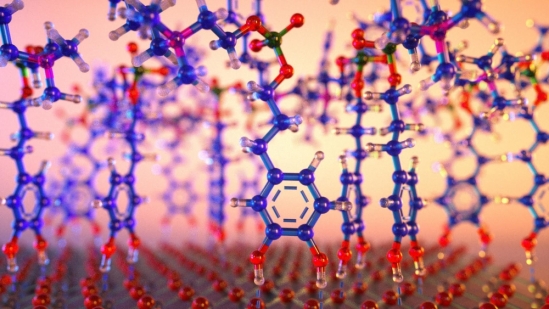

The ever-increasing ability of scientists to manipulate matter on the molecular level brings with it the potential for science fiction-like technologies such as nanoelectronic sensors that would entail “merging tissue with electronics in a way that it becomes difficult to determine where the tissue ends and the electronics begin,” according to a Harvard chemist in a recent CQ Researcher report. While the life-altering ramifications of such technologies are clear, it is less clear how they might impact the larger society to which they are introduced.

CNS research, as detailed in the reports, addresses such gaps in knowledge. For instance, when anthropologist Barbara Herr Harthorn and her collaborators at the UCSB Center for Nanotechnology in Society (CNS-UCSB) convened public deliberations to discuss the promises and perils of health and human enhancement nanotechnologies, they thought that participants might be concerned about medical risks. However, that is not exactly what they found.

Participants were less worried about medical or technological mishaps than about the equitable distribution of the risks and benefits of new technologies and fair procedures for addressing potential problems. That is, they were unconvinced that citizens across the socioeconomic spectrum would share equal access to the benefits of therapies or equal exposure to their pitfalls.

In describing her work, Harthorn explained, “Intuitive assumptions of experts and practitioners about public perceptions and concerns are insufficient to understanding the societal contexts of technologies. Relying on intuition often leads to misunderstandings of social and institutional realities. CNS-UCSB has attempted to fill in the knowledge gaps through methodologically sophisticated empirical and theoretical research.”

In her role as Director of CNS-UCSB, Harthorn has overseen a larger effort to promote the responsible development of sophisticated materials and technologies seen as central to the nation’s economic future. By pursuing this goal, researchers at CNS-UCSB, which closed its doors at the end of the summer, have advanced the role for the social, economic, and behavioral sciences in understanding technological innovation.

Harthorn has spent the past 11 years trying to understand public expectations, values, beliefs, and perceptions regarding nanotechnologies. Along with conducting deliberations, she has worked with toxicologists and engineers to examine the environmental and occupational risks of nanotechnologies, determine gaps in the U.S. regulatory system, and survey nanotechnology experts. Work has also expanded to comparative studies of other emerging technologies such as shale oil and gas extraction (fracking).

Along with Harthorn’s research group on risk perception and social response, CNS-UCSB housed two other main research groups. One, led by sociologist Richard Appelbaum, studied the impacts of nanotechnology on the global economy. The other, led by historian Patrick McCray, studied the technologies, communities, and individuals that have shaped the direction of nanotechnology research.

Appelbaum’s research program included studying how state policies regarding nanotechnology, especially in China and Latin America, have impacted commercialization. Research trips to China yielded a greater understanding of that nation’s research culture and its capacity to produce original intellectual property. He also studied the role of international collaboration in spurring technological innovation. As part of this research, his collaborators surveyed and interviewed international STEM graduate students in the United States in order to understand the factors that influence their choice of whether to remain abroad or return home.

In examining the history of nanotechnology, McCray’s group explained how the microelectronics industry provided a template for what became known as nanotechnology, examined educational policies aimed at training a nano-workforce, and produced a history of the scanning tunneling microscope. They also penned award-winning monographs, including McCray’s book, The Visioneers: How a Group of Elite Scientists Pursued Space Colonies, Nanotechnologies, and a Limitless Future.

Reaching the Real World

Funded as a National Center by the US National Science Foundation in 2005, CNS-UCSB was explicitly intended to enhance the understanding of the relationship between new technologies and their societal context. After more than a decade of funding, CNS-UCSB research has provided a deep understanding of the relationship between technological innovation and social change.

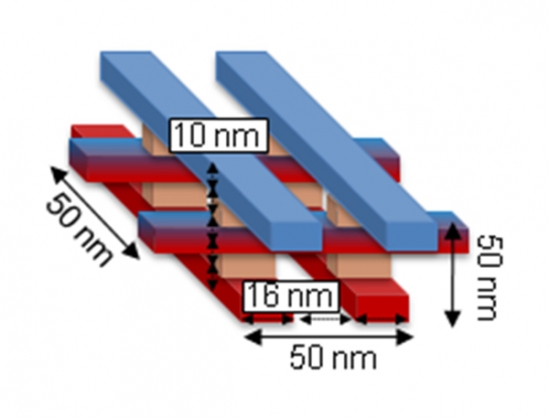

New developments in nanotechnology, an area of research that has garnered $24 billion in funding from the U.S. federal government since 2001, impact sectors as far-ranging as agriculture, medicine, energy, defense, and construction. They pose great challenges for policymakers and regulators, who must consider questions of equity, sustainability, occupational and environmental health and safety, economic and educational policy, disruptions to privacy, security, and even what it means to be human. (A nanometer is roughly 10,000 times smaller than the diameter of a human hair.) Nanoscale materials are already integrated into food packaging, electronics, solar cells, cosmetics, and pharmaceuticals. They are far along in development for drugs that can target specific cells, microscopic spying devices, and quantum computers.

Given such real-world applications, it was important to CNS researchers that the results of their work not remain confined within the halls of academia. Therefore, they have delivered testimony to Congress, federal and state agencies (including the National Academies of Science, the Centers for Disease Control and Prevention, the President’s Council of Advisors on Science and Technology, the U.S. Presidential Bioethics Commission and the National Nanotechnology Initiative), policy outfits (including the Washington Center for Equitable Growth), and international agencies (including the World Bank, European Commission, and World Economic Forum). They’ve collaborated with nongovernmental organizations. They’ve composed policy briefs and op-eds, and their work has been covered by numerous news organizations including, recently, NPR, The New Yorker, and Forbes. They have also given many hundreds of lectures to audiences in community groups, schools, and museums.

Policy Options

Most notably, in their final act before the center closed, each of the three primary research groups published synthesis reports that bring together important findings from their 11 years of activity. Their titles are:

Exploring Nanotechnology’s Origins, Institutions, and Communities: A Ten Year Experiment in Large Scale Collaborative STS Research

Globalization and Nanotechnology: The Role of State Policy and International Collaboration

Understanding Nanotechnologies’ Risks and Benefits: Emergence, Expertise and Upstream Participation

A sampling of key policy recommendations follows:

1. Public acceptability of nanotechnologies is driven by benefit perception, the type of application, and the risk messages transmitted from trusted sources and their stability over time; therefore, transparent and responsible risk communication is a critical aspect of acceptability.

2. Social risks, particularly issues of equity and politics, are primary, not secondary, drivers of perception and need to be fully addressed in any new technology development. We have devoted particular attention to studying how gender and race/ethnicity affect both public and expert risk judgments.

3. State policies aimed at fostering science and technology development should clearly continue to emphasize basic research, but not to the exclusion of supporting promising innovative payoffs. The National Nanotechnology Initiative, with its overwhelming emphasis on basic research, would likely achieve greater success in spawning thriving businesses and commercialization by investing more in capital programs such as the Small Business Innovation Research (SBIR) and Small Business Technology Transfer (STTR) programs, self-described as “America’s seed fund.”

4. While nearly half of all international STEM graduate students would like to stay in the U.S. upon graduation, fully 40 percent are undecided — and a main barrier is current U.S. immigration policy.

5. Although representatives from the nanomaterials industry demonstrate relatively high perceived risk regarding engineered nanomaterials, they likewise demonstrate low sensitivity to variance in risks across types of engineered nanomaterials, and a strong disinclination to regulation. This situation puts workers at significant risk and probably requires regulatory action now (beyond the currently favored voluntary or ‘soft law’ approaches).

6. The complex nature of technological ecosystems translates into a variety of actors essential for successful innovation. One species is the Visioneer, a person who blends engineering experience with a transformative vision of the technological future and a willingness to promote this vision to the public and policy makers.

Leaving a Legacy

Along with successful outreach efforts, CNS-UCSB also flourished when measured by typical academic metrics, including nearly 400 publications and 1,200 talks.

In addition to producing groundbreaking interdisciplinary research, CNS-UCSB also produced innovative educational programs, reaching 200 professionals-in-training from the undergraduate to postdoctoral levels. The Center’s educational centerpiece was a graduate fellowship program, referred to as “magical” by an NSF reviewer, that integrated doctoral students from disciplines across the UCSB campus into ongoing social science research projects.

For social scientists, working side-by-side with science and engineering students gave them an appreciation for the methods, culture, and ethics of their colleagues in different disciplines. It also led to methodological innovation. For their part, scientists and engineers were able to understand the larger context of their work at the bench.

UCSB graduates who participated in CNS’s educational programs have gone on to work as postdocs and professors at universities (including MIT, Stanford, and UPenn), as policy experts (at organizations like the Science and Technology Policy Institute and the Canadian Institute for Advanced Research), as researchers at government agencies (like the National Institute of Standards and Technology), and at nonprofits (like the Kauffman Foundation) and NGOs. Others work in industry, and some have become entrepreneurs, starting their own businesses.

CNS has spawned lines of research that will continue at UCSB and the institutions of collaborators around the world, but its most enduring legacy will be the students it trained. They bring a true understanding of the complex interconnections between technology and society, along with an intellectual toolkit for examining them, to every sector of the economy, and they will continue to pursue a world that is as just as it is technologically advanced.

5. Although representatives from the nanomaterials industry demonstrate relatively high perceived risk regarding engineered nanomaterials, they likewise demonstrate low sensitivity to variance in risks across type of engineered nanomaterials, and a strong disinclination to regulation. This situation puts workers at significant risk and probably requires regulatory action now (beyond the currently favored voluntary or ‘soft law’ approaches).

Without having read the documents, I’m not sure how to respond, but I do have a question: just how much regulation are they suggesting?

I offer all of the people associated with the center my thanks for all their hard work and my gratitude for the support I received from the center when I presented at the Society for the Study of Nanotechnologies and Other Emerging Technology (S.Net) in 2012. I’m glad to see they’re going out with a bang.
