I have briefly speculated about the importance of touch elsewhere (see my July 19, 2019 posting regarding BlocKit and blockchain; scroll down about 50% of the way) but this upcoming news bit and the one following it put a different spin on the importance of touch.

Exceptional sense of touch

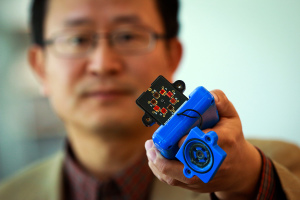

Robots need a sense of touch to perform their tasks and a July 18, 2019 National University of Singapore press release (also on EurekAlert) announces work on an improved sense of touch,

Robots and prosthetic devices may soon have a sense of touch equivalent to, or better than, the human skin with the Asynchronous Coded Electronic Skin (ACES), an artificial nervous system developed by a team of researchers at the National University of Singapore (NUS).

The new electronic skin system achieved ultra-high responsiveness and robustness to damage, and can be paired with any kind of sensor skin layers to function effectively as an electronic skin.

The innovation, achieved by Assistant Professor Benjamin Tee and his team from the Department of Materials Science and Engineering at the NUS Faculty of Engineering, was first reported in prestigious scientific journal Science Robotics on 18 July 2019.

Faster than the human sensory nervous system

“Humans use our sense of touch to accomplish almost every daily task, such as picking up a cup of coffee or making a handshake. Without it, we will even lose our sense of balance when walking. Similarly, robots need to have a sense of touch in order to interact better with humans, but robots today still cannot feel objects very well,” explained Asst Prof Tee, who has been working on electronic skin technologies for over a decade in hope of giving robots and prosthetic devices a better sense of touch.

Drawing inspiration from the human sensory nervous system, the NUS team spent a year and a half developing a sensor system that could potentially perform better. While the ACES electronic nervous system detects signals like the human sensory nervous system, it is made up of a network of sensors connected via a single electrical conductor, unlike the nerve bundles in the human skin. It is also unlike existing electronic skins which have interlinked wiring systems that can make them sensitive to damage and difficult to scale up.

Elaborating on the inspiration, Asst Prof Tee, who also holds appointments in the NUS Department of Electrical and Computer Engineering, NUS Institute for Health Innovation & Technology (iHealthTech), N.1 Institute for Health and the Hybrid Integrated Flexible Electronic Systems (HiFES) programme, said, “The human sensory nervous system is extremely efficient, and it works all the time to the extent that we often take it for granted. It is also very robust to damage. Our sense of touch, for example, does not get affected when we suffer a cut. If we can mimic how our biological system works and make it even better, we can bring about tremendous advancements in the field of robotics where electronic skins are predominantly applied.”

ACES can detect touches more than 1,000 times faster than the human sensory nervous system. For example, it is capable of differentiating physical contacts between different sensors in less than 60 nanoseconds – the fastest ever achieved for an electronic skin technology – even with large numbers of sensors. ACES-enabled skin can also accurately identify the shape, texture and hardness of objects within 10 milliseconds, ten times faster than the blinking of an eye. This is enabled by the high fidelity and capture speed of the ACES system.

The ACES platform can also be designed to achieve high robustness to physical damage, an important property for electronic skins because they come into frequent physical contact with the environment. Unlike the current system used to interconnect sensors in existing electronic skins, all the sensors in ACES can be connected to a common electrical conductor with each sensor operating independently. This allows ACES-enabled electronic skins to continue functioning as long as there is one connection between the sensor and the conductor, making them less vulnerable to damage.
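To make the wiring point concrete, here is a minimal sketch of my own (it is not from the NUS release or the paper). It assumes each sensor has a few redundant links to the common conductor and counts a sensor as working while at least one link survives. For contrast it uses a hypothetical daisy chain, which is my simplification and not necessarily how existing electronic skins are wired. The function names and link labels are made up for illustration.

```python
def alive_on_shared_conductor(links, damaged):
    """Return the sensors that still reach the common conductor.

    links:   {sensor name: set of link ids joining it to the conductor}
    damaged: set of link ids that have been cut
    A sensor keeps working while at least one of its links is intact.
    """
    return {sensor for sensor, ids in links.items() if ids - damaged}


def alive_on_serial_chain(chain, broken_after):
    """Return the sensors still readable on a hypothetical daisy chain.

    chain:        sensor names in wiring order, read out from index 0
    broken_after: index of the sensor after which the wire is cut, or None
    Everything downstream of the break is lost.
    """
    if broken_after is None:
        return set(chain)
    return set(chain[: broken_after + 1])


# Four sensors, each with two illustrative links to the shared conductor.
links = {
    "s0": {"a", "b"},
    "s1": {"c", "d"},
    "s2": {"e", "f"},
    "s3": {"g", "h"},
}

# Three links are cut: s0 loses one of two, s1 loses both.
shared = alive_on_shared_conductor(links, damaged={"a", "c", "d"})
print(sorted(shared))   # s0 survives on its remaining link; s1 is lost

# A single break right after s0 on the chain removes s1, s2 and s3.
chained = alive_on_serial_chain(["s0", "s1", "s2", "s3"], broken_after=0)
print(sorted(chained))
```

In this toy model three cuts on the shared conductor cost one sensor, while one cut on the chain costs three, which is the kind of difference the release is pointing to.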

Smart electronic skins for robots and prosthetics

ACES’ simple wiring system and remarkable responsiveness even with increasing numbers of sensors are key characteristics that will facilitate the scale-up of intelligent electronic skins for Artificial Intelligence (AI) applications in robots, prosthetic devices and other human machine interfaces.

“Scalability is a critical consideration as big pieces of high performing electronic skins are required to cover the relatively large surface areas of robots and prosthetic devices,” explained Asst Prof Tee. “ACES can be easily paired with any kind of sensor skin layers, for example, those designed to sense temperatures and humidity, to create high performance ACES-enabled electronic skin with an exceptional sense of touch that can be used for a wide range of purposes,” he added.

For instance, pairing ACES with the transparent, self-healing and water-resistant sensor skin layer also recently developed by Asst Prof Tee’s team creates an electronic skin that can self-repair, like the human skin. This type of electronic skin can be used to develop more realistic prosthetic limbs that will help disabled individuals restore their sense of touch.

Other potential applications include developing more intelligent robots that can perform disaster recovery tasks or take over mundane operations such as packing of items in warehouses. The NUS team is therefore looking to further apply the ACES platform on advanced robots and prosthetic devices in the next phase of their research.

For those who like videos, the researchers have prepared this,

Here’s a link to and a citation for the paper,

A neuro-inspired artificial peripheral nervous system for scalable electronic skins by Wang Wei Lee, Yu Jun Tan, Haicheng Yao, Si Li, Hian Hian See, Matthew Hon, Kian Ann Ng, Betty Xiong, John S. Ho and Benjamin C. K. Tee. Science Robotics Vol 4, Issue 32 31 July 2019 eaax2198 DOI: 10.1126/scirobotics.aax2198 Published online first: 17 Jul 2019

This paper is behind a paywall.

Picking up a grape and holding his wife’s hand

This story comes from Canadian Broadcasting Corporation (CBC) Radio, with a six-minute story embedded in the text of a July 25, 2019 CBC Radio ‘As It Happens’ article by Sheena Goodyear,

…

The West Valley City, Utah, real estate agent [Keven Walgamott] lost his left hand in an electrical accident 17 years ago. Since then, he’s tried out a few different prosthetic limbs, but always found them too clunky and uncomfortable.

Then he decided to work with the University of Utah in 2016 to test out new prosthetic technology that mimics the sensation of human touch, allowing Walgamott to perform delicate tasks with precision — including shaking his wife’s hand.

“I extended my left hand, she came and extended hers, and we were able to feel each other with the left hand for the first time in 13 years, and it was just a marvellous and wonderful experience,” Walgamott told As It Happens guest host Megan Williams.

Walgamott, one of seven participants in the University of Utah study, was able to use an advanced prosthetic hand called the LUKE Arm to pick up an egg without cracking it, pluck a single grape from a bunch, hammer a nail, take a ring on and off his finger, fit a pillowcase over a pillow and more.

…

While performing the tasks, Walgamott was able to actually feel the items he was holding and correctly gauge the amount of pressure he needed to exert — mimicking a process the human brain does automatically.

“I was able to feel something in each of my fingers,” he said. “What I feel, I guess the easiest way to explain it, is little electrical shocks.”

Those shocks — which he describes as a kind of a tingling sensation — intensify as he tightens his grip.

“Different variations of the intensity of the electricity as I move my fingers around and as I touch things,” he said.

…

To make that [sense of touch] happen, the researchers implanted electrodes into the nerves on Walgamott’s forearm, allowing his brain to communicate with his prosthetic through a computer outside his body. That means he can move the hand just by thinking about it.

But those signals also work in reverse.

The team attached sensors to the hand of a LUKE Arm. Those sensors detect touch and positioning, and send that information to the electrodes so it can be interpreted by the brain.

…

For Walgamott, performing a series of menial tasks as a team of scientists recorded his progress was “fun to do.”

“I’d forgotten how well two hands work,” he said. “That was pretty cool.”

But it was also a huge relief from the phantom limb pain he has experienced since the accident, which he describes as a “burning sensation” in the place where his hand used to be.

…

A July 24, 2019 University of Utah news release (also on EurekAlert) provides more detail about the research,

Keven Walgamott had a good “feeling” about picking up the egg without crushing it.

What seems simple for nearly everyone else can be more of a Herculean task for Walgamott, who lost his left hand and part of his arm in an electrical accident 17 years ago. But he was testing out the prototype of a high-tech prosthetic arm with fingers that not only can move, they can move with his thoughts. And thanks to a biomedical engineering team at the University of Utah, he “felt” the egg well enough so his brain could tell the prosthetic hand not to squeeze too hard.

That’s because the team, led by U biomedical engineering associate professor Gregory Clark, has developed a way for the “LUKE Arm” (so named after the robotic hand that Luke Skywalker got in “The Empire Strikes Back”) to mimic the way a human hand feels objects by sending the appropriate signals to the brain. Their findings were published in a new paper co-authored by U biomedical engineering doctoral student Jacob George, former doctoral student David Kluger, Clark and other colleagues in the latest edition of the journal Science Robotics. A copy of the paper may be obtained by emailing robopak@aaas.org.

“We changed the way we are sending that information to the brain so that it matches the human body. And by matching the human body, we were able to see improved benefits,” George says. “We’re making more biologically realistic signals.”

That means an amputee wearing the prosthetic arm can sense the touch of something soft or hard, understand better how to pick it up and perform delicate tasks that would otherwise be impossible with a standard prosthetic with metal hooks or claws for hands.

“It almost put me to tears,” Walgamott says about using the LUKE Arm for the first time during clinical tests in 2017. “It was really amazing. I never thought I would be able to feel in that hand again.”

Walgamott, a real estate agent from West Valley City, Utah, and one of seven test subjects at the U, was able to pluck grapes without crushing them, pick up an egg without cracking it and hold his wife’s hand with a sensation in the fingers similar to that of an able-bodied person.

“One of the first things he wanted to do was put on his wedding ring. That’s hard to do with one hand,” says Clark. “It was very moving.”

Those things are accomplished through a complex series of mathematical calculations and modeling.

The LUKE Arm

The LUKE Arm has been in development for some 15 years. The arm itself is made of mostly metal motors and parts with a clear silicone “skin” over the hand. It is powered by an external battery and wired to a computer. It was developed by DEKA Research & Development Corp., a New Hampshire-based company founded by Segway inventor Dean Kamen.

Meanwhile, the U’s team has been developing a system that allows the prosthetic arm to tap into the wearer’s nerves, which are like biological wires that send signals to the arm to move. It does that thanks to an invention by U biomedical engineering Emeritus Distinguished Professor Richard A. Normann called the Utah Slanted Electrode Array. The array is a bundle of 100 microelectrodes and wires that are implanted into the amputee’s nerves in the forearm and connected to a computer outside the body. The array interprets the signals from the still-remaining arm nerves, and the computer translates them to digital signals that tell the arm to move.

But it also works the other way. Performing tasks such as picking up objects requires more than just the brain telling the hand to move. The prosthetic hand must also learn how to “feel” the object in order to know how much pressure to exert because you can’t figure that out just by looking at it.

First, the prosthetic arm has sensors in its hand that send signals to the nerves via the array to mimic the feeling the hand gets upon grabbing something. But equally important is how those signals are sent. It involves understanding how your brain deals with transitions in information when it first touches something. Upon first contact of an object, a burst of impulses runs up the nerves to the brain and then tapers off. Recreating this was a big step.

“Just providing sensation is a big deal, but the way you send that information is also critically important, and if you make it more biologically realistic, the brain will understand it better and the performance of this sensation will also be better,” says Clark.

To achieve that, Clark’s team used mathematical calculations along with recorded impulses from a primate’s arm to create an approximate model of how humans receive these different signal patterns. That model was then implemented into the LUKE Arm system.
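The "burst on first contact, then taper" idea can be sketched in a few lines. This is my own toy illustration, not the Utah team's model (which the release says was built from recorded primate nerve impulses). It assumes the stimulation pulse rate is a sustained term proportional to pressure plus a decaying burst term driven by increases in pressure. The function name, gains, decay factor and rate cap are all made-up values.

```python
def stimulation_rates(pressure, k_sustained=50.0, k_onset=200.0,
                      decay=0.5, max_rate=300.0):
    """Map a sampled pressure trace (0.0 to 1.0) to pulse rates in Hz.

    Assumed toy model:
      burst = decay * previous burst + any increase in pressure
      rate  = k_sustained * pressure + k_onset * burst, capped at max_rate
    """
    rates = []
    previous = 0.0
    burst = 0.0
    for p in pressure:
        # Only rising pressure feeds the burst; the burst then fades each step.
        burst = decay * burst + max(0.0, p - previous)
        rate = k_sustained * p + k_onset * burst
        rates.append(min(max_rate, rate))
        previous = p
    return rates


# The hand is empty for two samples, then grips and holds steady pressure.
trace = [0.0, 0.0, 1.0, 1.0, 1.0, 1.0]
print(stimulation_rates(trace))
# → [0.0, 0.0, 250.0, 150.0, 100.0, 75.0]
```

With a steady grip the rate jumps at contact and then settles toward the sustained level (50 Hz with these made-up gains), rather than staying flat the whole time as a purely pressure-proportional signal would.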

Future research

In addition to creating a prototype of the LUKE Arm with a sense of touch, the overall team is already developing a version that is completely portable and does not need to be wired to a computer outside the body. Instead, everything would be connected wirelessly, giving the wearer complete freedom.

Clark says the Utah Slanted Electrode Array is also capable of sending signals to the brain for more than just the sense of touch, such as pain and temperature, though the paper primarily addresses touch. And while their work currently has only involved amputees who lost their extremities below the elbow, where the muscles to move the hand are located, Clark says their research could also be applied to those who lost their arms above the elbow.

Clark hopes that in 2020 or 2021, three test subjects will be able to take the arm home to use, pending federal regulatory approval.

The research involves a number of institutions including the U’s Department of Neurosurgery, Department of Physical Medicine and Rehabilitation and Department of Orthopedics, the University of Chicago’s Department of Organismal Biology and Anatomy, the Cleveland Clinic’s Department of Biomedical Engineering and Utah neurotechnology companies Ripple Neuro LLC and Blackrock Microsystems. The project is funded by the Defense Advanced Research Projects Agency and the National Science Foundation.

“This is an incredible interdisciplinary effort,” says Clark. “We could not have done this without the substantial efforts of everybody on that team.”

Here’s a link to and a citation for the paper,

Biomimetic sensory feedback through peripheral nerve stimulation improves dexterous use of a bionic hand by J. A. George, D. T. Kluger, T. S. Davis, S. M. Wendelken, E. V. Okorokova, Q. He, C. C. Duncan, D. T. Hutchinson, Z. C. Thumser, D. T. Beckler, P. D. Marasco, S. J. Bensmaia and G. A. Clark. Science Robotics Vol. 4, Issue 32, eaax2352 31 July 2019 DOI: 10.1126/scirobotics.aax2352 Published online first: 24 Jul 2019

This paper is definitely behind a paywall.

The University of Utah researchers have produced a video highlighting their work,