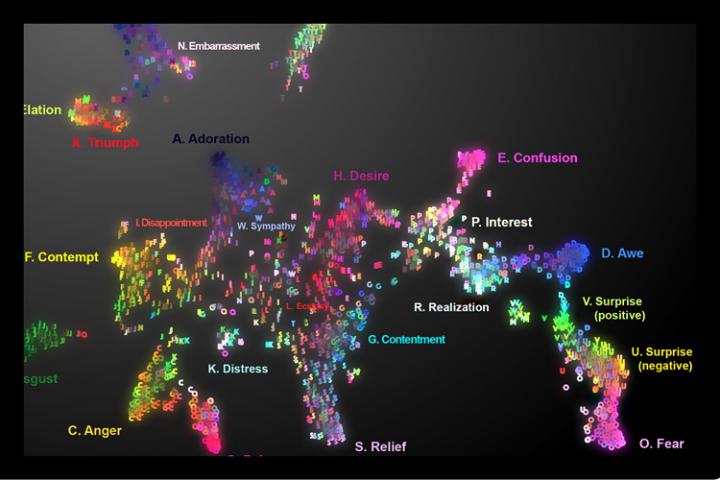

The real map, not the image of the map you see above, offers a disconcerting (for me, anyway) experience, especially since I’ve just finished reading Lisa Feldman Barrett’s 2017 book, How Emotions Are Made, where she presents her theory of ‘constructed emotion’. (There’s more about ‘constructed emotion’ later in this post.)

Moving on to the story about the ‘auditory emotion map’ in the headline, a February 4, 2019 University of California at Berkeley news release by Yasmin Anwar (also on EurekAlert but published on Feb. 5, 2019) describes the work,

Ooh, surprise! Those spontaneous sounds we make to express everything from elation (woohoo) to embarrassment (oops) say a lot more about what we’re feeling than previously understood, according to new research from the University of California, Berkeley.

Proving that a sigh is not just a sigh [a reference to the song, As Time Goes By? The lyric is “a kiss is just a kiss, a sigh is just a sigh …”], UC Berkeley scientists conducted a statistical analysis of listener responses to more than 2,000 nonverbal exclamations known as “vocal bursts” and found they convey at least 24 kinds of emotion. Previous studies of vocal bursts set the number of recognizable emotions closer to 13.

The results, recently published online in the American Psychologist journal, are demonstrated in vivid sound and color on the first-ever interactive audio map of nonverbal vocal communication.

“This study is the most extensive demonstration of our rich emotional vocal repertoire, involving brief signals of upwards of two dozen emotions as intriguing as awe, adoration, interest, sympathy and embarrassment,” said study senior author Dacher Keltner, a psychology professor at UC Berkeley and faculty director of the Greater Good Science Center, which helped support the research.

For millions of years, humans have used wordless vocalizations to communicate feelings that can be decoded in a matter of seconds, as this latest study demonstrates.

“Our findings show that the voice is a much more powerful tool for expressing emotion than previously assumed,” said study lead author Alan Cowen, a Ph.D. student in psychology at UC Berkeley.

On Cowen’s audio map, one can slide one’s cursor across the emotional topography and hover over fear (scream), then surprise (gasp), then awe (woah), realization (ohhh), interest (ah?) and finally confusion (huh?).

Among other applications, the map can be used to help teach voice-controlled digital assistants and other robotic devices to better recognize human emotions based on the sounds we make, he said.

As for clinical uses, the map could theoretically guide medical professionals and researchers working with people with dementia, autism and other emotional processing disorders to zero in on specific emotion-related deficits.

“It lays out the different vocal emotions that someone with a disorder might have difficulty understanding,” Cowen said. “For example, you might want to sample the sounds to see if the patient is recognizing nuanced differences between, say, awe and confusion.”

Though limited to U.S. responses, the study suggests humans are so keenly attuned to nonverbal signals – such as the bonding “coos” between parents and infants – that we can pick up on the subtle differences between surprise and alarm, or an amused laugh versus an embarrassed laugh.

For example, by placing the cursor in the embarrassment region of the map, you might find a vocalization that is recognized as a mix of amusement, embarrassment and positive surprise.

“A tour through amusement reveals the rich vocabulary of laughter and a spin through the sounds of adoration, sympathy, ecstasy and desire may tell you more about romantic life than you might expect,” said Keltner.

Researchers recorded more than 2,000 vocal bursts from 56 male and female professional actors and non-actors from the United States, India, Kenya and Singapore by asking them to respond to emotionally evocative scenarios.

Next, more than 1,000 adults recruited via Amazon’s Mechanical Turk online marketplace listened to the vocal bursts and evaluated them based on the emotions and meaning they conveyed and whether the tone was positive or negative, among several other characteristics.

A statistical analysis of their responses found that the vocal bursts fit into at least two dozen distinct categories including amusement, anger, awe, confusion, contempt, contentment, desire, disappointment, disgust, distress, ecstasy, elation, embarrassment, fear, interest, pain, realization, relief, sadness, surprise (positive), surprise (negative), sympathy and triumph.

For the second part of the study, researchers sought to present real-world contexts for the vocal bursts. They did this by sampling YouTube video clips that would evoke the 24 emotions established in the first part of the study, such as babies falling, puppies being hugged and spellbinding magic tricks.

This time, 88 adults of all ages judged the vocal bursts extracted from YouTube videos. Again, the researchers were able to categorize their responses into 24 shades of emotion. The full set of data was then organized into a semantic space and rendered as an interactive map.

“These results show that emotional expressions color our social interactions with spirited declarations of our inner feelings that are difficult to fake, and that our friends, co-workers, and loved ones rely on to decipher our true commitments,” Cowen said.

The writer assumes that emotions are pre-existing: somewhere, there’s happiness, sadness, anger, etc. It’s that pre-existence Lisa Feldman Barrett challenges with her theory that we construct our emotions (from her Wikipedia entry),

She highlights differences in emotions between different cultures, and says that emotions “are not triggered; you create them. They emerge as a combination of the physical properties of your body, a flexible brain that wires itself to whatever environment it develops in, and your culture and upbringing, which provide that environment.”

You can find Barrett’s December 6, 2017 TED talk here, where she explains her theory in greater detail. One final note about Barrett: she was born and educated in Canada and now works as a Professor of Psychology at Northeastern University in Boston, Massachusetts (US), with appointments at Harvard Medical School and Massachusetts General Hospital.

A February 7, 2019 article by Mark Wilson for Fast Company delves further into the 24-emotion audio map mentioned at the outset of this posting (Note: Links have been removed),

Fear, surprise, awe. Desire, ecstasy, relief.

These emotions are not distinct, but interconnected, across the gradient of human experience. At least that’s what a new paper from researchers at the University of California, Berkeley, Washington University, and Stockholm University proposes. The accompanying interactive map, which charts the sounds we make and how we feel about them, will likely persuade you to agree.

At the end of his article, Wilson also mentions the Dalai Lama and his Atlas of Emotions, a data visualization project (featured in Mark Wilson’s May 13, 2016 article for Fast Company). It seems humans of all stripes are interested in emotions.

Here’s a link to and a citation for the paper about the audio map,

Mapping 24 emotions conveyed by brief human vocalization by Cowen, Alan S.; Elfenbein, Hillary Anger; Laukka, Petri; Keltner, Dacher. American Psychologist, Dec 20, 2018, No Pagination Specified. DOI: 10.1037/amp0000399

This paper is behind a paywall.