Thinness, meaning smaller and less bulky, is a prized quality in technologies such as phones, batteries, and, in this case, lenses. From a May 16, 2022 news item on ScienceDaily,

The technological advancement of optical lenses has long been a significant marker of human scientific achievement. Eyeglasses, telescopes, cameras, and microscopes have all literally and figuratively allowed us to see the world in a new light. Lenses are also a fundamental component of manufacturing nanoelectronics by the semiconductor industry.

One of the most impactful breakthroughs of lens technology in recent history has been the development of photonic metasurfaces, artificially engineered nano-scale materials with remarkable optical properties. Georgia Tech [Georgia Institute of Technology] researchers at the forefront of this technology have demonstrated the first-ever electrically tunable photonic metasurface platform in a recent study published in Nature Communications.

“Metasurfaces can make the optical systems very thin, and as they become easier to control and tune, you’ll soon find them in cell phone cameras and similar electronic imaging systems,” said Ali Adibi, professor in the School of Electrical and Computer Engineering at the Georgia Institute of Technology [Georgia Tech; US].

…

A May 10, 2022 Georgia Tech news release (also on EurekAlert but published May 16, 2022), which originated the news item, provides more detail,

The pronounced tuning measures achieved through the new platform represent a critical advancement towards the development of miniaturized reconfigurable metasurfaces. The results of the study have shown a record eleven-fold change in the reflective properties, a large range of spectral tuning for operation, and much faster tuning speed.
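The release does not say exactly which quantity the "eleven-fold change" refers to. Assuming it is the ratio between the highest and lowest reflectance the device reaches at a given wavelength, the short Python sketch below converts such a ratio into decibels, the unit optical modulation depth is often quoted in. The function name `contrast_db` is hypothetical and illustrative only; it is not taken from the paper.

```python
import math


def contrast_db(ratio: float) -> float:
    """Convert a linear intensity ratio (e.g. R_max / R_min) to decibels.

    Hypothetical helper: assumes the ratio compares reflected optical power,
    so the 10*log10 convention for power ratios applies.
    """
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    # Power (intensity) ratios use 10*log10; 20*log10 is for field amplitudes.
    return 10 * math.log10(ratio)


if __name__ == "__main__":
    # An eleven-fold change in reflected power is roughly 10.4 dB.
    print(f"{contrast_db(11):.1f} dB")  # prints "10.4 dB"
```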

Heating Up Metasurfaces

Metasurfaces are a class of nanophotonic materials in which a large range of miniaturized elements are engineered to affect the transmission and reflection of light at different frequencies in a controlled way.

“When viewing under very strong microscopes, metasurfaces look like a periodic array of posts,” said Adibi. “The best analogy would be to think of a LEGO pattern formed by connecting many similar LEGO bricks next to each other.”

Since their inception, metasurfaces have been used to demonstrate that very thin optical devices can control light propagation, with metalenses (thin, flat lenses built from metasurfaces) being the most developed application.

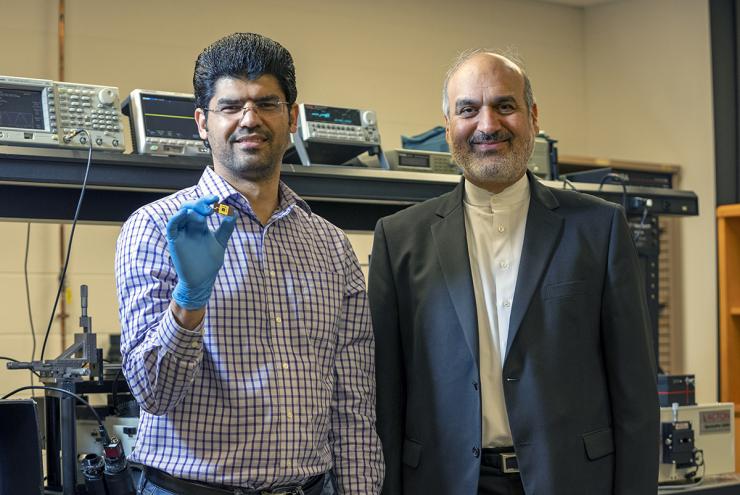

Despite impressive progress, most demonstrated metasurfaces are passive, meaning their performance cannot be changed (or tuned) after fabrication. The work presented by Adibi and his team, led by Ph.D. candidate Sajjad Abdollahramezani, applies electrical heat to a special class of nanophotonic materials to create a platform that can enable reconfigurable metasurfaces to be easily manufactured with high levels of optical modulation.

PCMs Provide the Answer

A wide range of materials may be used to form metasurfaces, including metals, oxides, and semiconductors, but Abdollahramezani and Adibi’s research focuses on phase-change materials (PCMs) because they can form the most effective structures with the smallest feature sizes. PCMs are substances that absorb and release heat as they are heated and cooled. They are called “phase-change” materials because heating and cooling switch them from one structural phase to another; for the PCMs used in photonics, this is typically a switch between an amorphous and a crystalline state. Water changing from a liquid to a solid or a gas is the most familiar example of a phase change.

The Georgia Tech team’s experiments are substantially more complicated than heating and freezing water. Knowing that the optical properties of PCMs can be altered by local heating, they have harnessed the full potential of the PCM alloy Ge2Sb2Te5 (GST), which is a compound of germanium, antimony, and tellurium.

By combining the optical design with a miniaturized electrical microheater underneath, the team can change the crystalline phase of the GST to make active tuning of the metasurface device possible. The fabricated metasurfaces were developed at Georgia Tech’s Institute for Electronics and Nanotechnology (IEN) and tested in characterization labs by illuminating the reconfigurable metasurfaces with laser light at different frequencies and measuring the properties of the reflected light in real time.
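To illustrate why an electrically heated PCM makes the metasurface reconfigurable rather than passive, the Python sketch below models GST as a two-state switch. It borrows the SET/RESET terminology commonly used for phase-change memory: a moderate, sustained heating pulse lets the material crystallize, while a brief, intense pulse melts it and the rapid cooling that follows leaves it amorphous. This is an assumption about the general switching principle, not a description of the study's actual pulse parameters. The names `Phase`, `Pulse`, `apply_pulse`, and `run_sequence` are hypothetical. The model also ignores any intermediate, partially crystallized states.

```python
from enum import Enum


class Phase(Enum):
    AMORPHOUS = "amorphous"
    CRYSTALLINE = "crystalline"


class Pulse(Enum):
    # SET (assumed): moderate heating held long enough for the GST to crystallize.
    SET = "set"
    # RESET (assumed): brief heating above the melting point, then rapid cooling.
    RESET = "reset"


def apply_pulse(phase: Phase, pulse: Pulse) -> Phase:
    """Return the phase left behind after one heater pulse (toy model).

    In this model the result depends only on the pulse, not on the starting
    phase: either pulse overwrites the previous state, which is what lets the
    device be reprogrammed after fabrication.
    """
    if pulse is Pulse.SET:
        return Phase.CRYSTALLINE
    return Phase.AMORPHOUS


def run_sequence(start: Phase, pulses: list[Pulse]) -> list[Phase]:
    """Apply pulses in order and record the phase after each one."""
    history = []
    phase = start
    for p in pulses:
        phase = apply_pulse(phase, p)
        history.append(phase)
    return history


if __name__ == "__main__":
    states = run_sequence(Phase.AMORPHOUS, [Pulse.SET, Pulse.RESET, Pulse.SET])
    print([s.value for s in states])  # ['crystalline', 'amorphous', 'crystalline']
```

In a real device, each phase would map to a different measured reflectance spectrum. The release gives no such data, so the sketch stops at the phase itself.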

What Tunable Metasurfaces Mean for the Future

Driven by device miniaturization and system integration, as well as their ability to selectively reflect different colors of light, metasurfaces are rapidly replacing bulky optical assemblies of the past. Immediate impact on technologies like LiDAR systems for autonomous cars, imaging, spectroscopy, and sensing is expected.

With further development, more aggressive applications like computing, augmented reality, photonic chips for artificial intelligence, and biohazard detection can also be envisioned, according to Abdollahramezani and Adibi.

“As the platform continues to develop, reconfigurable metasurfaces will be found everywhere,” said Adibi. “They will even empower smaller endoscopes to go deep inside the body for better imaging and help medical sensors detect different biomarkers in blood.”

…

Funding: This material is based upon work supported by the National Science Foundation (NSF) under Grant No. 1837021. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the NSF. The work was primarily funded by the Office of Naval Research (ONR) (N00014-18-1-2055, Dr. B. Bennett) and by the Defense Advanced Research Projects Agency [DARPA] (D19AC00001, Dr. R. Chandrasekar). W.C. acknowledges support from ONR (N00014-17-1-2555) and NSF (DMR-2004749). A. Alù acknowledges support from the Air Force Office of Scientific Research and the Simons Foundation. M.W. acknowledges support from the Deutsche Forschungsgemeinschaft (SFB 917). M.E.S. acknowledges financial support from NSF-CHE (1608801). This work was performed in part at the Georgia Tech Institute for Electronics and Nanotechnology (IEN), a member of the National Nanotechnology Coordinated Infrastructure (NNCI), which is supported by NSF (ECCS1542174).

…

I am charmed by this image. Neither of these two is a professional at posing for photographers. Nonetheless, they look pleased to help the publicity team spread the word about their research, and they also seem to be looking forward to getting back to work.

Here’s a link to and a citation for the paper,

Electrically driven reprogrammable phase-change metasurface reaching 80% efficiency by Sajjad Abdollahramezani, Omid Hemmatyar, Mohammad Taghinejad, Hossein Taghinejad, Alex Krasnok, Ali A. Eftekhar, Christian Teichrib, Sanchit Deshmukh, Mostafa A. El-Sayed, Eric Pop, Matthias Wuttig, Andrea Alù, Wenshan Cai & Ali Adibi. Nature Communications volume 13, Article number: 1696 (2022) DOI: https://doi.org/10.1038/s41467-022-29374-6 Published: 30 March 2022

This paper is open access.