A couple of Australian academics have written a comment for the journal Nature, which bears the intriguing subtitle: “The patent system assumes that inventors are human. Inventions devised by machines require their own intellectual property law and an international treaty.” (For the curious, I’ve linked to a few of my previous posts touching on intellectual property [IP], specifically the patent’s fraternal twin, copyright, at the end of this piece.)

Before linking to the comment, here’s the May 27, 2022 University of New South Wales (UNSW) press release (also on EurekAlert but published May 30, 2022), which provides an overview of their thinking on the subject. Note: Links have been removed,

It’s not surprising these days to see new inventions that either incorporate or have benefitted from artificial intelligence (AI) in some way, but what about inventions dreamt up by AI – do we award a patent to a machine?

This is the quandary facing lawmakers around the world with a live test case in the works that its supporters say is the first true example of an AI system named as the sole inventor.

In commentary published in the journal Nature, two leading academics from UNSW Sydney examine the implications of patents being awarded to an AI entity.

Intellectual Property (IP) law specialist Associate Professor Alexandra George and AI expert, Laureate Fellow and Scientia Professor Toby Walsh argue that patent law as it stands is inadequate to deal with such cases and requires legislators to amend laws around IP and patents – laws that have been operating under the same assumptions for hundreds of years.

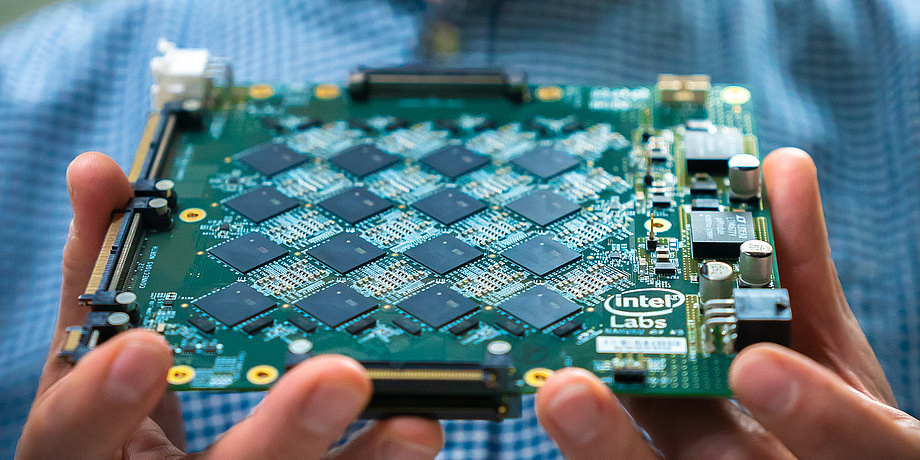

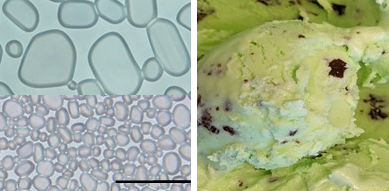

The case in question revolves around a machine called DABUS (Device for the Autonomous Bootstrapping of Unified Sentience) created by Dr Stephen Thaler, who is president and chief executive of US-based AI firm Imagination Engines. Dr Thaler has named DABUS as the inventor of two products – a food container with a fractal surface that helps with insulation and stacking, and a flashing light for attracting attention in emergencies.

For a short time in Australia, DABUS looked like it might be recognised as the inventor because, in late July 2021, a trial judge accepted Dr Thaler’s appeal against IP Australia’s rejection of the patent application five months earlier. But after the Commissioner of Patents appealed the decision to the Full Court of the Federal Court of Australia, the five-judge panel upheld the appeal, agreeing with the Commissioner that an AI system couldn’t be named the inventor.

A/Prof. George says the attempt to have DABUS awarded a patent for the two inventions instantly creates challenges for existing laws which has only ever considered humans or entities comprised of humans as inventors and patent-holders.

“Even if we do accept that an AI system is the true inventor, the first big problem is ownership. How do you work out who the owner is? An owner needs to be a legal person, and an AI is not recognised as a legal person,” she says.

Ownership is crucial to IP law. Without it there would be little incentive for others to invest in the new inventions to make them a reality.

“Another problem with ownership when it comes to AI-conceived inventions, is even if you could transfer ownership from the AI inventor to a person: is it the original software writer of the AI? Is it a person who has bought the AI and trained it for their own purposes? Or is it the people whose copyrighted material has been fed into the AI to give it all that information?” asks A/Prof. George.

For obvious reasons

Prof. Walsh says what makes AI systems so different to humans is their capacity to learn and store so much more information than an expert ever could. One of the requirements of inventions and patents is that the product or idea is novel, not obvious and is useful.

“There are certain assumptions built into the law that an invention should not be obvious to a knowledgeable person in the field,” Prof. Walsh says.

“Well, what might be obvious to an AI won’t be obvious to a human because AI might have ingested all the human knowledge on this topic, way more than a human could, so the nature of what is obvious changes.”

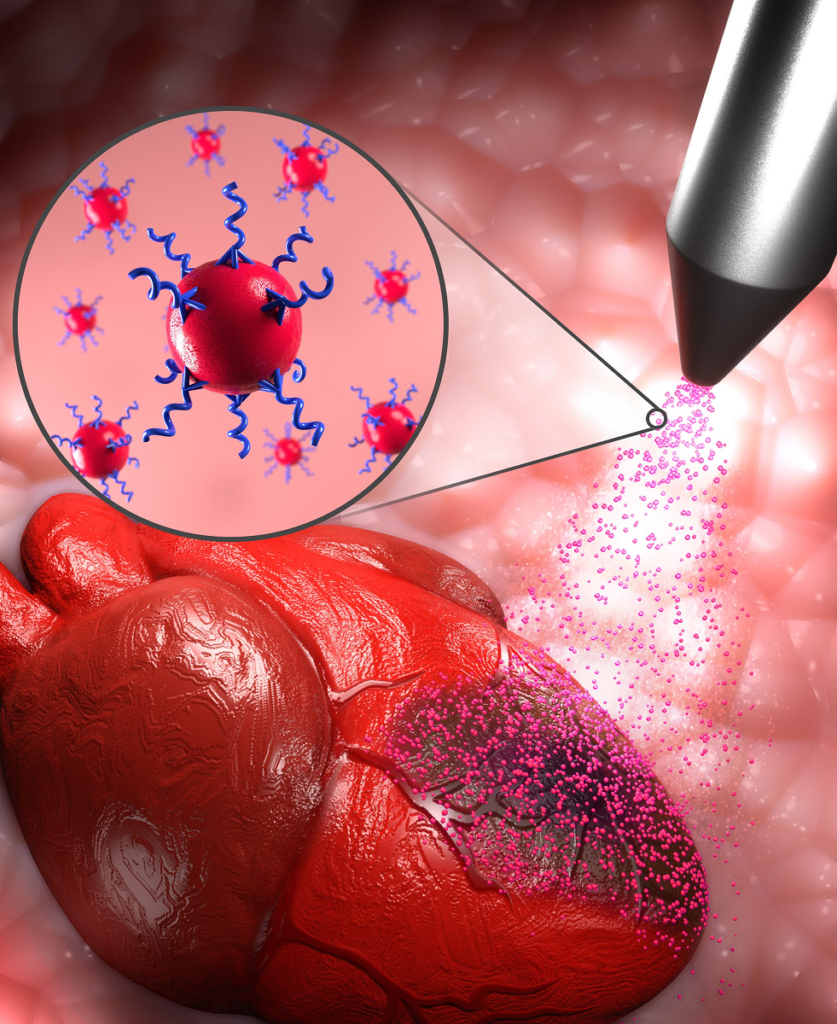

Prof. Walsh says this isn’t the first time that AI has been instrumental in coming up with new inventions. In the area of drug development, a new antibiotic was created in 2019 – Halicin – that used deep learning to find a chemical compound that was effective against drug-resistant strains of bacteria.

“Halicin was originally meant to treat diabetes, but its effectiveness as an antibiotic was only discovered by AI that was directed to examine a vast catalogue of drugs that could be repurposed as antibiotics. So there’s a mixture of human and machine coming into this discovery.”

Prof. Walsh says in the case of DABUS, it’s not entirely clear whether the system is truly responsible for the inventions.

“There’s lots of involvement of Dr Thaler in these inventions, first in setting up the problem, then guiding the search for the solution to the problem, and then interpreting the result,” Prof. Walsh says.

“But it’s certainly the case that without the system, you wouldn’t have come up with the inventions.”

Change the laws

Either way, both authors argue that governing bodies around the world will need to modernise the legal structures that determine whether or not AI systems can be awarded IP protection. They recommend the introduction of a new ‘sui generis’ form of IP law – which they’ve dubbed ‘AI-IP’ – that would be specifically tailored to the circumstances of AI-generated inventiveness. This, they argue, would be more effective than trying to retrofit and shoehorn AI-inventiveness into existing patent laws.

Looking forward, after examining the legal questions around AI and patent law, the authors are currently working on answering the technical question of how AI is going to be inventing in the future.

Dr Thaler has sought ‘special leave to appeal’ the case concerning DABUS to the High Court of Australia. It remains to be seen whether the High Court will agree to hear it. Meanwhile, the case continues to be fought in multiple other jurisdictions around the world.

Here’s a link to and a citation for the paper,

Artificial intelligence is breaking patent law by Alexandra George & Toby Walsh. Nature (Nature) COMMENT ISSN 1476-4687 (online) 24 May 2022 ISSN 0028-0836 (print) Vol 605 26 May 2022 pp. 616-18 DOI: 10.1038/d41586-022-01391-x

This paper appears to be open access.

The Journey

DABUS has gotten a patent in one jurisdiction, from an August 8, 2021 article on brandedequity.com,

…

The patent application listing DABUS as the inventor was filed in patent offices around the world, including the US, Europe, Australia, and South Africa. But only South Africa granted the patent (Australia followed suit a few days later after a court judgment gave the go-ahead [and rejected it several months later]).

…

Natural person?

This September 27, 2021 article by Miguel Bibe for Inventa covers some of the same ground, adding some discussion of the ‘natural person’ problem,

…

The patent is for “a food container based on fractal geometry”, and was accepted by the CIPC [Companies and Intellectual Property Commission] on June 24, 2021. The notice of issuance was published in the July 2021 “Patent Journal”.

South Africa does not have a substantive patent examination system and, instead, requires applicants to merely complete a filing for their inventions. This means that South Africa patent laws do not provide a definition for “inventor” and the office only proceeds with a formal examination in order to confirm if the paperwork was filled correctly.

…

… according to a press release issued by the University of Surrey: “While patent law in many jurisdictions is very specific in how it defines an inventor, the DABUS team is arguing that the status quo is not fit for purpose in the Fourth Industrial Revolution.”

On the other hand, this may not be considered as a victory for the DABUS team since several doubts and questions remain as to who should be considered the inventor of the patent. Current IP laws in many jurisdictions follow the traditional term of “inventor” as being a “natural person”, and there is no legal precedent in the world for inventions created by a machine.

…

August 2022 update

Mike Masnick in an August 15, 2022 posting on Techdirt provides the latest information on Stephen Thaler’s efforts to have patents and copyrights awarded to his AI entity, DABUS,

Stephen Thaler is a man on a mission. It’s not a very good mission, but it’s a mission. He created something called DABUS (Device for the Autonomous Bootstrapping of Unified Sentience) and claims that it’s creating things, for which he has tried to file for patents and copyrights around the globe, with his mission being to have DABUS named as the inventor or author. This is dumb for many reasons. The purpose of copyright and patents are to incentivize the creation of these things, by providing to the inventor or author a limited time monopoly, allowing them to, in theory, use that monopoly to make some money, thereby making the entire inventing/authoring process worthwhile. An AI doesn’t need such an incentive. And this is why patents and copyright only are given to persons and not animals or AI.

…

… Thaler’s somewhat quixotic quest continues to fail. The EU Patent Office rejected his application. The Australian patent office similarly rejected his request. In that case, a court sided with Thaler after he sued the Australian patent office, and said that his AI could be named as an inventor, but thankfully an appeals court set aside that ruling a few months ago. In the US, Thaler/DABUS keeps on losing as well. Last fall, he lost in court as he tried to overturn the USPTO ruling, and then earlier this year, the US Copyright Office also rejected his copyright attempt (something it has done a few times before). In June, he sued the Copyright Office over this, which seems like a long shot.

And now, he’s also lost his appeal of the ruling in the patent case. CAFC, the Court of Appeals for the Federal Circuit — the appeals court that handles all patent appeals — has rejected Thaler’s request just like basically every other patent and copyright office, and nearly all courts.

…

If you have the time, the August 15, 2022 posting is an interesting read.

Consciousness and ethical AI

Just to make things more fraught, an engineer at Google has claimed that one of their AI chatbots has consciousness. From a June 16, 2022 article (in Canada’s National Post [previewed on epaper]) by Patrick McGee,

Google has ignited a social media firestorm on the nature of consciousness after placing an engineer on paid leave with his belief that the tech group’s chatbot has become “sentient.”

Blake Lemoine, a senior software engineer in Google’s Responsible AI unit, did not receive much attention when he wrote a Medium post saying he “may be fired soon for doing AI ethics work.”

But a Saturday [June 11, 2022] profile in the Washington Post characterized Lemoine as “the Google engineer who thinks the company’s AI has come to life.”

…

This is not the first time that Google has run into a problem with ethics and AI. Famously, Timnit Gebru, who co-led (with Margaret Mitchell) Google’s ethics and AI unit, departed in 2020. Gebru said (and maintains to this day) she was fired. Google never did make a final statement on how it characterized her departure, although after an investigation Gebru did receive an apology. You *can* read more about Gebru and the issues she brought to light in her Wikipedia entry. Coincidentally (or not), Margaret Mitchell was terminated/fired in February 2021 from Google after criticizing the company for Gebru’s ‘firing’. See a February 19, 2021 article by Megan Rose Dickey for TechCrunch for details about Margaret Mitchell’s termination from the company, which the company has admitted is a firing.

Getting back to intellectual property and AI.

What about copyright?

There are no mentions of copyright in the earliest material I have here about the ‘creative’ arts and artificial intelligence, which is this: “Writing and AI or is a robot writing this blog?” posted July 16, 2014. More recently, there’s “Beer and wine reviews, the American Chemical Society’s (ACS) AI editors, and the Turing Test” posted May 20, 2022. The type of writing featured in both is not literary or typically considered creative writing.

On the more creative front, there’s “True love with AI (artificial intelligence): The Nature of Things explores emotional and creative AI (long read)” posted on December 3, 2021. The literary/creative portion of the post can be found under the ‘AI and creativity’ subhead approximately 30% of the way down and where I mention Douglas Coupland. Again, there’s no mention of copyright.

It’s with the visual arts that copyright gets mentioned. The first one I can find here is “Robot artists—should they get copyright protection” posted on July 10, 2017.

Fun fact: Andres Guadamuz, who was mentioned in my posting, took to his own blog where he gave my blog a shout out while implying that I wasn’t thoughtful. The gist of his August 8, 2017 posting was that he was misunderstood by many people, which led to the title for his post, “Should academics try to engage the public?” Thankfully, he soldiers on trying to educate us with his TechnoLlama blog.

Lastly, there’s this August 16, 2019 posting “AI (artificial intelligence) artist got a show at a New York City art gallery” where you can scroll down to the ‘What about intellectual property?’ subhead about 80% of the way.

You look like a thing …

I am recommending a book for anyone who’d like to learn a little more about how artificial intelligence (AI) works, “You look like a thing and I love you; How Artificial Intelligence Works and Why It’s Making the World a Weirder Place” by Janelle Shane (2019).

It does not require an understanding of programming/coding/algorithms/etc.; Shane makes the subject as accessible as possible and gives you insight into why the term ‘artificial stupidity’ is more applicable than you might think. You can find Shane’s website here and you can find her 10-minute TED talk here.

*’can’ added to sentence on May 12, 2023.