The Salk Institute wouldn’t have been my first guess for the science partner in this art and science project, which will be examining museum visitor behaviour. From the September 28, 2022 Salk Institute news release (also on EurekAlert and a copy received via email) announcing the project grant,

Clay vessels of innumerable shapes and sizes come to life as they illuminate a rich history of symbolic meanings and identity. Some museum visitors may lean in to get a better view, while others converse with their friends over the rich hues. Exhibition designers have long wondered how the human brain senses, perceives, and learns in the rich environment of a museum gallery.

In a synthesis of science and art, Salk scientists have teamed up with curators and design experts at the Los Angeles County Museum of Art (LACMA) to study how nearly 100,000 museum visitors respond to exhibition design. The goal of the project, funded by a $900,000 grant from the National Science Foundation, is to better understand how people perceive, make choices in, interact with, and learn from a complex environment, and to further enhance the educational mission of museums through evidence-based design strategies.

The Salk team is led by Professor Thomas Albright, Salk Fellow Talmo Pereira, and Staff Scientist Sergei Gepshtein.

The experimental exhibition at LACMA—called “Conversing in Clay: Ceramics from the LACMA Collection”—is open until May 21, 2023.

“LACMA is one of the world’s greatest art museums, so it is wonderful to be able to combine its expertise with our knowledge of brain function and behavior,” says Albright, director of Salk’s Vision Center Laboratory and Conrad T. Prebys Chair in Vision Research. “The beauty of this project is that it extends our laboratory research on perception, memory, and decision-making into the real world.”

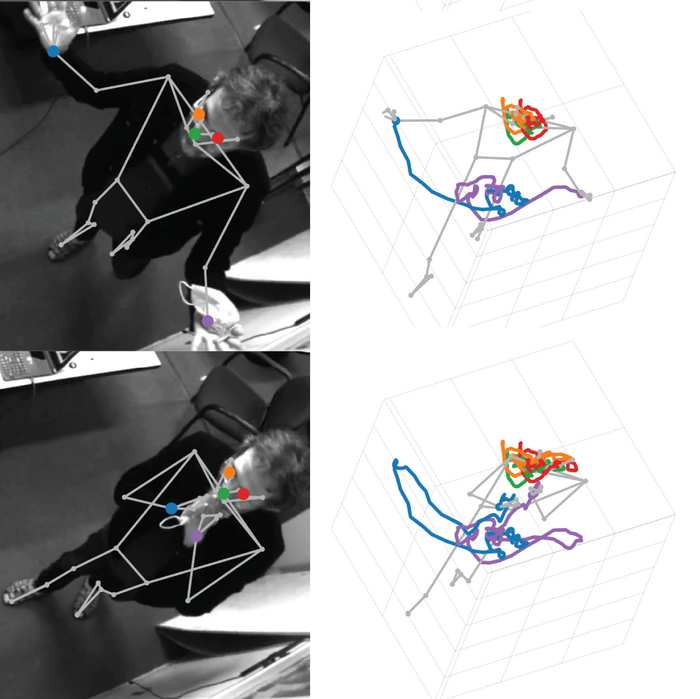

Albright and Gepshtein study the visual system and how it informs decisions and behaviors. A major focus of their work is uncovering how perception guides movement in space. Pereira’s expertise lies in measuring and quantifying behaviors. He invented a deep learning technique called SLEAP [Social LEAP Estimates Animal Poses], which precisely captures the movements of organisms, from single cells to whales, using conventional videography. This technology has enabled scientists to describe behaviors with unprecedented precision.

For this project, the scientists have placed 10 video cameras throughout a LACMA gallery. The researchers will record how the museum environment shapes behaviors as visitors move through the space, including preferred viewing locations, paths and rates of movement, postures, social interactions, gestures, and expressions. Those behaviors will, in turn, provide novel insights into the underlying perceptual and cognitive processes that guide our engagement with works of art. The scientists will also test strategic modifications to gallery design to produce the most rewarding experience.

“We plan to capture every behavior that every person does while visiting the exhibit,” Pereira says. “For example, how long they stand in front of an object, whether they’re talking to a friend or even scratching their head. Then we can use this data to predict how the visitor will act next, such as if they will visit another object in the exhibit or if they leave instead.”

Results from the study will help inform future exhibit design and visitor experience and provide an unprecedented quantitative look at how human systems for perception and memory lead to predictable decisions and actions in a rich sensory environment.

“As a museum that has a long history of melding art with science and technology, we are thrilled to partner with the Salk Institute for this study,” says Michael Govan, LACMA CEO and Wallis Annenberg director. “LACMA is always striving to create accessible, engaging gallery environments for all visitors. We look forward to applying what we learn to our approach to gallery design and to enhance visitor experience.”

Next, the scientists plan to employ this experimental approach to gain a better understanding of how the design of environments for people with specific needs, like school-age children or patients with dementia, might improve cognitive processes and behaviors.

Several members of the research team are also members of the Academy of Neuroscience for Architecture, which seeks to promote and advance knowledge that links neuroscience research to a growing understanding of human responses to the built environment.

Gepshtein is also a member of Salk’s Center for the Neurobiology of Vision and director of the Collaboratory for Adaptive Sensory Technologies. Additionally, he serves as the director of the Center for Spatial Perception & Concrete Experience at the University of Southern California.

About the Los Angeles County Museum of Art:

LACMA is the largest art museum in the western United States, with a collection of more than 149,000 objects that illuminate 6,000 years of artistic expression across the globe. Committed to showcasing a multitude of art histories, LACMA exhibits and interprets works of art from new and unexpected points of view that are informed by the region’s rich cultural heritage and diverse population. LACMA’s spirit of experimentation is reflected in its work with artists, technologists, and thought leaders as well as in its regional, national, and global partnerships to share collections and programs, create pioneering initiatives, and engage new audiences.

About the Salk Institute for Biological Studies:

Every cure has a starting point. The Salk Institute embodies Jonas Salk’s mission to dare to make dreams into reality. Its internationally renowned and award-winning scientists explore the very foundations of life, seeking new understandings in neuroscience, genetics, immunology, plant biology, and more. The Institute is an independent nonprofit organization and architectural landmark: small by choice, intimate by nature, and fearless in the face of any challenge. Be it cancer or Alzheimer’s, aging or diabetes, Salk is where cures begin. Learn more at: salk.edu.

I find the image included with the news release quite intriguing.

I’m trying to figure out how they’ll do this. Will each visitor be ‘tagged’ as they enter the LACMA gallery so they can be ‘followed’ individually as they respond (or don’t respond) to the exhibits? Will they be notified that they are participating in a study?

I was tracked without my knowledge or consent at the Vancouver (Canada) Art Gallery’s (VAG) exhibition, “The Imitation Game: Visual Culture in the Age of Artificial Intelligence” (March 5, 2022 – October 23, 2022). It was disconcerting to find out that my ‘tracks’ had become part of a real-time installation. (The result of my trip to the VAG was a two-part commentary: “Mad, bad, and dangerous to know? Artificial Intelligence at the Vancouver [Canada] Art Gallery [1 of 2]: The Objects” and “Mad, bad, and dangerous to know? Artificial Intelligence at the Vancouver [Canada] Art Gallery [2 of 2]: Meditations”. My response to the experience can be found under the ‘Eeek’ subhead of part 2: Meditations. For the curious, part 1: The Objects is here.)