This July 10, 2014 news item on ScienceDaily concerns DARPA, an implantable neural device, and the Lawrence Livermore National Laboratory (LLNL), but it describes a new project, not the one featured here in a June 18, 2014 posting titled ‘DARPA (US Defense Advanced Research Projects Agency) awards funds for implantable neural interface’.

According to the July 10, 2014 news item on ScienceDaily, the new project concerns memory,

The Department of Defense’s Defense Advanced Research Projects Agency (DARPA) awarded Lawrence Livermore National Laboratory (LLNL) up to $2.5 million to develop an implantable neural device with the ability to record and stimulate neurons within the brain to help restore memory, DARPA officials announced this week.

The research builds on the understanding that memory is a process in which neurons in certain regions of the brain encode information, store it and retrieve it. Certain types of illnesses and injuries, including Traumatic Brain Injury (TBI), Alzheimer’s disease and epilepsy, disrupt this process and cause memory loss. TBI, in particular, has affected 270,000 military service members since 2000.

A July 2, 2014 LLNL news release, which originated the news item, provides more detail,

The goal of LLNL’s work — driven by LLNL’s Neural Technology group and undertaken in collaboration with the University of California, Los Angeles (UCLA) and Medtronic — is to develop a device that uses real-time recording and closed-loop stimulation of neural tissues to bridge gaps in the injured brain and restore individuals’ ability to form new memories and access previously formed ones.

…

Specifically, the Neural Technology group will seek to develop a neuromodulation system — a sophisticated electronics system to modulate neurons — that will investigate areas of the brain associated with memory to understand how new memories are formed. The device will be developed at LLNL’s Center for Bioengineering.

“Currently, there is no effective treatment for memory loss resulting from conditions like TBI,” said LLNL’s project leader Satinderpall Pannu, director of LLNL’s Center for Bioengineering, a unique facility dedicated to fabricating biocompatible neural interfaces. …

LLNL will develop a miniature, wireless and chronically implantable neural device that will incorporate both single neuron and local field potential recordings into a closed-loop system to implant into TBI patients’ brains. The device — implanted into the entorhinal cortex and hippocampus — will allow for stimulation and recording from 64 channels located on a pair of high-density electrode arrays. The entorhinal cortex and hippocampus are regions of the brain associated with memory.

The arrays will connect to an implantable electronics package capable of wireless data and power telemetry. An external electronic system worn around the ear will store digital information associated with memory storage and retrieval and provide power telemetry to the implantable package using a custom RF-coil system.

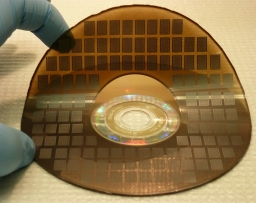

Designed to last throughout the duration of treatment, the device’s electrodes will be integrated with electronics using advanced LLNL integration and 3D packaging technologies. The microelectrodes that are the heart of this device are embedded in a biocompatible, flexible polymer.

Using the Center for Bioengineering’s capabilities, Pannu and his team of engineers have achieved 25 patents and many publications during the last decade. The team’s goal is to build the new prototype device for clinical testing by 2017.

Lawrence Livermore’s collaborators, UCLA and Medtronic, will focus on conducting clinical trials and fabricating parts and components, respectively.

“The RAM [Restoring Active Memory] program poses a formidable challenge reaching across multiple disciplines from basic brain research to medicine, computing and engineering,” said Itzhak Fried, lead investigator for UCLA on this project and professor of neurosurgery and psychiatry and biobehavioral sciences at the David Geffen School of Medicine at UCLA and the Semel Institute for Neuroscience and Human Behavior. “But at the end of the day, it is the suffering individual, whether an injured member of the armed forces or a patient with Alzheimer’s disease, who is at the center of our thoughts and efforts.”

LLNL’s work on the Restoring Active Memory program supports [US] President [Barack] Obama’s Brain Research through Advancing Innovative Neurotechnologies (BRAIN) initiative.

Obama’s BRAIN initiative is picking up speed.