A March 2, 2021 University of Queensland press release (also on EurekAlert) announces research into how fish brains develop and how baby fish hear,

A DJ-turned-researcher at The University of Queensland has used her knowledge of cool beats to understand brain networks and hearing in baby fish

The ‘Fish DJ’ used her acoustic experience to design a speaker system for zebrafish larvae and discovered that their hearing is considerably better than originally thought.

This video clip features zebrafish larvae listening to music, MC Hammer’s ‘U Can’t Touch This’ (1990),

Here’s the rest of the March 2, 2021 University of Queensland press release,

PhD candidate Rebecca Poulsen from the Queensland Brain Institute said that combining this new speaker system with whole-brain imaging showed how larvae can hear a range of different sounds they would encounter in the wild.

“For many years my music career has been in music production and DJ-ing — I’ve found underwater acoustics to be a lot more complicated than air frequencies,” Ms Poulsen said.

“It is very rewarding to be using the acoustic skills I learnt in my undergraduate degree, and in my music career, to overcome the challenge of delivering sounds to our zebrafish in the lab.

“I designed the speaker to adhere to the chamber the larvae are in, so all the sound I play is accurately received by the larvae, with no loss through the air.”

Ms Poulsen said people did not often think about underwater hearing, but it was crucial for fish survival – to escape predators, find food and communicate with each other.

Ms Poulsen worked with Associate Professor Ethan Scott, who specialises in the neural circuits and behaviour of sensory processing, to study the zebrafish and find out how their neurons work together to process sounds.

The tiny size of the zebrafish larvae allows researchers to study their entire brain under a microscope and see the activity of each brain cell individually.

“Using this new speaker system combined with whole brain imaging, we can see which brain cells and regions are active when the fish hear different types of sounds,” Dr Scott said.

The researchers are testing different sounds to see if the fish can discriminate between single frequencies, white noise, short sharp sounds and sound with a gradual crescendo of volume.

These sounds include components of what a fish would hear in the wild, like running water, other fish swimming past, objects hitting the surface of the water and predators approaching.

“Conventional thinking is that fish larvae have rudimentary hearing, and only hear low-frequency sounds, but we have shown they can hear relatively high-frequency sounds and that they respond to several specific properties of diverse sounds,” Dr Scott said.

“This raises a host of questions about how their brains interpret these sounds and how hearing contributes to their behaviour.”

Ms Poulsen has played many types of sounds to the larvae to see which parts of their brains light up, but also some music – including MC Hammer’s “U Can’t Touch This” – that even MC Hammer himself enjoyed.

The March 3, 2021 story by Graham Readfearn, originally published by The Guardian (also found on MSN News), has more details about the work and the researcher,

…

As Australia’s first female dance music producer and DJ, Rebecca Poulsen – aka BeXta – is a pioneer, with scores of tracks, mixes and hundreds of gigs around the globe under her belt.

But between DJ gigs, the 46-year-old is now back at university studying neuroscience at Queensland Brain Institute at the University of Queensland in Brisbane.

And part of this involves gently securing baby zebrafish inside a chamber and then playing them sounds while scanning their brains with a laser and looking at what happens through a microscope.

…

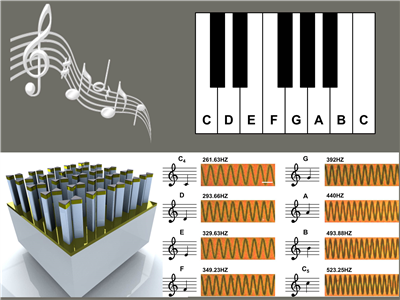

The analysis for the study doesn’t look at how the fish larvae react during Hammer [MC Hammer] time, but how their brain cells react to simple single-frequency sounds.

“It told us their hearing range was broader than we thought it was before,” she says.

Poulsen also tried more complex sounds, like white noise and “frequency sweeps”, which she describes as “like the sound when Wile E Coyote falls off a cliff” in the Road Runner cartoons.

“When you look at the neurons that light up at each sound, they’re unique. The fish can tell the difference between complex and different sounds.”

This is, happily, where MC Hammer comes in.

Out of professional and scientific curiosity – and also presumably just because she could – Poulsen played music to the fish.

She composed her own piece of dance music and that did seem to light things up.

But what about U Can’t Touch This?

“You can see when the vocal goes ‘ohhh-oh’, specific neurons light up and you can see it pulses to the beat. To me it looks like neurons responding to different parts of the music.

“I do like the track. I was pretty little when it came out and I loved it. I didn’t have the harem pants, though, but I did used to do the dance.”

…

How do you stop the fish from swimming away while you play them sounds? And how do you get a speaker small enough to deliver different volumes and frequencies without startling the fish?

For the first problem, the baby zebrafish – just 3mm long – are contained in a jelly-like substance that lets them breathe “but stops them from swimming away and keeps them nice and still so we can image them”.

For the second problem, Poulsen and colleagues used a speaker just 1cm wide and stuck it to the glass of the 2cm-cubed chamber the fish was contained in.

Using fish larvae has its advantages. “They’re so tiny we can see their whole brain … we can see the whole brain live in real time.”

If you have the time, I recommend reading Readfearn’s March 3, 2021 story in its entirety.

Poulsen, as BeXta, has a Wikipedia entry, and I gather from Readfearn’s story that she is still active professionally as a DJ.

Here’s a link to and a citation for the published paper,

Broad frequency sensitivity and complex neural coding in the larval zebrafish auditory system by Rebecca E. Poulsen, Leandro A. Scholz, Lena Constantin, Itia Favre-Bulle, Gilles C. Vanwalleghem, Ethan K. Scott. Current Biology DOI: https://doi.org/10.1016/j.cub.2021.01.103 Published: March 02, 2021

This paper appears to be open access.

There is an earlier version of the paper on bioRxiv made available for open peer review. Oddly, I don’t see any comments, but perhaps I need to log in.

Related research but not the same

I was surprised in early January 2021 when a friend of mine needed to be persuaded that noise in aquatic environments is a problem. If you have any questions or doubts, this March 4, 2021 article by Amy Noise (that is her name) on the Research2Reality website may answer them,

Ever had builders working next door? Or a neighbour leaf blowing while you’re trying to make a phone call? Unwanted background noise isn’t just stressful, it also has tangible health impacts – for both humans and our marine cousins.

Sound travels faster and farther in water than in air. For marine creatures who rely heavily on sound, crowded ocean soundscapes could be more harmful than previously thought.

Marine animals use sound to navigate, communicate, find food and mates, spot predators, and socialize. But since the Industrial Revolution, humans have made the planet, and the oceans in particular, exponentially noisier.

From shipping and fishing, to mining and sonar, underwater anthropogenic noise is becoming louder and more prevalent. While parts of the ocean’s chorus are being drowned out, others are being permanently muted through hunting and habitat loss.

…

[An] international team, including University of Victoria biologist Francis Juanes, reviewed over 10,000 papers from the past 40 years. They found overwhelming evidence that anthropogenic noise is negatively impacting marine animals.

…

Getting back to Poulsen and Queensland: her focus is on brain development and hearing, not noise pollution, although I imagine some of her work may be useful to researchers investigating anthropogenic noise and its impact on aquatic life.

![Rorschach Audio visual image [downloaded from http://rorschachaudio.wordpress.com/2013/06/04/british-library-sonic-archives/]](http://www.frogheart.ca/wp-content/uploads/2013/06/rorschach_audio_sonic_archives-300x283.jpg)