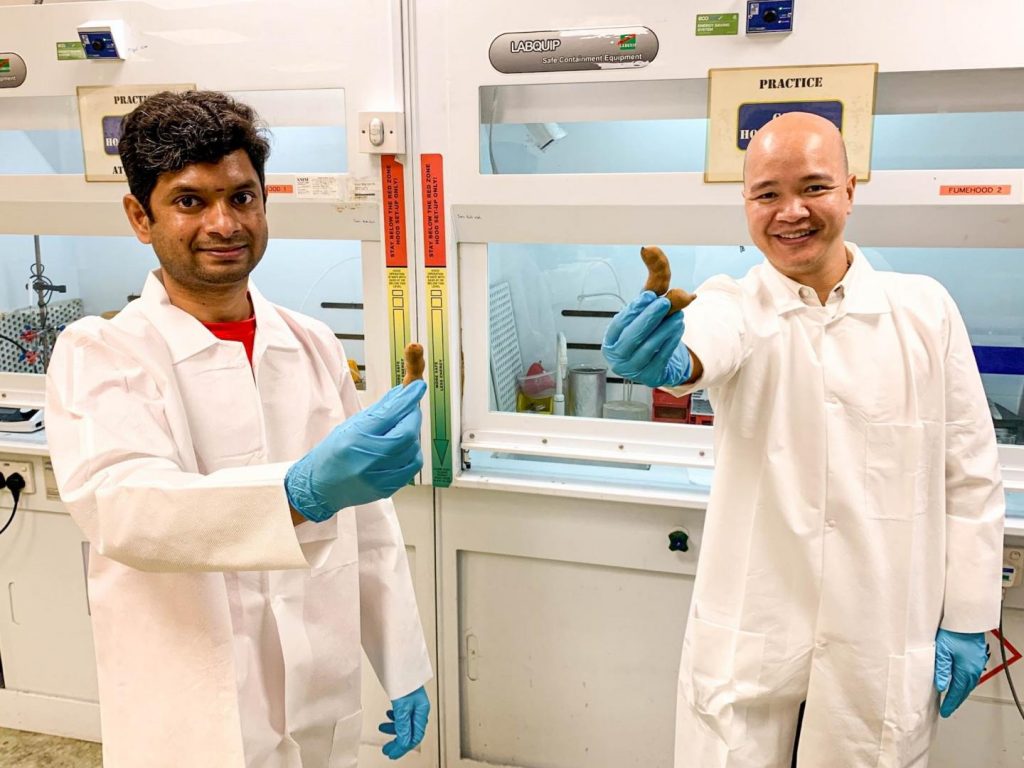

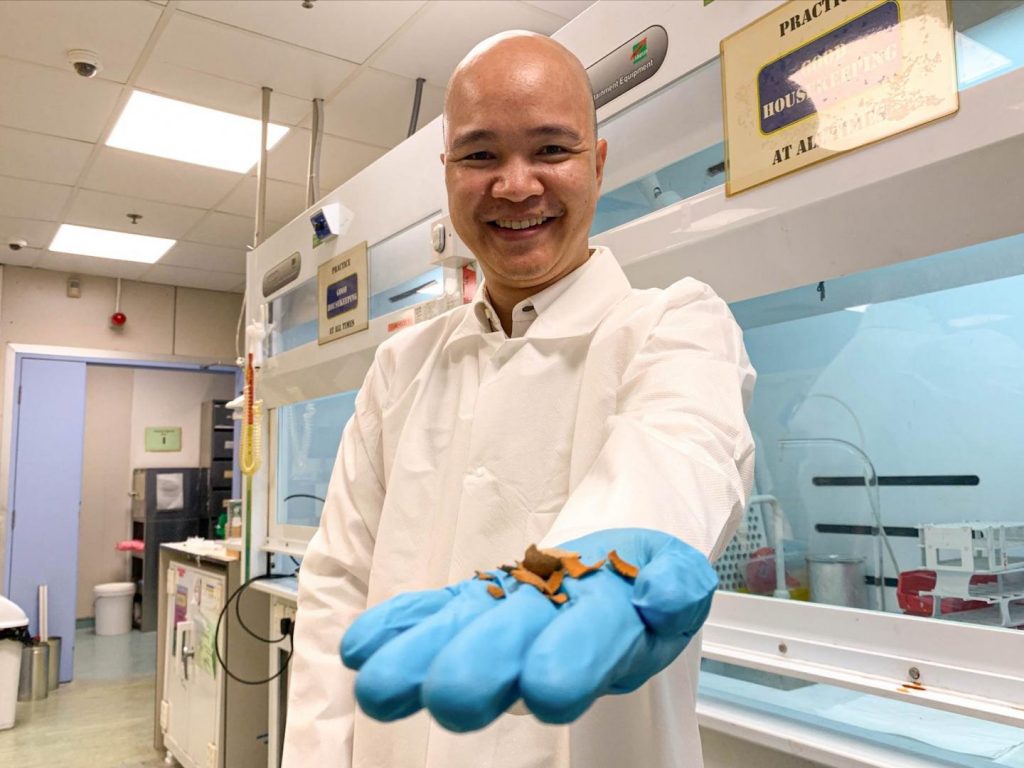

For anyone who needs a shot of happiness, this is a very happy scientist,

A July 14, 2021 news item on ScienceDaily describes the source of assistant professor (Steve) Cuong Dang’s happiness,

Shells of tamarind, a tropical fruit consumed worldwide, are discarded during food production. As they are bulky, tamarind shells take up a considerable amount of space in landfills where they are disposed of as agricultural waste.

However, a team of international scientists led by Nanyang Technological University, Singapore (NTU Singapore) has found a way to deal with the problem. By processing the tamarind shells which are rich in carbon, the scientists converted the waste material into carbon nanosheets, which are a key component of supercapacitors – energy storage devices that are used in automobiles, buses, electric vehicles, trains, and elevators.

The study reflects NTU’s commitment to address humanity’s grand challenges on sustainability as part of its 2025 strategic plan, which seeks to accelerate the translation of research discoveries into innovations that mitigate our impact on the environment.

A July 14, 2021 NTU press release (also on EurekAlert, where it was published July 13, 2021), which originated the news item, delves further into the topic,

The team, made up of researchers from NTU Singapore, the Western Norway University of Applied Sciences in Norway, and Alagappa University in India, believes that these nanosheets, when scaled up, could be an eco-friendly alternative to their industrially produced counterparts, and cut down on waste at the same time.

Assistant Professor (Steve) Cuong Dang, from NTU’s School of Electrical and Electronic Engineering, who led the study, said: “Through a series of analysis, we found that the performance of our tamarind shell-derived nanosheets was comparable to their industrially made counterparts in terms of porous structure and electrochemical properties. The process to make the nanosheets is also the standard method to produce active carbon nanosheets.”

Professor G. Ravi, Head of the Department of Physics at Alagappa University, who co-authored the study with Asst Prof Dr R. Yuvakkumar, also of Alagappa University, said: “The use of tamarind shells may reduce the amount of space required for landfills, especially in regions in Asia such as India, one of the world’s largest producers of tamarind, which is also grappling with waste disposal issues.”

The study was published in the peer-reviewed scientific journal Chemosphere in June [2021].

The step-by-step recipe for carbon nanosheets

To manufacture the carbon nanosheets, the researchers first washed tamarind fruit shells and dried them at 100°C for around six hours, before grinding them into powder.

The scientists then baked the powder in a furnace for 150 minutes at 700-900°C in the absence of oxygen to convert it into ultrathin sheets of carbon known as nanosheets.

Tamarind shells are rich in carbon and porous in nature, making them an ideal material from which to manufacture carbon nanosheets.

Industrial hemp fibres are a common material used to produce carbon nanosheets. However, they must be heated at over 180°C for 24 hours, four times longer than tamarind shells and at a higher temperature. This is before the hemp is further subjected to intense heat to convert it into carbon nanosheets.

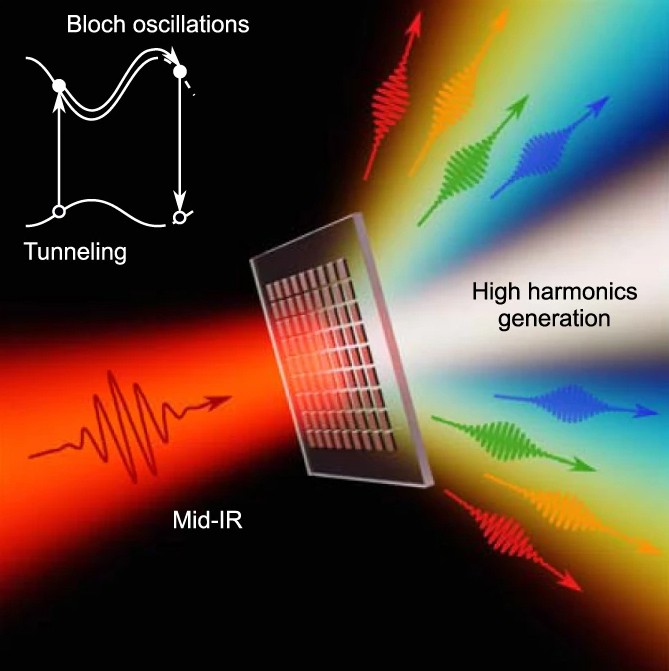

Professor Dhayalan Velauthapillai, Head of the research group for Advanced Nanomaterials for Clean Energy and Health Applications at Western Norway University of Applied Sciences, who participated in the study, said: “Carbon nanosheets comprise of layers of carbon atoms arranged in interconnecting hexagons, like a honeycomb. The secret behind their energy storing capabilities lies in their porous structure leading to large surface area which help the material to store large amounts of electric charges.”

The tamarind shell-derived nanosheets also showed good thermal stability and electric conductivity, making them promising options for energy storage.

The researchers hope to explore larger scale production of the carbon nanosheets with agricultural partners. They are also working on reducing the energy needed for the production process, making it more environmentally friendly, and are seeking to improve the electrochemical properties of the nanosheets.

The team also hopes to explore the possibility of using different types of fruit skins or shells to produce carbon nanosheets.

Here’s a link to and a citation for the paper,

Cleaner production of tamarind fruit shell into bio-mass derived porous 3D-activated carbon nanosheets by CVD technique for supercapacitor applications by V. Thirumal, K. Dhamodharan, R. Yuvakkumar, G. Ravi, B. Saravanakumar, M. Thambidurai, Cuong Dang, Dhayalan Velauthapillai. Chemosphere Volume 282, November 2021, 131033 DOI: https://doi.org/10.1016/j.chemosphere.2021.131033 Available online 2 June 2021.

This paper is behind a paywall.

Because we could all do with a little more happiness these days,