This is the second of a two-part posting about robots in Vancouver and Canada. The first part included a definition, a brief mention of a robot ethics quandary, and sexbots. This part is all about the future. (Part one is here.)

Canadian Robotics Strategy

Meetings were held Sept. 28 – 29, 2017 in, surprisingly, Vancouver. (For those who don’t know, this is surprising because most of the robotics and AI research seems to be concentrated in eastern Canada. If you don’t believe me, take a look at the speaker list for Day 2 or the ‘Canadian Stakeholder’ meeting day.) From the NSERC (Natural Sciences and Engineering Research Council) events page of the Canadian Robotics Network,

Join us as we gather robotics stakeholders from across the country to initiate the development of a national robotics strategy for Canada. Sponsored by the Natural Sciences and Engineering Research Council of Canada (NSERC), this two-day event coincides with the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2017) in order to leverage the experience of international experts as we explore Canada’s need for a national robotics strategy.

Where

Vancouver, BC, Canada

When

Thursday September 28 & Friday September 29, 2017 — Save the date!

Download the full agenda and speakers’ list here.

Objectives

The purpose of this two-day event is to gather members of the robotics ecosystem from across Canada to initiate the development of a national robotics strategy that builds on our strengths and capacities in robotics, and is uniquely tailored to address Canada’s economic needs and social values.

This event has been sponsored by the Natural Sciences and Engineering Research Council of Canada (NSERC) and is supported in kind by the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2017) as an official Workshop of the conference. The first of two days coincides with IROS 2017 – one of the premiere robotics conferences globally – in order to leverage the experience of international robotics experts as we explore Canada’s need for a national robotics strategy here at home.

Who should attend

Representatives from industry, research, government, startups, investment, education, policy, law, and ethics who are passionate about building a robust and world-class ecosystem for robotics in Canada.

Program Overview

Download the full agenda and speakers’ list here.

DAY ONE: IROS Workshop

“Best practices in designing effective roadmaps for robotics innovation”

Thursday September 28, 2017 | 8:30am – 5:00pm | Vancouver Convention Centre

Morning Program: “Developing robotics innovation policy and establishing key performance indicators that are relevant to your region” Leading international experts share their experience designing robotics strategies and policy frameworks in their regions and explore international best practices. Opening Remarks by Prof. Hong Zhang, IROS 2017 Conference Chair.

Afternoon Program: “Understanding the Canadian robotics ecosystem” Canadian stakeholders from research, industry, investment, ethics and law provide a collective overview of the Canadian robotics ecosystem. Opening Remarks by Ryan Gariepy, CTO of Clearpath Robotics.

Thursday Evening Program: Sponsored by Clearpath Robotics Workshop participants gather at a nearby restaurant to network and socialize.

…

Learn more about the IROS Workshop.

DAY TWO: NSERC-Sponsored Canadian Robotics Stakeholder Meeting

“Towards a national robotics strategy for Canada”

Friday September 29, 2017 | 8:30am – 5:00pm | University of British Columbia (UBC)

On the second day of the program, robotics stakeholders from across the country gather at UBC for a full day brainstorming session to identify Canada’s unique strengths and opportunities relative to the global competition, and to align on a strategic vision for robotics in Canada.

Friday Evening Program: Sponsored by NSERC Meeting participants gather at a nearby restaurant for the event’s closing dinner reception.

Learn more about the Canadian Robotics Stakeholder Meeting.

…

I was glad to see in the agenda that some of the international speakers represented research efforts from outside the usual Europe/US axis.

I have been in touch with one of the organizers (also mentioned in part one with regard to robot ethics), Ajung Moon (her website is here), who says that there will be a white paper available on the Canadian Robotics Network website at some point in the future. I’ll keep looking for it and, in the meantime, I wonder what the 2018 Canadian federal budget will offer robotics.

Robots and popular culture

For anyone living in Canada or the US, Westworld (television series) is probably the most recent and well-known ‘robot’ drama to premiere in the last year. As for movies, I think Ex Machina from 2014 probably qualifies in that category. Interestingly, both Westworld and Ex Machina seem quite concerned with sex, with Westworld adding significant doses of violence as another concern.

I am going to focus on another robot story, the 2012 movie, Robot & Frank, which features a care robot and an older man,

Frank (played by Frank Langella), a former jewel thief, teaches a robot the skills necessary to rob some neighbours of their valuables. The ethical issue broached in the film isn’t whether or not the robot should learn the skills and assist Frank in his thieving ways although that’s touched on when Frank keeps pointing out that planning his heist requires he live more healthily. No, the problem arises afterward when the neighbour accuses Frank of the robbery and Frank removes what he believes is all the evidence. He believes he’s going to successfully evade arrest until the robot notes that Frank will have to erase its memory in order to remove all of the evidence. The film ends without the robot’s fate being made explicit.

In a way, I find the ethics query (was the robot Frank’s friend or just a machine?) posed in the film more interesting than the one in Vikander’s story, an issue which does have a history. For example, care aides, nurses, and/or servants would have dealt with requests to give an alcoholic patient a drink. Wouldn’t there already be established guidelines and practices which could be adapted for robots? Or, is this question made anew by something intrinsically different about robots?

To be clear, Vikander’s story is a good introduction and starting point for these kinds of discussions as is Moon’s ethical question. But they are starting points and I hope one day there’ll be a more extended discussion of the questions raised by Moon and noted in Vikander’s article (a two- or three-part series of articles? public discussions?).

How will humans react to robots?

Earlier there was the contention that intimate interactions with robots and sexbots would decrease empathy and the ability of human beings to interact with each other in caring ways. This sounds a bit like the argument about smartphones/cell phones and teenagers who don’t relate well to others in real life because most of their interactions are mediated through a screen, which many seem to prefer. It may be partially true but, arguably, books too are an antisocial technology, as noted in Walter J. Ong’s influential 1982 book, ‘Orality and Literacy’ (from the Walter J. Ong Wikipedia entry),

A major concern of Ong’s works is the impact that the shift from orality to literacy has had on culture and education. Writing is a technology like other technologies (fire, the steam engine, etc.) that, when introduced to a “primary oral culture” (which has never known writing) has extremely wide-ranging impacts in all areas of life. These include culture, economics, politics, art, and more. Furthermore, even a small amount of education in writing transforms people’s mentality from the holistic immersion of orality to interiorization and individuation. [emphases mine]

So, robotics and artificial intelligence would not be the first technologies to affect our brains and our social interactions.

There’s another area where human-robot interaction may have unintended personal consequences according to April Glaser’s Sept. 14, 2017 article on Slate.com (Note: Links have been removed),

The customer service industry is teeming with robots. From automated phone trees to touchscreens, software and machines answer customer questions, complete orders, send friendly reminders, and even handle money. For an industry that is, at its core, about human interaction, it’s increasingly being driven to a large extent by nonhuman automation.

But despite the dreams of science-fiction writers, few people enter a customer-service encounter hoping to talk to a robot. And when the robot malfunctions, as they so often do, it’s a human who is left to calm angry customers. It’s understandable that after navigating a string of automated phone menus and being put on hold for 20 minutes, a customer might take her frustration out on a customer service representative. Even if you know it’s not the customer service agent’s fault, there’s really no one else to get mad at. It’s not like a robot cares if you’re angry.

When human beings need help with something, says Madeleine Elish, an anthropologist and researcher at the Data and Society Institute who studies how humans interact with machines, they’re not only looking for the most efficient solution to a problem. They’re often looking for a kind of validation that a robot can’t give. “Usually you don’t just want the answer,” Elish explained. “You want sympathy, understanding, and to be heard”—none of which are things robots are particularly good at delivering. In a 2015 survey of over 1,300 people conducted by researchers at Boston University, over 90 percent of respondents said they start their customer service interaction hoping to speak to a real person, and 83 percent admitted that in their last customer service call they trotted through phone menus only to make their way to a human on the line at the end.

“People can get so angry that they have to go through all those automated messages,” said Brian Gnerer, a call center representative with AT&T in Bloomington, Minnesota. “They’ve been misrouted or been on hold forever or they pressed one, then two, then zero to speak to somebody, and they are not getting where they want.” And when people do finally get a human on the phone, “they just sigh and are like, ‘Thank God, finally there’s somebody I can speak to.’ ”

Even if robots don’t always make customers happy, more and more companies are making the leap to bring in machines to take over jobs that used to specifically necessitate human interaction. McDonald’s and Wendy’s both reportedly plan to add touchscreen self-ordering machines to restaurants this year. Facebook is saturated with thousands of customer service chatbots that can do anything from hail an Uber, retrieve movie times, to order flowers for loved ones. And of course, corporations prefer automated labor. As Andy Puzder, CEO of the fast-food chains Carl’s Jr. and Hardee’s and former Trump pick for labor secretary, bluntly put it in an interview with Business Insider last year, robots are “always polite, they always upsell, they never take a vacation, they never show up late, there’s never a slip-and-fall, or an age, sex, or race discrimination case.”

But those robots are backstopped by human beings. How does interacting with more automated technology affect the way we treat each other? …

…

“We know that people treat artificial entities like they’re alive, even when they’re aware of their inanimacy,” writes Kate Darling, a researcher at MIT who studies ethical relationships between humans and robots, in a recent paper on anthropomorphism in human-robot interaction. Sure, robots don’t have feelings and don’t feel pain (not yet, anyway). But as more robots rely on interaction that resembles human interaction, like voice assistants, the way we treat those machines will increasingly bleed into the way we treat each other.

…

It took me a while to realize that what Glaser is talking about are AI systems and not robots as such. (sigh) It’s so easy to conflate the concepts.

AI ethics (Toby Walsh and Suzanne Gildert)

Jack Stilgoe of the Guardian published a brief Oct. 9, 2017 introduction to his more substantive (30 mins.?) podcast interview with Dr. Toby Walsh where they discuss stupid AI amongst other topics (Note: A link has been removed),

Professor Toby Walsh has recently published a book – Android Dreams – giving a researcher’s perspective on the uncertainties and opportunities of artificial intelligence. Here, he explains to Jack Stilgoe that we should worry more about the short-term risks of stupid AI in self-driving cars and smartphones than the speculative risks of super-intelligence.

Professor Walsh discusses the effects that AI could have on our jobs, the shapes of our cities and our understandings of ourselves. As someone developing AI, he questions the hype surrounding the technology. He is scared by some drivers’ real-world experimentation with their not-quite-self-driving Teslas. And he thinks that Siri needs to start owning up to being a computer.

I found this discussion to cast a decidedly different light on the future of robotics and AI. Walsh is much more interested in discussing immediate issues like the problems posed by ‘self-driving’ cars. (Aside: Should we be calling them robot cars?)

One ethical issue Walsh raises is with data regarding accidents. He compares what’s happening with accident data from self-driving (robot) cars to how the aviation industry handles accidents. Hint: accident data involving airplanes is shared. Would you like to guess who does not share their data?

Sharing and analyzing data and developing new safety techniques based on that data has made flying a remarkably safe transportation technology. Walsh argues the same could be done for self-driving cars if companies like Tesla took the attitude that safety is in everyone’s best interests and shared their accident data in a scheme similar to the aviation industry’s.

In an Oct. 12, 2017 article by Matthew Braga for Canadian Broadcasting Corporation (CBC) news online, another ethical issue is raised by Suzanne Gildert, a participant in the Canadian Robotics Roadmap/Strategy meetings mentioned earlier here (Note: Links have been removed),

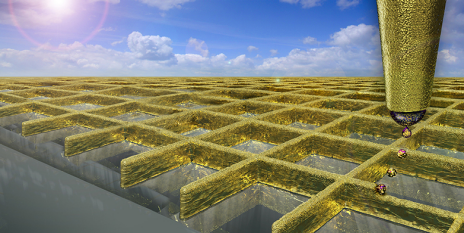

… Suzanne Gildert, the co-founder and chief science officer of Vancouver-based robotics company Kindred. Since 2014, her company has been developing intelligent robots [emphasis mine] that can be taught by humans to perform automated tasks — for example, handling and sorting products in a warehouse.

The idea is that when one of Kindred’s robots encounters a scenario it can’t handle, a human pilot can take control. The human can see, feel and hear the same things the robot does, and the robot can learn from how the human pilot handles the problematic task.

This process, called teleoperation, is one way to fast-track learning by manually showing the robot examples of what its trainers want it to do. But it also poses a potential moral and ethical quandary that will only grow more serious as robots become more intelligent.

“That AI is also learning my values,” Gildert explained during a talk on robot ethics at the Singularity University Canada Summit in Toronto on Wednesday [Oct. 11, 2017]. “Everything — my mannerisms, my behaviours — is all going into the AI.”

…

At its worst, everything from algorithms used in the U.S. to sentence criminals to image-recognition software has been found to inherit the racist and sexist biases of the data on which it was trained.

But just as bad habits can be learned, good habits can be learned too. The question is, if you’re building a warehouse robot like Kindred is, is it more effective to train those robots’ algorithms to reflect the personalities and behaviours of the humans who will be working alongside it? Or do you try to blend all the data from all the humans who might eventually train Kindred robots around the world into something that reflects the best strengths of all?

…

I notice Gildert distinguishes her robots as “intelligent robots” and then focuses on AI and issues with bias which have already arisen with regard to algorithms (see my May 24, 2017 posting about bias in machine learning, AI, and . Note: if you’re in Vancouver on Oct. 26, 2017 and interested in algorithms and bias, there’s a talk being given by Dr. Cathy O’Neil, author of Weapons of Math Destruction, on the topic of Gender and Bias in Algorithms. It’s not free but tickets are here.)

Final comments

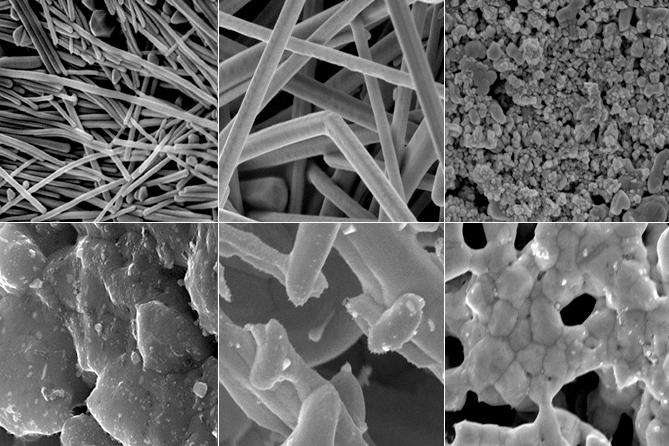

There is one more aspect I want to mention. Even as someone who usually deals with nanobots, it’s easy to start discussing robots as if the humanoid ones are the only ones that exist. To recapitulate, there are humanoid robots, utilitarian robots, intelligent robots, AI, nanobots, microscopic bots, and more, all of which raise questions about ethics and social impacts.

However, there is one more category I want to add to this list: cyborgs. They live amongst us now. Anyone who’s had a hip or knee replacement or a pacemaker or a deep brain stimulator or other such implanted device qualifies as a cyborg. Increasingly too, prosthetics are being introduced and made part of the body. My April 24, 2017 posting features this story,

…

This Case Western Reserve University (CWRU) video accompanies a March 28, 2017 CWRU news release, (h/t ScienceDaily March 28, 2017 news item)

Bill Kochevar grabbed a mug of water, drew it to his lips and drank through the straw.

His motions were slow and deliberate, but then Kochevar hadn’t moved his right arm or hand for eight years.

And it took some practice to reach and grasp just by thinking about it.

Kochevar, who was paralyzed below his shoulders in a bicycling accident, is believed to be the first person with quadriplegia in the world to have arm and hand movements restored with the help of two temporarily implanted technologies. [emphasis mine]

A brain-computer interface with recording electrodes under his skull, and a functional electrical stimulation (FES) system* activating his arm and hand, reconnect his brain to paralyzed muscles.

…

Does a brain-computer interface have an effect on the human brain and, if so, what might that be?

In any discussion (assuming there is funding for it) about ethics and social impact, we might want to invite the broadest range of people possible at an ‘earlyish’ stage (although we’re already pretty far down the ‘automation road’) or, as Jack Stilgoe and Toby Walsh note, technological determinism holds sway.

Once again, here are links for the articles and information mentioned in this double posting,

- My Sept. 22, 2017 posting about the latest nanobot

- Robot Wikipedia entry

- Tessa Vikander’s Sept. 14, 2017 article about robot ethics in the Westender

- Alessandra Maldonado’s Aug. 10, 2017 article for salon.com about sexbots

- Marc Beaulieu’s Sept. 25, 2017 article for Canadian Broadcasting Corporation (CBC) news online about hacking sexbots

- My March 24, 2017 posting about the 2017 Canadian federal budget

- Ajung Moon’s website is here

- The Canadian Robotics Network website

- April Glaser’s Sept. 14, 2017 article about how our interactions with robots may change us on slate.com

- My April 24, 2017 posting about the integration of prosthetics into the brain

- Part one of this two-parter

That’s it!

ETA Oct. 16, 2017: Well, I guess that wasn’t quite ‘it’. BBC’s (British Broadcasting Corporation) Magazine published a thoughtful Oct. 15, 2017 piece titled: Can we teach robots ethics?
