Mutaz Musa, a physician at New York Presbyterian Hospital/Weill Cornell (Department of Emergency Medicine) and software developer in New York City, has penned an eye-opening opinion piece about artificial intelligence (or robots, if you prefer) and the field of radiology. From a June 25, 2018 opinion piece for The Scientist (Note: Links have been removed),

Although artificial intelligence has raised fears of job loss for many, we doctors have thus far enjoyed a smug sense of security. There are signs, however, that the first wave of AI-driven redundancies among doctors is fast approaching. And radiologists seem to be first on the chopping block.

…

Andrew Ng, founder of online learning platform Coursera and former CTO of “China’s Google,” Baidu, recently announced the development of CheXNet, a convolutional neural net capable of recognizing pneumonia and other thoracic pathologies on chest X-rays better than human radiologists. Earlier this year, a Hungarian group developed a similar system for detecting and classifying features of breast cancer in mammograms. In 2017, Adelaide University researchers published details of a bot capable of matching human radiologist performance in detecting hip fractures. And, of course, Google achieved superhuman proficiency in detecting diabetic retinopathy in fundus photographs, a task outside the scope of most radiologists.

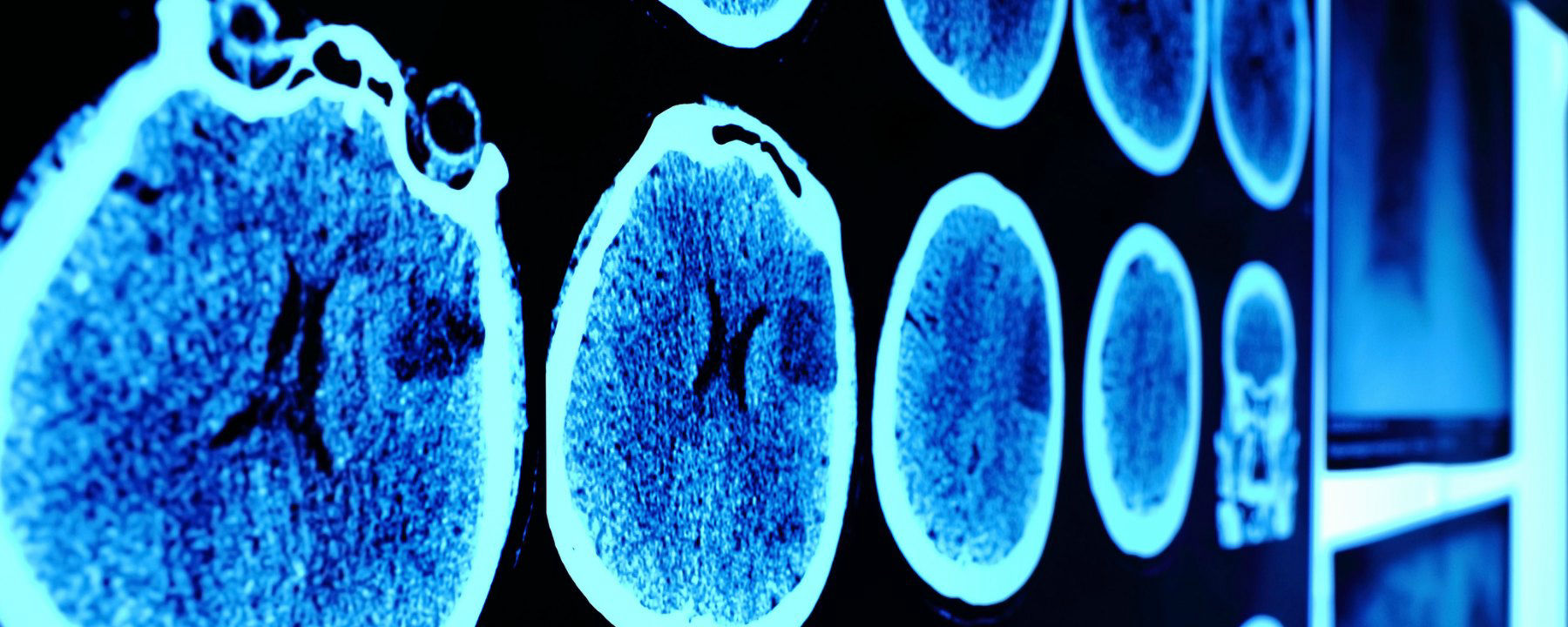

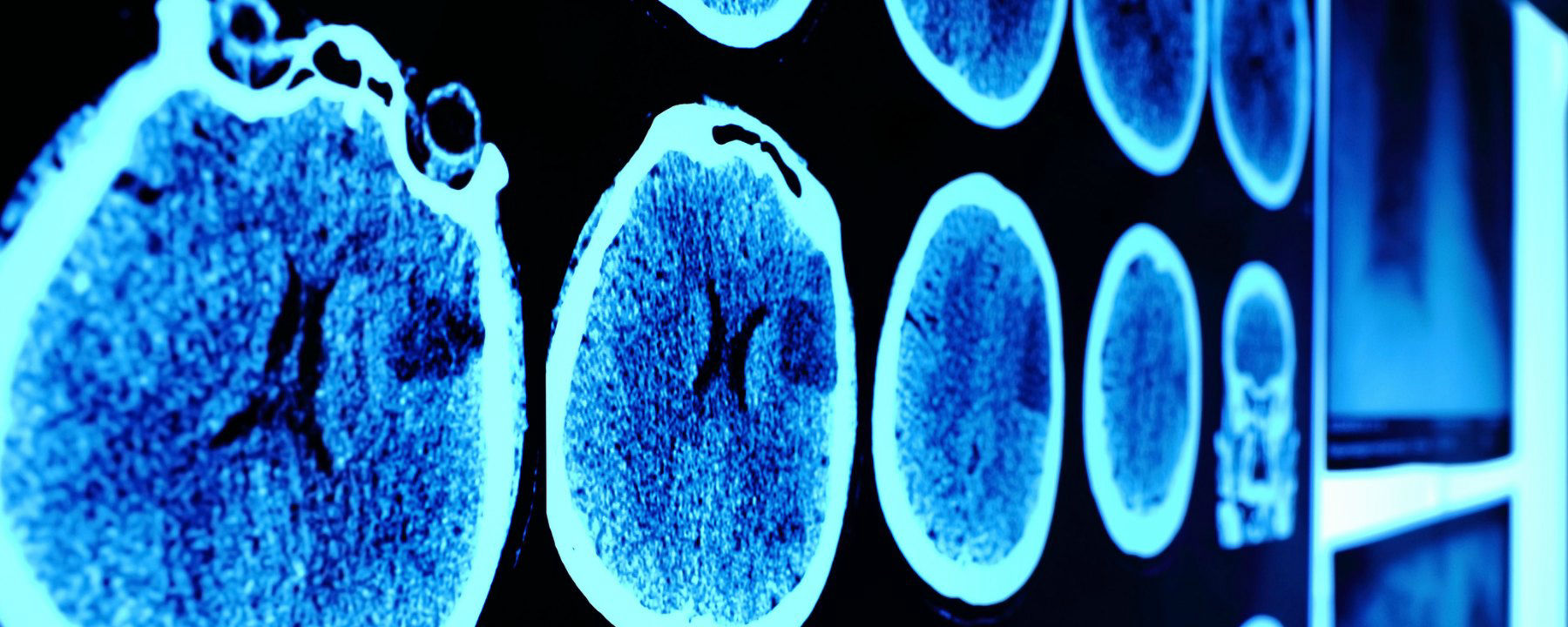

Beyond single, two-dimensional radiographs, a team at Oxford University developed a system for detecting spinal disease from MRI data with a performance equivalent to a human radiologist. Meanwhile, researchers at the University of California, Los Angeles, reported detecting pathology on head CT scans with an error rate more than 20 times lower than a human radiologist.

Although these particular projects are still in the research phase and far from perfect—for instance, often pitting their machines against a limited number of radiologists—the pace of progress alone is telling.

Others have already taken their algorithms out of the lab and into the marketplace. Enlitic, founded by Aussie serial entrepreneur and University of San Francisco researcher Jeremy Howard, is a Bay-Area startup that offers automated X-ray and chest CAT scan interpretation services. Enlitic’s systems putatively can judge the malignancy of nodules up to 50 percent more accurately than a panel of radiologists and identify fractures so small they’d typically be missed by the human eye. One of Enlitic’s largest investors, Capitol Health, owns a network of diagnostic imaging centers throughout Australia, anticipating the broad rollout of this technology. Another Bay-Area startup, Arterys, offers cloud-based medical imaging diagnostics. Arterys’s services extend beyond plain films to cardiac MRIs and CAT scans of the chest and abdomen. And there are many others.

Musa has offered a compelling argument with lots of links to supporting evidence.

[downloaded from https://www.the-scientist.com/news-opinion/opinion–rise-of-the-robot-radiologists-64356]

And the evidence keeps mounting. I just stumbled across this June 30, 2018 news item on Xinhuanet.com,

An artificial intelligence (AI) system scored 2:0 against elite human physicians Saturday in two rounds of competitions in diagnosing brain tumors and predicting hematoma expansion in Beijing.

The BioMind AI system, developed by the Artificial Intelligence Research Centre for Neurological Disorders at the Beijing Tiantan Hospital and a research team from the Capital Medical University, made correct diagnoses in 87 percent of 225 cases in about 15 minutes, while a team of 15 senior doctors only achieved 66-percent accuracy.

The AI also gave correct predictions in 83 percent of brain hematoma expansion cases, outperforming the 63-percent accuracy among a group of physicians from renowned hospitals across the country.

The outcomes for human physicians were quite normal and even better than the average accuracy in ordinary hospitals, said Gao Peiyi, head of the radiology department at Tiantan Hospital, a leading institution on neurology and neurosurgery.

To train the AI, developers fed it tens of thousands of images of nervous system-related diseases that the Tiantan Hospital has archived over the past 10 years, making it capable of diagnosing common neurological diseases such as meningioma and glioma with an accuracy rate of over 90 percent, comparable to that of a senior doctor.

All the cases were real and contributed by the hospital, but never used as training material for the AI, according to the organizer.

Wang Yongjun, executive vice president of the Tiantan Hospital, said that he personally did not care very much about who won, because the contest was never intended to pit humans against technology but to help doctors learn and improve [emphasis mine] through interactions with technology.

“I hope through this competition, doctors can experience the power of artificial intelligence. This is especially so for some doctors who are skeptical about artificial intelligence. I hope they can further understand AI and eliminate their fears toward it,” said Wang.

Dr. Lin Yi who participated and lost in the second round, said that she welcomes AI, as it is not a threat but a “friend.” [emphasis mine]

AI will not only reduce the workload but also push doctors to keep learning and improve their skills, said Lin.

Bian Xiuwu, an academician with the Chinese Academy of Science and a member of the competition’s jury, said there has never been an absolute standard correct answer in diagnosing developing diseases, and the AI would only serve as an assistant to doctors in giving preliminary results. [emphasis mine]

Dr. Paul Parizel, former president of the European Society of Radiology and another member of the jury, also agreed that AI will not replace doctors, but will instead function similar to how GPS does for drivers. [emphasis mine]

Dr. Gauden Galea, representative of the World Health Organization in China, said AI is an exciting tool for healthcare but still in the primitive stages.

Based on the size of its population and the huge volume of accessible digital medical data, China has a unique advantage in developing medical AI, according to Galea.

China has introduced a series of plans in developing AI applications in recent years.

In 2017, the State Council issued a development plan on the new generation of Artificial Intelligence and the Ministry of Industry and Information Technology also issued the “Three-Year Action Plan for Promoting the Development of a New Generation of Artificial Intelligence (2018-2020).”

The Action Plan proposed developing medical image-assisted diagnostic systems to support medicine in various fields.

I note the reference to cars and global positioning systems (GPS) and their role as ‘helpers’; it seems no one at the ‘AI and radiology’ competition has heard of driverless cars. Here’s Musa on those reassuring comments about how the technology won’t replace experts but rather augment their skills,

To be sure, these services frame themselves as “support products” that “make doctors faster,” rather than replacements that make doctors redundant. This language may reflect a reserved view of the technology, though it likely also represents a marketing strategy keen to avoid threatening or antagonizing incumbents. After all, many of the customers themselves, for now, are radiologists.

Radiology isn’t the only area where experts might find themselves displaced.

Eye experts

It seems artificial intelligence (AI) systems have made inroads into the diagnosis of eye diseases. The research got the ‘Fast Company’ treatment (exciting new tech, learn all about it), as can be seen further down in this posting. First, here’s a more restrained announcement, from an August 14, 2018 news item on phys.org (Note: A link has been removed),

An artificial intelligence (AI) system, which can recommend the correct referral decision for more than 50 eye diseases, as accurately as experts has been developed by Moorfields Eye Hospital NHS Foundation Trust, DeepMind Health and UCL [University College London].

The breakthrough research, published online by Nature Medicine, describes how machine-learning technology has been successfully trained on thousands of historic de-personalised eye scans to identify features of eye disease and recommend how patients should be referred for care.

Researchers hope the technology could one day transform the way professionals carry out eye tests, allowing them to spot conditions earlier and prioritise patients with the most serious eye diseases before irreversible damage sets in.

An August 13, 2018 UCL press release, which originated the news item, describes the research and the reasons behind it in more detail,

More than 285 million people worldwide live with some form of sight loss, including more than two million people in the UK. Eye diseases remain one of the biggest causes of sight loss, and many can be prevented with early detection and treatment.

Dr Pearse Keane, NIHR Clinician Scientist at the UCL Institute of Ophthalmology and consultant ophthalmologist at Moorfields Eye Hospital NHS Foundation Trust said: “The number of eye scans we’re performing is growing at a pace much faster than human experts are able to interpret them. There is a risk that this may cause delays in the diagnosis and treatment of sight-threatening diseases, which can be devastating for patients.”

“The AI technology we’re developing is designed to prioritise patients who need to be seen and treated urgently by a doctor or eye care professional. If we can diagnose and treat eye conditions early, it gives us the best chance of saving people’s sight. With further research it could lead to greater consistency and quality of care for patients with eye problems in the future.”

The study, launched in 2016, brought together leading NHS eye health professionals and scientists from UCL and the National Institute for Health Research (NIHR) with some of the UK’s top technologists at DeepMind to investigate whether AI technology could help improve the care of patients with sight-threatening diseases, such as age-related macular degeneration and diabetic eye disease.

Using two types of neural network – mathematical systems for identifying patterns in images or data – the AI system quickly learnt to identify 10 features of eye disease from highly complex optical coherence tomography (OCT) scans. The system was then able to recommend a referral decision based on the most urgent conditions detected.

To establish whether the AI system was making correct referrals, clinicians also viewed the same OCT scans and made their own referral decisions. The study concluded that AI was able to make the right referral recommendation more than 94% of the time, matching the performance of expert clinicians.

The AI has been developed with two unique features which maximise its potential use in eye care. Firstly, the system can provide information that helps explain to eye care professionals how it arrives at its recommendations. This information includes visuals of the features of eye disease it has identified on the OCT scan and the level of confidence the system has in its recommendations, in the form of a percentage. This functionality is crucial in helping clinicians scrutinise the technology’s recommendations and check its accuracy before deciding the type of care and treatment a patient receives.

Secondly, the AI system can be easily applied to different types of eye scanner, not just the specific model on which it was trained. This could significantly increase the number of people who benefit from this technology and future-proof it, so it can still be used even as OCT scanners are upgraded or replaced over time.

The next step is for the research to go through clinical trials to explore how this technology might improve patient care in practice, and regulatory approval before it can be used in hospitals and other clinical settings.

If clinical trials are successful in demonstrating that the technology can be used safely and effectively, Moorfields will be able to use an eventual, regulatory-approved product for free, across all 30 of their UK hospitals and community clinics, for an initial period of five years.

The work that has gone into this project will also help accelerate wider NHS research for many years to come. For example, DeepMind has invested significant resources to clean, curate and label Moorfields’ de-identified research dataset to create one of the most advanced eye research databases in the world.

Moorfields owns this database as a non-commercial public asset, which is already forming the basis of nine separate medical research studies. In addition, Moorfields can also use DeepMind’s trained AI model for future non-commercial research efforts, which could help advance medical research even further.

Mustafa Suleyman, Co-founder and Head of Applied AI at DeepMind Health, said: “We set up DeepMind Health because we believe artificial intelligence can help solve some of society’s biggest health challenges, like avoidable sight loss, which affects millions of people across the globe. These incredibly exciting results take us one step closer to that goal and could, in time, transform the diagnosis, treatment and management of patients with sight threatening eye conditions, not just at Moorfields, but around the world.”

Professor Sir Peng Tee Khaw, director of the NIHR Biomedical Research Centre at Moorfields Eye Hospital NHS Foundation Trust and UCL Institute of Ophthalmology said: “The results of this pioneering research with DeepMind are very exciting and demonstrate the potential sight-saving impact AI could have for patients. I am in no doubt that AI has a vital role to play in the future of healthcare, particularly when it comes to training and helping medical professionals so that patients benefit from vital treatment earlier than might previously have been possible. This shows the transformative research that can be carried out in the UK combining world leading industry and NIHR/NHS hospital/university partnerships.”

Matt Hancock, Health and Social Care Secretary, said: “This is hugely exciting and exactly the type of technology which will benefit the NHS in the long term and improve patient care – that’s why we fund over a billion pounds a year in health research as part of our long term plan for the NHS.”

Here’s a link to and a citation for the study,

Clinically applicable deep learning for diagnosis and referral in retinal disease by Jeffrey De Fauw, Joseph R. Ledsam, Bernardino Romera-Paredes, Stanislav Nikolov, Nenad Tomasev, Sam Blackwell, Harry Askham, Xavier Glorot, Brendan O’Donoghue, Daniel Visentin, George van den Driessche, Balaji Lakshminarayanan, Clemens Meyer, Faith Mackinder, Simon Bouton, Kareem Ayoub, Reena Chopra, Dominic King, Alan Karthikesalingam, Cían O. Hughes, Rosalind Raine, Julian Hughes, Dawn A. Sim, Catherine Egan, Adnan Tufail, Hugh Montgomery, Demis Hassabis, Geraint Rees, Trevor Back, Peng T. Khaw, Mustafa Suleyman, Julien Cornebise, Pearse A. Keane, & Olaf Ronneberger. Nature Medicine (2018) DOI: https://doi.org/10.1038/s41591-018-0107-6 Published 13 August 2018

This paper is behind a paywall.

And now, Melissa Locker’s August 15, 2018 article for Fast Company (Note: Links have been removed),

In a paper published in Nature Medicine on Monday, Google’s DeepMind subsidiary, UCL, and researchers at Moorfields Eye Hospital showed off their new AI system. The researchers used deep learning to create algorithm-driven software that can identify common patterns in data culled from dozens of common eye diseases from 3D scans. The result is an AI that can identify more than 50 diseases with incredible accuracy and can then refer patients to a specialist. Even more important, though, is that the AI can explain why a diagnosis was made, indicating which part of the scan prompted the outcome. It’s an important step in both medicine and in making AIs slightly more human.

The editor or writer has even highlighted the sentence about the system’s accuracy—not just good but incredible!

I will be publishing something soon [my August 21, 2018 posting] which highlights some of the questions one might want to ask about AI and medicine before diving headfirst into this brave new world of medicine.