Springer Nature, the National Center for Nanoscience and Technology, China and the National Science Library of the Chinese Academy of Sciences (CAS) released in both Chinese and English a white paper entitled “Small Science in Big China: An overview of the state of Chinese nanoscience and technology” at NanoChina 2017, an international conference on nanoscience and technology held August 28 and 29 in Beijing. The white paper looks at the rapid growth of China’s nanoscience research into its current role as the world’s leader [emphasis mine], examines China’s strengths and challenges, and makes some suggestions for how its contribution to the field can continue to thrive.

The white paper points out that China has become a strong contributor to nanoscience research in the world, and is a powerhouse of nanotechnology R&D. Some of China’s basic research is leading the world. China’s applied nanoscience research and the industrialization of nanotechnologies have also begun to take shape. These achievements are largely due to China’s strong investment in nanoscience and technology. China’s nanoscience research is also moving from quantitative increase to quality improvement and innovation, with greater emphasis on the applications of nanotechnologies.

“China took an initial step into nanoscience research some twenty years ago, and has since grown its commitment at an unprecedented rate, as it has for scientific research as a whole. Such a growth is reflected both in research quantity and, importantly, in quality. Therefore, I regard nanoscience as a window through which to observe the development of Chinese science, and through which we could analyze how that rapid growth has happened. Further, the experience China has gained in developing nanoscience and related technologies is a valuable resource for the other countries and other fields of research to dig deep into and draw on,” said Arnout Jacobs, President, Greater China, Springer Nature.

The white paper examines China’s research output relative to the rest of the world in terms of research papers, research contributions contained in the Nano database, and patents, providing insight into China’s strengths and expertise in nano research. It also presents the results of a survey of experts in the community on the outlook for, and challenges facing, China’s nanoscience research.

China nano research output: strong rise in quantity and quality

In 1997, around 13,000 nanoscience-related papers were published globally. By 2016, this number had risen to more than 154,000. This corresponds to a compound annual growth rate of 14%, almost four times the 3.7% growth rate of publications across all areas of research. Over the same period, nano-related output from China grew from 820 papers in 1997 to over 52,000 papers in 2016, a compound annual growth rate of 24%.
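The growth rates above follow from the standard compound annual growth rate (CAGR) formula, (end / start) ^ (1 / years) - 1. As a quick check, here is a minimal Python sketch that recomputes them from the paper counts quoted, assuming 19 annual steps between 1997 and 2016. Because the 2016 counts are given as lower bounds ("more than", "over"), the results are approximate.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


# Paper counts quoted in the white paper; 1997 -> 2016 is 19 annual steps.
YEARS = 2016 - 1997

global_rate = cagr(13_000, 154_000, YEARS)  # all nano-related papers worldwide
china_rate = cagr(820, 52_000, YEARS)       # nano-related papers from China
ALL_FIELDS_RATE = 0.037                     # growth across all research areas

print(f"Global nano papers: {global_rate:.1%} per year")
print(f"China nano papers:  {china_rate:.1%} per year")
print(f"Global nano growth vs. all fields: {global_rate / ALL_FIELDS_RATE:.1f}x")
```

Run as written, this gives about 13.9% for global output and 24.4% for China, consistent with the rounded 14% and 24% in the white paper, and a ratio of roughly 3.8 to the all-field rate ("almost four times").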

China’s contribution to the global total has been growing steadily. In 1997, Chinese researchers co-authored just 6% of the nano-related papers contained in the Science Citation Index (SCI). By 2010, this grew to match the output of the United States. They now contribute over a third of the world’s total nanoscience output — almost twice that of the United States.

Additionally, China’s share of the most cited nanoscience papers has kept increasing year on year, with a compound annual growth rate of 22% — more than three times the global rate. It overtook the United States in 2014 and its contribution is now many times greater than that of any other country in the world, manifesting an impressive progression in both quantity and quality.

The rapid growth of nanoscience in China has been enabled by consistent and strong financial support from the Chinese government. As early as 1990, the State Science and Technology Committee, the predecessor of the Ministry of Science and Technology (MOST), launched the Climbing Up project on nanomaterial science. During the 1990s, the National Natural Science Foundation of China (NSFC) also funded nearly 1,000 small-scale projects in nanoscience. In the National Guideline on Medium- and Long-Term Program for Science and Technology Development (2006–2020), issued by the Chinese central government in early 2006, nanoscience was identified as one of four areas of basic research and received the largest share of the research budget among the four. The “brain boomerang”, in which growing numbers of foreign-trained Chinese researchers return from overseas, is another contributor to China’s rapid rise in nanoscience.

The white paper clarifies the role of Chinese institutions, including CAS, in driving China’s rise to become the world’s leader in nanoscience. CAS is currently the world’s largest producer of high-impact nano research, contributing more than twice as many papers to the top 1% most-cited nanoscience literature as its closest competitors. In addition to CAS, five other Chinese institutions rank among the global top 20 by output of top 1% most-cited nanoscience papers: Tsinghua University, Fudan University, Zhejiang University, University of Science and Technology of China and Peking University.

Nano database reveals advantages and focus of China’s nano research

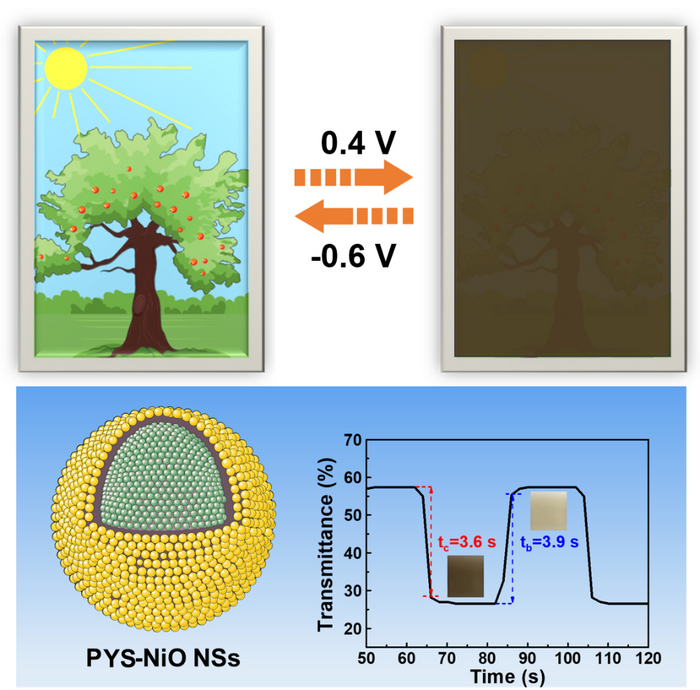

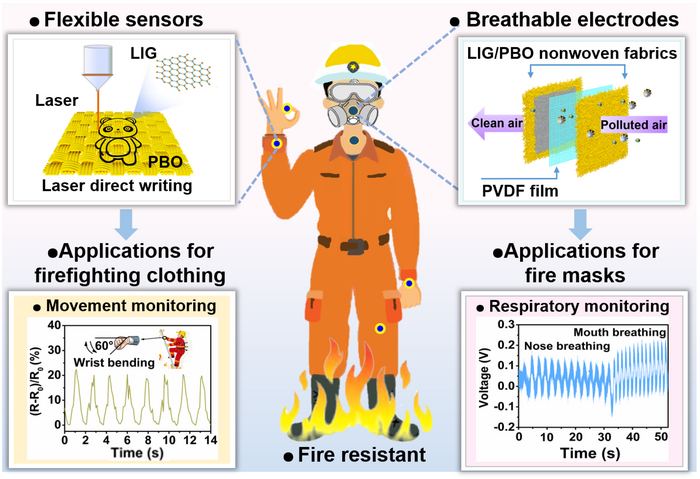

The Nano database (http://nano.nature.com) is a comprehensive platform recently developed by Nature Research, part of Springer Nature. It contains nanoscience-related papers published in 167 peer-reviewed journals, including Advanced Materials, Nano Letters, Nature and Science. Analysis of the nanomaterial-containing articles in the database that were published in the top 30 journals during 2014–2016 shows that Chinese scientists explore a wide range of nanomaterials, the five most common being nanostructured materials, nanoparticles, nanosheets, nanodevices and nanoporous materials.
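A top-five ranking like the one above is, at heart, a frequency count over tagged articles filtered by journal, year and author country. The Python sketch below shows that kind of tally. The `Article` record, its fields, the `top_materials` helper and the toy data are all hypothetical: they do not reflect how the Nano database actually stores or exposes its content, and the "top 30 journals" are stood in for by a small illustrative set.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Article:
    """Hypothetical article record; NOT the Nano database's real schema."""
    journal: str
    year: int
    countries: frozenset  # affiliation countries of the authors
    materials: tuple      # nanomaterial classes tagged on the article


def top_materials(articles, journals, country, years=range(2014, 2017), k=5):
    """Return the k most common nanomaterial classes among articles from
    `journals`, published in `years`, with at least one author in `country`.
    Each class is counted at most once per article."""
    counts = Counter()
    for a in articles:
        if a.journal in journals and a.year in years and country in a.countries:
            counts.update(set(a.materials))
    return counts.most_common(k)


# Toy data for illustration only (not real database content).
TOP_JOURNALS = {"Advanced Materials", "Nano Letters", "Nature", "Science"}
sample = [
    Article("Nano Letters", 2015, frozenset({"CN"}), ("nanoparticles", "nanosheets")),
    Article("Advanced Materials", 2016, frozenset({"CN", "US"}), ("nanoparticles",)),
    Article("Science", 2013, frozenset({"CN"}), ("nanoparticles",)),  # outside 2014-2016
    Article("Nature", 2014, frozenset({"US"}), ("nanodevices",)),     # no Chinese author
]
result = top_materials(sample, TOP_JOURNALS, "CN")
print(result)
```

With this toy data the call prints `[('nanoparticles', 2), ('nanosheets', 1)]`: the 2013 article and the article without a Chinese author are filtered out before counting.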

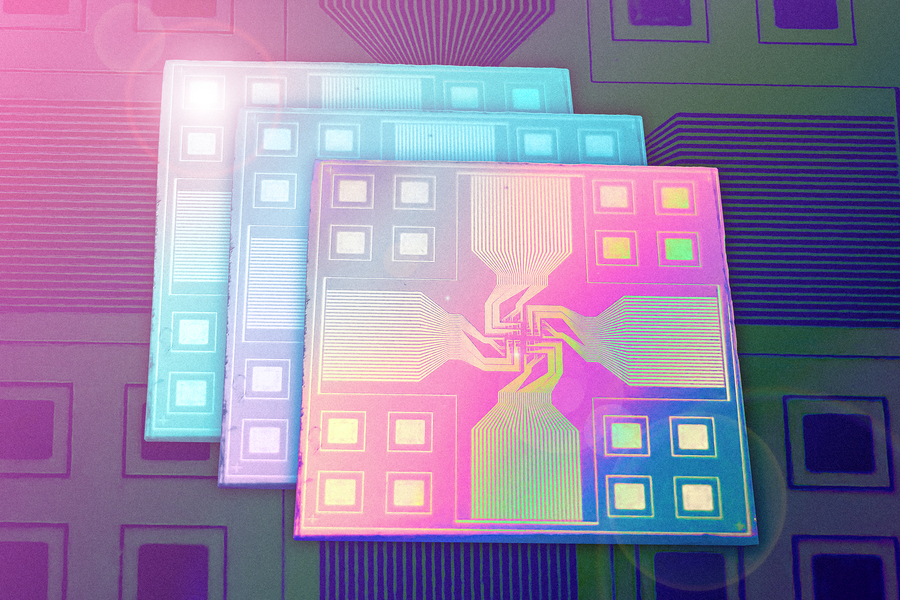

In terms of applications, China has a clear lead in catalysis research, the most common application area among the country’s high-quality nanoscience papers. Chinese nano researchers have also contributed significantly to nanomedicine and energy-related applications. Compared with other research powerhouses, China is relatively weak in nanomaterials for electronics, but robotics and lasers are emerging application areas of nanoscience in China, and nanoscience papers addressing photonics and data storage are also growing strongly. Over 80% of the research from China listed in the database explicitly mentions applications of the nanostructures and nanomaterials described, notably higher than for most other leading nations such as the United States, Germany, the UK, Japan and France.

Nano also reveals the extent of China’s international collaboration in nano research. The percentage of China’s internationally co-authored papers rose from 36% in 2014 to 44% in 2016. This level of international collaboration, similar to that of South Korea, is still much lower than that of Western countries, and it is also growing more slowly than in the United States, France and Germany.

The United States is China’s biggest international collaborator, involved in 55% of China’s internationally co-authored nanoscience papers published in the top 30 journals in the Nano database. Germany, Australia and Japan follow, in descending order.
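Both collaboration figures rest on a simple per-paper test. Here is a minimal Python sketch, assuming a paper counts as internationally co-authored when its author affiliations include at least one country besides the home country; the white paper's exact counting rules may differ (for example, fractional counting), and the `collaboration_stats` function and toy data are hypothetical.

```python
from collections import Counter, defaultdict


def collaboration_stats(papers, home):
    """papers: iterable of (year, set_of_affiliation_countries).

    Returns a pair:
      - per-year share of `home` papers that also list another country;
      - share of `home`'s international papers that involve each partner.
    """
    total = defaultdict(int)  # home-country papers per year
    intl = defaultdict(int)   # of those, papers with a foreign co-author
    partners = Counter()      # international papers involving each partner
    n_intl = 0
    for year, countries in papers:
        if home not in countries:
            continue  # papers without a home-country affiliation are ignored
        total[year] += 1
        others = set(countries) - {home}
        if others:
            intl[year] += 1
            n_intl += 1
            partners.update(others)
    yearly = {y: intl[y] / total[y] for y in sorted(total)}
    partner_share = {c: n / n_intl for c, n in partners.items()} if n_intl else {}
    return yearly, partner_share


# Toy data for illustration only.
papers = [
    (2014, {"CN"}), (2014, {"CN"}), (2014, {"CN"}), (2014, {"CN", "US"}),
    (2016, {"CN"}), (2016, {"CN"}), (2016, {"CN", "US", "DE"}), (2016, {"CN", "AU"}),
    (2016, {"US", "DE"}),  # no Chinese affiliation
]
yearly, partner_share = collaboration_stats(papers, "CN")
print(yearly)         # share of Chinese papers that are international, by year
print(partner_share)  # share of China's international papers per partner
```

Because one paper can involve several partners, the partner shares can add up to more than 100%. That is how a single partner such as the United States can appear on 55% of China's internationally co-authored papers while other countries also appear on many of the same papers.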

China’s patent output: topping the world, mostly applied domestically

Analysis of Clarivate Analytics’ Derwent Innovation Index (DII) database shows that China’s cumulative total of nano-related patent applications over the past 20 years, 209,344 applications or 45% of the global total, is more than twice that of the United States, the second-largest contributor. China has ranked first in the world, ahead of the United States, since 2008.

Five Chinese institutions, namely CAS, Zhejiang University, Tsinghua University, Hon Hai Precision Industry Co., Ltd. and Tianjin University, are among the global top 10 institutional contributors to nano-related patent applications. CAS has topped the global rankings since 2008, with a total of 11,218 patent applications over the past 20 years. Interestingly, outside China most of the other big institutional contributors in the top 10 are commercial enterprises, whereas in China research and academic institutions lead in patent applications.

However, China still files very few nano-related patent applications overseas: only 2.61% of its cumulative total over the past 20 years, compared with nearly 50% for the United States. In some European countries, including the UK and France, more than 70% of patent applications are filed overseas.
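The patent percentages quoted above imply a few back-of-the-envelope numbers that the text leaves implicit. The Python sketch below derives them; these are rough estimates computed from rounded percentages, not figures reported in the white paper.

```python
# Figures quoted in the white paper (past 20 years, cumulative).
china_total = 209_344          # China's nano-related patent applications
china_global_share = 0.45      # China's share of the global total
china_overseas_share = 0.0261  # share of China's applications filed overseas

# Implied global total: China's count divided by its (rounded) share.
implied_global = china_total / china_global_share
# "More than twice as many as the United States" caps the US total.
implied_us_ceiling = china_total / 2
# Implied number of Chinese applications filed overseas.
implied_overseas = china_total * china_overseas_share

print(f"Implied global total:       ~{implied_global:,.0f}")
print(f"Implied US total below:      {implied_us_ceiling:,.0f}")
print(f"Implied Chinese overseas:   ~{implied_overseas:,.0f}")
```

This works out to a global total of roughly 465,000 applications, a US total below about 104,700, and only around 5,500 Chinese applications filed overseas over the 20-year period.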

China has high numbers of patent applications in several popular technical areas of nanotechnology and is strongest in patents for polymer compositions and macromolecular compounds. In comparison, nano-related patent applications in the United States, South Korea and Japan are mainly for electronics or semiconductor devices, with the United States leading the world in the cumulative number of patents for semiconductor devices.

Outlook, opportunities and challenges

The white paper highlights that the rapid rise of China’s research output and patent applications has painted a rosy picture for the development of Chinese nanoscience, and in both the traditionally strong subjects and newly emerging areas, Chinese nanoscience shows great potential.

Several experts interviewed for the survey identified catalysis and catalytic nanomaterials as the most promising nanoscience area for China. The use of nanotechnology in the energy and medical sectors was also considered very promising.

Some of the interviewed experts commented that the industrial impact of China’s nanotechnology is limited and there is still a gap between nanoscience research and the industrialization of nanotechnologies. Therefore, they recommended that the government invest more in applied research to drive the translation of nanoscience research and find ways to encourage enterprises to invest more in R&D.

As more and more young scientists enter the field, competition for research funding is becoming more intense. However, most of the young scientists interviewed were not especially concerned about this; rather, they emphasized that the “soft environment” is more important. They recommended establishing channels through which young researchers’ suggestions and creative ideas can be heard. Some of the young researchers interviewed also felt that the current evaluation system was geared towards past achievements or favoured overseas experience, and recommended developing an improved talent selection mechanism to ensure the sustainable growth of China’s nanoscience.

I have taken a look at the white paper and found it to be well written. It also provides a brief but thorough history of nanotechnology/nanoscience, including a bit of historical information that was new to me. As for the rest of the white paper, it relies on bibliometrics (number of published papers and number of citations) and number of patents filed to lay the groundwork for claiming Chinese leadership in nanotechnology. As I’ve stated many times before, these are problematic measures, but as far as I can determine they are almost the only ones we have. Frankly, as a Canadian, it doesn’t much matter to me since Canada, no matter how you slice or dice it, is always in a lower tier relative to science leadership in major fields. It’s the Americans who might feel inclined to debate leadership with regard to nanotechnology and other major fields, and I leave it to US commentators to take up the cudgels should they be so inclined. The big bonuses here are the history, the glimpse into the Chinese perspective on the field of nanotechnology/nanoscience, and the analysis of weaknesses and strengths.

While the focus is on exciting new technology products from Baidu, the subtext, such as it is, suggests US companies had best keep an eye on their Chinese competitor(s).

Now back to China and nanotechnology leadership and the production of nanoparticles. This announcement was made in a November 17, 2017 news item on Azonano,