The Canadian federal government released its 2023 budget on Tuesday, March 28, 2023. There were no flashy science research announcements in the budget. Trudeau and his team like to trumpet science initiatives and grand plans (even if they’re reannouncing something from a previous budget) but this year, like last year, not so much.

Consequently, this posting about the annual federal budget should have been shorter than usual. What happened?

Partly, it’s the military spending (chapter 5 of the budget in part 2 of this 2023 budget post). For those who are unfamiliar with the link between military scientific research and its impact on the general population, there are a number of inventions and innovations directly due to military research, e.g., plastic surgery, television, and the internet. (You can check a November 6, 2018 essay for The Conversation by Robert Kirby, Professor of Clinical Education and Surgery at Keele University, for more about the impact of World War I on medical research, “World War I: the birth of plastic surgery and modern anaesthesia.”)

So, there’s a lot to be found by inference. Consequently, I found Chapter 3 to also be unexpectedly rich in science and technology efforts.

Throughout both parts of this 2023 Canadian federal budget post, you will find excerpts from individual chapters of the federal budget followed directly by my commentary. My general commentary is reserved for the end.

Sometimes, I have included an item because it piqued my interest. E.g., Canadian agriculture is dependent on Russian fertilizer!!! News to me and I imagine many others. BTW, this budget aims to wean us from this dependency.

Chapter 2: Investing in Public Health Care and Affordable Dental Care

Here goes: from https://www.budget.canada.ca/2023/report-rapport/toc-tdm-en.html,

…

2.1 Investing in Public Health Care

…

Improving Canada’s Readiness for Health Emergencies

Vaccines and other cutting-edge life-science innovations have helped us to take control of the COVID-19 pandemic. To support these efforts, the federal government has committed significant funding towards the revitalization of Canada’s biomanufacturing sector through a Biomanufacturing and Life Sciences Strategy [emphasis mine]. To date, the government has invested more than $1.8 billion in 32 vaccine, therapeutic, and biomanufacturing projects across Canada, alongside $127 million for upgrades to specialized labs at universities across the country. Canada is building a life sciences ecosystem that is attracting major investments from leading global companies, including Moderna, AstraZeneca, and Sanofi.

To build upon the progress of the past three years, the government will explore new ways to be more efficient and effective in the development and production of the vaccines, therapies, and diagnostic tools that would be required for future health emergencies. As a first step, the government will further consult Canadian and international experts on how to best organize our readiness efforts for years to come. …

Gold rush in them thar life sciences

I have covered the rush to capitalize on Canadian life sciences research (with a special emphasis on British Columbia) in various posts including (amongst others): my December 30, 2020 posting “Avo Media, Science Telephone, and a Canadian COVID-19 billionaire scientist,” and my August 23, 2021 posting “Who’s running the life science companies’ public relations campaign in British Columbia (Vancouver, Canada)?” There’s also my August 20, 2021 posting “Getting erased from the mRNA/COVID-19 story,” highlighting how brutal the competition amongst these Canadian researchers can be.

Getting back to the 2023 budget, ‘The Biomanufacturing and Life Sciences Strategy’ mentioned in this latest budget was announced in a July 28, 2021 Innovation, Science and Economic Development Canada news release. You can find the strategy here and an overview of the strategy here. You may want to check out the overview as it features links to,

What We Heard Report: Results of the consultation on biomanufacturing and life sciences capacity in Canada

Ontario’s Strategy: Taking life sciences to the next level

Quebec’s Strategy: 2022–2025 Québec Life Sciences Strategy

Nova Scotia’s Strategy: BioFuture2030

Prince Edward Island’s Strategy: The Prince Edward Island Bioscience Cluster [emphases mine]

2022 saw one government announcement concerning the strategy, from a March 3, 2022 Innovation, Science and Economic Development Canada news release, Note: Links have been removed,

Protecting the health and safety of Canadians and making sure we have the domestic capacity to respond to future health crises are top priorities of the Government of Canada. With the guidance of Canada’s Biomanufacturing and Life Sciences Strategy, the government is actively supporting the growth of a strong, competitive domestic life sciences sector, with cutting-edge biomanufacturing capabilities.

Today [March 3, 2022], the Honourable François-Philippe Champagne, Minister of Innovation, Science and Industry, announced a $92 million investment in adMare BioInnovations to drive company innovation, scale-up and training activities in Canada’s life sciences sector. This investment will help translate commercially promising health research into innovative new therapies and will see Canadian anchor companies provide the training required and drive the growth of Canada’s life science companies.

…

The real action took place earlier this month (March 2023) just prior to the budget. Oddly, I can’t find any mention of these initiatives in the budget document. (Confession: I have not given the 2023 budget a close reading although I have been through the whole budget once and viewed individual chapters more closely a few times.)

This March 2, 2023 (?) Tri-agency Institutional Programs Secretariat news release kicked things off, Note 1: I found the date at the bottom of their webpage; Note 2: Links have been removed,

The Government of Canada’s main priority continues to be protecting the health and safety of Canadians. Throughout the pandemic, the quick and decisive actions taken by the government meant that Canada was able to scale up domestic biomanufacturing capacity, which had been in decline for over 40 years. Since then, the government is rebuilding a strong and competitive biomanufacturing and life sciences sector brick by brick. This includes strengthening the foundations of the life sciences ecosystem through the research and talent of Canada’s world-class postsecondary institutions and research hospitals, as well as fostering increased collaboration with innovative companies.

Today [March 2, 2023?], the Honourable François-Philippe Champagne, Minister of Innovation, Science and Industry, and the Honourable Jean-Yves Duclos, Minister of Health, announced an investment of $10 million in support of the creation of five research hubs [emphasis mine]:

- CBRF PRAIRIE Hub, led by the University of Alberta

- Canada’s Immuno-Engineering and Biomanufacturing Hub, led by The University of British Columbia

- Eastern Canada Pandemic Preparedness Hub, led by the Université de Montréal

- Canadian Pandemic Preparedness Hub, led by the University of Ottawa and McMaster University

- Canadian Hub for Health Intelligence & Innovation in Infectious Diseases, led by the University of Toronto

This investment, made through Stage 1 of the integrated Canada Biomedical Research Fund (CBRF) and Biosciences Research Infrastructure Fund (BRIF) competition, will bolster research and talent development efforts led by the institutions, working in collaboration with their partners. The hubs combine the strengths of academia, industry and the public and not-for-profit sectors to jointly improve pandemic readiness and the overall health and well-being of Canadians.

The multidisciplinary research hubs will accelerate the research and development of next-generation vaccines and therapeutics and diagnostics, while supporting training and development to expand the pipeline of skilled talent. The hubs will also accelerate the translation of promising research into commercially viable products and processes. This investment helps to strengthen the resilience of Canada’s life sciences sector by supporting leading Canadian research in innovative technologies that keep us safe and boost our economy.

Today’s [March 2, 2023?] announcement also launched Stage 2 of the CBRF-BRIF competition. This is a national competition that includes $570 million in available funding for proposals, aimed at cutting-edge research, talent development and research infrastructure projects associated with the selected research hubs. By strengthening research and talent capacity and leveraging collaborations across the entire biomanufacturing ecosystem, Canada will be better prepared to face future pandemics, in order to protect Canadians’ health and safety.

Then, the Innovation, Science and Economic Development Canada’s March 9, 2023 news release made this announcement, Note: Links have been removed,

Since March 2020, major achievements have been made to rebuild a vibrant domestic life sciences ecosystem to protect Canadians against future health threats. The growth of the sector is a top priority for the Government of Canada, and with over $1.8 billion committed to 33 projects to boost our domestic biomanufacturing, vaccine and therapeutics capacity, we are strengthening our resiliency for current health emergencies and our readiness for future ones.

The COVID-19 Vaccine Task Force played a critical role in guiding and supporting the Government of Canada’s COVID-19 vaccine response. Today [March 9, 2023], recognizing the importance of science-based decisions, the Honourable François-Philippe Champagne, Minister of Innovation, Science and Industry, and the Honourable Jean-Yves Duclos, Minister of Health, are pleased to announce the creation of the Council of Expert Advisors (CEA). The 14 members of the CEA, who held their first official meeting earlier this week, will advise the Government of Canada on the long-term, sustainable growth of Canada’s biomanufacturing and life sciences sector, and on how to enhance our preparedness and capacity to protect the health and safety of Canadians.

The membership of the CEA comprises leaders with in-depth scientific, industrial, academic and public health expertise. The CEA co-chairs are Joanne Langley, Professor of Pediatrics and of Community Health and Epidemiology at the Dalhousie University Faculty of Medicine, and Division Head of Infectious Diseases at the IWK Health Centre; and Marco Marra, Professor in Medical Genetics at the University of British Columbia (UBC), UBC Canada Research Chair in Genome Science and distinguished scientist at the BC Cancer Foundation.

The CEA’s first meeting focused on the previous steps taken under Canada’s Biomanufacturing and Life Sciences Strategy and on its path forward. The creation of the CEA is an important milestone in the strategy, as it continues to evolve and adapt to new technologies and changing conditions in the marketplace and life sciences ecosystem. The CEA will also inform on investments that enhance capacity across Canada to support end-to-end production of critical vaccines, therapeutics and essential medical countermeasures, and to ensure that Canadians can reap the full economic benefits of the innovations developed, including well-paying jobs.

As I’m from British Columbia, I’m highlighting this University of British Columbia (UBC) March 17, 2023 news release about their involvement, Note: Links have been removed,

Canada’s biotech ecosystem is poised for a major boost with the federal government announcement today that B.C. will be home to Canada’s Immuno-Engineering and Biomanufacturing Hub (CIEBH).

The B.C.-based research and innovation hub, led by UBC, brings together a coalition of provincial, national and international partners to position Canada as a global epicentre for the development and manufacturing of next-generation immune-based therapeutics.

A primary goal of CIEBH is to establish a seamless drug development pipeline that will enable Canada to respond to future pandemics and other health challenges in fewer than 100 days.

“This hub will build on the strengths of B.C.’s biotech and life sciences industry, and those of our national and global partners, to make Canada a world leader in the development of lifesaving medicines,” said Dr. Deborah Buszard, interim president and vice-chancellor of UBC. “It’s about creating a healthier future for all Canadians. Together with our outstanding alliance of partners, we will ensure Canada is prepared to respond rapidly to future health challenges with homegrown solutions.”

CIEBH is one of five new research hubs announced by the federal government that will work together to improve pandemic readiness and the overall health and well-being of Canadians. Federal funding of $570 million is available over the next four years to support project proposals associated with these hubs in order to advance Canada’s Biomanufacturing and Life Sciences Strategy.

…

More than 50 organizations representing the private, public, not-for-profit and academic sectors have come together to form the hub, creating a rich environment that will bolster biomedical innovation in Canada. Among these partners are leading B.C. biotech companies that played a key role in Canada’s COVID-19 pandemic response and are developing cutting-edge treatments for a range of human diseases.

…

CIEBH, led by UBC, will further align the critical mass of biomedical research strengths concentrated at B.C. academic institutions, including the B.C. Institute of Technology, Simon Fraser University and the University of Victoria, as well as the clinical expertise of B.C. research hospitals and health authorities. With linkages to key partners across Canada, including Dalhousie University, the University of Waterloo, and the Vaccine and Infectious Disease Organization, the hub will create a national network to address gaps in Canada’s drug development pipeline.

…

In recent decades, B.C. has emerged as a global leader in immuno-engineering, a field that is transforming how society treats disease by harnessing and modulating the immune system.

B.C. academic institutions and prominent Canadian companies like Precision NanoSystems, Acuitas Therapeutics and AbCellera have developed significant expertise in advanced immune-based therapeutics such as lipid nanoparticle- and mRNA-based vaccines, engineered antibodies, cell therapies and treatments for antimicrobial resistant infections. UBC professor Dr. Pieter Cullis, a member of CIEBH’s core scientific team, has been widely recognized for his pioneering work developing the lipid nanoparticle delivery technology that enables mRNA therapeutics such as the highly effective COVID-19 mRNA vaccines.

…

As noted previously, I’m a little puzzled that the federal government didn’t mention the investment in these hubs in their budget. They usually trumpet these kinds of initiatives.

On a related track, I’m even more puzzled that the province of British Columbia does not have its own life sciences research strategy in light of that sector’s success. Certainly it seems that Ontario, Quebec, Nova Scotia, and Prince Edward Island are all eager to get a piece of the action. Still, there is a Life Sciences in British Columbia: Sector Profile dated June 2020 and an undated British Columbia Technology and Innovation Policy Framework (likely from some time between July 2017 and January 2020, when Bruce Ralston, whose name is on the document, was the relevant cabinet minister).

In case you missed the link earlier, see my August 23, 2021 posting “Who’s running the life science companies’ public relations campaign in British Columbia (Vancouver, Canada)?” which includes additional information about the BC life sciences sector, federal and provincial funding, the City of Vancouver’s involvement, and other related matters.

Chapter 3: A Made-In-Canada Plan: Affordable Energy, Good Jobs, and a Growing Clean Economy

The most science-focused information is in Chapter 3, from https://www.budget.canada.ca/2023/report-rapport/toc-tdm-en.html,

3.2 A Growing, Clean Economy

More than US$100 trillion in private capital is projected to be spent between now and 2050 to build the global clean economy.

Canada is currently competing with the United States, the European Union, and countries around the world for our share of this investment. To secure our share of this global investment, we must capitalize on Canada’s competitive advantages, including our skilled and diverse workforce, and our abundance of critical resources that the world needs.

The federal government has taken significant action over the past seven years to support Canada’s net-zero economic future. To build on this progress and support the growth of Canada’s clean economy, Budget 2023 proposes a range of measures that will encourage businesses to invest in Canada and create good-paying jobs for Canadian workers.

This made-in-Canada plan follows the federal tiered structure to incent the development of Canada’s clean economy and provide additional support for projects that need it. This plan includes:

- Clear and predictable investment tax credits to provide foundational support for clean technology manufacturing, clean hydrogen, zero-emission technologies, and carbon capture and storage;

- The deployment of financial instruments through the Canada Growth Fund, such as contracts for difference, to absorb certain risks and encourage private sector investment in low-carbon projects, technologies, businesses, and supply chains; and,

- Targeted clean technology and sector supports delivered by Innovation, Science and Economic Development Canada to support battery manufacturing and further advance the development, application, and manufacturing of clean technologies.

Canada’s Potential in Critical Minerals

As a global leader in mining, Canada is in a prime position to provide a stable resource base for critical minerals [emphasis mine] that are central to major global industries such as clean technology, auto manufacturing, health care, aerospace, and the digital economy. For nickel and copper alone, the known reserves in Canada are more than 10 million tonnes, with many other potential sources at the exploration stage.

The Buy North American provisions for critical minerals and electric vehicles in the U.S. Inflation Reduction Act will create opportunities for Canada. In particular, U.S. acceleration of clean technology manufacturing will require robust supply chains of critical minerals that Canada has in abundance. However, to fully unleash Canada’s potential in critical minerals, we need to ensure a framework is in place to accelerate private investment.

Budget 2022 committed $3.8 billion for Canada’s Critical Minerals Strategy to provide foundational support to Canada’s mining sector to take advantage of these new opportunities. The Strategy was published in December 2022.

On March 24, 2023, the government launched the Critical Minerals Infrastructure Fund [emphasis mine; I cannot find a government announcement/news release for this fund]—a new fund announced in Budget 2022 that will allocate $1.5 billion towards energy and transportation projects needed to unlock priority mineral deposits. The new fund will complement other clean energy and transportation supports, such as the Canada Infrastructure Bank and the National Trade Corridors Fund, as well as other federal programs that invest in critical minerals projects, such as the Strategic Innovation Fund.

The new Investment Tax Credit for Clean Technology Manufacturing proposed in Budget 2023 will also provide a significant incentive to boost private investment in Canadian critical minerals projects and create new opportunities and middle class jobs in communities across the country.

An Investment Tax Credit for Clean Technology Manufacturing

Supporting Canadian companies in the manufacturing and processing of clean technologies, and in the extraction and processing of critical minerals, will create good middle class jobs for Canadians, ensure our businesses remain competitive in major global industries, and support the supply chains of our allies around the world.

While the Clean Technology Investment Tax Credit, first announced in Budget 2022, will provide support to Canadian companies adopting clean technologies, the Clean Technology Manufacturing Investment Tax Credit will provide support to Canadian companies that are manufacturing or processing clean technologies and their precursors.

- Budget 2023 proposes a refundable tax credit equal to 30 per cent of the cost of investments in new machinery and equipment used to manufacture or process key clean technologies, and extract, process, or recycle key critical minerals, including:

- Extraction, processing, or recycling of critical minerals essential for clean technology supply chains, specifically: lithium, cobalt, nickel, graphite, copper, and rare earth elements;

- Manufacturing of renewable or nuclear energy equipment;

- Processing or recycling of nuclear fuels and heavy water; [emphases mine]

- Manufacturing of grid-scale electrical energy storage equipment;

- Manufacturing of zero-emission vehicles; and,

- Manufacturing or processing of certain upstream components and materials for the above activities, such as cathode materials and batteries used in electric vehicles.

The investment tax credit is expected to cost $4.5 billion over five years, starting in 2023-24, and an additional $6.6 billion from 2028-29 to 2034-35. The credit would apply to property that is acquired and becomes available for use on or after January 1, 2024, and would no longer be in effect after 2034, subject to a phase-out starting in 2032.

…

3.4 Reliable Transportation and Resilient Infrastructure

…

Supporting Resilient Infrastructure Through Innovation

The Smart Cities Challenge [emphasis mine] was launched in 2017 to encourage cities to adopt new and innovative approaches to improve the quality of life for their residents. The first round of the Challenge resulted in $75 million in prizes across four winning applicants: Montreal, Quebec; Guelph, Ontario; communities of Nunavut; and Bridgewater, Nova Scotia.

New and innovative solutions are required to help communities reduce the risks and impacts posed by weather-related events and disasters triggered by climate change. To help address this issue, the government will be launching a new round of the Smart Cities Challenge later this year, which will focus on using connected technologies, data, and innovative approaches to improve climate resiliency.

…

3.5 Investing in Tomorrow’s Technology

With the best-educated workforce on earth, world-class academic and research institutions, and robust start-up ecosystems across the country, Canada’s economy is fast becoming a global technology leader – building on its strengths in areas like artificial intelligence. Canada is already home to some of the top markets for high-tech careers in North America, including the three fastest growing markets between 2016 and 2021: Vancouver, Toronto, and Quebec City.

However, more can be done to help the Canadian economy reach its full potential. Reversing a longstanding trend of underinvestment in research and development by Canadian business [emphasis mine] is essential to our long-term economic growth.

Budget 2023 proposes new measures to encourage business innovation in Canada, as well as new investments in college research and the forestry industry that will help to build a stronger and more innovative Canadian economy.

Attracting High-Tech Investment to Canada

In recent months, Canada has attracted several new digital and high-tech projects that will support our innovative economy, including:

- Nokia: a $340 million project that will strengthen Canada’s position as a leader in 5G and digital innovation;

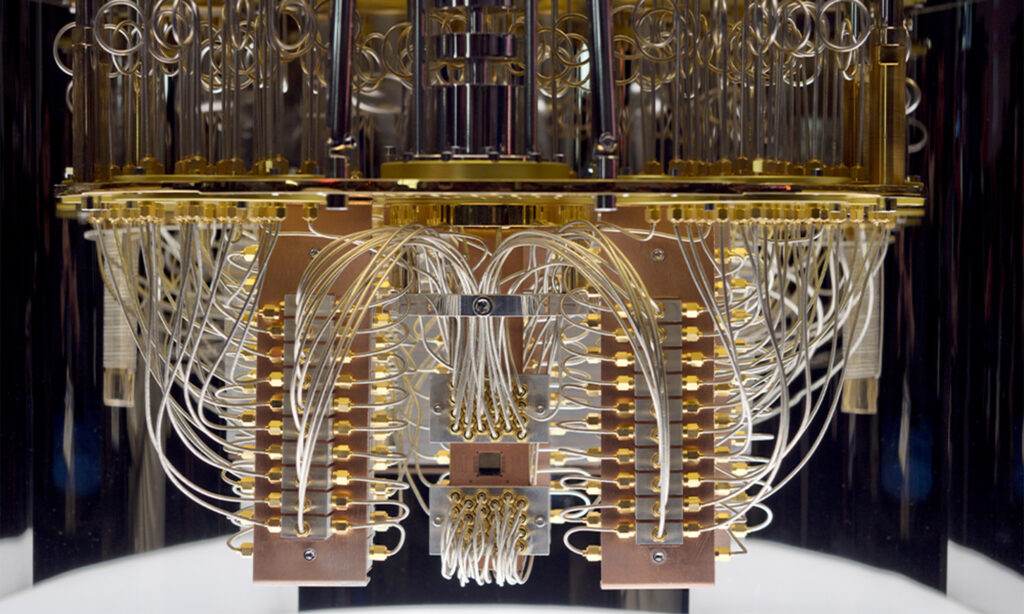

- Xanadu Quantum Technologies: a $178 million project that will support Canada’s leadership in quantum computing;

- Sanctuary Cognitive Systems Corporation: a $121 million project that will boost Canada’s leadership in the global Artificial Intelligence market; and,

- EXFO: a $77 million project to create a 5G Centre of Excellence that aims to develop one of the world’s first Artificial Intelligence-based automated network solutions.

Review of the Scientific Research and Experimental Development Tax Incentive Program

The Scientific Research and Experimental Development (SR&ED) tax incentive program continues to be a cornerstone of Canada’s innovation strategy by supporting research and development with the goal of encouraging Canadian businesses of all sizes to invest in innovation that drives economic growth.

In Budget 2022, the federal government announced its intention to review the SR&ED program to ensure it is providing adequate support and improving the development, retention, and commercialization of intellectual property, including the consideration of adopting a patent box regime. [emphasis mine] The Department of Finance will continue to engage with stakeholders on the next steps in the coming months.

Modernizing Canada’s Research Ecosystem

Canada’s research community and world-class researchers solve some of the world’s toughest problems, and Canada’s spending on higher education research and development, as a share of GDP, has exceeded all other G7 countries.

Since 2016, the federal government has committed more than $16 billion of additional funding to support research and science across Canada. This includes:

- Nearly $4 billion in Budget 2018 for Canada’s research system, including $2.4 billion for the Canada Foundation for Innovation and the granting councils—the Natural Sciences and Engineering Research Council of Canada, the Social Sciences and Humanities Research Council of Canada and the Canadian Institutes of Health Research; [emphases mine]

- More than $500 million in Budget 2019 in total additional support to third-party research and science organizations, in addition to the creation of the Strategic Science Fund, which will announce successful recipients later this year;

- $1.2 billion in Budget 2021 for Pan-Canadian Genomics and Artificial Intelligence Strategies, and a National Quantum Strategy;

- $1 billion in Budget 2021 to the granting councils and the Canada Foundation for Innovation for life sciences researchers and infrastructure; and,

- The January 2023 announcement of Canada’s intention to become a full member in the Square Kilometre Array Observatory, which will provide Canadian astronomers with access to its ground-breaking data. The government is providing up to $269.3 million to support this collaboration.

In order to maintain Canada’s research strength—and the knowledge, innovations, and talent it fosters—our systems to support science and research must evolve. The government has been consulting with stakeholders, including through the independent Advisory Panel on the Federal Research Support System, to seek advice from research leaders on how to further strengthen Canada’s research support system.

The government is carefully considering the Advisory Panel’s advice, with more detail to follow in the coming months on further efforts to modernize the system.

Using College Research to Help Businesses Grow

Canada’s colleges, CEGEPs, and polytechnic institutes use their facilities, equipment, and expertise to solve applied research problems every day. Students at these institutions are developing the skills they need to start good careers when they leave school, and by partnering with these institutions, businesses can access the talent and the tools they need to innovate and grow.

- To help more Canadian businesses access the expertise and research and development facilities they need, Budget 2023 proposes to provide $108.6 million over three years, starting in 2023-24, to expand the College and Community Innovation Program, administered by the Natural Sciences and Engineering Research Council.

Supporting Canadian Leadership in Space

For decades, Canada’s participation in the International Space Station has helped to fuel important scientific advances, and showcased Canada’s ability to create leading-edge space technologies, such as Canadarm2. Canadian space technologies have inspired advances in other fields, such as the NeuroArm, the world’s first robot capable of operating inside an MRI, making previously impossible surgeries possible.

- Budget 2023 proposes to provide $1.1 billion [emphasis mine] over 14 years, starting in 2023-24, on a cash basis, to the Canadian Space Agency [emphasis mine] to continue Canada’s participation in the International Space Station until 2030.

Looking forward, humanity is returning to the moon [emphasis mine]. Canada intends to join these efforts by contributing a robotic lunar utility vehicle to perform key activities in support of human lunar exploration. Canadian participation in the NASA-led Lunar Gateway station—a space station that will orbit the moon—also presents new opportunities for innovative advances in science and technology. Canada is providing Canadarm3 to the Lunar Gateway, and a Canadian astronaut will join Artemis II, the first crewed mission to the moon since 1972. In Budget 2023, the government is providing further support to assist these missions.

- Budget 2023 proposes to provide $1.2 billion [emphasis mine] over 13 years, starting in 2024-25, to the Canadian Space Agency to develop and contribute a lunar utility vehicle to assist astronauts on the moon.

- Budget 2023 proposes to provide $150 million [emphasis mine] over five years, starting in 2023-24, to the Canadian Space Agency for the next phase of the Lunar Exploration Accelerator Program to support Canada’s world-class space industry and help accelerate the development of new technologies.

- Budget 2023 also proposes to provide $76.5 million [emphasis mine] over eight years, starting in 2023-24, on a cash basis, to the Canadian Space Agency in support of Canadian science on the Lunar Gateway station.

Investing in Canada’s Forest Economy

The forestry sector plays an important role in Canada’s natural resource economy [emphasis mine], and is a source of good careers in many rural communities across Canada, including Indigenous communities. As global demand for sustainable forest products grows, continued support for Canada’s forestry sector will help it innovate, grow, and support good middle class jobs for Canadians.

- Budget 2023 proposes to provide $368.4 million over three years, starting in 2023-24, with $3.1 million in remaining amortization, to Natural Resources Canada to renew and update forest sector support, including for research and development, Indigenous and international leadership, and data. Of this amount, $30.1 million would be sourced from existing departmental resources.

Establishing the Dairy Innovation and Investment Fund

The dairy sector is facing a growing surplus of solids non-fat (SNF) [emphasis mine], a by-product of dairy processing. Limited processing capacity for SNF results in lost opportunities for dairy processors and farmers.

- Budget 2023 proposes to provide $333 million over ten years, starting in 2023-24, for Agriculture and Agri-Food Canada to support investments in research and development of new products based on SNF, market development for these products, and processing capacity for SNF-based products more broadly.

Supporting Farmers for Diversifying Away from Russian Fertilizers

Russia’s illegal invasion of Ukraine has resulted in higher prices for nitrogen fertilizers, which has had a notable impact on Eastern Canadian farmers who rely heavily on imported fertilizer.

- Budget 2023 proposes to provide $34.1 million over three years, starting in 2023-24, to Agriculture and Agri-Food Canada’s On-Farm Climate Action Fund to support adoption of nitrogen management practices by Eastern Canadian farmers, that will help optimize the use and reduce the need for fertilizer.

Providing Interest Relief for Agricultural Producers

Farm production costs have increased in Canada and around the world, including as a result of Russia’s illegal invasion of Ukraine and global supply chain disruptions. It is important that Canada’s agricultural producers have access to the cash flow they need to cover these costs until they sell their products.

- Budget 2023 proposes to provide $13 million in 2023-24 to Agriculture and Agri-Food Canada to increase the interest-free limit for loans under the Advance Payments Program from $250,000 to $350,000 for the 2023 program year.

Additionally, the government will consult with provincial and territorial counterparts to explore ways to extend help to small agricultural producers who demonstrate urgent financial need.

Maintaining Livestock Sector Exports with a Foot-and-Mouth Disease Vaccine Bank

Foot-and-Mouth Disease (FMD) is a highly transmissible illness that can affect cattle, pigs, and other cloven-hoofed animals. Recent outbreaks in Asia and Africa have increased the risk of global spread, and a FMD outbreak in Canada would cut off exports for all livestock sectors, with major economic implications. However, the impact of a potential outbreak would be significantly reduced with the early vaccination of livestock.

- Budget 2023 proposes to provide $57.5 million over five years, starting in 2023-24, with $5.6 million ongoing, to the Canadian Food Inspection Agency to establish a FMD vaccine bank for Canada, and to develop FMD response plans. The government will seek a cost-sharing arrangement with provinces and territories.

Canadian economic theory (the staples theory), mining, nuclear energy, quantum science, and more

Critical minerals are getting a lot of attention these days. (They were featured in the 2022 budget; see my April 19, 2022 posting and scroll down to the Mining subhead.) This year, US President Joe Biden, in his first visit to Canada as President, singled out critical minerals at the end of his 28-hour state visit (from a March 24, 2023 CBC news online article by Alexander Panetta; Note: Links have been removed),

There was a pot of gold at the end of President Joe Biden’s jaunt to Canada. It’s going to Canada’s mining sector.

The U.S. military will deliver funds this spring to critical minerals projects in both the U.S. and Canada. The goal is to accelerate the development of a critical minerals industry on this continent.

The context is the United States’ intensifying rivalry with China.

The U.S. is desperate to reduce its reliance on its adversary for materials needed to power electric vehicles, electronics and many other products, and has set aside hundreds of millions of dollars under a program called the Defence Production Act.

The Pentagon already has told Canadian companies they would be eligible to apply. It has said the cash would arrive as grants, not loans.

On Friday [March 24, 2023], before Biden left Ottawa, he promised they’ll get some.

The White House and the Prime Minister’s Office announced that companies from both countries will be eligible this spring for money from a $250 million US fund.

Which Canadian companies? The leaders didn’t say. Canadian officials have provided the U.S. with a list of at least 70 projects that could warrant U.S. funding.

…

“Our nations are blessed with incredible natural resources,” Biden told Canadian parliamentarians during his speech in the House of Commons.

“Canada in particular has large quantities of critical minerals [emphasis mine] that are essential for our clean energy future, for the world’s clean energy future.

…

I don’t believe that Joe Biden has ever heard of the Canadian academic Harold Innis (neither have most Canadians) but Biden is echoing a rather well known theory, in some circles, about Canada’s economy (from the Harold Innis Wikipedia entry),

Harold Adams Innis FRSC (November 5, 1894 – November 9, 1952) was a Canadian professor of political economy at the University of Toronto and the author of seminal works on media, communication theory, and Canadian economic history. He helped develop the staples thesis, which holds that Canada’s culture, political history, and economy have been decisively influenced by the exploitation and export of a series of “staples” such as fur, fish, lumber, wheat, mined metals, and coal. The staple thesis dominated economic history in Canada from the 1930s to 1960s, and continues to be a fundamental part of the Canadian political economic tradition. [all emphases mine]

…

The staples theory is referred to informally as “hewers of wood and drawers of water.”

Critical Minerals Infrastructure Fund

I cannot find an announcement for this fund (perhaps it’s a US government fund?) but there is a March 7, 2023 Natural Resources Canada news release (Note: A link has been removed),

…

Simply put, our future depends on critical minerals. The Government of Canada is committed to investing in this future, which is why the Canadian Critical Minerals Strategy — launched by the Honourable Jonathan Wilkinson, Minister of Natural Resources, in December 2022 — is backed by up to $3.8 billion in federal funding. [emphases mine] Today [March 7, 2023], Minister Wilkinson announced more details on the implementation of this Strategy. Over $344 million in funding is supporting the following five new programs and initiatives:

- Critical Minerals Technology and Innovation Program – $144.4 million for the research, development, demonstration, commercialization and adoption of new technologies and processes that support sustainable growth in Canadian critical minerals value chains and associated innovation ecosystems.

- Critical Minerals Geoscience and Data Initiative – $79.2 million to enhance the quality and availability of data and digital technologies to support geoscience and mapping that will accelerate the efficient and effective development of Canadian critical minerals value chains, including by identifying critical minerals reserves and developing pathways for sustainable mineral development.

- Global Partnerships Program – $70 million to strengthen Canada’s global leadership role in enhancing critical minerals supply chain resiliency through international collaborations related to critical minerals.

- Northern Regulatory Initiative – $40 million to advance Canada’s northern and territorial critical minerals agenda by supporting regulatory dialogue, regional studies, land-use planning, impact assessments and Indigenous consultation.

- Renewal of the Critical Minerals Centre of Excellence (CMCE) – $10.6 million so the CMCE can continue the ongoing development and implementation of the Canadian Critical Minerals Strategy.

Commentary from the mining community

Mariaan Webb wrote a March 29, 2023 article for miningweekly.com about the budget and the response from the mining community (Note: Links have been removed),

The 2023 Budget, delivered by Finance Minister Chrystia Freeland on Tuesday, bolsters the ability of the Canadian mining sector to deliver for the country, recognising the industry’s central role in enabling the transition to a net-zero economy, says Mining Association of Canada (MAC) president and CEO Pierre Gratton.

“Without mining, there are no electric vehicles, no clean power from wind farms, solar panels or nuclear energy, [emphasis mine] and no transmission lines,” said Gratton.

…

What kind of nuclear energy?

There are two kinds of nuclear energy: fission and fusion. (Fission is the one where the atom is split, and it requires mined minerals such as uranium. Fusion is the process that powers the stars. Although much less polluting than fission energy, fusion is not a commercially viable option at this time, nor is it close to being so.)

As far as I’m aware, fusion energy does not require any mined materials. So, Gratton appears to be referring to fission nuclear energy when he’s talking about the mining sector and critical minerals.

I have an October 28, 2022 posting, which provides an overview of fusion energy and the various projects designed to capitalize on it.

Smart Cities in Canada

I was happy to be updated on the Smart Cities Challenge, which I last wrote about in a March 20, 2018 posting (scroll down to the “Smart Cities, the rest of the country, and Vancouver” subhead). I notice that the successful applicants are from Montreal, Quebec; Guelph, Ontario; communities of Nunavut; and Bridgewater, Nova Scotia. It’s about time northern communities got some attention. It’s hard not to notice that central Canada (i.e., Ontario and Quebec) again dominates.

I look forward to hearing more about the new, upcoming challenge.

The quantum crew

I first made note of what appears to be a fracture in the Canadian quantum community in a May 4, 2021 posting (scroll down to the National Quantum Strategy subhead) about the 2021 budget. I made note of it again in a July 26, 2022 posting (scroll down to the Canadian quantum scene subhead).

In my excerpts from the 3.5 Investing in Tomorrow’s Technology section of the 2023 budget, Xanadu Quantum Technologies, headquartered in Toronto, Ontario, is singled out along with three other companies (none of which are in the quantum computing field). Oddly, D-Wave Systems (located in British Columbia), which as far as I’m aware is the star of Canada’s quantum computing sector, has yet to be singled out in any budget I’ve seen. (I’m estimating I’ve reviewed about 10 budgets.)

Canadians in space

Shortly after the 2023 budget was presented, Canadian astronaut Jeremy Hansen was revealed as one of four astronauts to go on a mission to orbit the moon. From an April 3, 2023 Canadian Broadcasting Corporation (CBC) news online article by Nicole Mortillaro (Note: A link has been removed),

Jeremy Hansen is heading to the moon.

The 47-year old Canadian astronaut was announced today as one of four astronauts — along with Christina Koch, Victor Glover and Reid Wiseman — who will be part of NASA’s [US National Aeronautics and Space Administration] Artemis II mission.

…

Hansen was one of four active Canadian astronauts that included Jennifer Sidey-Gibbons, Joshua Kutryk and David Saint-Jacques vying for a seat on the Orion spacecraft set to orbit the moon.

…

Artemis II is the second step in NASA’s mission to return astronauts to the surface of the moon.

The astronauts won’t be landing, but rather they will orbit for 10 days in the Orion spacecraft, testing key components to prepare for Artemis III that will place humans back on the moon some time in 2025 for the first time since 1972.

Canada gets a seat on Artemis II due to its contributions to Lunar Gateway, a space station that will orbit the moon. But Canada is also building a lunar rover provided by Canadensys Aerospace.

…

On Monday [April 3, 2023], Hansen noted there are two reasons a Canadian is going to the moon, adding that it “makes me smile when I say that.”

The first, he said, is American leadership, and the decision to curate an international team.

“The second reason is Canada’s can-do attitude,” he said proudly.

…

In addition to our “can-do attitude,” we’re also spending some big money, i.e., the Canadian government has proposed in its 2023 budget some $2.5B for various space and lunar efforts over the next several years.

Chapter 3 odds and sods

First seen in the 2022 budget, the patent box regime makes a second appearance in the 2023 budget where apparently ‘stakeholders will be engaged’ later this year. At least, they’re not rushing into this. (For the original announcement and an explanation of a patent box regime, see my April 19, 2022 budget review; scroll down to the Review of Tax Support to R&D and Intellectual Property subhead.)

I’m happy to see the Dairy Innovation and Investment Fund. I’m particularly happy to see a focus on finding uses for solids non-fat (SNF) by providing “$333 million over ten years, starting in 2023-24, … research and development of new products based on SNF [emphasis mine], market development for these products, and processing capacity for SNF-based products more broadly.”

This investment contrasts with the approach to cellulose nanocrystals (CNC) derived from wood (i.e., the forest economy), where the Canadian government invested heavily in research and even opened a production facility under the auspices of a company, CelluForce. It was a little problematic.

By 2013, the facility had a stockpile of CNC and nowhere to sell it. That’s right, no market for CNC as there had been no product development. (See my May 8, 2012 posting where that lack is mentioned; specifically, there’s a quote from Tim Harper in an excerpted Globe and Mail article. My August 17, 2016 posting notes that the stockpile was diminishing. The CelluForce website makes no mention of it now in 2023.)

It’s good to see the government’s emphasis on research into developing products based on SNF, especially after the CelluForce stockpile and in light of US President Joe Biden’s recent enthusiasm over our critical minerals.

Chapter 4: Advancing Reconciliation and Building a Canada That Works for Everyone

Chapter 4: Advancing Reconciliation and Building a Canada That Works for Everyone offers this, from https://www.budget.canada.ca/2023/report-rapport/toc-tdm-en.html,

4.3 Clean Air and Clean Water

…

Progress on Biodiversity

Montreal recently hosted the Fifteenth Conference of the Parties (COP15) to the United Nations Convention on Biological Diversity, which led to a new Post-2020 Global Biodiversity Framework. During COP15, Canada announced new funding for biodiversity and conservation measures at home and abroad that will support the implementation of the Global Biodiversity Framework, including $800 million to support Indigenous-led conservation within Canada through the innovative Project Finance for Permanence model.

Protecting Our Freshwater

Canada is home to 20 per cent of the world’s freshwater supply. Healthy lakes and rivers are essential to Canadians, communities, and businesses across the country. Recognizing the threat to freshwater caused by climate change and pollution, the federal government is moving forward to establish a new Canada Water Agency and make major investments in a strengthened Freshwater Action Plan.

- Budget 2023 proposes to provide $650 million over ten years, starting in 2023-24, to support monitoring, assessment, and restoration work in the Great Lakes, Lake Winnipeg, Lake of the Woods, St. Lawrence River, Fraser River, Saint John River, Mackenzie River, and Lake Simcoe. Budget 2023 also proposes to provide $22.6 million over three years, starting in 2023-24, to support better coordination of efforts to protect freshwater across Canada.

- Budget 2023 also proposes to provide $85.1 million over five years, starting in 2023-24, with $0.4 million in remaining amortization and $21 million ongoing thereafter to support the creation of the Canada Water Agency [emphasis mine], which will be headquartered in Winnipeg. By the end of 2023, the government will introduce legislation that will fully establish the Canada Water Agency as a standalone entity.

…

Cleaner and Healthier Ports

Canada’s ports are at the heart of our supply chains, delivering goods to Canadians and allowing our businesses to reach global markets. As rising shipping levels enable and create economic growth and good jobs, the federal government is taking action to protect Canada’s coastal ecosystems and communities.

- Budget 2023 proposes to provide $165.4 million over seven years, starting in 2023-24, to Transport Canada to establish a Green Shipping Corridor Program to reduce the impact of marine shipping on surrounding communities and ecosystems. The program will help spur the launch of the next generation of clean ships, invest in shore power technology, and prioritize low-emission and low-noise vessels at ports.

Water, water everywhere

I wasn’t expecting to find mention of establishing a Canada Water Agency, and details are sketchy other than that it will be in Winnipeg, Manitoba, and that there will be government funding. Fingers crossed that this agency will do some good work (whatever that might be). Personally, I’d like to see some action with regard to droughts.

British Columbia (BC), where I live and which most of us think of as ‘water rich’, is suffering under conditions such that our rivers and lakes are at very low levels, according to an April 6, 2023 article by Glenda Luymes for the Vancouver Sun (print version, p. A4),

…

On the North American WaterWatch map, which codes river flows using a series of coloured dots, high flows are represented in various shades of blue while low flows are represented in red hues. On Wednesday [April 5, 2023], most of BC was speckled red, brown and orange, with the majority of the province’s rivers flowing “much below normal.”

“It does not bode well for the fish populations,” said Marvin Rosenau, a fisheries and ecosystems instructor at BCIT [British Columbia Institute of Technology]. …

Rosenau said low water last fall [2022], when much of BC was in the grip of drought, decreased salmon habitat during spawning season. …

…

BC has already seen small early season wildfires, including one near Merritt last weekend [April 1/2, 2023]. …

Getting back to the Canada Water Agency, there’s this March 29, 2023 CBC news online article by Bartley Kives,

The 2023 federal budget calls for a new national water agency to be based in Winnipeg, provided Justin Trudeau’s Liberal government remains in power long enough to see it established [emphasis mine] in the Manitoba capital.

The budget announced on Tuesday [March 28, 2023] calls for the creation of the Canada Water Agency, a new federal entity with a headquarters in Winnipeg.

While the federal government is still determining precisely what the new agency will do, one Winnipeg-based environmental organization expects it to become a one-stop shop for water science, water quality assessment and water management [emphasis mine].

“This is something that we don’t actually have in this country at the moment,” said Matt McCandless, a vice-president for the non-profit International Institute for Sustainable Development.

Right now, municipalities, provinces and Indigenous authorities take different approaches to managing water quality, water science, flooding and droughts, said McCandless, adding a national water agency could provide more co-ordination.

…

For now, it’s unknown how many employees will be based at the Canada Water Agency’s Winnipeg headquarters. According to the budget, legislation to create the agency won’t be introduced until later this year [emphasis mine].

That means the Winnipeg headquarters likely won’t materialize before 2024, one year before the Trudeau minority government faces re-election, assuming it doesn’t lose the confidence of the House of Commons beforehand [emphasis mine].

Nonetheless, several Canadian cities and provinces were vying for the Canada Water Agency’s headquarters, including Manitoba.

…

The budget also calls for $65 million worth of annual spending on lake science and restoration, with an unstated fraction of that cash devoted to Lake Winnipeg.

McCandless calls the spending on water science an improvement over previous budgets.

…

Kives seems a tad jaundiced but you get that way (confession: I have too) when covering government spending promises.

Part 2 (military spending and general comments) will be posted sometime during the week of April 24-28, 2023.