This story takes a few twists and turns. First, ‘brain chips’, as they’re sometimes called, would theoretically allow computers to learn and function like human brains. (Note: There’s another type of ‘brain chip’ which could be implanted in human brains to help deal with diseases such as Parkinson’s and Alzheimer’s. *Today’s [June 26, 2015] earlier posting about an artificial neuron points at some of the work being done in this area.*)

Returning to the ‘brain chip’ at hand. Second, there’s a company called BrainChip, which has one patent and another pending for, yes, a ‘brain chip’.

The company, BrainChip, founded in Australia and now headquartered in California’s Silicon Valley, recently sparked some investor interest in Australia. From an April 7, 2015 article by Timna Jacks for the Australian Financial Review,

Former mining stock Aziana Limited has whet Australian investors’ appetite for science fiction, with its share price jumping 125 per cent since it announced it was acquiring a US-based tech company called BrainChip, which promises artificial intelligence through a microchip that replicates the neural system of the human brain.

Shares in the company closed at 9¢ before the Easter long weekend, having been priced at just 4¢ when the backdoor listing of BrainChip was announced to the market on March 18.

Creator of the patented digital chip, Peter Van Der Made told The Australian Financial Review the technology has the capacity to learn autonomously, due to its composition of 10,000 biomimic neurons, which, through a process known as synaptic time-dependent plasticity, can form memories and associations in the same way as a biological brain. He said it works 5000 times faster and uses a thousandth of the power of the fastest computers available today.

…

Mr Van Der Made is inviting technology partners to license the technology for their own chips and products, and is donating the technology to university laboratories in the US for research.

The Netherlands-born Australian, now based in southern California, was inspired to create the brain-like chip in 2004, after working at the IBM Internet Security Systems for two years, where he was chief scientist for behaviour analysis security systems. …

A June 23, 2015 article by Tony Malkovic on phys.org provides a few more details about BrainChip and about the deal,

Mr Van der Made and the company, also called BrainChip, are now based in Silicon Valley in California and he returned to Perth last month as part of the company’s recent merger and listing on the Australian Stock Exchange.

He says BrainChip has the ability to learn autonomously, evolve and associate information and respond to stimuli like a brain.

…

Mr Van der Made says the company’s chip technology is more than 5,000 times faster than other technologies, yet uses only 1/1,000th of the power.

“It’s a hardware only solution, there is no software to slow things down,” he says.

“It doesn’t execute instructions, it learns and supplies what it has learnt to new information.

…

“BrainChip is on the road to position itself at the forefront of artificial intelligence,” he says.

“We have a clear advantage, at least 10 years, over anybody else in the market, that includes IBM.”

BrainChip is aiming at the global semiconductor market involving almost anything that involves a microprocessor.

You can find out more about the company, BrainChip, here. The site does have a little more information about the technology,

Spiking Neuron Adaptive Processor (SNAP)

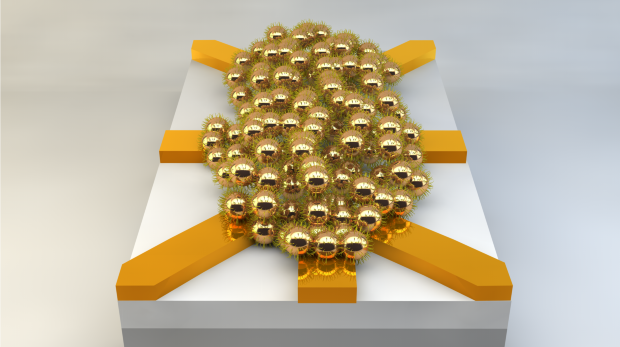

BrainChip’s inventor, Peter van der Made, has created an exciting new Spiking Neural Networking technology that has the ability to learn autonomously, evolve and associate information just like the human brain. The technology is developed as a digital design containing a configurable “sea of biomimic neurons”.

The technology is fast, completely digital, and consumes very low power, making it feasible to integrate large networks into portable battery-operated products, something that has never been possible before.

BrainChip neurons autonomously learn through a process known as STDP (Synaptic Time Dependent Plasticity). BrainChip’s fully digital neurons process input spikes directly in hardware. Sensory neurons convert physical stimuli into spikes. Learning occurs when the input is intense, or repeating through feedback and this is directly correlated to the way the brain learns.

Computing Artificial Neural Networks (ANNs)

The brain consists of specialized nerve cells that communicate with one another. Each such nerve cell is called a Neuron. The inputs are memory nodes called synapses. When the neuron associates information, it produces a ‘spike’ or a ‘spike train’. Each spike is a pulse that triggers a value in the next synapse. Synapses store values, similar to the way a computer stores numbers. In combination, these values determine the function of the neural network. Synapses acquire values through learning.

In Artificial Neural Networks (ANNs) this complex function is generally simplified to a static summation and compare function, which severely limits computational power. BrainChip has redefined how neural networks work, replicating the behaviour of the brain. BrainChip’s artificial neurons are completely digital, biologically realistic resulting in increased computational power, high speed and extremely low power consumption.

The Problem with Artificial Neural Networks

Standard ANNs, running on computer hardware are processed sequentially; the processor runs a program that defines the neural network. This consumes considerable time and because these neurons are processed sequentially, all this delayed time adds up resulting in a significant linear decline in network performance with size.

…

BrainChip neurons are all mapped in parallel. Therefore the performance of the network is not dependent on the size of the network providing a clear speed advantage. So because there is no decline in performance with network size, learning also takes place in parallel within each synapse, making STDP learning very fast.

A hardware solution

BrainChip’s digital neural technology is the only custom hardware solution that is capable of STDP learning. The hardware requires no coding and has no software as it evolves learning through experience and user direction.

The BrainChip neuron is unique in that it is completely digital, behaves asynchronously like an analog neuron, and has a higher level of biological realism. It is more sophisticated than software neural models and is many orders of magnitude faster. The BrainChip neuron consists entirely of binary logic gates with no traditional CPU core. Hence, there are no ‘programming’ steps. Learning and training takes the place of programming and coding. Like a child learning a task for the first time.

Software ‘neurons’, to compromise for limited processing power, are simplified to a point where they do not resemble any of the features of a biological neuron. This is due to the sequential nature of computers, whereby all data has to pass through a central processor in chunks of 16, 32 or 64 bits. In contrast, the brain’s network is parallel and processes the equivalent of millions of data bits simultaneously.

A significantly faster technology

Performing emulation in digital hardware has distinct advantages over software. As software is processed sequentially, one instruction at a time, Software Neural Networks perform slower with increasing size. Parallel hardware does not have this problem and maintains the same speed no matter how large the network is. Another advantage of hardware is that it is more power efficient by several orders of magnitude.

The speed of the BrainChip device is unparalleled in the industry.

For large neural networks a GPU (Graphics Processing Unit) is ~70 times faster than the Intel i7 executing a similar size neural network. The BrainChip neural network is faster still and takes far fewer CPU (Central Processing Unit) cycles, with just a little communication overhead, which means that the CPU is available for other tasks. The BrainChip network also responds much faster than a software network accelerating the performance of the entire system.

The BrainChip network is completely parallel, with no sequential dependencies. This means that the network does not slow down with increasing size.

Endorsed by the neuroscience community

A number of the world’s pre-eminent neuroscientists have endorsed the technology and are agreeing to joint development projects.

BrainChip has the potential to become the de facto standard for all autonomous learning technology and computer products.

Patented

BrainChip’s autonomous learning technology patent was granted on the 21st September 2008 (Patent number US 8,250,011 “Autonomous learning dynamic artificial neural computing device and brain inspired system”). BrainChip is the only company in the world to have achieved autonomous learning in a network of Digital Neurons without any software.

A prototype Spiking Neuron Adaptive Processor was designed as a ‘proof of concept’ chip.

The first tests were completed at the end of 2007 and this design was used as the foundation for the US patent application which was filed in 2008. BrainChip has also applied for a continuation-in-part patent filed in 2012, the “Method and System for creating Dynamic Neural Function Libraries”, US Patent Application 13/461,800 which is pending.

Van der Made doesn’t seem to have published any papers on this work and the description of the technology provided on the website is frustratingly vague. There are many acronyms for processes but no mention of what this hardware might be. For example, is it based on a memristor or some kind of atomic ionic switch or something else altogether?

It would be interesting to find out more but, presumably, van der Made wishes to withhold details. There are many companies following the same strategy while pursuing what they view as a business advantage.
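For readers wondering what STDP involves, here is a minimal sketch of the textbook pair-based rule (in the research literature the acronym is more commonly expanded as spike-timing-dependent plasticity). This is not BrainChip’s implementation, which the company has not described; it is ordinary software, and the function names and constants (`A_PLUS`, `A_MINUS`, `TAU_MS`) are my own illustrative assumptions. The idea is that a synapse is strengthened when its input spike arrives shortly before the neuron fires and weakened when it arrives shortly after.

```python
import math

# Illustrative constants: assumptions for this sketch, not BrainChip values.
A_PLUS = 0.05    # largest possible weight increase for one spike pair
A_MINUS = 0.055  # largest possible weight decrease for one spike pair
TAU_MS = 20.0    # width of the learning window, in milliseconds


def stdp_delta(t_pre_ms: float, t_post_ms: float) -> float:
    """Weight change for one input (pre) / output (post) spike pair."""
    dt = t_post_ms - t_pre_ms
    if dt > 0:
        # Input spike preceded the output spike: strengthen the synapse,
        # more so the closer together the two spikes were.
        return A_PLUS * math.exp(-dt / TAU_MS)
    if dt < 0:
        # Input spike arrived after the output spike: weaken the synapse.
        return -A_MINUS * math.exp(dt / TAU_MS)
    return 0.0  # simultaneous spikes: no change in this simple version


def update_weight(weight: float, t_pre_ms: float, t_post_ms: float) -> float:
    """Apply the STDP change and keep the weight within [0, 1]."""
    return min(1.0, max(0.0, weight + stdp_delta(t_pre_ms, t_post_ms)))


if __name__ == "__main__":
    w = 0.5
    w = update_weight(w, t_pre_ms=10.0, t_post_ms=15.0)  # input came first
    print("after a causal pair:", w)
    w = update_weight(w, t_pre_ms=30.0, t_post_ms=22.0)  # input came late
    print("after an anti-causal pair:", w)
```

Whatever BrainChip’s hardware does, the company’s claim appears to be that an update of this general kind happens inside every synapse at once rather than in a loop like the one a program would run.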

* Artificial neuron link added June 26, 2015 at 1017 hours PST.