Given R. Stanley Williams’s presence on the author list, it’s a bit surprising that there’s no mention of memristors. If I read the signs rightly, the interest is shifting, in some cases, from the memristor to a more comprehensive grouping of circuit elements referred to as ‘neuristors’ or, more likely, ‘nanocircuit elements’ in the effort to achieve brainlike (neuromorphic) computing (engineering). (Williams was the leader of the HP Labs team that offered proof and more of the memristor’s existence, which I mentioned here in an April 5, 2010 posting. There are many, many postings on this topic here; try ‘memristors’ or ‘brainlike computing’ for your search terms.)

A September 24, 2020 news item on ScienceDaily announces a recent development in the field of neuromorphic engineering,

In the September [2020] issue of the journal Nature, scientists from Texas A&M University, Hewlett Packard Labs and Stanford University have described a new nanodevice that acts almost identically to a brain cell. Furthermore, they have shown that these synthetic brain cells can be joined together to form intricate networks that can then solve problems in a brain-like manner.

“This is the first study where we have been able to emulate a neuron with just a single nanoscale device, which would otherwise need hundreds of transistors,” said Dr. R. Stanley Williams, senior author on the study and professor in the Department of Electrical and Computer Engineering. “We have also been able to successfully use networks of our artificial neurons to solve toy versions of a real-world problem that is computationally intense even for the most sophisticated digital technologies.”

In particular, the researchers have demonstrated proof of concept that their brain-inspired system can identify possible mutations in a virus, which is highly relevant for ensuring the efficacy of vaccines and medications for strains exhibiting genetic diversity.

…

A September 24, 2020 Texas A&M University news release (also on EurekAlert) by Vandana Suresh, which originated the news item, provides some context for the research,

Over the past decades, digital technologies have become smaller and faster largely because of the advancements in transistor technology. However, these critical circuit components are fast approaching their limit of how small they can be built, initiating a global effort to find a new type of technology that can supplement, if not replace, transistors.

In addition to this “scaling-down” problem, transistor-based digital technologies have other well-known challenges. For example, they struggle at finding optimal solutions when presented with large sets of data.

“Let’s take a familiar example of finding the shortest route from your office to your home. If you have to make a single stop, it’s a fairly easy problem to solve. But if for some reason you need to make 15 stops in between, you have 43 billion routes to choose from,” said Dr. Suhas Kumar, lead author on the study and researcher at Hewlett Packard Labs. “This is now an optimization problem, and current computers are rather inept at solving it.”
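The news release doesn't say how the 43 billion figure was counted. One reading that lands on roughly that number, and this is an assumption rather than anything stated in the release, is to treat the 15 stops as points on a round trip where neither the starting point nor the direction of travel matters, which gives (15 − 1)!/2 distinct routes. A short Python check of that arithmetic (the function name is just for illustration):

```python
from math import factorial


def closed_tour_count(n_locations: int) -> int:
    """Count distinct round trips through n locations.

    Assumes the start point and the direction of travel do not matter,
    which gives (n - 1)! / 2 tours. This counting convention is an
    assumption; the news release does not state one.
    """
    if n_locations < 3:
        return 1  # with one or two locations there is only one round trip
    return factorial(n_locations - 1) // 2


if __name__ == "__main__":
    print(closed_tour_count(15))  # 43589145600, i.e. about 43.6 billion
    print(factorial(15))          # 1307674368000 orderings if order and direction both count
```

Either way of counting makes the same point: the number of candidate routes grows factorially with the number of stops, so checking them one by one quickly becomes impractical.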

Kumar added that another arduous task for digital machines is pattern recognition, such as identifying a face as the same regardless of viewpoint or recognizing a familiar voice buried within a din of sounds.

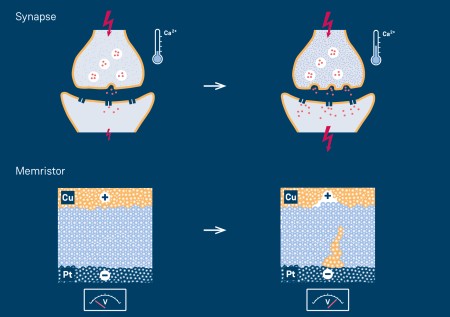

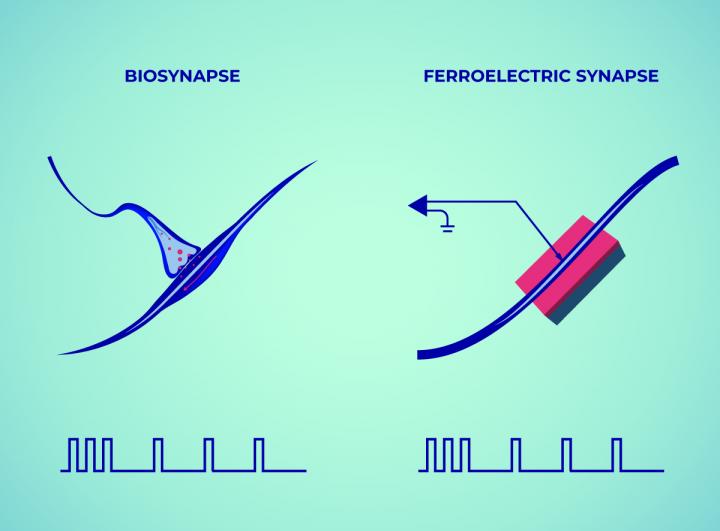

But tasks that can send digital machines into a computational tizzy are ones at which the brain excels. In fact, brains are not just quick at recognition and optimization problems, but they also consume far less energy than digital systems. Hence, by mimicking how the brain solves these types of tasks, Williams said brain-inspired or neuromorphic systems could potentially overcome some of the computational hurdles faced by current digital technologies.

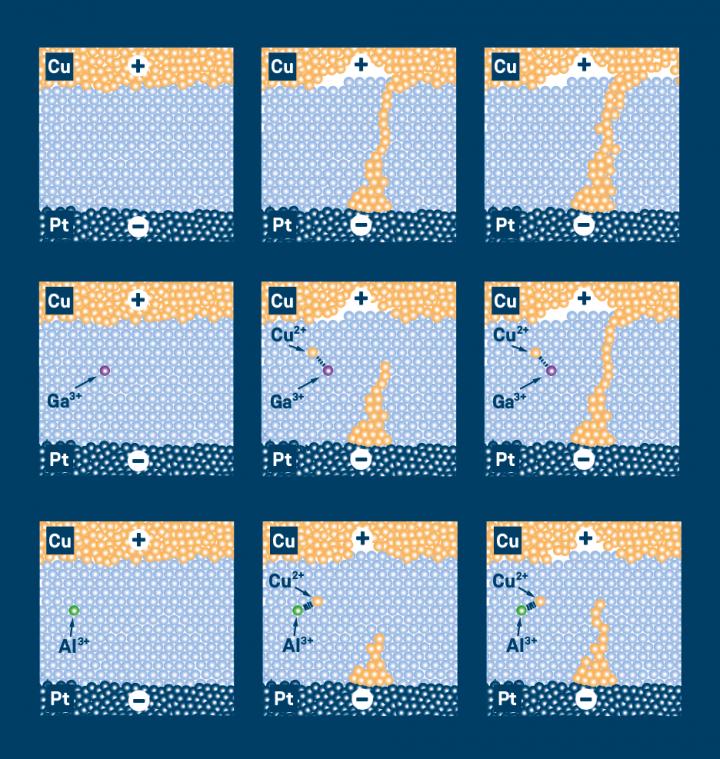

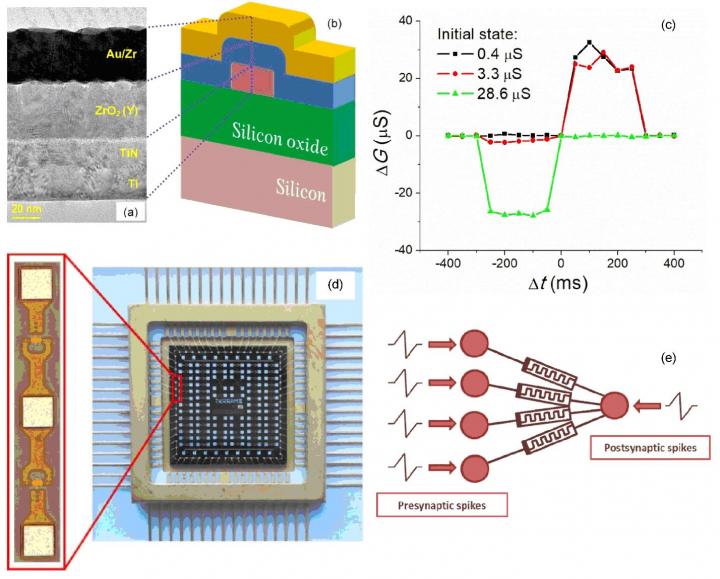

To build the fundamental building block of the brain or a neuron, the researchers assembled a synthetic nanoscale device consisting of layers of different inorganic materials, each with a unique function. However, they said the real magic happens in the thin layer made of the compound niobium dioxide.

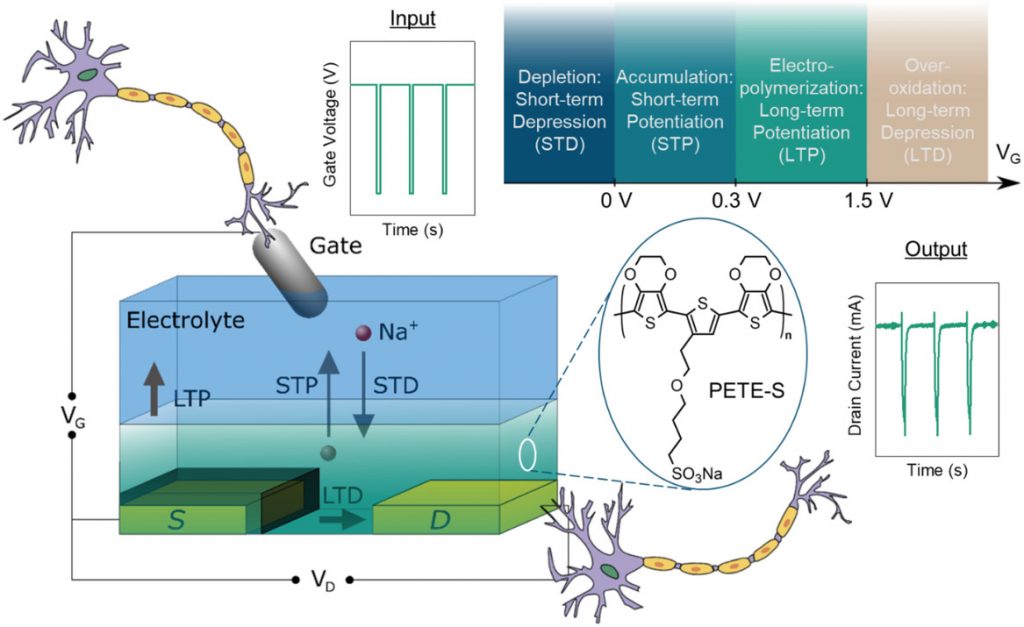

When a small voltage is applied to this region, its temperature begins to increase. But when the temperature reaches a critical value, niobium dioxide undergoes a quick change in personality, turning from an insulator to a conductor. But as it begins to conduct electric currents, its temperature drops and niobium dioxide switches back to being an insulator.
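The heat-up, switch, cool-down, switch-back cycle described above can be illustrated with a generic relaxation-oscillator toy model. This is a minimal sketch and not the model from the paper: every parameter value is dimensionless and hypothetical, the two switching temperatures (a small hysteresis window rather than a single critical value) are an assumption made to keep the simulation well behaved, and the function name is made up for this example.

```python
def simulate_toy_neuron(v_in=1.0, t_end=50.0, dt=0.001):
    """Toy thermally switched oscillator (all values hypothetical, dimensionless).

    A capacitor charges through a load resistor; a switching layer sits in
    parallel with the capacitor. The layer heats up through Joule heating,
    turns conducting above t_on, dumps the capacitor's charge as a current
    spike, cools, and turns insulating again below t_off.
    """
    r_load, r_ins, r_met = 1.0, 10.0, 0.01  # load, insulating, conducting resistance
    cap = 1.0                               # electrical capacitance
    r_th, c_th = 20.0, 0.05                 # thermal resistance and capacitance
    t_on, t_off = 1.0, 0.5                  # switching temperatures (assumed hysteresis)

    v, temp, metallic = 0.0, 0.0, False
    spike_times, peak_current = [], 0.0

    for step in range(int(t_end / dt)):
        r_dev = r_met if metallic else r_ins
        current = v / r_dev
        peak_current = max(peak_current, current)

        # Forward-Euler update of capacitor voltage and layer temperature.
        dv = ((v_in - v) / r_load - current) / cap
        dtemp = (v * current - temp / r_th) / c_th
        v += dt * dv
        temp += dt * dtemp

        if not metallic and temp >= t_on:
            metallic = True                 # insulator -> conductor: a spike begins
            spike_times.append(step * dt)
        elif metallic and temp <= t_off:
            metallic = False                # cooled down: back to insulating

    return spike_times, peak_current


if __name__ == "__main__":
    spikes, peak = simulate_toy_neuron()
    print(f"{len(spikes)} spikes, peak current {peak:.1f}")
```

In this toy, lowering the input (for example `simulate_toy_neuron(v_in=0.5)`) leaves the layer below its switching temperature, so no spikes are produced at all. The real device reportedly shows a much richer range of behaviors than this two-state sketch can capture.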

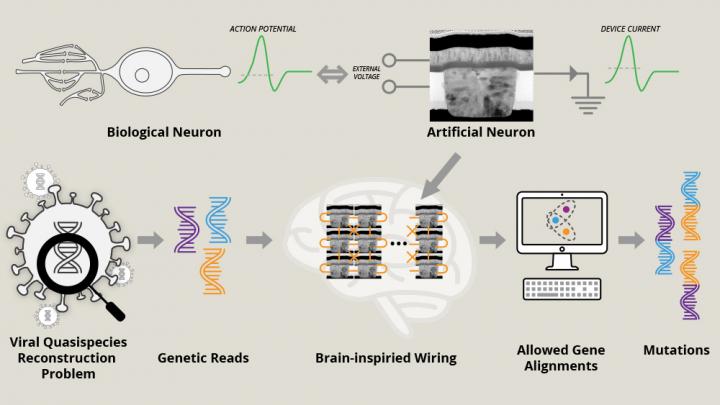

These back-and-forth transitions enable the synthetic devices to generate a pulse of electrical current that closely resembles the profile of electrical spikes, or action potentials, produced by biological neurons. Further, by changing the voltage across their synthetic neurons, the researchers reproduced a rich range of neuronal behaviors observed in the brain, such as sustained, burst and chaotic firing of electrical spikes.

“Capturing the dynamical behavior of neurons is a key goal for brain-inspired computers,” said Kumar. “Altogether, we were able to recreate around 15 types of neuronal firing profiles, all using a single electrical component and at much lower energies compared to transistor-based circuits.”

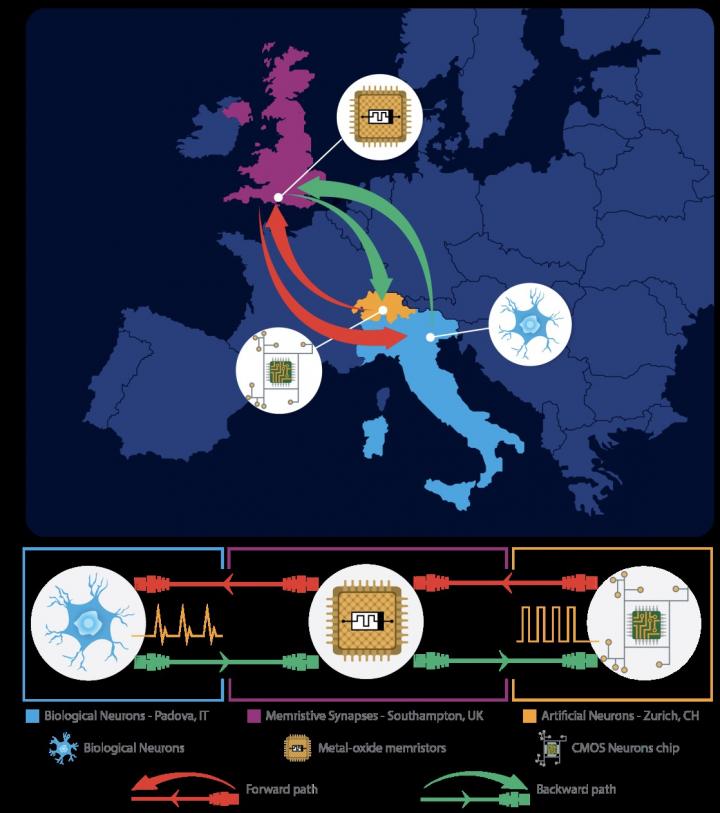

To evaluate if their synthetic neurons [neuristor?] can solve real-world problems, the researchers first wired 24 such nanoscale devices together in a network inspired by the connections between the brain’s cortex and thalamus, a well-known neural pathway involved in pattern recognition. Next, they used this system to solve a toy version of the viral quasispecies reconstruction problem, where mutant variations of a virus are identified without a reference genome.

By means of data inputs, the researchers introduced the network to short gene fragments. Then, by programming the strength of connections between the artificial neurons within the network, they established basic rules about joining these genetic fragments. The jigsaw puzzle-like task for the network was to list mutations in the virus’ genome based on these short genetic segments.

The researchers found that within a few microseconds, their network of artificial neurons settled down in a state that was indicative of the genome for a mutant strain.

Williams and Kumar noted this result is proof of principle that their neuromorphic systems can quickly perform tasks in an energy-efficient way.

The researchers said the next steps in their research will be to expand the repertoire of the problems that their brain-like networks can solve by incorporating other firing patterns and some hallmark properties of the human brain like learning and memory. They also plan to address hardware challenges for implementing their technology on a commercial scale.

“Calculating the national debt or solving some large-scale simulation is not the type of task the human brain is good at and that’s why we have digital computers. Alternatively, we can leverage our knowledge of neuronal connections for solving problems that the brain is exceptionally good at,” said Williams. “We have demonstrated that depending on the type of problem, there are different and more efficient ways of doing computations other than the conventional methods using digital computers with transistors.”

If you look at the news release on EurekAlert, you’ll see this informative image is titled: NeuristerSchematic [sic],

(On the university website, the image is credited to Rachel Barton.) You can see one of the first mentions of a ‘neuristor’ here in an August 24, 2017 posting.

Here’s a link to and a citation for the paper,

Third-order nanocircuit elements for neuromorphic engineering by Suhas Kumar, R. Stanley Williams & Ziwen Wang. Nature volume 585, pages 518–523 (2020) DOI: https://doi.org/10.1038/s41586-020-2735-5 Published: 23 September 2020 Issue Date: 24 September 2020

This paper is behind a paywall.