I started writing this in the aftermath of the 2021 Canadian federal budget, when most of the action (so far) occurred, but if you keep going to the end of this post you’ll find updates for Precision Nanosystems and AbCellera and a few extra bits. Also, you may want to check out my August 20, 2021 posting (Getting erased from the mRNA/COVID-19 story) about Ian MacLachlan and some of the ‘rough and tumble’ of the biotechnology scene in BC/Canada. Now, onto my analysis of the life sciences public relations campaign in British Columbia.

Gordon Hoekstra’s May 7, 2021 Vancouver Sun article (also in print on May 8, 2021) about the British Columbia (mostly Vancouver) biotechnology scene is the starting point for this story.

His entry (whether the reporter realizes it or not) into a communications (or public relations) campaign spanning federal, provincial, and municipal jurisdictions is well written and quite informative. While it’s tempting to attribute the whole thing to a single evil genius or mastermind in answer to the question posed in the head, the ‘campaign’ is likely a targeted effort by one or more groups and individuals enhanced with a little luck.

Federal and provincial money for life sciences and technology

The Business Council of British Columbia’s April 22, 2021 Federal & B.C. Budgets 2021 Analysis (PDF), notes this in its Highlights section,

…

•Another priority reflected in both budgets is boosting innovation and accelerating the growth of technology-producing companies. The federal budget [April 19, 2021] is spending billions more to support the life sciences and bio-manufacturing industry, clean technologies, the development of electric vehicles, the aerospace sector, quantum computing, AI, genomics, and digital technologies, among others.

•B.C.’s budget [April 20, 2021] also provides funding to spur innovation, support the technology sector and grow locally-based companies. In this area the main item is the new InBC Investment Corporation [emphasis mine], first announced last summer. Endowed with $500 million financed via an agency loan, the Corporation will establish a fund to invest in growing and “anchoring” high-growth [emphasis mine] B.C. businesses.

…

Their in-depth analysis does not provide more detail about the life sciences investments in the 2021 Canadian federal budget or the 2021 BC provincial budget.

My May 4, 2021 posting details many of the Canadian federal investments in life sciences and other technology areas of interest. The 2021 BC budget announcement is so vague, it didn’t merit much more than this mention until now.

InBC Investment Corporation (BC’s contribution)

InBC Investment Corporation was set up on or about April 27, 2021 as three news ‘references’ (brief summaries with a link) suggest: InBC Investment Corp. Act, InBC Announcement, $500-million investment fund paves way for StrongerBC.

While the corporation does not have a specific mandate to fund the biotechnology sector, given the current enthusiasm, it’s easy to believe they might be more inclined to fund them than not, regardless of any expertise they may or may not have specifically in that field.

Of most interest to me was InBC’s Board of Directors, which I tracked down to a BC Ministry of Jobs, Economic Recovery and Innovation May 6, 2021 news release,

InBC Investment Corp. now has a full board of directors with backgrounds in finance, economics, impact investing and business to provide strategic guidance and accountability for the new Crown corporation.

InBC will support startups [emphasis mine], help promising companies scale up and work with a “triple bottom line” mandate that considers people, the planet and profits, to position British Columbia as a front-runner in the post-pandemic economy.

…

Christine Bergeron, president and chief executive officer of Vancity, will serve as the new board chair of InBC Investment Corp. The nine-member board of directors is made up of both public and private sector members who are responsible for oversight of the corporation, including its mission, policies and goals.

…

The InBC board members were selected through a comprehensive process, guided by the principles of the Crown Agencies and Board Resourcing Office. Candidates with a variety of relevant backgrounds were considered to form a strong board consisting of seven women and two men. The members appointed represent diversity as well as appropriate areas of expertise.

The following people were selected as members on the board of directors:

- Christine Bergeron, president and CEO, Vancity

- Kevin Campbell, managing director of investment banking, board of directors, Haywood Securities

- Ingrid Leong, VP finance for JH Investments and chief investment officer, Houssian Foundation

- Glen Lougheed, serial tech entrepreneur and angel investor

- Suzanne Trottier, vice-president of Indigenous trust services, First Nations Bank Trust

- Carole James, former minister of finance and deputy premier, Government of British Columbia

- Iglika Ivanova, senior economist, public interest researcher, BC Office of the Canadian Centre for Policy Alternatives

- Bobbi Plecas, deputy minister, B.C.’s Ministry of Jobs, Economic Recovery and Innovation

- Heather Wood, deputy minister, B.C.’s Ministry of Finance

Legislation to provide the governance framework for InBC was introduced by the legislative assembly on April 27, 2021.

…

Board experience at growing a startup?

This group of people doesn’t seem to have a shred of experience with startups. Glen Lougheed’s “serial tech entrepreneur and angel investor” description means nothing to me and the description he provides in his LinkedIn profile doesn’t clear up matters,

I am a product and business development professional with an entrepreneurial attitude and strong technical skills. I have been building companies both mine and others since I was a teenager.

Having looked up the two companies for which he is currently acting as Chief Executive Officer, Lougheed’s interest appears to be focused on the use of ‘big data’ in marketing and communications campaigns.

Perhaps startup experience isn’t necessary since the board has been appointed to do this (from the BC Ministry of Jobs, Economic Recovery and Innovation May 6, 2021 news release; click on the Backgrounder),

Responsibilities of the InBC Investment Corp. board of directors

The board of directors will be responsible for oversight of the management of the affairs of the corporation. This includes:

- selecting and approving the chief executive officer and chief innovation officer and monitoring performance and accountabilities;

- reviewing and approving annual corporate financial statements;

- oversight of policies that relate to InBC’s mandate and holding the executive to account for its accountabilities with respect to InBC’s mandate;

- oversight of InBC’s operations; and

- selection and appointment of InBC’s auditor.

Relationships

So, we have two government civil servants, Wood (Deputy Minister of B.C.’s Ministry of Finance) and Plecas (Deputy Minister of B.C.’s Ministry of Jobs, Economic Recovery and Innovation), and James, a former BC Minister of Finance who left the job several months ago. Then we have Lougheed, who recently resigned (May 2021) as special advisor on innovation and technology to the BC Minister of Jobs, Economic Recovery and Innovation.

It would seem almost half of this new board is or has been affiliated with the government and, likely, its members know each other.

I expect there are more relationships to be found but my interest is in the overall picture as it pertains to the biotechnology scene. This board (except possibly for Lougheed) does not seem to have any experience in the biotechnology sector or growing any sort of startup business in any technology field.

Presumably, the new chief executive officer (CEO) and new chief innovation officer (CIO) will have some of the necessary experience. Still, biotechnology isn’t the same as digital technology, an area where the BC technology community is quite strong. (The Canadian federal government’s Digital Technology Supercluster is headquartered in BC.)

I imagine the politics around who gets hired as CEO and as CIO will be quite interesting.

See the ‘Updates and extras’ at the end of this posting for more mention of this ‘secretive’ government corporation.

The BC biotech gorillas

AbCellera was BC’s biggest biotech story in 2020/21 (see my Avo Media, Science Telephone, and a Canadian COVID-19 billionaire scientist post from December 30, 2020 for more. Do check out the subsection titled “Avo Media …” for a look at an unexpectedly interlaced relationship). Note: The AbCellera COVID-19 treatment is not a vaccine or a vaccine delivery system.

It was a bit surprising that Acuitas Therapeutics didn’t get more attention although Hoekstra seems to have addressed that shortcoming in his May 7, 2021 article by using Thomas Madden and Acuitas as the hook for the story,

By early 2020, concern was mounting about a new, deadly coronavirus first detected in Wuhan, China.

The World Health Organization had declared the coronavirus outbreak a global health emergency just days before. There had been more than 400 deaths and more than 20,000 cases, most of those in China.

But the virus was spreading around the world. Deaths had occurred in Hong Kong and the Philippines, and the virus had been detected in the U.S. and Canada.

By early January of 2020, scientists in China had already sequenced the virus’s genome and made it public, allowing scientists to begin the research for a vaccine.

Scientists expected that could take years.

But, as a second case was confirmed in B.C. in early February, Thomas Madden, a world-renowned expert in nanotechnology who heads Vancouver-based biotech company Acuitas Therapeutics, flew to Germany. [emphases mine]

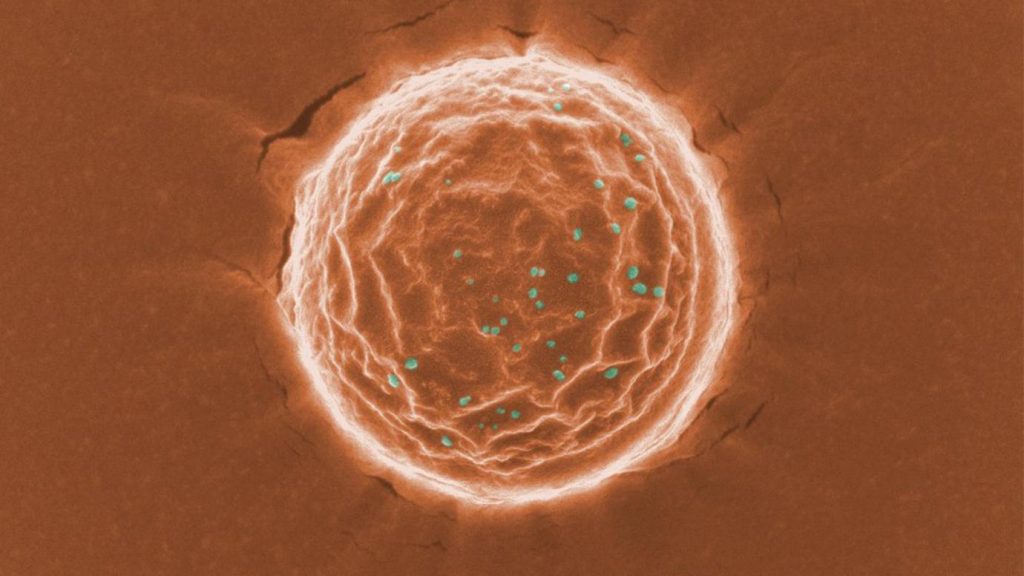

Acuitas was in the business of creating lipid nanoparticles, microscopic biological vehicles that could deliver drugs [emphasis mine] — for example, to specifically target cancers in the body.

…

Scientists are already beginning to say it’s likely that a booster vaccine will be needed [emphasis mine] next year to deal with the virus variants.

Madden, the head of Acuitas, says it makes absolute sense to use the new biotechnology, for example, the use of messenger RNA vaccines, to prepare and fight future pandemics.

Says Madden [emphasis mine]: “The technology in terms of what it’s able to do is absolutely phenomenal. It’s just taken us 40 years to get here.”

So, Hoekstra reminds us of the international nature and urgency of the crisis, then introduces Acuitas as a vital and local player in solutions deployed internationally, and, finally, brings us back to Acuitas after providing an overview of the BC biotech scene and the federal and provincial governments’ latest moves.

AbCellera Biologics is more of a supporting player, along with a number of other companies, in Hoekstra’s story.

Sandwiched in the middle, you’ll find what I think is the point of the story,

LifeSciences BC and the provincial government’s commitments

From Hoekstra’s May 7, 2021 article,

The importance of the biotech sector in providing protection against pandemics has caught the attention of the federal and B.C. governments. It has also been noticed by the private markets.

In its budget [April 19, 2021] earlier this month [sic], the federal government promised more than $2 billion in the next seven years to support “promising” life sciences and bio-manufacturing firms, research, training, education and vaccine candidates.

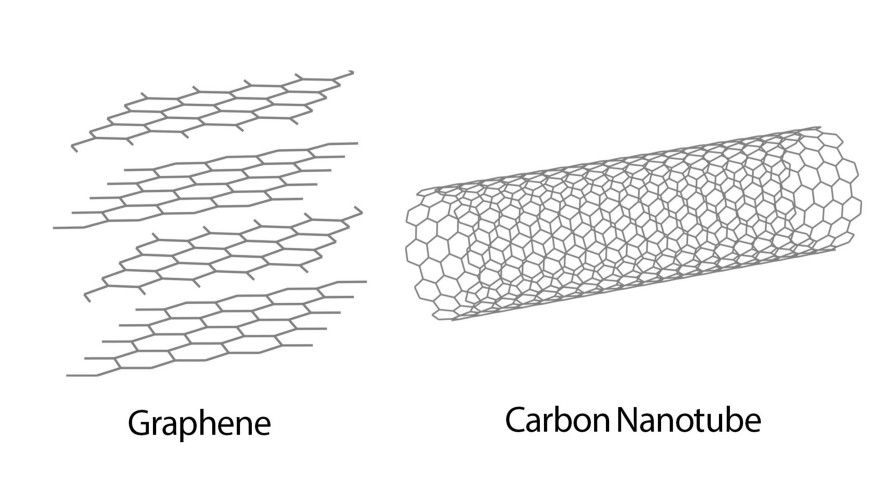

Some companies, including Precision NanoSystems, have already got federal funding. The Vancouver company received $18.2 million last year to help develop its self-replicating mRNA vaccine and another $25 million in early 2021 to assist building a $50-million facility to produce the vaccine.

Last fall, Symvivo received $2.8 million from the National Research Council to help develop its oral COVID-19 vaccine.

AbCellera has also received a pledge of $175.6 million to help build an accredited manufacturing facility in Vancouver [emphasis mine] to produce antibody treatments.

AbCellera expects to double its 230-person workforce over the next two years as it expands its Vancouver campus.

When AbCellera became a publicly traded company late last year, it raised more than $500 million and had a recent market capitalization, the value of its stock, of about $8.5 billion.

When the B.C. government delivered its throne speech recently, the contribution of the province’s life sciences sector in the fight against the COVID-19 pandemic was highlighted, with Precision NanoSystems, AbCellera and StarFish Medical getting mentions. “Their work will not only help bring us out of the pandemic, it will position our province for success in the years ahead,” said B.C.’s Lt. Gov. Janet Austin in delivering the throne speech.

When the budget was released the following week [April 20, 2021], B.C. Finance Minister Selina Robinson said a new three-year, $500-million strategic investment fund would help support and scale up tech firms.

Despite their successes, B.C. biotech firms have faced challenges.

SaNOtize had to go to the U.K. to get support for clinical trials and AbCellera has been disappointed that despite Health Canada emergency approval of its COVID-19 treatment, provinces have been reluctant to use Bamlanivimab.

Hansen, AbCellera’s CEO and a former University of B.C. professor with a PhD in applied physics and biotechnology, said he believes that biotech is the most important frontier of technology.

In the past, while great science was launched from B.C.’s universities, not as great a job was done on turning that science into innovation, jobs [emphasis mine] and the capacity to bring new products to market, possibly because of a lack of entrepreneurship and policies to make it more attractive to companies to grow and thrive here and move here, notes Hansen.

Hurlburt [Wendy Hurlburt], the LifeSciences B.C. CEO, says that policies, including tax structure and patenting [emphasis mine], that encourages innovation companies are needed to support the biotech sector.

But, adds Hansen: “Here in Vancouver, I feel like we’re turning the corner. There’s probably never been a time when Vancouver’s biotech sector [emphasis mine] was stronger. And the future looks very good.”

…

Not only is the province involved but so is the City of Vancouver (more about that in a bit).

It’s not all about the cash

Hoekstra’s May 7, 2021 article helped answer a question I had in the title of another posting, January 22, 2021: Why is Precision Nanosystems Inc. in the local (Vancouver, Canada) newspaper? (See the ‘Updates and extras’ at the end of this posting for more to the answer.)

This campaign has been building for a while. In the “Is it magic or how does the federal budget get developed?” subsection of my May 4, 2021 posting on the 2021 Canadian federal budget, I speculated a little bit,

I believe most of the priorities are set by power players behind the scenes. We glimpsed some of the dynamics courtesy of the WE Charity scandal 2020/21 and the SNC-Lavalin scandal in 2019.

Access to special meetings and encounters is not likely to be given to any member of the ‘great unwashed’ but we do get to see the briefs that are submitted in anticipation of a new budget. These briefs and meetings with witnesses are available on the Parliament of Canada website (Standing Committee on Finance (FINA) webpage for pre-budget consultations).

…

AbCellera submitted a brief dated August 7, 2020 (PDF) detailing how they would like to see the Income Tax Act amended. It’s not always about getting cash, although that’s very important. In this brief, the company wants “… improved access to the enhanced Scientific Research & Experimental Development tax credit.”

There are many aspects to these campaigns including the federal Income Tax Act and, in this case, municipal involvement.

Vancouver (city government) and the biotech sector

About five weeks prior to the 2021 Canadian federal budget and BC provincial budget announcements, there was some news from the City of Vancouver (from a March 10, 2021 article by Kenneth Chan for dailyhive.com), Note: Links have been removed,

Major expansion plans are abound for AbCellera over the next few years to the extent that the Vancouver-based biotechnology company is now looking to build a massive purpose-built office and medical laboratory campus in Mount Pleasant (Vancouver neighbourhood).

It would be a redevelopment of the entire city block …

… earlier today, Vancouver City Council unanimously approved a rezoning enquiry allowing city staff to work with the proponent and accept a formal application for review.

This special additional pre-application step is required due to the temporary ban [emphasis mine] on most types of rezonings within the Broadway Plan’s planning area, until the plan is finalized at the end of 2021.

But city staff are willing to make this a rare exception due to the economic opportunity [emphasis mine] presented by the proposal and the healthcare-related aspects.

“The reasons for advancing this quickly are they are rapidly growing and would like to stay in Vancouver, and we would like them to… We’re very glad to have this company in Vancouver and want to provide them with a permanent home, but in order to scale up, the timeframe to produce their therapy [for viruses] is really time sensitive,” Gil Kelley, the chief urban planner of the City of Vancouver, told city council during today’s [March 10, 2021] meeting.

…

Roughly 10 days after the 2021 budgets were announced, there’s this from Kenneth Chan’s April 29, 2021 article on dailyhive.com,

Plans for AbCellera Biologics’ major footprint expansion in Vancouver’s Mount Pleasant Industrial Area are moving forward quickly.

Based on the application submitted this week, the Vancouver-based biotechnology company is proposing to redevelop 110 West 4th Avenue …

It will be designated as the rapidly growing company’s global headquarters.

… city staff are providing AbCellera with the highly rare, expedited stream of combining the rezoning and development application processes into one.

…

By the middle of this decade, AbCellera will have four locations in the area, including its current 21,000 sq ft office at 2215 Yukon Street and a new 44,000 sq ft office nearing completion at 2131 Manitoba Street, just south of its future main hub.

“We’re building state-of-the-art facilities in Vancouver to accelerate the development of new antibody therapies with biotech and pharma partners from around the world,” said Carl Hansen, CEO and president of AbCellera, in a statement.

…

AbCellera has gained significant international attention over the past year after it co-developed the first authorized COVID-19 antibody therapy for emergency use in high-risk patients in Canada and the United States.

In late 2020, the company closed a successful initial public offering, bringing in $556 million after selling nearly 28 million shares, far exceeding its original goal of raising $250 million. It was the largest-ever IPO [initial public offering] by a Canadian biotech company.

…

“We see this new site as a creative hub for engineers, software developers, data scientists, biologists and bioinformaticians to collaborate, innovate, and push the frontiers of technology.” [said Veronique Lecault, the COO of AbCellera]

Additionally, AbCellera is also planning to build a clinical-grade, antibody manufacturing facility in Metro Vancouver, funded in part by the $176-million investment it received from the federal government in Spring 2020 [see May 3, 2020 AbCellera news release].

Not cash but AbCellera did get an expedited process for rezoning and I imagine there will be more special treatment as this progresses. (See the ‘Updates and extras’ at the end of this posting for news about the expedited process.)

It’s likely there are other companies in BC’s life sciences sector that are eyeing this development with great interest and high hopes for themselves.

What it takes

COVID-19 seems to have galvanized interest and support almost everywhere in the world for life sciences.

I don’t believe that anyone in the life sciences planned for or rejoiced at news of this pandemic. However, the Canadian biotech sector has been working for decades to establish itself as an important economic resource and, sadly, COVID-19 has been a timely development.

All those years of lobbying (also known as government relations), marketing, investor relations, public relations, and more served as preparation for what looks like a concerted effort, and it has paid off in BC at the federal level, provincial level, and municipal level (at least one).

The campaigns continue. Here’s Wendy Hurlburt, president and CEO of LifeSciences BC in a May 14, 2021 Conversations That Matter Vancouver Sun podcast with Stuart McNish. Note: Hurlburt makes an odd comment at about the 7 min. 30 secs. mark regarding insulin and patents.

Her dismay over lost opportunities regarding the insulin patent is right in line with Canada’s current patent mania. See my May 13, 2021 posting, Not a pretty picture: Canada and a patent rights waiver for COVID-19 vaccines. As far as I’m aware, Canada’s stance has not changed. Interestingly, Hoekstra’s article doesn’t mention COVID-19 patent waivers.

By contrast, here’s what Frederick Banting (one of the discoverers) had to say about his patent, (from the Banting House Insulin Patents webpage),

About the sale of the patent of insulin for $1 Banting reportedly said, “Insulin belongs to the world, not to me.”

… On January 23rd, 1923 Banting, [Charles] Best, and [James] Collip were awarded the American patents for insulin which they sold to the University of Toronto for $1.00 each.

…

Hurlburt goes on to express dismay over taxes and notes that some companies may leave for other jurisdictions, which means we will lose ‘innovation’. This is a very common ploy coming from any of the technology sectors and can be dated back at least 30 years.

Unmentioned is the dream/business model that so many Canadian tech entrepreneurs have: grow the company, sell it for a lot of money, and retire, preferably before the age of 40.

Getting back to my point, the current situation is not attributable to one individual or to one company’s efforts or to one life science nonprofit or to one federal Network of Centres of Excellence (NanoMedicines Innovation Network [NMIN] located at the University of British Columbia).

Note: I have more about the NMIN and Acuitas Therapeutics in a November 12, 2021 posting and there’s more about NMIN’s 7th annual conference and a very high profile guest in a September 11, 2020 posting.

Strategy at the federal, provincial, and local levels of government, with an eye to the international scene, has been augmented by luck and opportunism.

Updates and extras

Where updates are concerned, I have one for Precision Nanosystems and one for AbCellera. I have extras with regard to Moderna and Canada and BC’s special fund, inBC Investment Corporation. For anyone who’s curious about Banting and the high cost of insulin, I have a couple of links to further reading.

Precision Nanosystems

From an August 11, 2021 article by Kenneth Chan (Note: Links have been removed),

A homegrown pharmaceutical company has announced plans to significantly scale its operations with the opening of a new production facility in Vancouver’s False Creek Flats.

…

The new Evolution Block building will contain PNI’s new global headquarters and a new genetic medicine Good Manufacturing Practice (GMP) biomanufacturing centre, which would allow the company to expand its capabilities to include the clinical manufacturing of RNA vaccines and therapeutics.

Federal funding totalling $25.1 million for PNI was first announced in February 2021 towards covering part of the development costs of such a facility, as part of the federal government’s new strategy to better ensure Canada has the domestic capacity to secure its own COVID-19 vaccines and prepare the country for future pandemics. It is estimated the vaccine production capacity of the new facility will be 240 million doses annually.

…

PNI’s location in the False Creek Flats is strategic, given the close proximity to the new St. Paul’s Hospital campus and the growing concentration of tech and healthcare-based industrial businesses.

…

AbCellera

From a June 22, 2021 article by Kenneth Chan (Note: Links have been removed),

…

The rapidly growing Vancouver-based biotechnology company announced this morning their 130,000 sq ft Good Manufacturing Practices (GMP) facility will be located on a two-acre site at the 900 block of Evans Avenue, replacing the Urban Beach volleyball courts just next to the City of Vancouver’s Evans maintenance centre and the Regional Recycling Vancouver Bottle Depot.

…

GMP is partially funded by the $175 million in federal funding received by the company last year to support research into coronavirus treatment.

…

GMP adds to AbCellera’s major plans to build a new headquarters in close proximity at 110-150 West 4th Avenue in the Mount Pleasant Industrial Area — a city block-sized campus with a total of 380,000 sq ft of laboratory and office space for research and corporate uses.

…

Both campus buildings are being reviewed under the City of Vancouver’s rare streamlined, expedited process [emphasis mine] of combining the rezoning and development permit applications. AbCellera formally announced its campus plans in April 2021.

…

AbCellera gained significant international attention last year when it developed the world’s first monoclonal antibody therapy for COVID-19 to be authorized for emergency use in high-risk patients in Canada and the United States. According to the company, over 400,000 doses of its bamlanivimab drug have been administered around the world, and it is estimated to have kept more than 22,000 people out of hospital — saving at least 11,000 lives.

In late 2020, the company closed a successful initial public offering, bringing in $556 million after selling nearly 28 million shares, far exceeding its original goal of raising $250 million. It was the largest-ever IPO by a Canadian biotech company.

…

Moderna and Canada

It seems like yesterday that Derrick Rossi (co-founder of Moderna) was talking about Canada’s need for a biotechnology hub (see this June 17, 2021 article by Barbara Shecter for the Financial Post). Interestingly, there’s been an announcement of a memorandum of understanding (these things are announced all the time and don’t necessarily result in anything) between Moderna and the government of Canada according to an August 10, 2021 item on the Canadian Broadcasting Corporation (CBC) news website,

Massachusetts-based drug maker Moderna will build an mRNA vaccine manufacturing plant in Canada within the next two years, CEO Stephane Bancel said Tuesday [August 10, 2021; Note the timing, the writ for the next federal election was dropped on August 15, 2021].

The company has signed a memorandum of understanding with the federal government that will result in Canada becoming the home of Moderna’s first foreign operation. It’s not clear yet how much money Canada has offered to Moderna [emphasis mine] for the project.

…

Canada, whose life sciences industry has been decimated over the last three decades, wants in on the action. Prime Minister Justin Trudeau has promised to rebuild the industry, and the recent budget included a $2.2 billion, seven-year investment to grow the life science and biotech sectors.

Almost half of that targets companies that want to expand or set up vaccine and drug production in Canada. None of the COVID-19 vaccines to date have been made in Canada, leaving the country entirely reliant on imports to fill vaccine orders. As a result, Canada was slower out of the gate on immunizations than some of its counterparts with domestic production, and likely had to pay more per dose for some vaccines as well.

…

The location of the new facility hasn’t been finalized, but Bancel said the availability of an educated workforce will be the main deciding factor. He said the design is done and they’ll need to start hiring very soon so training can begin.

It’s not exactly a hub but who knows what the future will bring? I imagine there’s going to be some serious wrangling behind the scenes as the provinces battle to be the location for the facility. Note that Innovation Minister François-Philippe Champagne, who made the announcement with Bancel in Montréal, represents a federal riding in Québec. (BTW, Bancel is from France and seems to have spent much of his adult life in the US.) Of course, anything can happen and I’m sure the BC contingent will make themselves felt but it would seem that Québec is the front runner for now, assuming this memorandum of understanding leads to a facility. Given that we are in the midst of a federal election, it seems more probable than it might otherwise.

inBC Investment Corporation

Bob Mackin’s August 13, 2021 article for theBreaker.news sheds some light on how that corporation was formed so very quickly and more,

The B.C. NDP government rejigged the B.C. Immigrant Investor Fund last year, but refused to release the business case when it was rebranded as inBC Investment Corp. in late April [2021].

theBreaker.news requested the business case for the $500 million fund, which is overseen by a board of NDP patronage appointees, on May 6 [2021].

…

The 123-page document below is heavily censored — meaning the NDP cabinet is refusing to tell British Columbians the projected operating costs (including board expenses, salary and benefits, office space, operating and administration), full-time equivalents, and cash flows for the newest Crown corporation. inBC bills itself as a triple-bottom line organization, meaning it intends to invest on the basis of social, environmental and economic values.

When its enabling legislation was tabled, the NDP took steps to exempt inBC from the freedom of information law.

Thank you, Mr. Mackin.

More on Banting, insulin and patents

Caitlyn McClure’s 2016 article (Insulin’s Inventor Sold the Patent for $1. Then Drug Companies Got Hold of It.) for other98.com is a brief and pithy explanation for why insulin costs so much. Alanna Mitchell’s August 13, 2019 article for Maclean’s magazine investigates ‘insulin tourism’ and offers more detail as to how this situation has come about.

One last reminder: my August 20, 2021 posting (Getting erased from the mRNA/COVID-19 story) about Ian MacLachlan provides insight into how competitive and rough the biotechnology scene can be here in BC/Canada.