This post is a roundup of sorts on human genetic engineering.

First, the field of genetic engineering encompasses more than the human genome, as this paper (open access until June 5, 2015) notes in its discussion of a specific CRISPR gene editing tool,

CRISPR-Cas9 Based Genome Engineering: Opportunities in Agri-Food-Nutrition and Healthcare by Rajendran Subin Raj Cheri Kunnumal, Yau Yuan-Yeu, Pandey Dinesh, and Kumar Anil. OMICS: A Journal of Integrative Biology. May 2015, 19(5): 261-275. doi:10.1089/omi.2015.0023 Published Online Ahead of Print: April 14, 2015

Here’s more about the paper from a May 7, 2015 Mary Ann Liebert publisher news release on EurekAlert,

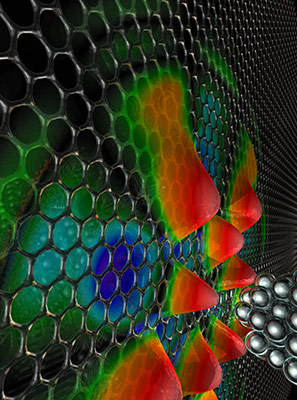

Researchers have customized and refined a technique derived from the immune system of bacteria to develop the CRISPR-Cas9 genome engineering system, which enables targeted modifications to the genes of virtually any organism. The discovery and development of CRISPR-Cas9 technology, its wide range of potential applications in the agriculture/food industry and in modern medicine, and emerging regulatory issues are explored in a Review article published in OMICS: A Journal of Integrative Biology, …

“CRISPR-Cas9 Based Genome Engineering: Opportunities in Agri-Food-Nutrition and Healthcare” provides a detailed description of the CRISPR system and its applications in post-genomics biology. Subin Raj Cheri Kunnumal Rajendran, Dinesh Pandey, and Anil Kumar, G.B. Pant University of Agriculture and Technology (Uttarakhand, India) and Yuan-Yeu Yau, Northeastern State University (Broken Arrow, OK) describe the advantages of the RNA-guided Cas9 endonuclease-based technology, including the activity, specificity, and target range of the enzyme. The authors discuss the rapidly expanding uses of the CRISPR system in both basic biological research and product development, such as for crop improvement and the discovery of novel therapeutic agents. The regulatory implications of applying CRISPR-based genome editing to agricultural products is an evolving issue awaiting guidance by international regulatory agencies.

“CRISPR-Cas9 technology has triggered a revolution in genome engineering within living systems,” says OMICS Editor-in-Chief Vural Özdemir, MD, PhD, DABCP. “This article explains the varied applications and potentials of this technology from agriculture to nutrition to medicine.”

Intellectual property (patents)

The CRISPR technology has spawned a number of intellectual property (patent) issues, as a Dec. 21, 2014 post by Glyn Moody on Techdirt stated,

Although not many outside the world of the biological sciences have heard of it yet, the CRISPR gene editing technique may turn out to be one of the most important discoveries of recent years — if patent battles don’t ruin it. Technology Review describes it as:

… an invention that may be the most important new genetic engineering technique since the beginning of the biotechnology age in the 1970s. The CRISPR system, dubbed a “search and replace function” for DNA, lets scientists easily disable genes or change their function by replacing DNA letters. During the last few months, scientists have shown that it’s possible to use CRISPR to rid mice of muscular dystrophy, cure them of a rare liver disease, make human cells immune to HIV, and genetically modify monkeys.

Unfortunately, rivalry between scientists claiming the credit for key parts of CRISPR threatens to spill over into patent litigation:

[A researcher at the MIT-Harvard Broad Institute, Feng] Zhang cofounded Editas Medicine, and this week the startup announced that it had licensed his patent from the Broad Institute. But Editas doesn’t have CRISPR sewn up. That’s because [Jennifer] Doudna, a structural biologist at the University of California, Berkeley, was a cofounder of Editas, too. And since Zhang’s patent came out, she’s broken off with the company, and her intellectual property — in the form of her own pending patent — has been licensed to Intellia, a competing startup unveiled only last month. Making matters still more complicated, [another CRISPR researcher, Emmanuelle] Charpentier sold her own rights in the same patent application to CRISPR Therapeutics.

Things are moving quickly on the patent front, not least because the Broad Institute paid extra to speed up its application, conscious of the high stakes at play here:

Along with the patent came more than 1,000 pages of documents. According to Zhang, Doudna’s predictions in her own earlier patent application that her discovery would work in humans was “mere conjecture” and that, instead, he was the first to show it, in a separate and “surprising” act of invention.

The patent documents have caused consternation. The scientific literature shows that several scientists managed to get CRISPR to work in human cells. In fact, its easy reproducibility in different organisms is the technology’s most exciting hallmark. That would suggest that, in patent terms, it was “obvious” that CRISPR would work in human cells, and that Zhang’s invention might not be worthy of its own patent.

….

Ethical and moral issues

The CRISPR technology has reignited a discussion about the ethical and moral issues of human genetic engineering, some of which is reviewed in an April 7, 2015 posting about a moratorium by Sheila Jasanoff, J. Benjamin Hurlbut and Krishanu Saha for the Guardian science blogs (Note: A link has been removed),

On April 3, 2015, a group of prominent biologists and ethicists writing in Science called for a moratorium on germline gene engineering; modifications to the human genome that will be passed on to future generations. The moratorium would apply to a technology called CRISPR/Cas9, which enables the removal of undesirable genes, insertion of desirable ones, and the broad recoding of nearly any DNA sequence.

Such modifications could affect every cell in an adult human being, including germ cells, and therefore be passed down through the generations. Many organisms across the range of biological complexity have already been edited in this way to generate designer bacteria, plants and primates. There is little reason to believe the same could not be done with human eggs, sperm and embryos. Now that the technology to engineer human germlines is here, the advocates for a moratorium declared, it is time to chart a prudent path forward. They recommend four actions: a hold on clinical applications; creation of expert forums; transparent research; and a globally representative group to recommend policy approaches.

…

The authors go on to review precedents and reasons for the moratorium while suggesting we need better ways for citizens to engage with and debate these issues,

An effective moratorium must be grounded in the principle that the power to modify the human genome demands serious engagement not only from scientists and ethicists but from all citizens. We need a more complex architecture for public deliberation, built on the recognition that we, as citizens, have a duty to participate in shaping our biotechnological futures, just as governments have a duty to empower us to participate in that process. Decisions such as whether or not to edit human genes should not be left to elite and invisible experts, whether in universities, ad hoc commissions, or parliamentary advisory committees. Nor should public deliberation be temporally limited by the span of a moratorium or narrowed to topics that experts deem reasonable to debate.

I recommend reading the post in its entirety, as its nuances are best appreciated in full.

Shortly after this essay was published, Chinese scientists announced they had genetically modified (nonviable) human embryos. From an April 22, 2015 article by David Cyranoski and Sara Reardon in Nature, where the research and some of the ethical issues are discussed,

In a world first, Chinese scientists have reported editing the genomes of human embryos. The results are published in the online journal Protein & Cell and confirm widespread rumours that such experiments had been conducted — rumours that sparked a high-profile debate last month about the ethical implications of such work.

In the paper, researchers led by Junjiu Huang, a gene-function researcher at Sun Yat-sen University in Guangzhou, tried to head off such concerns by using ‘non-viable’ embryos, which cannot result in a live birth, that were obtained from local fertility clinics. The team attempted to modify the gene responsible for β-thalassaemia, a potentially fatal blood disorder, using a gene-editing technique known as CRISPR/Cas9. The researchers say that their results reveal serious obstacles to using the method in medical applications.

“I believe this is the first report of CRISPR/Cas9 applied to human pre-implantation embryos and as such the study is a landmark, as well as a cautionary tale,” says George Daley, a stem-cell biologist at Harvard Medical School in Boston, Massachusetts. “Their study should be a stern warning to any practitioner who thinks the technology is ready for testing to eradicate disease genes.”

….

Huang says that the paper was rejected by Nature and Science, in part because of ethical objections; both journals declined to comment on the claim. (Nature’s news team is editorially independent of its research editorial team.)

He adds that critics of the paper have noted that the low efficiencies and high number of off-target mutations could be specific to the abnormal embryos used in the study. Huang acknowledges the critique, but because there are no examples of gene editing in normal embryos he says that there is no way to know if the technique operates differently in them.

Still, he maintains that the embryos allow for a more meaningful model — and one closer to a normal human embryo — than an animal model or one using adult human cells. “We wanted to show our data to the world so people know what really happened with this model, rather than just talking about what would happen without data,” he says.

This, too, is a good and thoughtful read.

There was an official response in the US to the publication of this research, from an April 29, 2015 post by David Bruggeman on his Pasco Phronesis blog (Note: Links have been removed),

In light of Chinese researchers reporting their efforts to edit the genes of ‘non-viable’ human embryos, the National Institutes of Health (NIH) Director Francis Collins issued a statement (H/T Carl Zimmer).

…

“NIH will not fund any use of gene-editing technologies in human embryos. The concept of altering the human germline in embryos for clinical purposes has been debated over many years from many different perspectives, and has been viewed almost universally as a line that should not be crossed. Advances in technology have given us an elegant new way of carrying out genome editing, but the strong arguments against engaging in this activity remain. These include the serious and unquantifiable safety issues, ethical issues presented by altering the germline in a way that affects the next generation without their consent, and a current lack of compelling medical applications justifying the use of CRISPR/Cas9 in embryos.” …

More than CRISPR

As well, following on the April 22, 2015 Nature article about the controversial research, the Guardian published an April 26, 2015 post by Filippa Lentzos, Koos van der Bruggen and Kathryn Nixdorff which makes the case that CRISPR techniques are not the only worrisome genetic engineering technology,

The genome-editing technique CRISPR-Cas9 is the latest in a series of technologies to hit the headlines. This week Chinese scientists used the technology to genetically modify human embryos – the news coming less than a month after a prominent group of scientists had called for a moratorium on the technology. The use of ‘gene drives’ to alter the genetic composition of whole populations of insects and other life forms has also raised significant concern.

But the technology posing the greatest, most immediate threat to humanity comes from ‘gain-of-function’ (GOF) experiments. This technology adds new properties to biological agents such as viruses, allowing them to jump to new species or making them more transmissible. While these are not new concepts, there is grave concern about a subset of experiments on influenza and SARS viruses which could metamorphose them into pandemic pathogens with catastrophic potential.

In October 2014 the US government stepped in, imposing a federal funding pause on the most dangerous GOF experiments and announcing a year-long deliberative process. Yet, this process has not been without its teething-problems. Foremost is the de facto lack of transparency and open discussion. Genuine engagement is essential in the GOF debate where the stakes for public health and safety are unusually high, and the benefits seem marginal at best, or non-existent at worst. …

Particularly worrisome about the GOF process is that it is exceedingly US-centric and lacks engagement with the international community. Microbes know no borders. The rest of the world has a huge stake in the regulation and oversight of GOF experiments.

Canadian perspective?

I became somewhat curious about the Canadian perspective on all this genome engineering discussion and found a focus on agricultural issues in the single Canadian blog piece I could locate. An April 30, 2015 posting by Lisa Willemse on Genome Alberta’s Livestock blog has a twist in the final paragraph,

The spectre of undesirable inherited traits as a result of DNA disruption via genome editing in human germline has placed the technique – and the ethical debate – on the front page of newspapers around the globe. Calls for a moratorium on further research until both the ethical implications can be worked out and the procedure better refined and understood, will undoubtedly temper research activities in many labs for months and years to come.

On the surface, it’s hard to see how any of this will advance similar research in livestock or crops – at least initially.

Groups already wary of so-called “frankenfoods” may step up efforts to prevent genome-edited food products from hitting supermarket shelves. In the EU, where a stringent ban on genetically-modified (GM) foods is already in place, there are concerns that genome-edited foods will be captured under this rubric, holding back many perceived benefits. This includes pork and beef from animals with disease resistance, lower methane emissions and improved feed-to-food ratios, milk from higher-yield or hornless cattle, as well as food and feed crops with better, higher quality yields or weed resistance.

…

Still, at the heart of the human germline editing is the notion of a permanent genetic change that can be passed on to offspring, leading to concerns of designer babies and other advantages afforded only to those who can pay. This is far less of a concern in genome-editing involving crops and livestock, where the overriding aim is to increase food supply for the world’s population at lower cost. Given this, and that research for human medical benefits has always relied on safety testing and data accumulation through experimentation in non-human animals, it’s more likely that any moratorium in human studies will place increased pressure to demonstrate long-term safety of such techniques on those who are conducting the work in other species.

Willemse’s last paragraph offers a strong contrast to the Guardian and Nature pieces.

Finally, there’s a May 8, 2015 posting (which seems to be an automated summary of an article in the New Scientist) on a blog maintained by the Canadian Raelian Movement. These are people who believe that alien scientists landed on Earth and created all the forms of life on this planet. You can find more on their About page. In case it needs to be said, I do not subscribe to this belief system, but I do find it interesting in and of itself and because it is one of the few Canadian sites I could find offering an opinion on the matter, even if that opinion takes the form of a borrowed piece from the New Scientist.