What? 4th International Conference on Science Advice to Governments, INGSA2021.

Where? Palais des Congrès de Montréal, Québec, Canada and online at www.ingsa2021.org

When? 30 August – 2 September, 2021.

CONTEXT: The largest ever independent gathering of interest groups, thought-leaders, science advisors to governments and global institutions, researchers, academics, communicators and diplomats is taking place in Montreal and online. Organized by Prof Rémi Quirion, Chief Scientist of Québec, the conference brings together speakers from over 50 countries[1], from Brazil to Burkina Faso and from Ireland to Indonesia, plus over 2000 delegates from over 130 countries. Together they will spotlight what is really at stake in the relationship between science and policy-making, both during crises and within our daily lives. From the air we breathe, the food we eat and the cars we drive, to the medical treatments or vaccines we take and the education we provide to children, this relationship, and the decisions it can influence, matter immensely.

Prof Rémi Quirion, Conference Organizer, Chief Scientist of Québec and incoming President of INGSA added: “For those of us who believe wholeheartedly in evidence and the integrity of science, the past 18 months have been challenging. Information, correct and incorrect, can spread like a virus. The importance of open science and access to data to inform our UN sustainable development goals discussions or domestically as we strengthen the role of cities and municipalities, has never been more critical. I have no doubt that this transparent and honest platform led from Montréal will act as a carrier-wave for greater engagement”.

Chief Science Advisor of Canada and Conference co-organizer, Dr Mona Nemer, stated that: “Rapid scientific advances in managing the Covid pandemic have generated enormous public interest in evidence-based decision making. This attention comes with high expectations and an obligation to achieve results. Overcoming the current health crisis and future challenges will require global coordination in science advice, and INGSA is well positioned to carry out this important work. Canada and our international peers can benefit greatly from this collaboration.”

Sir Peter Gluckman, founding Chair of INGSA stated that: “This is a timely conference as we are at a turning point not just in the pandemic, but globally in our management of longer-term challenges that affect us all. INGSA has helped build and elevate open and ongoing public and policy dialogue about the role of robust evidence in sound policy making”.

He added that: “Issues that were considered marginal seven years ago when the network was created are today rightly seen as central to our social, environmental and economic wellbeing. The pandemic highlights the strengths and weaknesses of evidence-based policy-making at all levels of governance. Operating on all continents, INGSA demonstrates the value of a well-networked community of emerging and experienced practitioners and academics, from countries at all levels of development. Learning from each other, we can help bring scientific evidence more centrally into policy-making. INGSA has achieved much since its formation in 2014, but the energy shown in this meeting demonstrates our potential to do so much more”.

The conference was held previously in Auckland (2014), Brussels (2016) and Tokyo (2018), and this edition was delayed for one year due to Covid. The advantage of the new hybrid and virtual format is that organizers have been able to involve more speakers, broaden the thematic scope and offer the conference free to view online, reaching thousands more people. Examining the complex interactions between scientists, public policy and diplomatic relations at local, national, regional and international levels, especially in times of crisis, the overarching INGSA2021 theme is: “Build back wiser: knowledge, policy & publics in dialogue”.

The first three days will scrutinize everything from concrete case-studies outlining successes and failures in our advisory systems to how digital technologies and AI are reshaping the profession itself. The final day targets how expertise and action in the cultural context of the French-speaking world are encouraging partnerships and contributing to economic and social development. A highlight of the conference is the 2 September announcement of a new ‘Francophonie Science Advisory Network’.

Prof. Salim Abdool Karim, a member of the World Health Organization’s Science Council, and the face of South Africa’s Covid-19 science, speaking in the opening plenary outlined that: “As a past anti-apartheid activist now providing scientific advice to policy-makers, I have learnt that science and politics share common features. Both operate at the boundaries of knowledge and uncertainty, but approach problems differently. We scientists constantly question and challenge our assumptions, constantly searching for empiric evidence to determine the best options. In contrast, politicians are most often guided by the needs or demands of voters and constituencies, and by ideology”.

He added: “What is changing is that grass-roots citizens worldwide are no longer ill-informed and passive bystanders. And they are rightfully demanding greater transparency and accountability. This has brought the complex contradictions between evidence and ideology into the public eye. Covid-19 is not just a disease, its social fabric exemplifies humanity’s interdependence in slowing global spread and preventing new viral mutations through global vaccine equity. This starkly highlights the fault-lines between the rich and poor countries, especially the maldistribution of life-saving public health goods like vaccines. I will explore some of the key lessons from Covid-19 to guide a better response to the next pandemic”.

Speaking on a panel analysing different advisory models, Prof. Mark Ferguson, Chair of the European Innovation Council’s Advisory Board and Chief Science Advisor to the Government of Ireland, sounded a note of optimism and caution in stating that: “Around the world, many scientists have become public celebrities as citizens engage with science like never before. Every country has a new, much followed advisory body. With that comes tremendous opportunities to advance the status of science and the funding of scientific research. On the flipside, my view is that we must also be mindful of the threat of science and scientists being viewed as a political force”.

Strength in numbers

What makes the 4th edition of this biennial event stand out is its range of speakers, perhaps never assembled before, from all continents, who work at the boundary between science, society and policy and are willing to make their voices heard. In a truly ‘Olympics’ approach to getting all stakeholders on board, organisers succeeded in involving, amongst others, the UN Office for Disaster Risk Reduction, the United Nations Development Programme, UNESCO and the OECD. The in-house science services of the European Commission and Parliament, plus many country-specific science advisors, also feature prominently.

As organisers foster informed debate, we get a rare glimpse inside the science advisory worlds of the Comprehensive Nuclear Test Ban Treaty Organisation, the World Economic Forum and the Global Young Academy, to name a few. From Canadian doctors, educators and entrepreneurs and charitable foundations like the Wellcome Trust, to Science Europe and media organisations, the programme is rich in its diversity. The International Organisation of the Francophonie and a keynote address by H.E. Laurent Fabius, President of the Constitutional Council of the French Republic, are just two examples of major draws on the final day, which is dedicated to spotlighting advisory groups working in French.

…

INGSA’s Elections: New Canadian President and Three Vice Presidents from Chile, Ethiopia, UK

…

The International Network for Government Science Advice has recently undertaken a series of internal reforms intended to better equip it to respond to the growing demands for support from its international partners, while realising the project proposals and ideas of its members.

These reforms included the election in June 2021 of a new President to replace Sir Peter Gluckman (2014 – 2021) and the creation of three new Vice President roles.

These results will be announced at 13h15 on Wednesday, 1st September during a special conference plenary and awards ceremony. While noting the election results below, media are asked to respect this embargo.

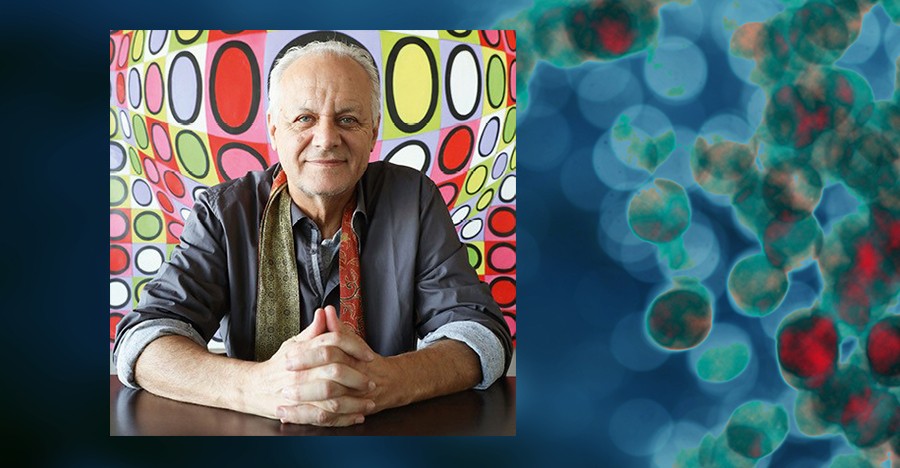

Professor Rémi Quirion, Chief Scientist of Québec (Canada), replaces Sir Peter Gluckman (New Zealand) as President of INGSA.

Professor Claire Craig (United Kingdom), CBE, Provost of Queen’s College Oxford and a member of the UK government’s AI Council, has been elected by members as the inaugural Vice President for Evidence.

Professor Binyam Sisay Mendisu (Ethiopia), PhD, Lecturer at the University of Addis Ababa and Programme Advisor, UNESCO Institute for Building Capacity in Africa, has been elected by members as the inaugural Vice President for Capacity Building.

Professor Soledad Quiroz Valenzuela (Chile), Science Advisor on Climate Change to the Ministry of Science, Technology, Knowledge and Innovation of the government of Chile, has been elected by members as the Vice President for Policy.

Satellite Events: From 7 – 9 September, as part of INGSA2021, the conference is partnering with local, national and international organisations to ignite further conversations about the science/policy/society interface. Six satellite events are planned to cover everything from climate science advice and energy policy, open science and publishing during a crisis, to the politicisation of science and pre-school scientific education. International delegates are equally encouraged to join in online.

About INGSA: Founded in 2014, with regional chapters in Africa, Asia, and Latin America and the Caribbean, INGSA has quickly established an important reputation as a collaborative platform for policy exchange, capacity building and research across diverse global science advisory organisations and national systems. Currently, over 5000 individuals and institutions are listed as members. Science communicators and members of the media are warmly welcomed to join.

As the body of work detailed on its website (www.ingsa.org) shows, the network aims, through workshops, conferences and a growing catalogue of tools and guidance, to enhance the global science-policy interface and improve the potential for evidence-informed policy formation at sub-national, national and transnational levels. INGSA operates as an affiliated body of the International Science Council, which acts as trustee of INGSA funds and hosts its governance committee. INGSA’s secretariat is based in Koi Tū: The Centre for Informed Futures at the University of Auckland in New Zealand.

Conference Programme: 4th International Conference on Science Advice to Government (ingsa2021.org)

Newly released compendium of Speaker Viewpoints: Download Essays From The Cutting Edge Of Science Advice – Viewpoints

[1] Argentina, Australia, Austria, Barbados, Belgium, Benin, Brazil, Burkina Faso, Cameroon, Canada, Chad, Colombia, Costa Rica, Côte d’Ivoire, Denmark, Estonia, Finland, France, Germany, Hong Kong, Indonesia, Ireland, Japan, Lebanon, Luxembourg, Malaysia, Mexico, Morocco, Netherlands, New Zealand, Pakistan, Papua New Guinea, Rwanda, Senegal, Singapore, Slovakia, South Africa, Spain, Sri Lanka, Sweden, Switzerland, Thailand, UK, USA.

As noted earlier this year in my January 28, 2021 posting, it’s SCWIST’s 40th anniversary and the organization is celebrating with a number of initiatives. Here are some of the latest, including a talk on science policy (from the August 2021 newsletter received via email),

SCWIST “STEM Forward Project” Receives Federal Funding

SCWIST’s “STEM Forward for Economic Prosperity” project proposal was among 237 projects across the country to receive funding from the $100 million Feminist Response Recovery Fund of the Government of Canada through the Women and Gender Equality Canada (WAGE) federal department.


iWIST and SCWIST Ink Affiliate MOU [memorandum of understanding]

Years in planning, the Island Women in Science and Technology (iWIST) of Victoria, British Columbia and SCWIST finally signed an Affiliate MOU (memorandum of understanding) on Aug 11, 2021.

The MOU strengthens our commitment to collaborate on advocacy (e.g. grants, policy and program changes at the Provincial and Federal level), events (networking, workshops, conferences), cross-promotion (event/program promotion via digital media), and membership growth (discounts for iWIST members to join SCWIST and vice versa).

Dr. Khristine Carino, SCWIST President, travelled to Victoria to sign the MOU in person. She was invited as an honoured guest to the iWIST annual summer picnic by Claire Skillen, iWIST President. Khristine’s travel expenses were paid from her own personal funds.

…

Discovery Foundation x SBN x SCWIST Business Mentorship Program: Enhancing Diversity in today’s Biotechnology Landscape

The Discovery Foundation, Student Biotechnology Network, and Society for Canadian Women in Science and Technology are proud to bring you the first-ever “Business Mentorship Program: Enhancing Diversity in today’s Biotechnology Landscape”.

The Business Mentorship Program aims to support historically underrepresented communities (BIPOC, Women, LGBTQIAS+ and more) in navigating the growth of the biotechnology industry. The program aims to foster relationships between individuals and professionals through networking and mentorship, providing education and training through workshops and seminars, and providing 1:1 consultation with industry leaders. Participants will be paired with mentors throughout the week and have the opportunity to deliver a pitch for the chance to win prizes at the annual Building Biotechnology Expo.

This is a one-week intensive program running from September 27th – October 1st, 2021 and is limited to 10 participants. Please apply early.

…

Events

September 10

Art of Science and Policy-Making Go Together

Science and policy-making go together. Acuitas’ [emphasis mine] Molly Sung shares her journey and how more scientists need to engage in this important area.

September 23

Beyond Appearances: Women of Courage and Resilience in STEM

As part of Gender Equality Week in Canada, this forum hosted by the Québec division of the Society for Canadian Women in Science and Technology (SCWIST) will feature four inspiring panellists with varied backgrounds who study or work in science, technology, engineering and mathematics (STEM) in Québec. These immigrant women left their loved ones and their home countries to come to Québec and contribute actively to Québec’s scientific research.

…

The ‘Art of Science and Policy-Making Go Together’ talk seems to be aimed at persuasion and is not likely to offer any insider information as to how the BC life sciences effort is progressing. For a somewhat less rosy view of science and policy efforts, you can check out my August 23, 2021 posting, Who’s running the life science companies’ public relations campaign in British Columbia (Vancouver, Canada)?; scroll down to ‘The BC biotech gorillas’ subhead for more about Acuitas and some of the other life sciences companies in British Columbia (BC).

Gerald Bronner, Paris Diderot University, France

Gordon Gauchat, University of Wisconsin-Milwaukee, United States

Mehita Iqani, University of the Witwatersrand, South Africa

Kyoko Sato, Stanford University, United States

Peter Weingart, Bielefeld University, Germany