Following on my May 11, 2018 posting about the International Telecommunication Union (ITU) and the 2018 AI for Good Global Summit in mid-May, there’s an announcement. My other bit of AI news concerns animal testing.

Leveraging the power of AI for health

A July 24, 2018 ITU press release (a shorter version was received via email) announces a joint initiative focused on improving health,

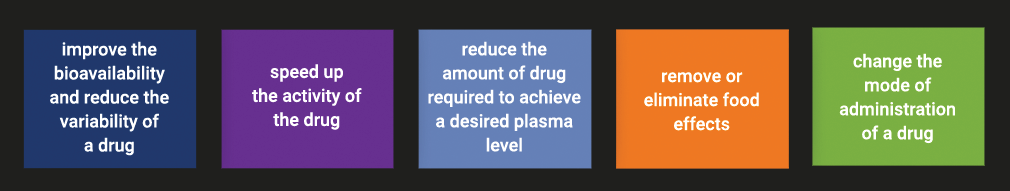

Two United Nations specialized agencies are joining forces to expand the use of artificial intelligence (AI) in the health sector to a global scale, and to leverage the power of AI to advance health for all worldwide. The International Telecommunication Union (ITU) and the World Health Organization (WHO) will work together through the newly established ITU Focus Group on AI for Health to develop an international “AI for health” standards framework and to identify use cases of AI in the health sector that can be scaled-up for global impact. The group is open to all interested parties.

“AI could help patients to assess their symptoms, enable medical professionals in underserved areas to focus on critical cases, and save great numbers of lives in emergencies by delivering medical diagnoses to hospitals before patients arrive to be treated,” said ITU Secretary-General Houlin Zhao. “ITU and WHO plan to ensure that such capabilities are available worldwide for the benefit of everyone, everywhere.”

The demand for such a platform was first identified by participants of the second AI for Good Global Summit held in Geneva, 15-17 May 2018. During the summit, AI and the health sector were recognized as a very promising combination, and it was announced that AI-powered technologies such as skin disease recognition and diagnostic applications based on symptom questions could be deployed on six billion smartphones by 2021.

The ITU Focus Group on AI for Health is coordinated through ITU’s Telecommunication Standardization Sector – which works with ITU’s 193 Member States and more than 800 industry and academic members to establish global standards for emerging ICT innovations. It will lead an intensive two-year analysis of international standardization opportunities towards delivery of a benchmarking framework of international standards and recommendations by ITU and WHO for the use of AI in the health sector.

“I believe the subject of AI for health is both important and useful for advancing health for all,” said WHO Director-General Tedros Adhanom Ghebreyesus.

The ITU Focus Group on AI for Health will also engage researchers, engineers, practitioners, entrepreneurs and policy makers to develop guidance documents for national administrations, to steer the creation of policies that ensure the safe, appropriate use of AI in the health sector.

“1.3 billion people have a mobile phone and we can use this technology to provide AI-powered health data analytics to people with limited or no access to medical care. AI can enhance health by improving medical diagnostics and associated health intervention decisions on a global scale,” said Thomas Wiegand, ITU Focus Group on AI for Health Chairman, and Executive Director of the Fraunhofer Heinrich Hertz Institute, as well as professor at TU Berlin.

He added, “The health sector is in many countries among the largest economic sectors or one of the fastest-growing, signalling a particularly timely need for international standardization of the convergence of AI and health.”

Data analytics are certain to form a large part of the ITU focus group’s work. AI systems are proving increasingly adept at interpreting laboratory results and medical imagery and extracting diagnostically relevant information from text or complex sensor streams.

As part of this, the ITU Focus Group for AI for Health will also produce an assessment framework to standardize the evaluation and validation of AI algorithms — including the identification of structured and normalized data to train AI algorithms. It will develop open benchmarks with the aim of these becoming international standards.

The ITU Focus Group for AI for Health will report to the ITU standardization expert group for multimedia, Study Group 16.

I got curious about Study Group 16 (from the Study Group 16 at a glance webpage),

Study Group 16 leads ITU’s standardization work on multimedia coding, systems and applications, including the coordination of related studies across the various ITU-T SGs. It is also the lead study group on ubiquitous and Internet of Things (IoT) applications; telecommunication/ICT accessibility for persons with disabilities; intelligent transport system (ITS) communications; e-health; and Internet Protocol television (IPTV).

Multimedia is at the core of the most recent advances in information and communication technologies (ICTs) – especially when we consider that most innovation today is agnostic of the transport and network layers, focusing rather on the higher OSI model layers.

SG16 is active in all aspects of multimedia standardization, including terminals, architecture, protocols, security, mobility, interworking and quality of service (QoS). It focuses its studies on telepresence and conferencing systems; IPTV; digital signage; speech, audio and visual coding; network signal processing; PSTN modems and interfaces; facsimile terminals; and ICT accessibility.

…

I wonder which group deals with artificial intelligence and, possibly, robots.

Chemical testing without animals

Thomas Hartung, professor of environmental health and engineering at Johns Hopkins University (US), describes in his July 25, 2018 essay (written for The Conversation) on phys.org the situation where chemical testing is concerned,

Most consumers would be dismayed with how little we know about the majority of chemicals. Only 3 percent of industrial chemicals – mostly drugs and pesticides – are comprehensively tested. Most of the 80,000 to 140,000 chemicals in consumer products have not been tested at all or just examined superficially to see what harm they may do locally, at the site of contact and at extremely high doses.

I am a physician and former head of the European Center for the Validation of Alternative Methods of the European Commission (2002-2008), and I am dedicated to finding faster, cheaper and more accurate methods of testing the safety of chemicals. To that end, I now lead a new program at Johns Hopkins University to revamp the safety sciences.

As part of this effort, we have now developed a computer method of testing chemicals that could save more than US$1 billion annually and more than 2 million animals. Especially in times where the government is rolling back regulations on the chemical industry, new methods to identify dangerous substances are critical for human and environmental health.

Having written on the topic of alternatives to animal testing on a number of occasions (my December 26, 2014 posting provides an overview of sorts), I was particularly interested to see this in Hartung’s July 25, 2018 essay on The Conversation (Note: Links have been removed),

Following the vision of Toxicology for the 21st Century, a movement led by U.S. agencies to revamp safety testing, important work was carried out by my Ph.D. student Tom Luechtefeld at the Johns Hopkins Center for Alternatives to Animal Testing. Teaming up with Underwriters Laboratories, we have now leveraged an expanded database and machine learning to predict toxic properties. As we report in the journal Toxicological Sciences, we developed a novel algorithm and database for analyzing chemicals and determining their toxicity – what we call read-across structure activity relationship, RASAR.

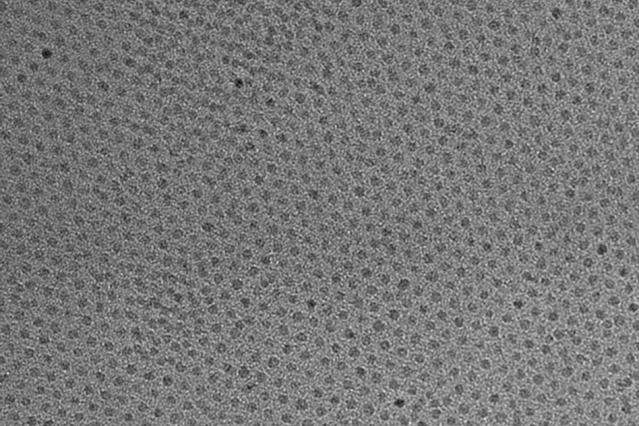

This graphic reveals a small part of the chemical universe. Each dot represents a different chemical. Chemicals that are close together have similar structures and often properties. Thomas Hartung, CC BY-SA

To do this, we first created an enormous database with 10 million chemical structures by adding more public databases filled with chemical data, which, if you crunch the numbers, represent 50 trillion pairs of chemicals. A supercomputer then created a map of the chemical universe, in which chemicals are positioned close together if they share many structures in common and far where they don’t. Most of the time, any molecule close to a toxic molecule is also dangerous. Even more likely if many toxic substances are close, harmless substances are far. Any substance can now be analyzed by placing it into this map.

If this sounds simple, it’s not. It requires half a billion mathematical calculations per chemical to see where it fits. The chemical neighborhood focuses on 74 characteristics which are used to predict the properties of a substance. Using the properties of the neighboring chemicals, we can predict whether an untested chemical is hazardous. For example, for predicting whether a chemical will cause eye irritation, our computer program not only uses information from similar chemicals, which were tested on rabbit eyes, but also information for skin irritation. This is because what typically irritates the skin also harms the eye.

How well does the computer identify toxic chemicals?

This method will be used for new untested substances. However, if you do this for chemicals for which you actually have data, and compare prediction with reality, you can test how well this prediction works. We did this for 48,000 chemicals that were well characterized for at least one aspect of toxicity, and we found the toxic substances in 89 percent of cases.

This is clearly more accurate than the corresponding animal tests which only yield the correct answer 70 percent of the time. The RASAR shall now be formally validated by an interagency committee of 16 U.S. agencies, including the EPA [Environmental Protection Agency] and FDA [Food and Drug Administration], that will challenge our computer program with chemicals for which the outcome is unknown. This is a prerequisite for acceptance and use in many countries and industries.

The potential is enormous: The RASAR approach is in essence based on chemical data that was registered for the 2010 and 2013 REACH [Registration, Evaluation, Authorization and Restriction of Chemicals] deadlines [in Europe]. If our estimates are correct and chemical producers would have not registered chemicals after 2013, and instead used our RASAR program, we would have saved 2.8 million animals and $490 million in testing costs – and received more reliable data. We have to admit that this is a very theoretical calculation, but it shows how valuable this approach could be for other regulatory programs and safety assessments.

In the future, a chemist could check RASAR before even synthesizing their next chemical to check whether the new structure will have problems. Or a product developer can pick alternatives to toxic substances to use in their products. This is a powerful technology, which is only starting to show all its potential.
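For anyone who’d like a feel for the ‘chemical neighbourhood’ idea in Hartung’s essay, here’s a minimal sketch of my own in Python. It is not the RASAR algorithm or its database. I’ve swapped in a plain nearest-neighbour vote over Tanimoto similarity, and the feature names, the six toy chemicals, their toxic/non-toxic labels and the function names are all hypothetical. The sketch also checks the ‘50 trillion pairs’ arithmetic and computes sensitivity (the share of truly toxic chemicals that get flagged), which is how I read the ‘89 percent of cases’ figure.

```python
from math import comb


def pair_count(n: int) -> int:
    """Number of distinct pairs among n chemicals: n * (n - 1) / 2."""
    return comb(n, 2)


def tanimoto(a: frozenset, b: frozenset) -> float:
    """Shared features divided by all features seen in either chemical."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def predict_toxic(query: frozenset, labelled: list, k: int = 3) -> bool:
    """Majority vote among the k labelled chemicals most similar to query."""
    ranked = sorted(labelled, key=lambda item: tanimoto(query, item[0]), reverse=True)
    nearest = ranked[:k]
    toxic_votes = sum(1 for _, is_toxic in nearest if is_toxic)
    return toxic_votes * 2 > len(nearest)


def leave_one_out_sensitivity(labelled: list, k: int = 3) -> float:
    """Hide each known-toxic chemical in turn and see whether it gets flagged."""
    hits = total = 0
    for i, (features, is_toxic) in enumerate(labelled):
        if not is_toxic:
            continue
        others = labelled[:i] + labelled[i + 1:]
        total += 1
        hits += predict_toxic(features, others, k)
    return hits / total if total else 0.0


# Hypothetical toy data: (structural features, known to be toxic?)
LABELLED = [
    (frozenset({"nitro", "aromatic_ring", "halogen"}), True),
    (frozenset({"nitro", "aromatic_ring"}), True),
    (frozenset({"aromatic_ring", "halogen", "amine"}), True),
    (frozenset({"hydroxyl", "alkyl_chain"}), False),
    (frozenset({"hydroxyl", "alkyl_chain", "ether"}), False),
    (frozenset({"carboxyl", "alkyl_chain"}), False),
]

if __name__ == "__main__":
    untested = frozenset({"nitro", "aromatic_ring", "amine"})
    print("Pairs among 10 million chemicals:", pair_count(10_000_000))
    print("Untested chemical flagged as toxic:", predict_toxic(untested, LABELLED))
    print("Leave-one-out sensitivity:", leave_one_out_sensitivity(LABELLED))
```

The pair count comes out just under 50 trillion, which matches the essay’s figure. The real system, as described, works with 74 characteristics and some 10 million structures, so treat this as an illustration of the principle only.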

It’s been my experience that claims of having led a movement (such as Toxicology for the 21st Century) are often contested, with many others competing for the title of ‘leader’ or ‘first’. That said, this RASAR approach seems very exciting, especially in light of the skepticism about limiting animal testing and/or making it unnecessary that I noted in my December 26, 2014 posting. It came from someone I thought knew better.

Here’s a link to and a citation for the paper mentioned in Hartung’s essay,

Machine learning of toxicological big data enables read-across structure activity relationships (RASAR) outperforming animal test reproducibility by Thomas Luechtefeld, Dan Marsh, Craig Rowlands, Thomas Hartung. Toxicological Sciences, kfy152, https://doi.org/10.1093/toxsci/kfy152 Published: 11 July 2018

This paper is open access.
