I have two brain news bits, one about neural networks and quantum entanglement and another about how the brain operates in* more than three dimensions.

Quantum entanglement and neural networks

A June 13, 2017 news item on phys.org describes how machine learning can be used to solve problems in physics (Note: Links have been removed),

Machine learning, the field that’s driving a revolution in artificial intelligence, has cemented its role in modern technology. Its tools and techniques have led to rapid improvements in everything from self-driving cars and speech recognition to the digital mastery of an ancient board game.

Now, physicists are beginning to use machine learning tools to tackle a different kind of problem, one at the heart of quantum physics. In a paper published recently in Physical Review X, researchers from JQI [Joint Quantum Institute] and the Condensed Matter Theory Center (CMTC) at the University of Maryland showed that certain neural networks—abstract webs that pass information from node to node like neurons in the brain—can succinctly describe wide swathes of quantum systems.

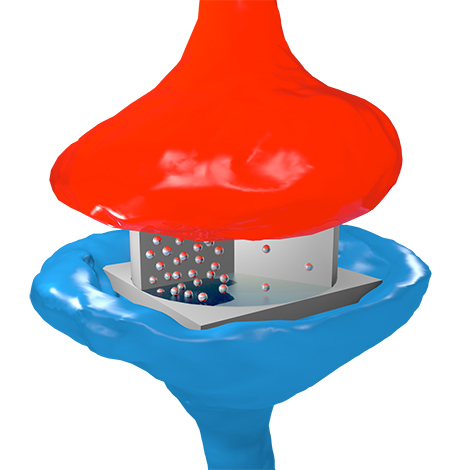

An artist’s rendering of a neural network with two layers. At the top is a real quantum system, like atoms in an optical lattice. Below is a network of hidden neurons that capture their interactions (Credit: E. Edwards/JQI)

A June 12, 2017 JQI news release by Chris Cesare, which originated the news item, describes how neural networks can represent quantum entanglement,

Dongling Deng, a JQI Postdoctoral Fellow who is a member of CMTC and the paper’s first author, says that researchers who use computers to study quantum systems might benefit from the simple descriptions that neural networks provide. “If we want to numerically tackle some quantum problem,” Deng says, “we first need to find an efficient representation.”

On paper and, more importantly, on computers, physicists have many ways of representing quantum systems. Typically these representations comprise lists of numbers describing the likelihood that a system will be found in different quantum states. But it becomes difficult to extract properties or predictions from a digital description as the number of quantum particles grows, and the prevailing wisdom has been that entanglement—an exotic quantum connection between particles—plays a key role in thwarting simple representations.
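
As an aside, here is a rough sketch of why those "lists of numbers" get unwieldy. It assumes each particle has just two levels (a spin-1/2 or a qubit) and that each number is stored as a 16-byte complex value. Both are my assumptions for illustration, not details from the news release, and the function names are made up.

```python
def state_vector_size(num_particles: int, levels: int = 2) -> int:
    """Count the numbers (amplitudes) in a full list-of-numbers description.

    Assumes every particle has the same number of levels (2 by default).
    """
    return levels ** num_particles


def memory_bytes(num_particles: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to store the full list, assuming 16 bytes per amplitude."""
    return state_vector_size(num_particles) * bytes_per_amplitude


if __name__ == "__main__":
    for n in (10, 20, 30, 40):
        gib = memory_bytes(n) / 2**30
        print(f"{n} particles: {state_vector_size(n)} amplitudes, {gib:.6g} GiB")
```

Under these assumptions every extra particle doubles the length of the list, which is why a more compact representation matters.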

The neural networks used by Deng and his collaborators—CMTC Director and JQI Fellow Sankar Das Sarma and Fudan University physicist and former JQI Postdoctoral Fellow Xiaopeng Li—can efficiently represent quantum systems that harbor lots of entanglement, a surprising improvement over prior methods.

What’s more, the new results go beyond mere representation. “This research is unique in that it does not just provide an efficient representation of highly entangled quantum states,” Das Sarma says. “It is a new way of solving intractable, interacting quantum many-body problems that uses machine learning tools to find exact solutions.”

Neural networks and their accompanying learning techniques powered AlphaGo, the computer program that beat some of the world’s best Go players last year (and the top player this year). The news excited Deng, an avid fan of the board game. Last year, around the same time as AlphaGo’s triumphs, a paper appeared that introduced the idea of using neural networks to represent quantum states, although it gave no indication of exactly how wide the tool’s reach might be. “We immediately recognized that this should be a very important paper,” Deng says, “so we put all our energy and time into studying the problem more.”

The result was a more complete account of the capabilities of certain neural networks to represent quantum states. In particular, the team studied neural networks that use two distinct groups of neurons. The first group, called the visible neurons, represents real quantum particles, like atoms in an optical lattice or ions in a chain. To account for interactions between particles, the researchers employed a second group of neurons—the hidden neurons—which link up with visible neurons. These links capture the physical interactions between real particles, and as long as the number of connections stays relatively small, the neural network description remains simple.

Specifying a number for each connection and mathematically forgetting the hidden neurons can produce a compact representation of many interesting quantum states, including states with topological characteristics and some with surprising amounts of entanglement.
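
To make "mathematically forgetting the hidden neurons" a little more concrete, here is a minimal sketch. It assumes the network has the restricted-Boltzmann-machine form commonly used for neural-network quantum states, with visible and hidden neurons each taking the values -1 or +1. The news release does not spell this out, so treat the form, the names `a`, `b` and `W`, and the example numbers as assumptions. "Forgetting" then means summing over every hidden configuration, and that sum collapses to a product of cosh terms.

```python
import itertools
import math


def amplitude_brute_force(sigma, a, b, W):
    """Sum exp(...) over every hidden configuration h_j in {-1, +1}."""
    total = 0.0
    for h in itertools.product((-1, 1), repeat=len(b)):
        exponent = sum(a[i] * sigma[i] for i in range(len(sigma)))
        exponent += sum(b[j] * h[j] for j in range(len(h)))
        exponent += sum(W[i][j] * sigma[i] * h[j]
                        for i in range(len(sigma)) for j in range(len(h)))
        total += math.exp(exponent)
    return total


def amplitude_closed_form(sigma, a, b, W):
    """Same quantity with the hidden sum done analytically.

    Each hidden neuron contributes a factor 2*cosh(b_j + sum_i W_ij * sigma_i).
    """
    visible_term = math.exp(sum(a_i * s_i for a_i, s_i in zip(a, sigma)))
    product = 1.0
    for j in range(len(b)):
        theta = b[j] + sum(W[i][j] * sigma[i] for i in range(len(sigma)))
        product *= 2.0 * math.cosh(theta)
    return visible_term * product


if __name__ == "__main__":
    # Made-up parameters: 3 visible neurons, 2 hidden neurons.
    a = [0.1, -0.2, 0.05]
    b = [0.3, -0.1]
    W = [[0.2, -0.4], [0.0, 0.5], [-0.3, 0.1]]
    for sigma in itertools.product((-1, 1), repeat=3):
        print(sigma, amplitude_closed_form(sigma, a, b, W))
```

In this sketch the description needs one number per neuron and one per connection (3 + 2 + 6 = 11 here), and the closed form never has to enumerate the hidden configurations.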

Beyond its potential as a tool in numerical simulations, the new framework allowed Deng and collaborators to prove some mathematical facts about the families of quantum states represented by neural networks. For instance, neural networks with only short-range interactions—those in which each hidden neuron is only connected to a small cluster of visible neurons—have a strict limit on their total entanglement. This technical result, known as an area law, is a research pursuit of many condensed matter physicists.

These neural networks can’t capture everything, though. “They are a very restricted regime,” Deng says, adding that they don’t offer an efficient universal representation. If they did, they could be used to simulate a quantum computer with an ordinary computer, something physicists and computer scientists think is very unlikely. Still, the collection of states that they do represent efficiently, and the overlap of that collection with other representation methods, is an open problem that Deng says is ripe for further exploration.

Here’s a link to and a citation for the paper,

Quantum Entanglement in Neural Network States by Dong-Ling Deng, Xiaopeng Li, and S. Das Sarma. Phys. Rev. X 7, 021021 – Published 11 May 2017

This paper is open access.

Blue Brain and the multidimensional universe

Blue Brain is a Swiss government brain research initiative which officially came to life in 2006 although the initial agreement between the École Polytechnique Fédérale de Lausanne (EPFL) and IBM was signed in 2005 (according to the project’s Timeline page). Moving on, the project’s latest research reveals something astounding (from a June 12, 2017 Frontiers Publishing press release on EurekAlert),

For most people, it is a stretch of the imagination to understand the world in four dimensions but a new study has discovered structures in the brain with up to eleven dimensions – ground-breaking work that is beginning to reveal the brain’s deepest architectural secrets.

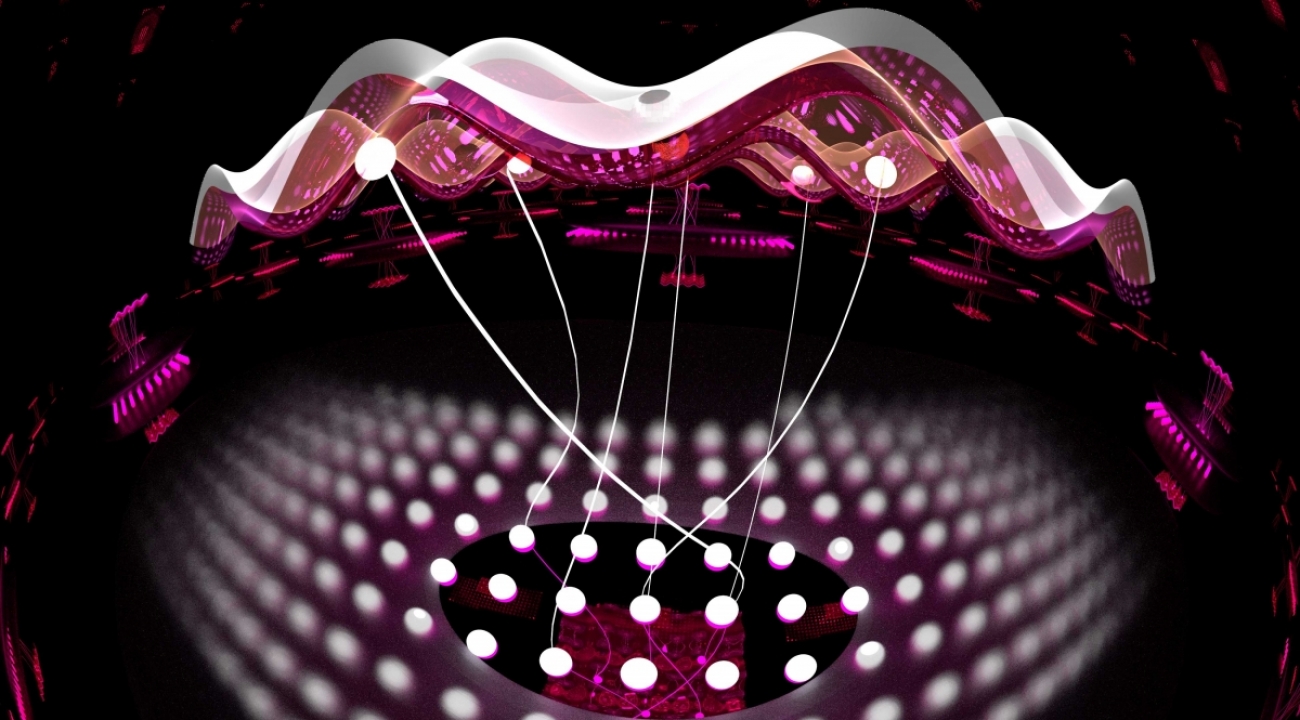

Using algebraic topology in a way that it has never been used before in neuroscience, a team from the Blue Brain Project has uncovered a universe of multi-dimensional geometrical structures and spaces within the networks of the brain.

The research, published today in Frontiers in Computational Neuroscience, shows that these structures arise when a group of neurons forms a clique: each neuron connects to every other neuron in the group in a very specific way that generates a precise geometric object. The more neurons there are in a clique, the higher the dimension of the geometric object.
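
For the curious, here is a small sketch of counting such cliques in a directed network. It rests on two assumptions of mine: that "a very specific way" means the neurons can be put in an order where every earlier neuron connects to every later one, and that a clique of n neurons has dimension n - 1 (two neurons make a 1D rod, three a 2D triangle). The function name and the toy networks are hypothetical, not taken from the study.

```python
from collections import Counter


def count_directed_cliques(edges):
    """Count directed cliques by dimension.

    edges: iterable of (source, target) pairs; self-loops are ignored.
    Returns {dimension: count}, where dimension = neurons in clique - 1.
    """
    successors = {}
    for source, target in edges:
        successors.setdefault(source, set())
        successors.setdefault(target, set())
        if source != target:
            successors[source].add(target)

    counts = Counter()

    def extend(dimension, candidates):
        # candidates: neurons that every neuron chosen so far connects to.
        for node in candidates:
            counts[dimension] += 1
            extend(dimension + 1, candidates & successors[node])

    for node in successors:
        counts[0] += 1  # a single neuron is a 0-dimensional clique
        extend(1, successors[node])
    return dict(counts)


if __name__ == "__main__":
    # Four neurons, each connecting to all later ones: one 3D clique.
    ordered = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]
    print(count_directed_cliques(ordered))
    # Three neurons connected in a loop: no 2D clique under this definition.
    loop = [(0, 1), (1, 2), (2, 0)]
    print(count_directed_cliques(loop))
```

The loop example shows why the direction of the connections matters under this reading: three neurons that are all connected still do not form a 2D object unless the connections can be consistently ordered.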

“We found a world that we had never imagined,” says neuroscientist Henry Markram, director of Blue Brain Project and professor at the EPFL in Lausanne, Switzerland, “there are tens of millions of these objects even in a small speck of the brain, up through seven dimensions. In some networks, we even found structures with up to eleven dimensions.”

Markram suggests this may explain why it has been so hard to understand the brain. “The mathematics usually applied to study networks cannot detect the high-dimensional structures and spaces that we now see clearly.”

If 4D worlds stretch our imagination, worlds with 5, 6 or more dimensions are too complex for most of us to comprehend. This is where algebraic topology comes in: a branch of mathematics that can describe systems with any number of dimensions. The mathematicians who brought algebraic topology to the study of brain networks in the Blue Brain Project were Kathryn Hess from EPFL and Ran Levi from Aberdeen University.

“Algebraic topology is like a telescope and microscope at the same time. It can zoom into networks to find hidden structures – the trees in the forest – and see the empty spaces – the clearings – all at the same time,” explains Hess.

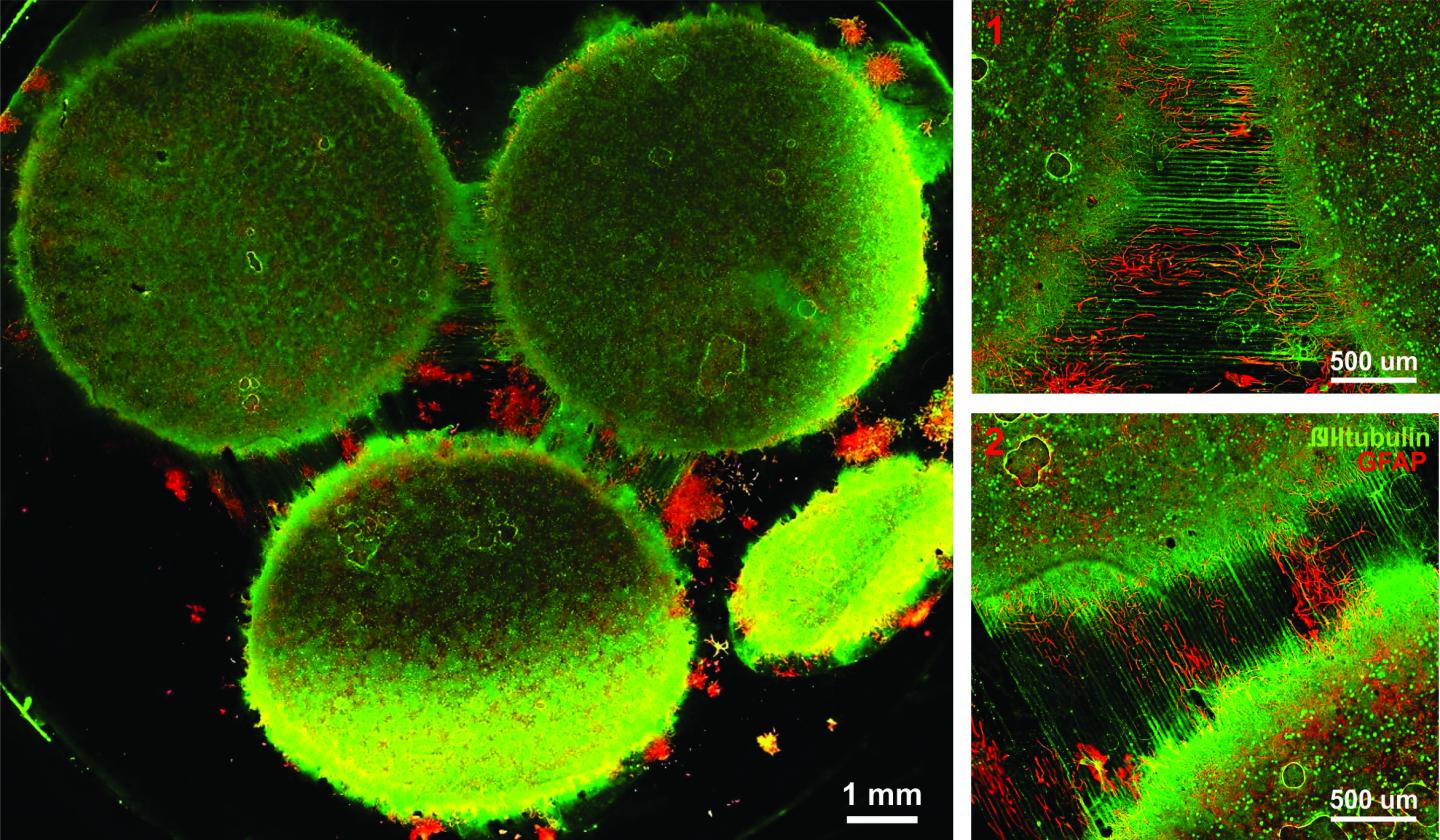

In 2015, Blue Brain published the first digital copy of a piece of the neocortex – the most evolved part of the brain and the seat of our sensations, actions, and consciousness. In this latest research, using algebraic topology, multiple tests were performed on the virtual brain tissue to show that the multi-dimensional brain structures discovered could never be produced by chance. Experiments were then performed on real brain tissue in the Blue Brain’s wet lab in Lausanne confirming that the earlier discoveries in the virtual tissue are biologically relevant and also suggesting that the brain constantly rewires during development to build a network with as many high-dimensional structures as possible.

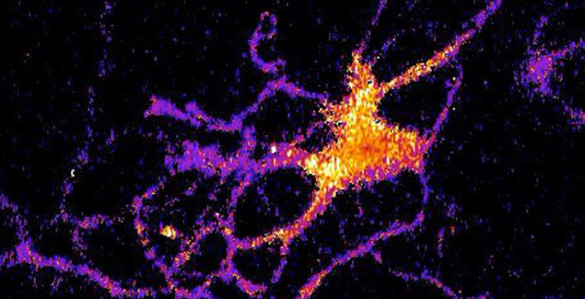

When the researchers presented the virtual brain tissue with a stimulus, cliques of progressively higher dimensions assembled momentarily to enclose high-dimensional holes that the researchers refer to as cavities. “The appearance of high-dimensional cavities when the brain is processing information means that the neurons in the network react to stimuli in an extremely organized manner,” says Levi. “It is as if the brain reacts to a stimulus by building then razing a tower of multi-dimensional blocks, starting with rods (1D), then planks (2D), then cubes (3D), and then more complex geometries with 4D, 5D, etc. The progression of activity through the brain resembles a multi-dimensional sandcastle that materializes out of the sand and then disintegrates.”

The big question these researchers are asking now is whether the intricacy of tasks we can perform depends on the complexity of the multi-dimensional “sandcastles” the brain can build. Neuroscience has also been struggling to find where the brain stores its memories. “They may be ‘hiding’ in high-dimensional cavities,” Markram speculates.

###

…

About Blue Brain

The aim of the Blue Brain Project, a Swiss brain initiative founded and directed by Professor Henry Markram, is to build accurate, biologically detailed digital reconstructions and simulations of the rodent brain, and ultimately, the human brain. The supercomputer-based reconstructions and simulations built by Blue Brain offer a radically new approach for understanding the multilevel structure and function of the brain. http://bluebrain.epfl.ch

About Frontiers

Frontiers is a leading community-driven open-access publisher. By taking publishing entirely online, we drive innovation with new technologies to make peer review more efficient and transparent. We provide impact metrics for articles and researchers, and merge open access publishing with a research network platform – Loop – to catalyse research dissemination, and popularize research to the public, including children. Our goal is to increase the reach and impact of research articles and their authors. Frontiers has received the ALPSP Gold Award for Innovation in Publishing in 2014. http://www.frontiersin.org

Here’s a link to and a citation for the paper,

Cliques of Neurons Bound into Cavities Provide a Missing Link between Structure and Function by Michael W. Reimann, Max Nolte, Martina Scolamiero, Katharine Turner, Rodrigo Perin, Giuseppe Chindemi, Paweł Dłotko, Ran Levi, Kathryn Hess, and Henry Markram. Front. Comput. Neurosci., 12 June 2017 | https://doi.org/10.3389/fncom.2017.00048

This paper is open access.

*Feb. 3, 2021: ‘on’ changed to ‘in’