Kudos to anyone who recognized the reference to Pauline Kael (she changed film criticism forever) and her book “I Lost it at the Movies.” Of course, her book title was a bit of sexual innuendo, quite risqué for an important film critic in 1965 but appropriate for a period (the 1960s) associated with a sexual revolution. (There’s more about the 1960s sexual revolution in the US, along with mention of a prior sexual revolution in the 1920s, in this Wikipedia entry.)

The title for this commentary is based on an anecdote from Dr. Andrew Maynard’s (director of the Arizona State University [ASU] Risk Innovation Lab) popular science and technology book, “Films from the Future: The Technology and Morality of Sci-Fi Movies.”

The ‘title-inspiring’ anecdote concerns Maynard’s first viewing of ‘2001: A Space Odyssey’ when, as a rather “bratty” 16-year-old who preferred to read science fiction, he discovered new ways of seeing and imagining the world. Maynard isn’t explicit about when he became a ‘techno nerd’ or how movies gave him an experience books couldn’t, but presumably at 16 he was already gearing up for a career in the sciences. That ‘movie’ revelation, received in front of a black and white television on January 1, 1982, eventually led him to write “Films from the Future.” (He has a PhD in physics which he is now applying to the field of risk innovation. For a more detailed description of Dr. Maynard and his work, there’s his ASU profile webpage and, of course, the introduction to his book.)

The book is quite timely. I don’t know how many people have noticed but science and scientific innovation are being covered more frequently in the media than they have been in many years. Science fairs and festivals are being founded on what seems to be a daily basis and you can now find science in art galleries. (Not to mention the movies and television where science topics are covered in comic book adaptations, in comedy, and in standard science fiction style.) Much of this activity is centered on what’s called ‘emerging technologies’. These technologies are why people argue for what’s known as ‘blue sky’ or ‘basic’ or ‘fundamental’ science, for without that science there would be no emerging technology.

Films from the Future

Isn’t reading the Table of Contents (ToC) the best way to approach a book? (From Films from the Future; Note: The formatting has been altered),

Table of Contents

Chapter One

In the Beginning 14

Beginnings 14

Welcome to the Future 16

The Power of Convergence 18

Socially Responsible Innovation 21

A Common Point of Focus 25

Spoiler Alert 26

Chapter Two

Jurassic Park: The Rise of Resurrection Biology 27

When Dinosaurs Ruled the World 27

De-Extinction 31

Could We, Should We? 36

The Butterfly Effect 39

Visions of Power 43

Chapter Three

Never Let Me Go: A Cautionary Tale of Human Cloning 46

Sins of Futures Past 46

Cloning 51

Genuinely Human? 56

Too Valuable to Fail? 62

Chapter Four

Minority Report: Predicting Criminal Intent 64

Criminal Intent 64

The “Science” of Predicting Bad Behavior 69

Criminal Brain Scans 74

Machine Learning-Based Precognition 77

Big Brother, Meet Big Data 79

Chapter Five

Limitless: Pharmaceutically-enhanced Intelligence 86

A Pill for Everything 86

The Seduction of Self-Enhancement 89

Nootropics 91

If You Could, Would You? 97

Privileged Technology 101

Our Obsession with Intelligence 105

Chapter Six

Elysium: Social Inequity in an Age of Technological Extremes 110

The Poor Shall Inherit the Earth 110

Bioprinting Our Future Bodies 115

The Disposable Workforce 119

Living in an Automated Future 124

Chapter Seven

Ghost in the Shell: Being Human in an Augmented Future 129

Through a Glass Darkly 129

Body Hacking 135

More than “Human”? 137

Plugged In, Hacked Out 142

Your Corporate Body 147

Chapter Eight

Ex Machina: AI and the Art of Manipulation 154

Plato’s Cave 154

The Lure of Permissionless Innovation 160

Technologies of Hubris 164

Superintelligence 169

Defining Artificial Intelligence 172

Artificial Manipulation 175

Chapter Nine

Transcendence: Welcome to the Singularity 180

Visions of the Future 180

Technological Convergence 184

Enter the Neo-Luddites 190

Techno-Terrorism 194

Exponential Extrapolation 200

Make-Believe in the Age of the Singularity 203

Chapter Ten

The Man in the White Suit: Living in a Material World 208

There’s Plenty of Room at the Bottom 208

Mastering the Material World 213

Myopically Benevolent Science 220

Never Underestimate the Status Quo 224

It’s Good to Talk 227

Chapter Eleven

Inferno: Immoral Logic in an Age of Genetic Manipulation 231

Decoding Make-Believe 231

Weaponizing the Genome 234

Immoral Logic? 238

The Honest Broker 242

Dictating the Future 248

Chapter Twelve

The Day After Tomorrow: Riding the Wave of Climate Change 251

Our Changing Climate 251

Fragile States 255

A Planetary “Microbiome” 258

The Rise of the Anthropocene 260

Building Resiliency 262

Geoengineering the Future 266

Chapter Thirteen

Contact: Living by More than Science Alone 272

An Awful Waste of Space 272

More than Science Alone 277

Occam’s Razor 280

What If We’re Not Alone? 283

Chapter Fourteen

Looking to the Future 288

Acknowledgments 293

The ToC gives the reader a pretty good clue as to where the author is going with the book, and Maynard explains how he chose his movies in his introductory chapter (from Films from the Future),

“There are some quite wonderful science fiction movies that didn’t make the cut because they didn’t fit the overarching narrative (Blade Runner and its sequel Blade Runner 2049, for instance, and the first of the Matrix trilogy). There are also movies that bombed with the critics, but were included because they ably fill a gap in the bigger story around emerging and converging technologies. Ultimately, the movies that made the cut were chosen because, together, they create an overarching narrative around emerging trends in biotechnologies, cybertechnologies, and materials-based technologies, and they illuminate a broader landscape around our evolving relationship with science and technology. And, to be honest, they are all movies that I get a kick out of watching.” (p. 17)

Jurassic Park (Chapter Two)

Dinosaurs do not interest me—they never have. Despite my profound indifference I did see the movie, Jurassic Park, when it was first released (someone talked me into going). And, I am still profoundly indifferent. Thankfully, Dr. Maynard finds meaning and a connection to current trends in biotechnology,

Jurassic Park is unabashedly a movie about dinosaurs. But it’s also a movie about greed, ambition, genetic engineering, and human folly—all rich pickings for thinking about the future, and what could possibly go wrong. (p. 28)

…

What really stands out with Jurassic Park, over twenty-five years later, is how it reveals a very human side of science and technology. This comes out in questions around when we should tinker with technology and when we should leave well enough alone. But there is also a narrative here that appears time and time again with the movies in this book, and that is how we get our heads around the sometimes oversized roles mega-entrepreneurs play in dictating how new tech is used, and possibly abused. These are all issues that are just as relevant now as they were in 1993, and are front and center of ensuring that the technology-enabled future we’re building is one where we want to live, and not one where we’re constantly fighting for our lives. (pp. 30-1)

He also describes a connection to current trends in biotechnology,

De-Extinction

In a far corner of Siberia, two Russians—Sergey Zimov and his son Nikita—are attempting to recreate the Ice Age. More precisely, their vision is to reconstruct the landscape and ecosystem of northern Siberia in the Pleistocene, a period in Earth’s history that stretches from around two and a half million years ago to eleven thousand years ago. This was a time when the environment was much colder than now, with huge glaciers and ice sheets flowing over much of the Earth’s northern hemisphere. It was also a time when humans coexisted with animals that are long extinct, including saber-tooth cats, giant ground sloths, and woolly mammoths.

The Zimovs’ ambitions are an extreme example of “Pleistocene rewilding,” a movement to reintroduce relatively recently extinct large animals, or their close modern-day equivalents, to regions where they were once common. In the case of the Zimovs, the father-and-son team believe that, by reconstructing the Pleistocene ecosystem in the Siberian steppes and elsewhere, they can slow down the impacts of climate change on these regions. These areas are dominated by permafrost, ground that never thaws through the year. Permafrost ecosystems have developed and survived over millennia, but a warming global climate (a theme we’ll come back to in chapter twelve and the movie The Day After Tomorrow) threatens to catastrophically disrupt them, and as this happens, the impacts on biodiversity could be devastating. But what gets climate scientists even more worried is potentially massive releases of trapped methane as the permafrost disappears.

Methane is a powerful greenhouse gas—some eighty times more effective at exacerbating global warming than carbon dioxide—and large-scale releases from warming permafrost could trigger catastrophic changes in climate. As a result, finding ways to keep it in the ground is important. And here the Zimovs came up with a rather unusual idea: maintaining the stability of the environment by reintroducing long-extinct species that could help prevent its destruction, even in a warmer world. It’s a wild idea, but one that has some merit. As a proof of concept, though, the Zimovs needed somewhere to start. And so they set out to create a park for de-extinct Siberian animals: Pleistocene Park.

Pleistocene Park is by no stretch of the imagination a modern-day Jurassic Park. The dinosaurs in Hammond’s park date back to the Mesozoic period, from around 250 million years ago to sixty-five million years ago. By comparison, the Pleistocene is relatively modern history, ending a mere eleven and a half thousand years ago. And the vision behind Pleistocene Park is not thrills, spills, and profit, but the serious use of science and technology to stabilize an increasingly unstable environment. Yet there is one thread that ties them together, and that’s using genetic engineering to reintroduce extinct species. In this case, the species in question is warm-blooded and furry: the woolly mammoth.

The idea of de-extinction, or bringing back species from extinction (it’s even called “resurrection biology” in some circles), has been around for a while. It’s a controversial idea, and it raises a lot of tough ethical questions. But proponents of de-extinction argue that we’re losing species and ecosystems at such a rate that we can’t afford not to explore technological interventions to help stem the flow.

Early approaches to bringing species back from the dead have involved selective breeding. The idea was simple—if you have modern ancestors of a recently extinct species, selectively breeding specimens that have a higher genetic similarity to their forebears can potentially help reconstruct their genome in living animals. This approach is being used in attempts to bring back the aurochs, an ancestor of modern cattle. But it’s slow, and it depends on the fragmented genome of the extinct species still surviving in its modern-day equivalents.

An alternative to selective breeding is cloning. This involves finding a viable cell, or cell nucleus, in an extinct but well-preserved animal and growing a new living clone from it. It’s definitely a more appealing route for impatient resurrection biologists, but it does mean getting your hands on intact cells from long-dead animals and devising ways to “resurrect” these, which is no mean feat. Cloning has potential when it comes to recently extinct species whose cells have been well preserved—for instance, where the whole animal has become frozen in ice. But it’s still a slow and extremely limited option.

Which is where advances in genetic engineering come in.

The technological premise of Jurassic Park is that scientists can reconstruct the genome of long-dead animals from preserved DNA fragments. It’s a compelling idea, if you think of DNA as a massively long and complex instruction set that tells a group of biological molecules how to build an animal. In principle, if we could reconstruct the genome of an extinct species, we would have the basic instruction set—the biological software—to reconstruct individual members of it.

The bad news is that DNA-reconstruction-based de-extinction is far more complex than this. First you need intact fragments of DNA, which is not easy, as DNA degrades easily (and is pretty much impossible to obtain, as far as we know, for dinosaurs). Then you need to be able to stitch all of your fragments together, which is akin to completing a billion-piece jigsaw puzzle without knowing what the final picture looks like. This is a Herculean task, although with breakthroughs in data manipulation and machine learning, scientists are getting better at it. But even when you have your reconstructed genome, you need the biological “wetware”—all the stuff that’s needed to create, incubate, and nurture a new living thing, like eggs, nutrients, a safe space to grow and mature, and so on. Within all this complexity, it turns out that getting your DNA sequence right is just the beginning of translating that genetic code into a living, breathing entity. But in some cases, it might be possible.

In 2013, Sergey Zimov was introduced to the geneticist George Church at a conference on de-extinction. Church is an accomplished scientist in the field of DNA analysis and reconstruction, and a thought leader in the field of synthetic biology (which we’ll come back to in chapter nine). It was a match made in resurrection biology heaven. Zimov wanted to populate his Pleistocene Park with mammoths, and Church thought he could see a way of achieving this.

What resulted was an ambitious project to de-extinct the woolly mammoth. Church and others who are working on this have faced plenty of hurdles. But the technology has been advancing so fast that, as of 2017, scientists were predicting they would be able to reproduce the woolly mammoth within the next two years.

One of those hurdles was the lack of solid DNA sequences to work from. Frustratingly, although there are many instances of well preserved woolly mammoths, their DNA rarely survives being frozen for tens of thousands of years. To overcome this, Church and others have taken a different tack: Take a modern, living relative of the mammoth, and engineer into it traits that would allow it to live on the Siberian tundra, just like its woolly ancestors.

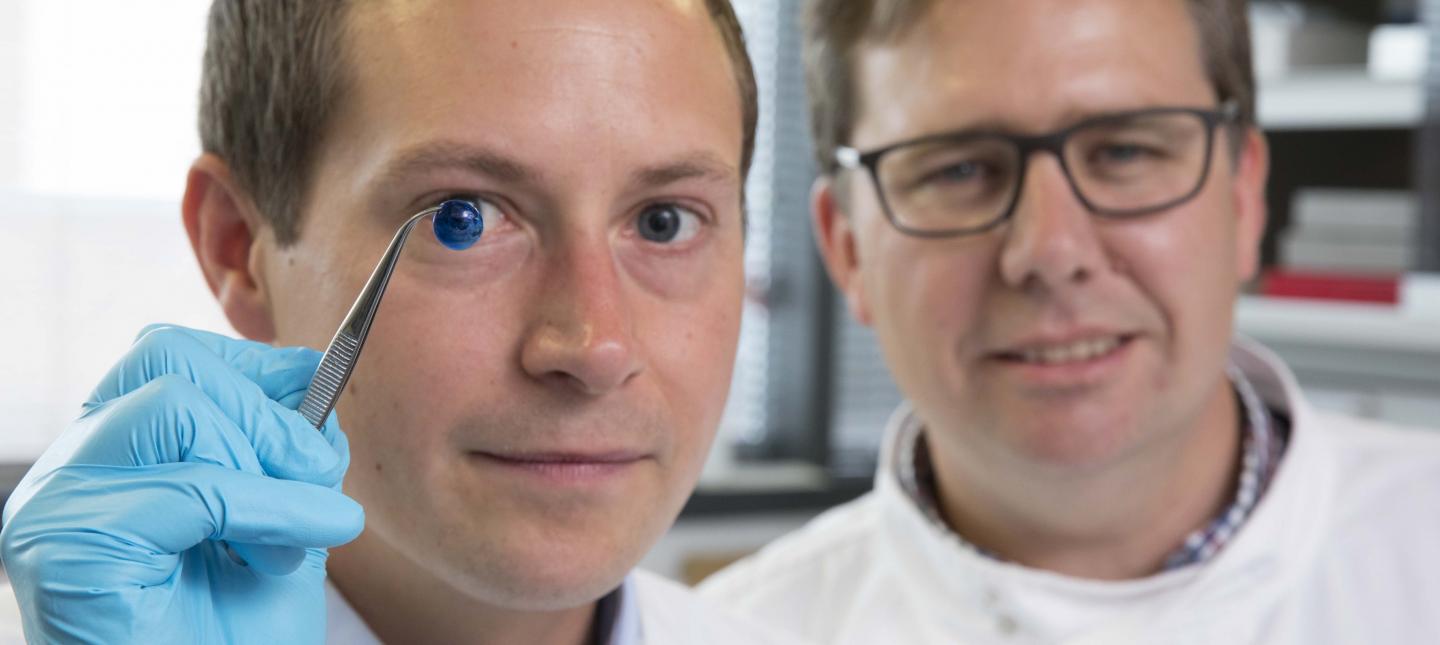

Church’s team’s starting point has been the Asian elephant. This is their source of base DNA for their “woolly mammoth 2.0”—their starting source code, if you like. So far, they’ve identified fifty plus gene sequences they think they can play with to give their modern-day woolly mammoth the traits it would need to thrive in Pleistocene Park, including a coat of hair, smaller ears, and a constitution adapted to cold.

The next hurdle they face is how to translate the code embedded in their new woolly mammoth genome into a living, breathing animal. The most obvious route would be to impregnate a female Asian elephant with a fertilized egg containing the new code. But Asian elephants are endangered, and no one’s likely to allow such cutting edge experimentation on the precious few that are still around, so scientists are working on an artificial womb for their reinvented woolly mammoth. They’re making progress with mice and hope to crack the motherless mammoth challenge relatively soon.

It’s perhaps a stretch to call this creative approach to recreating a species (or “reanimation” as Church refers to it) “de-extinction,” as what is being formed is a new species. … (pp. 31-4)

This selection illustrates what Maynard does so very well throughout the book: he uses each film as a launching pad for a clear, readable description of the relevant science, so you understand why the premise was likely, unlikely, or pure fantasy, while linking it to contemporary practices, efforts, and issues. In the context of Jurassic Park, Maynard goes on to raise some fascinating questions such as: Should we revive animals rendered extinct (due to obsolescence or inability to adapt to new conditions) when we could develop new animals?

General thoughts

‘Films from the Future’ offers readable (to non-scientific types) science, lively writing, and the occasional ‘memoirish’ anecdote. As well, Dr. Maynard raises the curtain on aspects of the scientific enterprise that most of us do not get to see, for example, the meeting between Sergey Zimov and George Church and how it led to new ‘de-extinction’ work. He also describes the problems that the scientists encountered and are encountering. This is in direct contrast to how scientific work is usually presented in the news media: as one glorious breakthrough after the next.

Maynard does discuss the issues of social inequality and power and ownership. For example, who owns your transplant or data? Puzzlingly, he doesn’t touch on the current environment where scientists in the US and elsewhere are encouraged/pressured to start up companies commercializing their work.

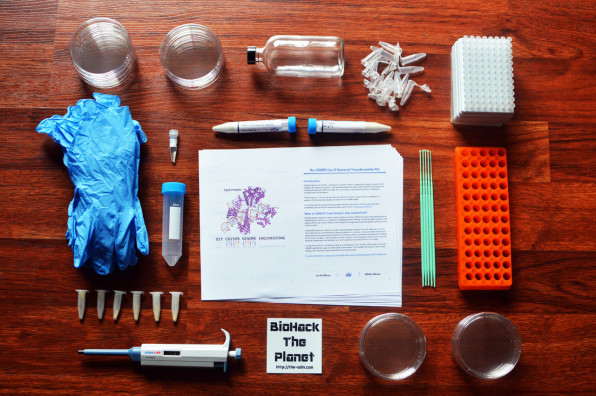

Nor is there any mention of how universities are participating in this grand business experiment often called ‘innovation’. (My March 15, 2017 posting describes an outcome for the CRISPR [gene editing system] patent fight taking place between Harvard University’s & MIT’s [Massachusetts Institute of Technology] Broad Institute vs the University of California at Berkeley and my Sept. 11, 2018 posting about an art/science exhibit in Vancouver [Canada] provides an update for round 2 of the Broad Institute vs. UC Berkeley patent fight [scroll down about 65% of the way].) *To read about how my ‘cultural blindness’ shows up here scroll down to the single asterisk at the end.*

There’s a foray through machine-learning and big data as applied to predictive policing in Maynard’s ‘Minority Report’ chapter (my November 23, 2017 posting describes Vancouver’s predictive policing initiative [no psychics involved], the first such in Canada). There’s no mention of surveillance technology, which if I recall properly was part of the future environment, both by the state and by corporations. (Mia Armstrong’s November 15, 2018 article for Slate on Chinese surveillance being exported to Venezuela provides interesting insight.)

The gaps are interesting and various. This of course points to a problem all science writers have when attempting an overview of science. (Carl Zimmer’s latest, ‘She Has Her Mother’s Laugh: The Powers, Perversions, and Potential of Heredity’, a doorstopping 574 pages, also has some gaps despite his focus on heredity.)

Maynard has worked hard to give a comprehensive overview in a remarkably compact 279 pages while developing his theme about science and the human element. In other words, science is not monolithic; it’s created by human beings and subject to all the flaws and benefits that humanity’s efforts are always subject to: scientists are people too.

The readership for ‘Films from the Future’ spans from the mildly interested science reader to someone like me who’s been writing/blogging about these topics (more or less) for about 10 years. I learned a lot reading this book.

Next time (I’m hopeful there’ll be a next time), Maynard might want to describe the parameters he’s set for his book in more detail than is possible in his chapter headings. He could have mentioned that he’s not a cinéaste, so his descriptions of the movies are very much focused on the story as conveyed through words. He doesn’t mention colour palettes, camera angles, or, even, cultural lenses.

Take, for example, his chapter on ‘Ghost in the Shell’. Focused on the Japanese animation film and not the live action Hollywood version, he talks about human enhancement and cyborgs. The Japanese have a different take on robots, inanimate objects, and, I assume, cyborgs than is found in Canada or the US or Great Britain, for that matter (according to a colleague of mine, an Englishwoman who lived in Japan for ten or more years). There’s also the chapter on the Ealing comedy, The Man in the White Suit, an English film from the 1950s. That too has a cultural (as well as historical) flavour but since Maynard is from England, he may take that cultural flavour for granted. ‘Never Let Me Go’ in Chapter Three was also a UK production, albeit far more recent than the Ealing comedy, and it’s interesting to consider how a UK production about cloning might differ from a US or Chinese or … production on the topic. I am hearkening back to Maynard’s anecdote about movies giving him new ways of seeing and imagining the world.

There’s a corrective: a couple of sentences in Maynard’s introductory chapter cautioning that an in-depth exploration of ‘cultural lenses’ was not possible without expanding the book to an unreadable size, followed by a sentence in each of the two chapters noting that there are cultural differences.

One area where I had a significant problem was with regard to being “programmed” and having “instinctual” behaviour,

As a species, we are embarrassingly programmed to see “different” as “threatening,” and to take instinctive action against it. It’s a trait that’s exploited in many science fiction novels and movies, including those in this book. If we want to see the rise of increasingly augmented individuals, we need to be prepared for some social strife. (p. 136)

These concepts are much debated in the social sciences and there are arguments for and against ‘instincts regarding strangers and their possible differences’. I gather Dr. Maynard hews to the ‘instinct to defend/attack’ school of thought.

One final quandary: there was no sex and I was expecting it in the Ex Machina chapter, especially now that sexbots are about to take over the world (I exaggerate). Certainly, if you’re talking about “social strife,” then sexbots would seem to be a fruitful line of inquiry, especially when there’s talk of how they could benefit families (my August 29, 2018 posting). Again, there could have been a sentence explaining why Maynard focused almost exclusively in this chapter on the discussions about artificial intelligence and superintelligence.

Taken in the context of the book, these are trifling issues and shouldn’t stop you from reading Films from the Future. What Maynard has accomplished here is impressive and I hope it’s just the beginning.

Final note

Bravo Andrew! (Note: We’ve been ‘internet acquaintances/friends’ since the first year I started blogging. When I’m referring to him in his professional capacity, he’s Dr. Maynard and when it’s not strictly in his professional capacity, it’s Andrew. For this commentary/review I wanted to emphasize his professional status.)

If you need to see a few more samples of Andrew’s writing, there’s a Nov. 15, 2018 essay on The Conversation, Sci-fi movies are the secret weapon that could help Silicon Valley grow up and a Nov. 21, 2018 article on slate.com, The True Cost of Stain-Resistant Pants; The 1951 British comedy The Man in the White Suit anticipated our fears about nanotechnology. Enjoy.

****Added at 1700 hours on Nov. 22, 2018: You can purchase Films from the Future here.

*Nov. 23, 2018: I should have been more specific and said ‘academic scientists’. In Canada, the great percentage of scientists are academic. It’s to the point where the OECD (Organization for Economic Cooperation and Development) has noted that amongst industrialized countries, Canada has very few industrial scientists in comparison to the others.

A hippocampal interneuron. Image: Biosciences Imaging Gp, Soton, Wellcome Trust via Creative Commons
